Juniper EVPN Support

Overview

The Junos EVPN ESI multi-homing feature enables you to directly connect end servers to leaf devices and provide redundant connectivity via multi-homing. This feature is supported only on LAGs that span two leaf devices on the fabric. EVPN ESI also removes the need for a peer link, and hence facilitates a clean leaf-spine design.

Blueprints using the MP-EBGP EVPN Overlay Control Protocol can use Juniper Junos devices. Racks with leaf-pair redundancy can implement EVPN ESI multi-homing.

EVPN ESI multi-homing avoids a single point of failure by maintaining EVPN service and traffic forwarding to and from the multi-homed site in the following scenarios:

- Link failure from one of the leaf devices to end server device

- Failure of one of the leaf devices

- Fast convergence on the local VTEP by changing next-hop adjacencies and maintaining end host reachability across multiple remote VTEPs

EVPN multi-homing Terminology and Concepts

The following terminology and concepts are used with EVPN multi-homing:

EVI - EVPN instance that spans between the leaf devices making up the EVPN. It's represented by the Virtual Network Identifier (VNI). EVI is mapped to VXLAN-type virtual networks (VN).

MAC-VRF - A virtual routing and forwarding (VRF) table to house MAC addresses on the VTEP leaf device (often called a "MAC table"). A unique route distinguisher and VRF target are configured per MAC-VRF.

Ethernet Segment (ES) - The Ethernet links that span from an end host to multiple ToR leaf devices form an ES. An ES constitutes a set of bundled links.

Ethernet Segment Identifier (ESI) - Represents each ES uniquely across the network. ESI is only supported on LAGs that span two leaf devices on the fabric.

ESI helps with end host level redundancy in an EVPN VXLAN-based blueprint. Ethernet links from each Juniper ToR leaf connected to the server are bundled as an aggregated Ethernet interface. LACP is enabled for each aggregated Ethernet interface of the Juniper devices. Multi-homed interfaces into the ES are identified using the ESI.

ESI has certain restrictions and requirements as listed below:

- ESI based ToR leaf devices cannot have any L2/L3 peer links as EVPN multi-homing eliminates peer links used by MLAG/vPC.

- A bond of two physical interfaces towards a single leaf is not supported in the ESI implementation; make sure the server with LAG in that rack type spans two leaf devices.

- ESI and MLAG/vPC-based rack types cannot be mixed in a single blueprint.

- L2 External Connectivity Points (ECPs) are not supported with an ESI-based rack type. Only L3 ECPs are supported.

- Per-leaf VN assignment - having different VLAN sets among individual leaf devices for an ESI-based port channel is not supported.

- Connecting a single server to a single leaf using a bond of two physical interfaces cannot use an ESI.

- ESI is supported only on LAGs (port-channels) and not directly on physical interfaces. This has no functional impact, as leaf local port-channels for multi-home links are automatically generated.

- Only ESI active-active redundancy mode is supported. Active-standby mode is not supported.

- Active-active redundancy mode is supported only for Juniper EVPN multi-homing where each Juniper ToR leaf attached to an ES is allowed to forward traffic to and from a given VLAN.

- More than two leaf devices in one ESI segment using ESI-based rack types is not supported.

- Switching from an ESI to MLAG rack type or vice versa is not supported under Flexible Fabric Expansion (FFE) operations.
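Several of the restrictions above are structural and can be checked mechanically. The following is a minimal sketch, not Apstra's validation logic: the `Lag` type, its fields, and `check_esi_rules` are hypothetical names introduced only for illustration. It checks that an ESI LAG spans exactly two leaf devices and that ESI and MLAG rack types are not mixed.

```python
from dataclasses import dataclass


@dataclass
class Lag:
    """A server-facing LAG (hypothetical model, not an Apstra object)."""
    name: str
    leafs: tuple      # leaf devices on which the member links terminate
    redundancy: str   # "esi" or "mlag"


def check_esi_rules(lags):
    """Return a list of violations of the ESI restrictions listed above."""
    problems = []

    # ESI and MLAG/vPC-based rack types cannot be mixed in one blueprint.
    protocols = {lag.redundancy for lag in lags}
    if {"esi", "mlag"} <= protocols:
        problems.append("ESI and MLAG/vPC rack types are mixed")

    for lag in lags:
        if lag.redundancy != "esi":
            continue
        # An ESI LAG must span exactly two distinct leaf devices:
        # a bond towards a single leaf, or more than two leafs, is rejected.
        distinct = len(set(lag.leafs))
        if distinct != 2:
            problems.append(
                f"{lag.name}: ESI LAG must span exactly two leaf devices, "
                f"found {distinct}"
            )
    return problems
```

For example, a LAG whose two member links both land on `leaf1` would be reported, while a LAG spanning `leaf1` and `leaf2` would pass.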

Topology Specification

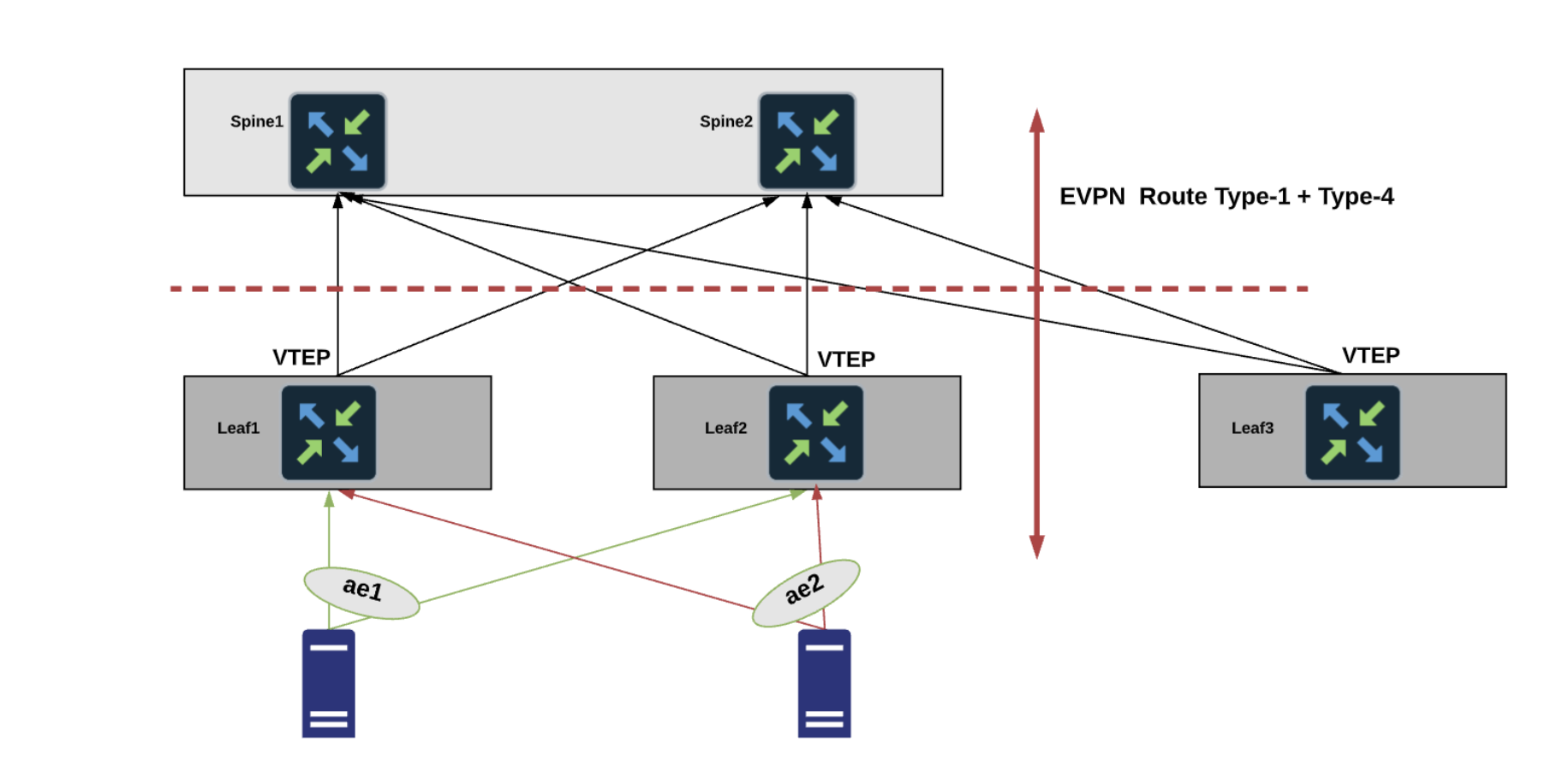

In the example topology, Leaf1 and Leaf2 are part of the same ES, and Leaf3 is the switch sending traffic towards the ES.

Juniper EVPN multi-homing uses five route types:

- Type 1 - Ethernet Auto-Discovery (EAD) Route

- Type 2 - MAC advertisement Route

- Type 3 - Inclusive Multicast Route

- Type 4 - Ethernet Segment Route

- Type 5 - IP Prefix Route

BGP EVPN running on Juniper devices uses:

- Type 2 to advertise MAC and IP (host) information

- Type 3 to carry VTEP information

- Type 5 to advertise IP prefixes in a Network Layer Reachability Information (NLRI).

In Junos, the MAC/IP Type 2 route does not contain the VNI and RT for the IP part of the route; these are derived from the accompanying Type 5 route.

Type 1 routes are used for per-ES auto-discovery (A-D) to advertise the EVPN multi-homing mode. Remote ToR leaf devices in the EVPN network use the EVPN Type 1 route functionality to learn the EVPN Type 2 MAC routes from other leaf devices. In this route type, the ESI and the Ethernet Tag ID are considered to be part of the prefix in the NLRI. Upon a link failure between a ToR leaf and the end server, the VTEP withdraws the per-ES Ethernet Auto-Discovery (Type 1) routes. The Juniper EVPN multi-homing Ethernet Tag value is set to the VLAN ID for ES auto-discovery/ES route types.

Mass Withdrawal - Used for fast convergence during link failure scenarios between leaf devices to the end server using Type 1 EAD/ES routes.
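The effect of mass withdrawal on a remote leaf can be illustrated with a small model. This is a conceptual sketch only, not how Junos implements its forwarding tables; the class and method names are hypothetical. The point it shows is that MACs resolve through the ESI, so withdrawing one Type 1 route updates every MAC behind that ES at once.

```python
class RemoteVtepTable:
    """Conceptual view of a remote leaf (hypothetical names throughout)."""

    def __init__(self):
        self.esi_vteps = {}  # ESI -> set of VTEPs that advertised Type 1 for it
        self.mac_esi = {}    # MAC -> ESI, learned from Type 2 routes

    def on_type1_advertise(self, esi, vtep):
        self.esi_vteps.setdefault(esi, set()).add(vtep)

    def on_type2(self, mac, esi):
        self.mac_esi[mac] = esi

    def on_type1_withdraw(self, esi, vtep):
        # A single withdrawal removes the VTEP for every MAC on this ES;
        # no per-MAC Type 2 withdrawal has to be processed first.
        self.esi_vteps.get(esi, set()).discard(vtep)

    def next_hops(self, mac):
        esi = self.mac_esi.get(mac)
        return sorted(self.esi_vteps.get(esi, set()))
```

In this model, if Leaf1 loses its link to the server and withdraws its Type 1 route, all MACs on that ES immediately resolve to Leaf2 only.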

DF Election - Used to prevent forwarding loops and duplicate packets, as only a single switch is allowed to decapsulate and forward the traffic for a given ES. The Ethernet Segment route is exported and imported when an ESI is locally configured under the LAG. The Type 4 NLRI is mainly used for designated forwarder (DF) elections and to apply split horizon filtering.

Split Horizon - Used to prevent forwarding loops and duplicate packets for Broadcast, Unknown-unicast, and Multicast (BUM) traffic. Only the BUM traffic that originates from a remote site is allowed to be forwarded to a local site.
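The text above does not say which DF election procedure is used. As an illustration, the sketch below follows the default procedure defined in RFC 7432 (service carving): the leaf devices attached to an ES are ordered by IP address, and the device at position `V mod N` is the DF for VLAN `V`. The function name and the sample addresses are hypothetical, and whether a given deployment uses this default is an assumption.

```python
import ipaddress


def elect_df(vtep_ips, vlan_id):
    """Pick the DF for a VLAN using RFC 7432 modulo-based service carving.

    vtep_ips: IP addresses of the leaf devices attached to the same ES,
              as learned from Type 4 (Ethernet Segment) routes.
    """
    # Order candidates by increasing numeric IP value, ordinals start at 0.
    ordered = sorted(vtep_ips, key=lambda ip: int(ipaddress.ip_address(ip)))
    return ordered[vlan_id % len(ordered)]
```

With two leaf devices, this spreads the DF role across VLANs: even VLAN IDs elect the lower address and odd VLAN IDs elect the higher one.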

EVPN Services

EVPN VLAN-Aware

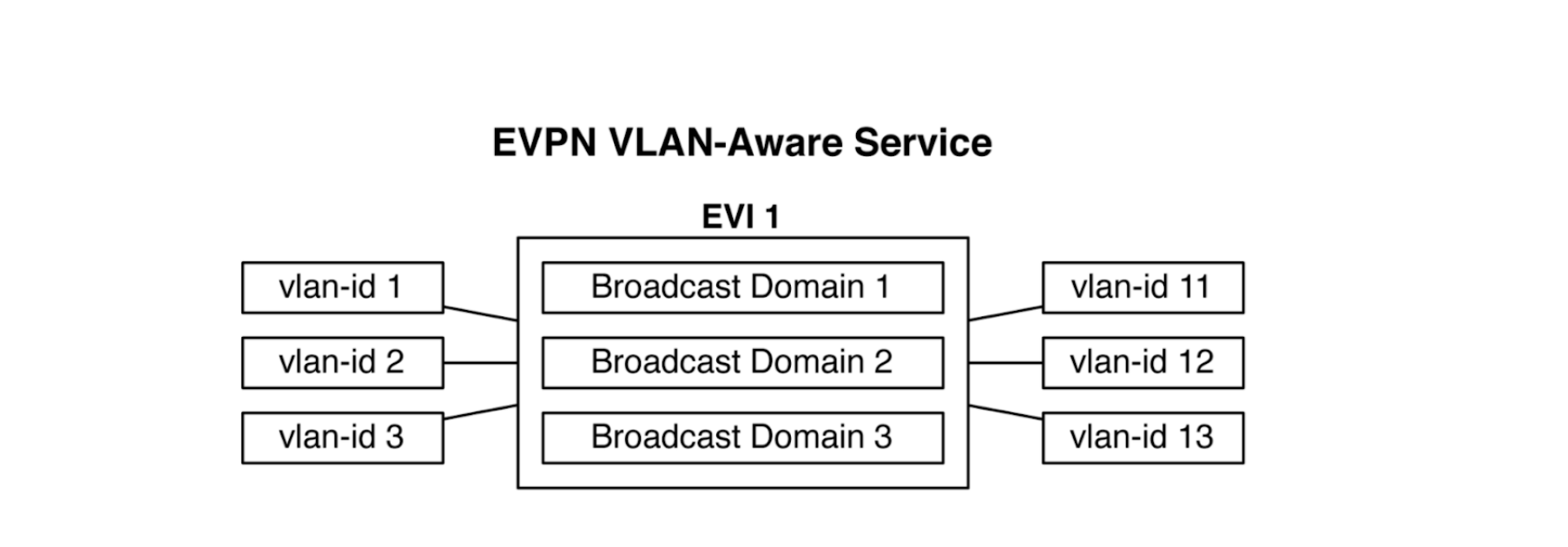

Junos can support three Ethernet Services: (1) VLAN-based, (2) VLAN Bundle, or (3) VLAN-Aware. Apstra's data center reference design natively leverages the VLAN-Aware model. With the EVPN VLAN-Aware Service, multiple VLANs are mapped into a single EVPN instance (EVI). The mapping between VLAN, Bridge Domain (BD), and EVI is N:1:1; that is, N VLANs are mapped into a single BD, which is mapped into a single EVI. In this model all VLAN IDs share the same EVI, as shown in the figure above.

VLAN-aware Ethernet Services in Junos have a separate route target for each VLAN (a Juniper internal optimization), so each VLAN has a label to mimic VLAN-based implementations.

From the control plane perspective, EVPN MAC/IP routes (Type 2) for VLAN-aware services carry the VLAN ID in the Ethernet Tag ID attribute, which is used to disambiguate received MAC routes.

From the data plane perspective, every VLAN is tagged with its own VNI, which is used during packet lookup to place the packet onto the right Bridge Domain (BD)/VLAN.
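The VLAN-aware relationships described above can be pictured as one EVI holding a per-VLAN VNI mapping. The sketch below is only a mental model with hypothetical names (`VlanAwareEvi`, `add_vlan`, and so on); the `VNI:1` route target format is the one this document describes for auto-generated route targets.

```python
class VlanAwareEvi:
    """One EVI shared by many VLANs, each VLAN with its own VNI (sketch)."""

    def __init__(self, name):
        self.name = name
        self.vlan_to_vni = {}
        self.vni_to_vlan = {}

    def add_vlan(self, vlan_id, vni):
        if vlan_id in self.vlan_to_vni or vni in self.vni_to_vlan:
            raise ValueError("VLAN ID and VNI must each be unique in the EVI")
        self.vlan_to_vni[vlan_id] = vni
        self.vni_to_vlan[vni] = vlan_id

    def route_target(self, vlan_id):
        # Per-VLAN route target in the VNI:1 format.
        return f"{self.vlan_to_vni[vlan_id]}:1"

    def vlan_for_vni(self, vni):
        # Data plane view: the VNI in the VXLAN header selects the VLAN.
        return self.vni_to_vlan[vni]
```

For example, after adding VLAN 100 with VNI 10100 and VLAN 200 with VNI 10200 to the same EVI, a packet arriving with VNI 10200 is placed onto VLAN 200.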

Create EVPN Network

Creating an EVPN network follows the same workflow as for other networks.

- Create/Install offbox device agents for all switches. (Onbox agents are not supported on Junos.)

- Confirm that the global catalog includes logical devices (Design > Logical Devices) that meet Juniper device requirements; create them if necessary.

- Confirm that the global catalog includes interface maps (Design > Interface Maps) that map the logical devices to the correct device profiles for the Juniper devices; create them if necessary.

- Create a rack type.

  - For single leaf racks, specify redundancy protocol None in the Leaf section.

  - For dual leaf racks:

    - Specify redundancy protocol ESI in the Leaf section.

    - When specifying the end server in the Server section, specify attachment type as Dual-Homed towards ESI-based ToR leaf devices. EVPNs using ESs have a link aggregation option. Select the LAG mode LACP (Active).

- Create a rack-based template.

- Create a generic system for an external router.

- Create resource pools for ASNs, IP addresses, and VNIs.

- Create a blueprint based on the ESI-based template, then build the EVPN-based network topology for the Juniper devices by assigning resources, device profiles, and device IDs.

Configuration Rendering

Reference Design

- Underlay - The underlay in the data center fabric is Layer-3 configured using standard eBGP over the physical interfaces of Juniper devices.

- Overlay - The overlay is configured with eBGP over the lo0.0 address. EVPN VXLAN is used as the overlay protocol. All the ToR devices are enabled with L2 VNs. Each of these L2 VNs can have its default gateway hosted on the connected ToR leaf devices. For inter-VN traffic, VXLAN routing is done in the fabric using L3 VNIs on the border leaf devices as per standard design.

- VXLAN VTEPs - On Juniper leaf devices, one IP address is rendered on lo0.0 and used as the VTEP address. The VTEP IP address is used to establish the VXLAN tunnel.

- EVPN multi-homing LAG - A unique ESI value and LACP system ID are used per EVPN LAG. The multi-homed links are configured with an ESI, and a LACP system identifier is specified for each link. The ESI is used to identify LAG groups and for loop prevention. To support active-active multi-homing, the Juniper leaf devices are configured with the same LACP parameters for a given ESI so that they appear as a single system.

ESI MAC addresses are auto-generated internally. You can configure the value of the most significant byte (MSB) used in the generated MAC. A facade API is available to update the MSB value, and a node in the rack-based template contains the MAC MSB value. The default value of this byte is 2, and you can change it to any even number up to 254. Updating this value results in regeneration of all ESI MACs in the blueprint. This setting is exposed to address DCI use cases where ESIs must be unique across multiple blueprints (IP fabrics).
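The following sketch illustrates why a distinct MSB per blueprint keeps ESI MACs from colliding. The actual generation algorithm is internal and not described here, so the layout below (MSB followed by a 40-bit counter), the function name `esi_mac`, and the lower bound of 2 are all assumptions made for illustration. One known property of even values is that they keep the multicast (I/G) bit of the first octet clear, so the result is a unicast MAC.

```python
def esi_mac(msb, index):
    """Build an illustrative ESI MAC: one MSB octet plus a 40-bit index.

    Assumption: the real generator is internal; only the MSB rules
    (even value, default 2, maximum 254) come from the documentation.
    """
    if msb < 2 or msb > 254 or msb % 2 != 0:
        raise ValueError("MSB must be an even number from 2 to 254")
    if not 0 <= index < 2 ** 40:
        raise ValueError("index must fit in 40 bits")
    octets = [msb] + list(index.to_bytes(5, "big"))
    return ":".join(f"{octet:02x}" for octet in octets)
```

Under these assumptions, two blueprints that use MSB values 2 and 4 produce `02:...` and `04:...` addresses, which cannot overlap regardless of the index.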

- L3VNIs - L3VNI is rendered as a routing zone per VRF. Multi-tenancy functionality is available to ensure that workloads remain logically separated within a VN (overlay) construct using routing zone.

- Route Target (RT) for L2/L3 VNIs - Auto-generated for L2/L3 VNIs in the format VNI:1. There is one (fabric-wide) RT per MAC-VRF (that is, L3VNI). The value must be the same across all switches participating in one EVI. You can find the RT in the blueprint by navigating to Staged > Virtual > Virtual Networks and clicking the VN name. The RT is in the parameters section.

- Route Distinguisher (RD) for L2/L3 VNIs - For the Junos VLAN-Aware model, the RD is per EVI (switch). There is no RD for each L2 VNI. An RD exists only for the routing zone VRF, in the format {primary_loopback}:vlan_id.

- Virtual Switch Configuration - Under the switch-options hierarchy for Juniper devices, the vtep-source-interface parameter is rendered, which specifies the VTEP IP address used to establish the VXLAN tunnel. Reachability to the loopback interface (for example, lo0.0) is provided by the underlay. The RD here defines the EVI-specific RD carried by Type 1, Type 2, and Type 3 routes. The RD for the global switch options is provided in the format {loopback_id}:65534. The RT here defines the global RT inherited by EVPN routes. It is used by Type 1 routes. A default RT value (100:100) is rendered for the global switch options across all switches.

- MTU - The following MTU values are rendered for Juniper devices:

- L2 ports: 9100

- L3 ports: 9216

- Integrated Routing and Bridging (IRB) Interfaces: 9000
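The RT and RD formats described in this list can be summarized as simple string templates. This is a minimal sketch with hypothetical helper names; it assumes that the loopback placeholders in the formats stand for the device's loopback IPv4 address, which the text does not state explicitly.

```python
import ipaddress

# Default RT rendered for the global switch options on all switches.
GLOBAL_SWITCH_OPTIONS_RT = "100:100"


def vni_route_target(vni):
    """Auto-generated RT for an L2/L3 VNI, in the VNI:1 format."""
    return f"{vni}:1"


def switch_options_rd(loopback_ip):
    """RD for the global switch options: {loopback}:65534 (assumed IPv4)."""
    ipaddress.IPv4Address(loopback_ip)  # raises ValueError if malformed
    return f"{loopback_ip}:65534"


def routing_zone_rd(primary_loopback, vlan_id):
    """RD for a routing zone VRF: {primary_loopback}:vlan_id."""
    ipaddress.IPv4Address(primary_loopback)  # raises ValueError if malformed
    return f"{primary_loopback}:{vlan_id}"
```

Because the RT depends only on the VNI, it is identical on every switch in the EVI, while the RD includes the loopback address and is therefore unique per switch.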

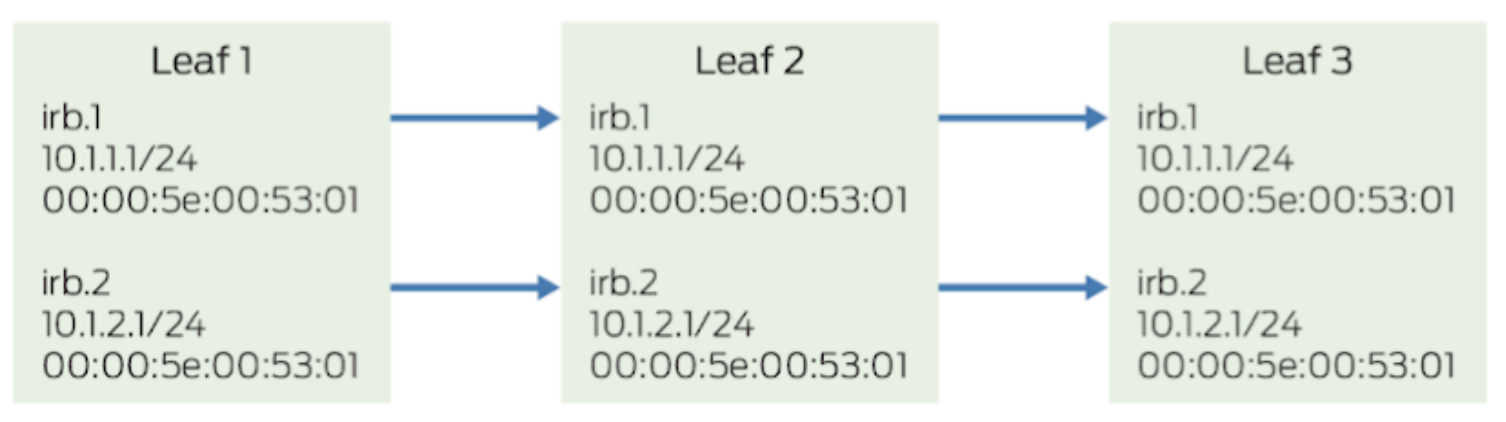

Anycast Gateway - The same IP address is configured on the IRB interfaces of all the leaf devices, and no virtual gateway is set. Every IRB interface that participates in the stretched L2 service has the same IP/MAC configured as below:

In this model, all default gateway IRB interfaces in an overlay subnet are configured with the same IP and MAC address. A benefit of this model is that only a single IP address is required per subnet for default gateway IRB interface addressing, which simplifies gateway configuration on end systems.

Here, the MAC address of the IRB interface is auto-generated.
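The anycast gateway property, that every leaf uses the same IRB IP and MAC for a given VN, lends itself to a simple consistency check. The sketch below is illustrative only; the function name and the tuple layout of its input are hypothetical and do not correspond to an Apstra API.

```python
def anycast_mismatches(irbs):
    """Return the VNs whose IRB IP/MAC differ between leaf devices.

    irbs: iterable of (leaf, vn, ip, mac) tuples, one per IRB interface.
    """
    expected = {}   # VN -> (ip, mac) seen on the first leaf
    mismatched = set()
    for _leaf, vn, ip, mac in irbs:
        gateway = (ip, mac.lower())
        # setdefault records the first value and returns it afterwards.
        if expected.setdefault(vn, gateway) != gateway:
            mismatched.add(vn)
    return sorted(mismatched)
```

An empty result means that all leaf devices agree on the gateway address for every VN, which is the state the anycast model expects.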

Limitations

The following limitations apply to EVPN multi-homing topologies for Juniper devices:

- Only two-way multi-homing is supported. More than two Juniper leaf devices in a multi-homed group are not supported.

- Mixing Juniper EVPN with EVPN from other network vendors in the same blueprint is not supported.

- Pure IP fabrics are not supported.

- Junos is not supported in IPv6-based fabrics.

- In Juniper EVPN multi-homing, L3 External Connectivity Points (ECP) towards generic systems are supported; L2 ECP is not supported.

- BGP routing from Junos leaf devices to Apstra-managed Layer 3 servers is not supported.