Juniper Cloud-Native Router Components

The Juniper Cloud-Native Router solution consists of several components, including the Cloud-Native Router controller, the Cloud-Native Router vRouter, and the JCNR-CNI. The vRouter uses either a Data Plane Development Kit (DPDK) datapath or an extended Berkeley Packet Filter (eBPF) eXpress Data Path (XDP) datapath. This topic provides a brief overview of these components.

Cloud-Native Router Components

The Juniper Cloud-Native Router has three primary components: the Cloud-Native Router Controller control plane, the Cloud-Native Router vRouter forwarding plane, and the JCNR-CNI for Kubernetes integration. All Cloud-Native Router components are deployed as containers.

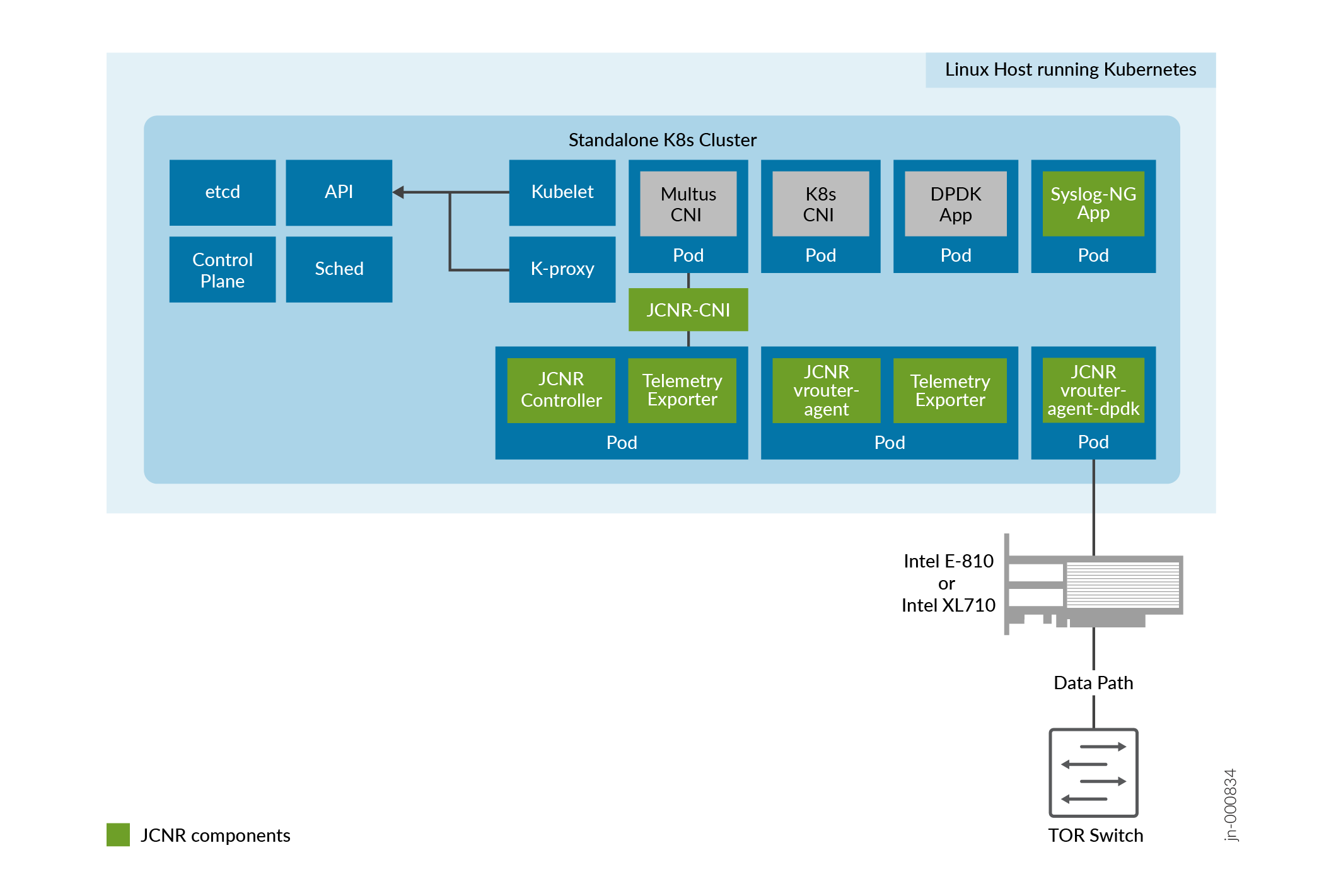

Figure 1 shows the components of the Juniper Cloud-Native Router inside a Kubernetes cluster when implemented with the DPDK-based vRouter.

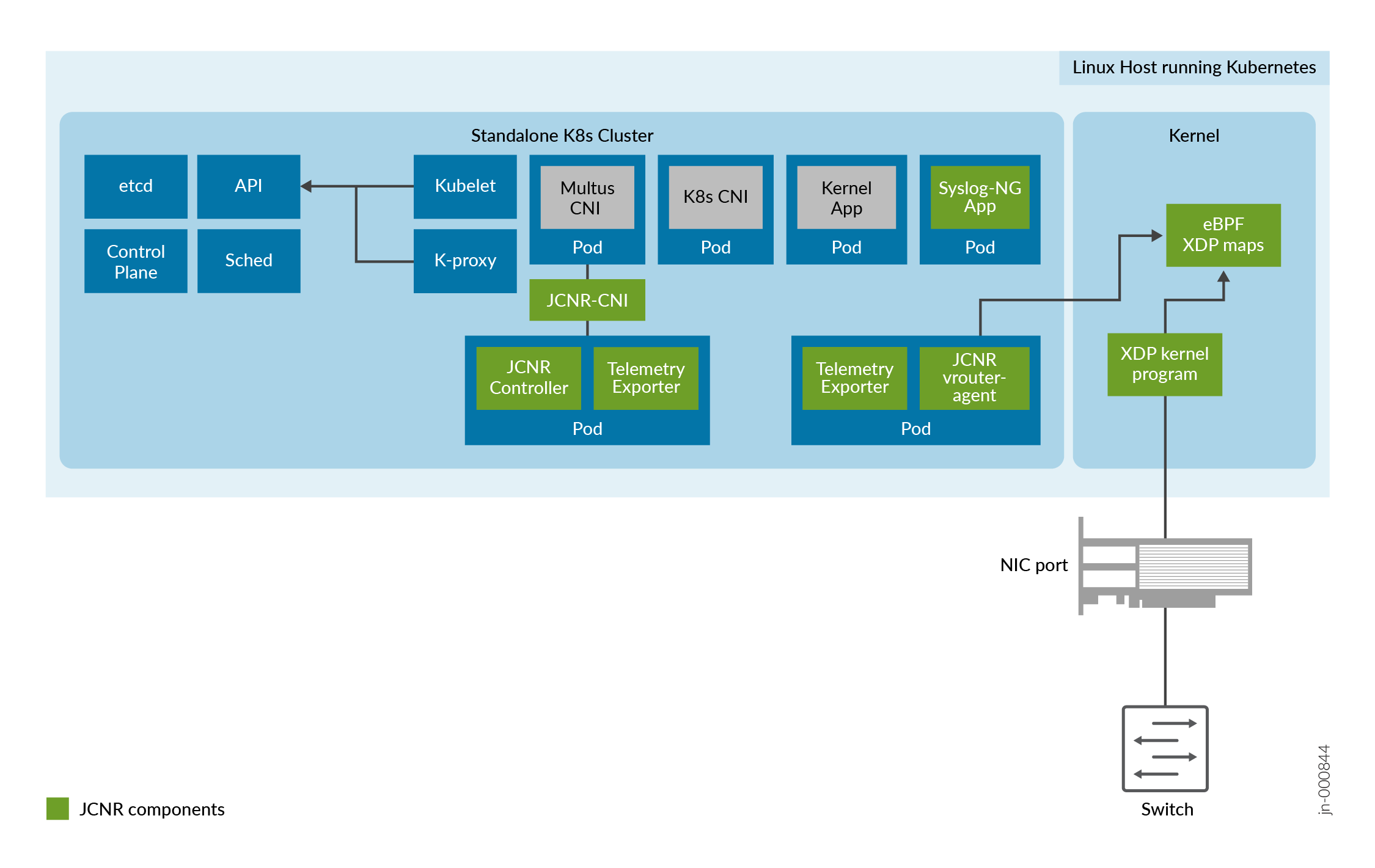

Figure 2 shows the components of the Juniper Cloud-Native Router inside a Kubernetes cluster when implemented with the eBPF XDP-based vRouter.

Cloud-Native Router Controller

The Cloud-Native Router Controller is the control plane of the Cloud-Native Router solution. It runs the Junos containerized routing protocol daemon (cRPD) and is implemented as a StatefulSet. The controller communicates with the other elements of the Cloud-Native Router. Configuration, policies, and rules that you set on the controller at deployment time are communicated to the Cloud-Native Router vRouter and other components for implementation.

For example, firewall filters (ACLs) configured on the controller are sent to the Cloud-Native Router vRouter (through the vRouter agent).

Juniper Cloud-Native Router Controller Functionality:

- Exposes Junos OS-compatible CLI configuration and operational commands that are accessible to external automation and orchestration systems using the NETCONF protocol.
- Supports the vRouter as the high-speed forwarding plane. This enables applications built using the DPDK framework to exchange packets directly with the vRouter without passing through the kernel.
- Supports configuration of VLAN-tagged sub-interfaces on physical function (PF), virtual function (VF), virtio, access, and trunk interfaces managed by the DPDK-enabled vRouter.
- Supports configuration of bridge domains, VLANs, and virtual switches.
- Advertises DPDK application reachability to the core network using routing protocols, primarily BGP, IS-IS, and OSPF.
- Distributes L3 network reachability information of the pods inside and outside a cluster.
- Maintains configuration for the L2 firewall.
- Passes configuration information to the vRouter through the vRouter agent.
- Stores license key information.
- Works as a BGP speaker, establishing peer relationships with other BGP speakers to exchange routing information.
- Exports control plane telemetry data to Prometheus and gNMI.

Configuration Options

Use the configlet resource to configure the cRPD pods.

Cloud-Native Router vRouter

The Cloud-Native Router vRouter is a high-performance datapath component. It is an alternative to the Linux bridge or the Open vSwitch (OVS) module in the Linux kernel. It runs as a user-space process. The vRouter functionality is implemented in two pods, one for the vrouter-agent and the vrouter-telemetry-exporter, and the other for the vrouter-agent-dpdk. This split gives you the flexibility to tailor CPU resources to the different vRouter components as needed.

The vRouter supports both Data Plane Development Kit (DPDK) and extended Berkeley Packet Filter (eBPF) eXpress Data Path (XDP) datapaths.

Cloud-Native Router eBPF XDP Datapath is a Juniper Technology Preview (Tech Preview) feature. Limited features are supported. See Cloud-Native Router vRouter Datapath for more details.

Cloud-Native Router vRouter Functionality:

- Performs routing with Layer 3 virtual private networks.
- Performs L2 forwarding.
- Supports high-performance DPDK-based forwarding.
- Supports high-performance eBPF XDP datapath-based forwarding.
- Exports data plane telemetry data to Prometheus and gNMI.
- Provides high-performance packet processing.
- Provides a forwarding plane that is faster than kernel-based forwarding.
- Provides a forwarding plane that is more scalable than kernel-based forwarding.
- Supports the following NICs:
  - Intel E810 (Columbiaville) family
  - Intel XL710 (Fortville) family

JCNR-CNI

JCNR-CNI is a container network interface (CNI) plug-in developed by Juniper. It is installed on each Kubernetes node to provision network interfaces for application pods. During pod creation, Kubernetes delegates pod interface creation and configuration to JCNR-CNI. JCNR-CNI interacts with the Cloud-Native Router controller and the vRouter to set up DPDK interfaces. When a pod is removed, JCNR-CNI is invoked to de-provision the pod interface, configuration, and associated state in Kubernetes and the Cloud-Native Router components. JCNR-CNI works as a secondary CNI, alongside the Multus CNI, to add and configure pod interfaces.

JCNR-CNI Functionality:

- Manages the networking tasks in Kubernetes pods such as:
  - assigning IP addresses.
  - allocating MAC addresses.
  - setting up untagged, access, and other interfaces between the pod and vRouter in a Kubernetes cluster.
  - creating VLAN sub-interfaces.
  - creating L3 interfaces.
- Acts on pod events such as add and delete.
- Generates cRPD configuration.

The JCNR-CNI manages the secondary interfaces that the pods use. It creates the required interfaces based on the configuration in YAML-formatted network attachment definition (NAD) files. The JCNR-CNI configures some interfaces before passing them to their final location or connection point and provides an API for further interface configuration options such as:

- Instantiating different kinds of pod interfaces.
- Creating virtio-based high-performance interfaces for pods that leverage the DPDK data plane.
- Creating veth pair interfaces that allow pods to communicate using the Linux kernel networking stack.
- Creating pod interfaces in access or trunk mode.
- Attaching pod interfaces to bridge domains and virtual routers.
- Supporting an IPAM plug-in for dynamic IP address allocation.
- Allocating unique socket interfaces for virtio interfaces.
- Managing the networking tasks in pods, such as assigning IP addresses and setting up interfaces between the pod and vRouter in a Kubernetes cluster.
- Connecting pod interfaces to a network, including pod-to-pod and pod-to-network connectivity.
- Integrating with the vRouter for offloading packet processing.
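As a rough illustration of what a network attachment definition (NAD) looks like structurally, the following Python sketch builds one as a dictionary and prints it as JSON. The `apiVersion`, `kind`, and the convention of storing the CNI configuration as a JSON string in `spec.config` come from the Multus NAD format. The plug-in type string `jcnr` and the argument keys `interfaceMode` and `vlanIdList` are placeholders assumed for this example only; consult the Cloud-Native Router NAD reference for the keys the plug-in actually accepts.

```python
import json


def build_nad(name, plugin_type, plugin_args):
    """Return a NetworkAttachmentDefinition manifest as a plain dict."""
    cni_config = {
        "cniVersion": "0.4.0",
        "name": name,
        "plugins": [{"type": plugin_type, "args": plugin_args}],
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name},
        # Multus expects the CNI configuration as a JSON-encoded string.
        "spec": {"config": json.dumps(cni_config)},
    }


# Hypothetical values: the plug-in type and argument keys are illustrative
# placeholders, not a confirmed JCNR-CNI schema.
nad = build_nad(
    name="example-trunk-net",
    plugin_type="jcnr",
    plugin_args={"interfaceMode": "trunk", "vlanIdList": "100,200"},
)

print(json.dumps(nad, indent=2))
```

The sketch emits JSON rather than YAML only to stay within the Python standard library; the structure is the same in either serialization.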

Benefits of JCNR-CNI:

- Improved pod interface management
- Customizable administrative and monitoring capabilities
- Increased performance through tight integration with the controller and vRouter components

The Role of JCNR-CNI in Pod Creation:

When you create a pod for use in the Cloud-Native Router, the Kubernetes component known as kubelet calls the Multus CNI to set up pod networking and interfaces. Multus reads the annotations section of the pod.yaml file to find the NADs. If a NAD points to JCNR-CNI as the CNI plug-in, Multus calls the JCNR-CNI to set up the pod interface. JCNR-CNI creates the interface as specified in the NAD. JCNR-CNI then generates the corresponding configuration and pushes it to the controller.
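The lookup-and-dispatch part of this flow can be sketched in a few lines of Python. This is a simplified model and not Multus or JCNR-CNI code. It assumes the pod lists its NADs as a comma-separated string in the `k8s.v1.cni.cncf.io/networks` annotation (Multus also accepts other forms, which this sketch ignores), and it assumes the JCNR-CNI plug-in type string is `jcnr`. The function names and handler labels are hypothetical.

```python
import json

NETWORKS_ANNOTATION = "k8s.v1.cni.cncf.io/networks"  # Multus annotation key


def requested_networks(pod):
    """Return the NAD names listed in the pod's Multus annotation."""
    annotations = pod.get("metadata", {}).get("annotations", {})
    value = annotations.get(NETWORKS_ANNOTATION, "")
    return [name.strip() for name in value.split(",") if name.strip()]


def plugin_types(nad):
    """Return the CNI plug-in types named in a NAD's JSON config string."""
    config = json.loads(nad["spec"]["config"])
    if "plugins" in config:
        return [plugin["type"] for plugin in config["plugins"]]
    return [config["type"]]


def plan_attachments(pod, nads_by_name, jcnr_type="jcnr"):
    """Pair each requested NAD with the plug-in that would handle it.

    jcnr_type is an assumed type string used only for this illustration.
    """
    plan = []
    for name in requested_networks(pod):
        types = plugin_types(nads_by_name[name])
        handler = "jcnr-cni" if jcnr_type in types else "other-cni"
        plan.append((name, handler))
    return plan


# Hypothetical pod and NADs for illustration.
pod = {"metadata": {"annotations": {NETWORKS_ANNOTATION: "net-a, net-b"}}}
nads = {
    "net-a": {"spec": {"config": json.dumps({"plugins": [{"type": "jcnr"}]})}},
    "net-b": {"spec": {"config": json.dumps({"type": "macvlan"})}},
}

print(plan_attachments(pod, nads))
```

In this model, only `net-a` would be handed to JCNR-CNI; `net-b` names a different plug-in type, so it would be handled elsewhere.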

Syslog-NG

Juniper Cloud-Native Router uses a syslog-ng pod to gather event logs from cRPD and the vRouter and transform the logs into JSON-based notifications. The notifications are logged to a file. Syslog-ng runs as a DaemonSet.
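To give a feel for the log-to-JSON transformation, here is a minimal Python sketch that parses a traditional syslog-style line into a JSON object. It is not the syslog-ng configuration that the product ships; syslog-ng performs this step through its own configuration. The line layout, the field names, and the sample message are assumptions chosen for illustration.

```python
import json
import re

# Assumed layout: "Mon DD HH:MM:SS host program[pid]: message"
LINE_RE = re.compile(
    r"^(?P<timestamp>\w{3}\s+\d{1,2}\s\d{2}:\d{2}:\d{2})\s"
    r"(?P<host>\S+)\s"
    r"(?P<program>[^\s\[:]+)(?:\[(?P<pid>\d+)\])?:\s"
    r"(?P<message>.*)$"
)


def to_notification(line):
    """Convert one log line into a JSON string.

    Lines that do not match the assumed layout are wrapped unchanged
    in a single "message" field.
    """
    match = LINE_RE.match(line)
    if match is None:
        return json.dumps({"message": line})
    fields = {k: v for k, v in match.groupdict().items() if v is not None}
    return json.dumps(fields, sort_keys=True)


# Hypothetical log line, not captured from a real deployment.
sample = "Jan 12 10:15:32 node1 rpd[4242]: bgp peer 10.0.0.2 state changed"
print(to_notification(sample))
```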