Customized Hash Field Selection for ECMP Load Balancing

Overview: Custom Hash Feature

Contrail Networking enables you to configure the set of packet fields that vRouters hash on during equal-cost multipath (ECMP) load balancing.

With the custom hash feature, you can configure the exact subset of fields to hash on when choosing the forwarding path from a set of eligible ECMP candidates.

The custom hash configuration can be applied in the following ways:

globally

per virtual network (VN)

per virtual machine interface (VMI)

When configurations exist at more than one level, VMI configuration takes precedence over VN configuration, and VN configuration takes precedence over global configuration.
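The precedence rule can be sketched in Python. This is a minimal, hypothetical model: the `HashFieldConfig` class, the `effective_hash_fields` function, and the field-name strings are illustrative and are not part of any Contrail API. Falling back to the full 5-tuple when no level is configured is also an assumption of the sketch.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional

# Hypothetical field names that mirror the ECMP Hashing Fields options;
# they are not taken from the Contrail schema.
FIVE_TUPLE = frozenset({
    "source-ip", "destination-ip", "ip-protocol", "source-port", "destination-port",
})


@dataclass
class HashFieldConfig:
    """Hash-field selection at each level; None means 'not configured'."""
    global_fields: Optional[FrozenSet[str]] = None
    vn_fields: Optional[FrozenSet[str]] = None
    vmi_fields: Optional[FrozenSet[str]] = None


def effective_hash_fields(cfg: HashFieldConfig) -> FrozenSet[str]:
    """Resolve the fields to hash on: VMI over VN over global.

    Falling back to the full 5-tuple when no level is configured is an
    assumption of this sketch.
    """
    for level in (cfg.vmi_fields, cfg.vn_fields, cfg.global_fields):
        if level is not None:
            return level
    return FIVE_TUPLE


if __name__ == "__main__":
    cfg = HashFieldConfig(
        global_fields=frozenset({"source-ip", "destination-ip"}),
        vmi_fields=frozenset({"source-ip"}),
    )
    print(sorted(effective_hash_fields(cfg)))  # ['source-ip']
```

Checking the most specific level first keeps the rule in one loop, so adding or removing a level only changes the tuple being iterated.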

Custom hash is useful whenever packets from a particular source to a particular destination must pass through the same set of service instances in transit. This is required when the source, destination, or transit nodes maintain per-flow state that is also used by subsequent new flows between the same pair of source and destination addresses. In such cases, subsequent flows must traverse the same set of service nodes as the initial flow.
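The following Python sketch shows why hashing on a subset of fields keeps related flows together. If only the source and destination IP addresses are hashed, a later flow between the same pair, using a different source port, maps to the same ECMP path as the first flow. The `select_path` function and the use of SHA-256 with a modulo are assumptions for illustration. This document does not describe the vRouter's actual hash function.

```python
import hashlib
from typing import Dict, FrozenSet, List, Union

Packet = Dict[str, Union[str, int]]


def select_path(packet: Packet, fields: FrozenSet[str], paths: List[str]) -> str:
    """Choose an ECMP path by hashing only the selected header fields.

    SHA-256 plus modulo is a stand-in; the vRouter's real hash function
    is not described here.
    """
    # Sort the field names so the key does not depend on set ordering.
    key = "|".join(f"{name}={packet[name]}" for name in sorted(fields))
    digest = hashlib.sha256(key.encode()).digest()
    return paths[int.from_bytes(digest[:8], "big") % len(paths)]


if __name__ == "__main__":
    paths = ["service-instance-1", "service-instance-2", "service-instance-3"]
    first_flow: Packet = {
        "source-ip": "10.1.1.5", "destination-ip": "10.2.2.9",
        "ip-protocol": 6, "source-port": 40001, "destination-port": 443,
    }
    # A later flow between the same address pair, with a new source port.
    later_flow: Packet = {**first_flow, "source-port": 40002}

    ip_pair = frozenset({"source-ip", "destination-ip"})
    # Hashing on the address pair alone yields the same path for both flows.
    print(select_path(first_flow, ip_pair, paths) == select_path(later_flow, ip_pair, paths))  # True
```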

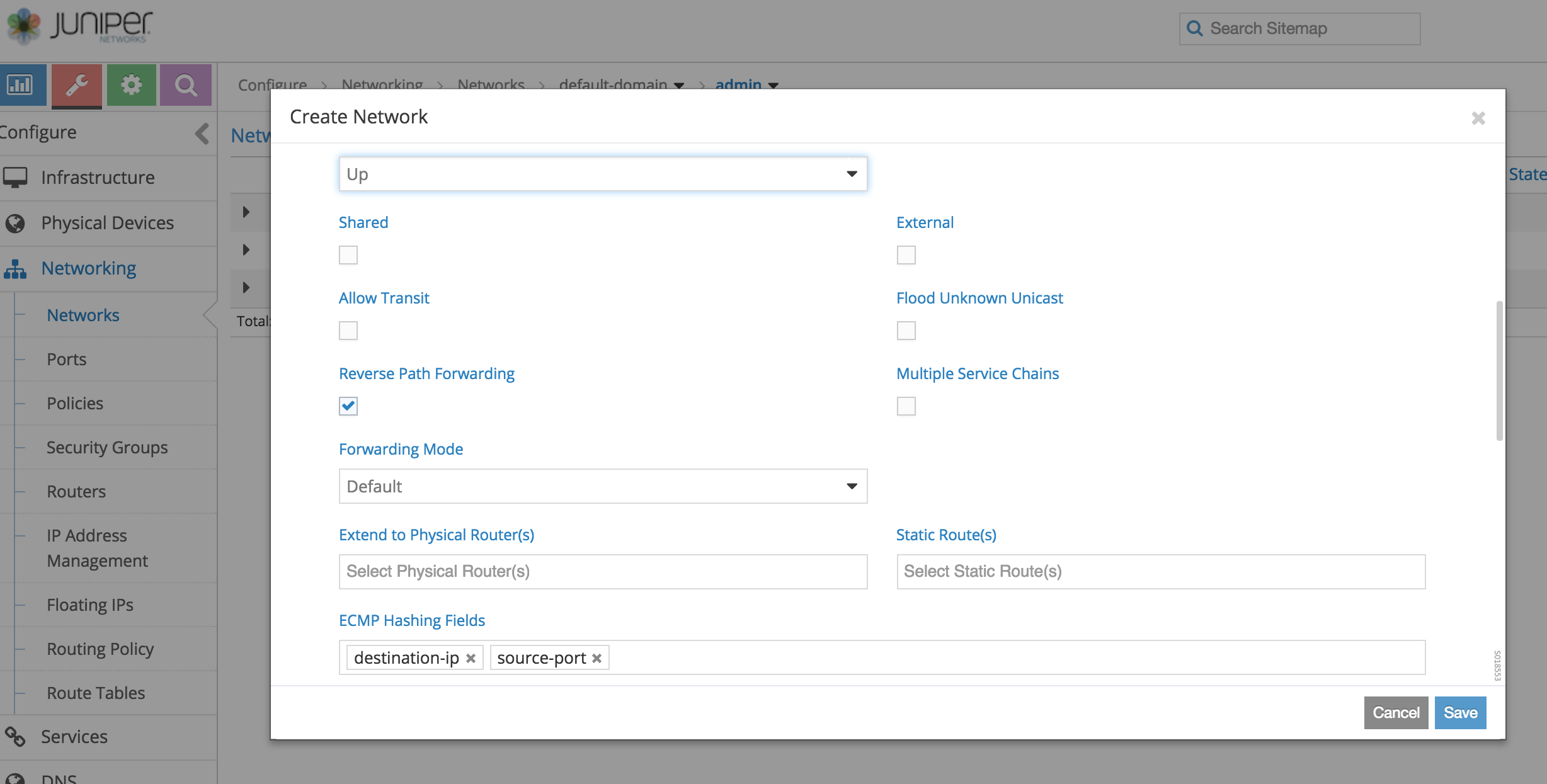

You can use the Contrail Web UI to select the fields to hash on for a network in the ECMP Hashing Fields section of the Create Network window (Configure > Networking > Network), as shown in the following figure.

If hashing fields are configured for a virtual network, vRouters apply the customized hash field selection to all traffic destined for that VN when forwarding it over ECMP paths. This may not always be desirable, because it can skew all traffic destined for that network onto a smaller set of paths across the IP fabric.
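A rough Python sketch of the skew follows. When only the destination IP address is hashed, every flow to a single destination address lands on the same fabric path. Hashing the full 5-tuple spreads the same flows across several paths. The helper names, addresses, and SHA-256-based hash are hypothetical stand-ins, not vRouter behavior.

```python
import hashlib
from typing import Dict, FrozenSet, List, Set, Union

Packet = Dict[str, Union[str, int]]
FIVE_TUPLE = frozenset({
    "source-ip", "destination-ip", "ip-protocol", "source-port", "destination-port",
})


def select_path(packet: Packet, fields: FrozenSet[str], paths: List[str]) -> str:
    """Stand-in ECMP hash over the selected fields (not the vRouter's algorithm)."""
    key = "|".join(f"{name}={packet[name]}" for name in sorted(fields))
    digest = hashlib.sha256(key.encode()).digest()
    return paths[int.from_bytes(digest[:8], "big") % len(paths)]


def paths_used(flows: List[Packet], fields: FrozenSet[str], paths: List[str]) -> Set[str]:
    """Return the set of fabric paths that a batch of flows is spread over."""
    return {select_path(flow, fields, paths) for flow in flows}


if __name__ == "__main__":
    fabric_paths = [f"fabric-path-{i}" for i in range(4)]
    # 200 hypothetical flows from different sources to one address in the destination VN.
    flows: List[Packet] = [
        {"source-ip": f"10.1.0.{i + 1}", "destination-ip": "10.9.0.10",
         "ip-protocol": 6, "source-port": 30000 + i, "destination-port": 443}
        for i in range(200)
    ]
    print(len(paths_used(flows, frozenset({"destination-ip"}), fabric_paths)))  # 1
    print(len(paths_used(flows, FIVE_TUPLE, fabric_paths)))
```

The fewer distinct values the selected fields take across the traffic, the fewer paths the hash can reach, whatever the hash function is.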

A more practical approach applies when flows between a source and a destination must pass through the same service instance. In this case, configure customized ECMP fields on the virtual machine interface of that service instance. Each service chain route originating from that virtual machine interface then carries the desired ECMP field selection as a path attribute, which is eventually propagated to the ingress vRouter node. See the following example.

Using ECMP Hash Field Selection

Custom hash field selection is most useful when multiple ECMP paths exist for a destination. Typically, these paths point to ingress service instance nodes, which could be running anywhere in the Contrail cloud.

Configuring ECMP Hash Fields Over Service Chains

Use the following steps to configure customized hash fields for ECMP over service chains.

Create the virtual networks that you want to interconnect using service chaining with ECMP load balancing.

Create a service template and enable scaling.

Create a service instance from the service template, and configure it by selecting:

the desired number of instances for scale-out

the left and right virtual networks to connect

the shared address space, so that all instantiated services come up with the same left IP address and the same right IP address

Because every instance shares the same left and right addresses, this configuration enables ECMP among all of the service instances during forwarding.

Create a policy that uses the previously created service instance, and apply the policy to the desired VMIs or VNs.

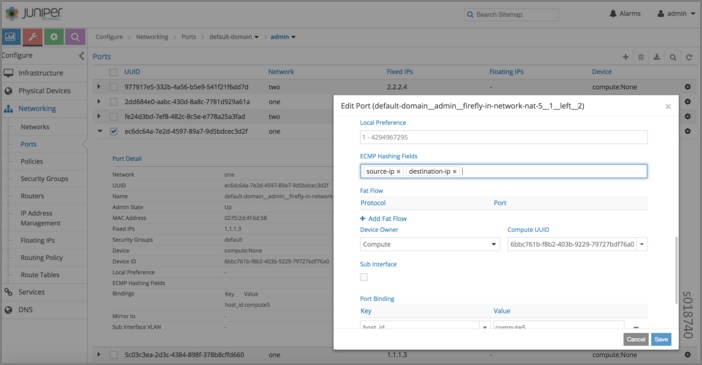

After the service VMs are instantiated, the ports of the left and right interfaces are available for further configuration. In the Contrail Web UI, go to the Ports section under Networking, select the ports of the left interface (virtual machine interface) of the service instance, and apply the desired ECMP hash field configuration.

Note: Currently, ECMP field selection for the left or right interface of a service instance must be configured in the Ports (VMIs) section under Networking, by explicitly configuring ECMP field selection on the VMIs of every instantiated service instance. Configure all service interfaces in the group this way to get the expected result, because only the load balance attribute of the best path is carried over to the ingress vRouter. If the best path does not have the load balance attribute configured, no attribute is propagated to the ingress vRouter, even if other paths have that configuration.
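The following Python sketch models the rule in the note. The `ServicePath` class and the best-path rule (highest preference, with ties broken by the lowest next-hop name) are hypothetical stand-ins for the control node's real route selection. The sketch only shows that the ingress vRouter sees the best path's attribute and nothing else.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional

HashFields = FrozenSet[str]


@dataclass
class ServicePath:
    """One ECMP path to a service instance VMI (hypothetical model)."""
    next_hop: str
    preference: int = 100
    load_balance: Optional[HashFields] = None  # None: no ECMP fields configured on this VMI


def best_path(paths: List[ServicePath]) -> ServicePath:
    """Stand-in best-path rule: highest preference, ties broken by lowest next hop."""
    return min(paths, key=lambda p: (-p.preference, p.next_hop))


def attribute_at_ingress(paths: List[ServicePath]) -> Optional[HashFields]:
    """Only the best path's load-balance attribute reaches the ingress vRouter."""
    return best_path(paths).load_balance


if __name__ == "__main__":
    fields = frozenset({"source-ip", "destination-ip"})
    # Only two of the three service VMIs are configured.
    partial = [
        ServicePath("si-1"),
        ServicePath("si-2", load_balance=fields),
        ServicePath("si-3", load_balance=fields),
    ]
    print(attribute_at_ingress(partial))  # None: the best path (si-1) has no attribute
    # After every VMI in the group is configured, the attribute is propagated.
    complete = [ServicePath(p.next_hop, p.preference, fields) for p in partial]
    print(sorted(attribute_at_ingress(complete)))  # ['destination-ip', 'source-ip']
```

Because any member of the group can win best-path selection, configuring every VMI is the only way to guarantee that the attribute survives.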

When the configuration is finished, the vRouters are programmed with routing tables that contain the ECMP paths to the service instances, along with the ECMP hash fields to use when load balancing traffic.

Flow Stickiness for Load-Balanced System

Flow stickiness is a beta feature in Contrail Networking Release 21.3 that helps minimize flow remapping across ECMP groups in a load-balanced system.

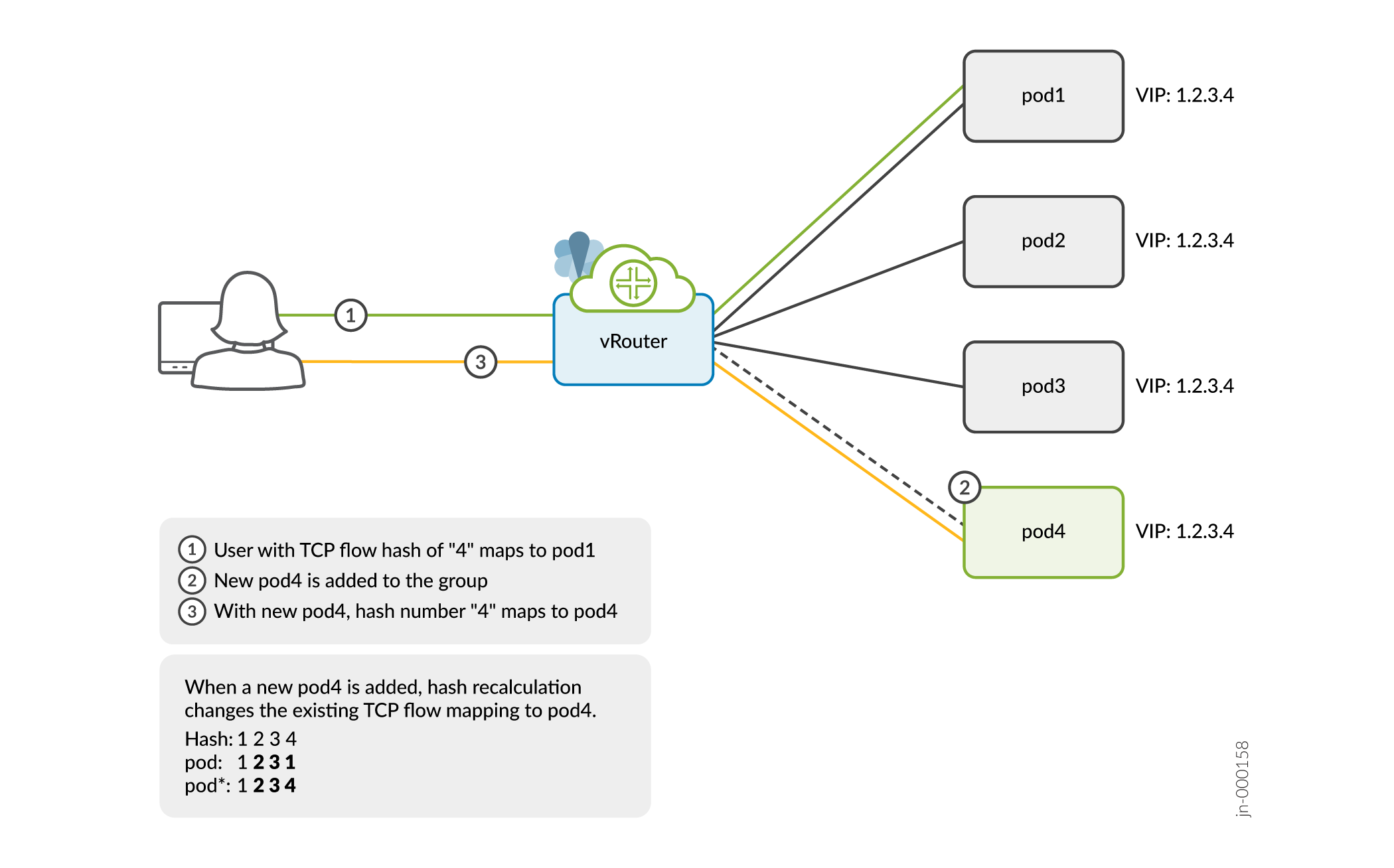

We’ll show you an example of a flow remapping problem that occurs when a new member is added to a three-member ECMP load-balancing system. See Figure 1 for the workflow.

In this example, you’ll send a flow request to the IP address 1.2.3.4. Because 1.2.3.4 is the VIP address of a three-member group, the vRouter sends the request to pod1 based on the flow hash calculation. Now add a new member, pod4, to the same VIP group. After the flow hash is recalculated, the request is redirected to pod4.
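To see why remapping happens, consider a simple static hash that takes the flow hash modulo the group size. This Python sketch is an assumption for illustration, not the vRouter's ECMP algorithm. Under a uniform hash, a flow keeps its pod only when the hash modulo 3 equals the hash modulo 4. That holds for 3 of every 12 hash values, so roughly three quarters of existing flows move when pod4 joins.

```python
import hashlib
from typing import List


def static_hash_member(flow_key: str, members: List[str]) -> str:
    """Static ECMP selection: hash the flow, then take it modulo the group size.

    Modulo over the member count is an assumption for illustration; it is
    not a description of the vRouter's ECMP index calculation.
    """
    h = int.from_bytes(hashlib.sha256(flow_key.encode()).digest()[:8], "big")
    return members[h % len(members)]


def count_remapped(flows: List[str], before: List[str], after: List[str]) -> int:
    """Count flows whose member changes when the ECMP group changes."""
    return sum(static_hash_member(f, before) != static_hash_member(f, after) for f in flows)


if __name__ == "__main__":
    group = ["pod1", "pod2", "pod3"]   # members behind VIP 1.2.3.4
    scaled_up = group + ["pod4"]       # pod4 joins the same VIP group
    flows = [f"10.0.0.{i % 250}:{30000 + i}->1.2.3.4:80" for i in range(1000)]
    moved = count_remapped(flows, group, scaled_up)
    print(f"{moved} of {len(flows)} flows moved to a different pod")
```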

Flow stickiness reduces this kind of remapping and keeps the flow on its original path to pod1, even though a new member, pod4, is added. If adding pod4 affects the flow, the vRouter reprograms the flow table so that the flow continues to be directed to pod1.

Table 1 shows the expected normal hash and flow stickiness results for the scale-up and scale-down scenarios.

| Example Scenario | Normal (Static) Hash Result | Flow Stickiness Result |
|---|---|---|
| ECMP group size is 3. | Based on the flow hash, traffic is directed to pod1. | Based on the flow hash, traffic is directed to pod1. |
| Add one more pod to the same service. ECMP group size is 4. | Flow redistribution is possible, and traffic may now be redirected to another pod. | Traffic continues to be directed to pod1. |
| Delete one pod from the same service. ECMP group size is 2. | Flow redistribution is possible, and traffic may now be redirected to another pod. | Unless pod1 is deleted, traffic continues to be directed to it. If pod1 is deleted, the client must reinitiate the session. |
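The behavior in Table 1 can be modeled with a flow table that pins each flow to the member it was first hashed to. This Python sketch is hypothetical: `StickyFlowTable`, `hash_member`, and the flow-key format are illustrative names, not vRouter internals. A pinned flow stays on its pod through scale-up and scale-down. It is dropped only when its own pod is deleted, at which point the client must start a new session.

```python
import hashlib
from typing import Dict, List, Optional


def hash_member(flow_key: str, members: List[str]) -> str:
    """Stand-in static hash: SHA-256 of the flow key modulo the group size."""
    h = int.from_bytes(hashlib.sha256(flow_key.encode()).digest()[:8], "big")
    return members[h % len(members)]


class StickyFlowTable:
    """Hypothetical flow table that pins each flow to its first-chosen member."""

    def __init__(self) -> None:
        self._pinned: Dict[str, str] = {}

    def forward(self, flow_key: str, members: List[str]) -> Optional[str]:
        """Return the member for this flow, or None if its pinned member is gone."""
        pinned = self._pinned.get(flow_key)
        if pinned is None:
            # New flow: use the normal hash, then remember the result.
            choice = hash_member(flow_key, members)
            self._pinned[flow_key] = choice
            return choice
        if pinned in members:
            # Scale-up or scale-down that kept this member: stay on it.
            return pinned
        # The pinned member was deleted: the session breaks and the client
        # must start a new one.
        del self._pinned[flow_key]
        return None


if __name__ == "__main__":
    table = StickyFlowTable()
    flow = "10.0.0.7:40001->1.2.3.4:80"
    pods = ["pod1", "pod2", "pod3"]
    first = table.forward(flow, pods)                        # ECMP group size 3
    print(table.forward(flow, pods + ["pod4"]) == first)     # True: size 4, same pod
    others = [p for p in pods if p != first]
    print(table.forward(flow, [first, others[0]]) == first)  # True: size 2, same pod kept
    print(table.forward(flow, others))                       # None: pinned pod deleted
```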

Flow stickiness is supported only when the flow is an ECMP flow both before and after scaling up or scaling down.

Here’s an example of how flow stickiness may not work as expected:

| Example Deployment | Scenario | Result |
|---|---|---|
| Two pods on two compute nodes, one on each. | Before scale-up | From the point of view of the compute node forwarding the traffic, the flow is a non-ECMP flow. |
| | After scale-up | The flow becomes an ECMP flow and triggers rehashing. This may cause flow stickiness to fail. |
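The limitation can be illustrated with a model in which a flow is pinned only while it is an ECMP flow. In this hypothetical Python sketch (`EcmpOnlyStickyTable` is an illustrative name), the forwarding compute node first sees a single next hop, so no pin is recorded. After scale-up, the flow becomes ECMP and is hashed afresh, so it may land on a different pod.

```python
import hashlib
from typing import Dict, List


def hash_member(flow_key: str, next_hops: List[str]) -> str:
    """Stand-in static hash over the flow key (not the vRouter's algorithm)."""
    h = int.from_bytes(hashlib.sha256(flow_key.encode()).digest()[:8], "big")
    return next_hops[h % len(next_hops)]


class EcmpOnlyStickyTable:
    """Hypothetical model: a flow is pinned only if it is an ECMP flow."""

    def __init__(self) -> None:
        self.pinned: Dict[str, str] = {}

    def forward(self, flow_key: str, next_hops: List[str]) -> str:
        if len(next_hops) == 1:
            # Non-ECMP flow: a single next hop, so nothing is pinned.
            return next_hops[0]
        if self.pinned.get(flow_key) in next_hops:
            return self.pinned[flow_key]
        # First time this flow is ECMP: hash it and pin the result.
        choice = hash_member(flow_key, next_hops)
        self.pinned[flow_key] = choice
        return choice


if __name__ == "__main__":
    table = EcmpOnlyStickyTable()
    flow = "10.0.0.7:40001->1.2.3.4:80"
    before = table.forward(flow, ["pod-a"])           # non-ECMP before scale-up
    after = table.forward(flow, ["pod-a", "pod-b"])   # ECMP after scale-up
    # No pin existed before scale-up, so 'after' comes from a fresh hash
    # and may differ from 'before'.
    print(before, after)
```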
