Understanding EVPN-MPLS Interworking with Junos Fusion Enterprise and MC-LAG

You can use Ethernet VPN (EVPN) to extend a Junos Fusion Enterprise or multichassis link aggregation group (MC-LAG) network over an MPLS network to a data center or campus network. With the introduction of this feature, you can now interconnect dispersed campus and data center sites to form a single Layer 2 virtual bridge.

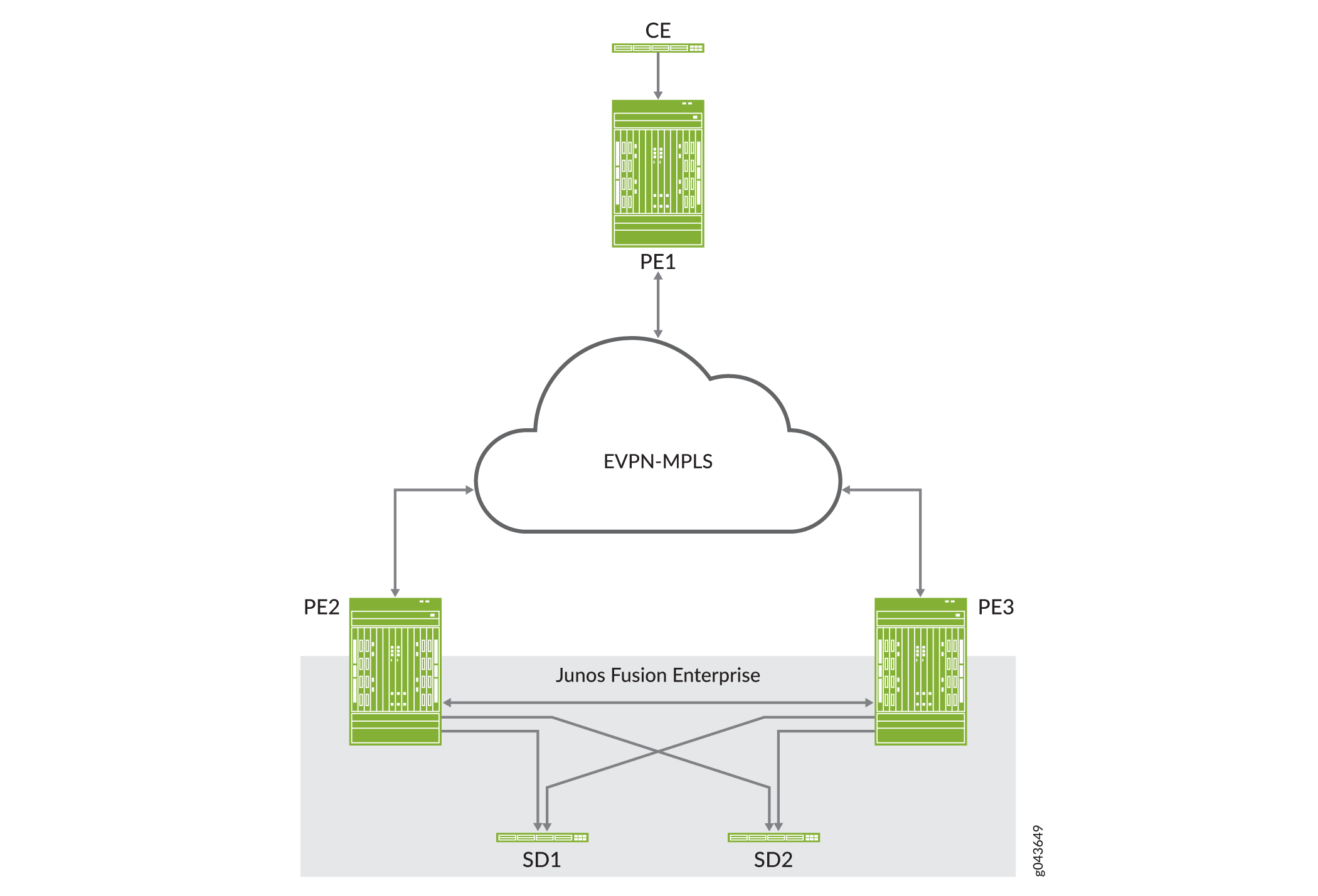

Figure 1 shows a Junos Fusion Enterprise topology with two EX9200 switches that serve as aggregation devices (PE2 and PE3) to which the satellite devices are multihomed. The two aggregation devices use an interchassis link (ICL) and the Inter-Chassis Control Protocol (ICCP) from MC-LAG to connect and maintain the Junos Fusion Enterprise topology. PE1 in the EVPN-MPLS environment interworks with PE2 and PE3 in the Junos Fusion Enterprise with MC-LAG.

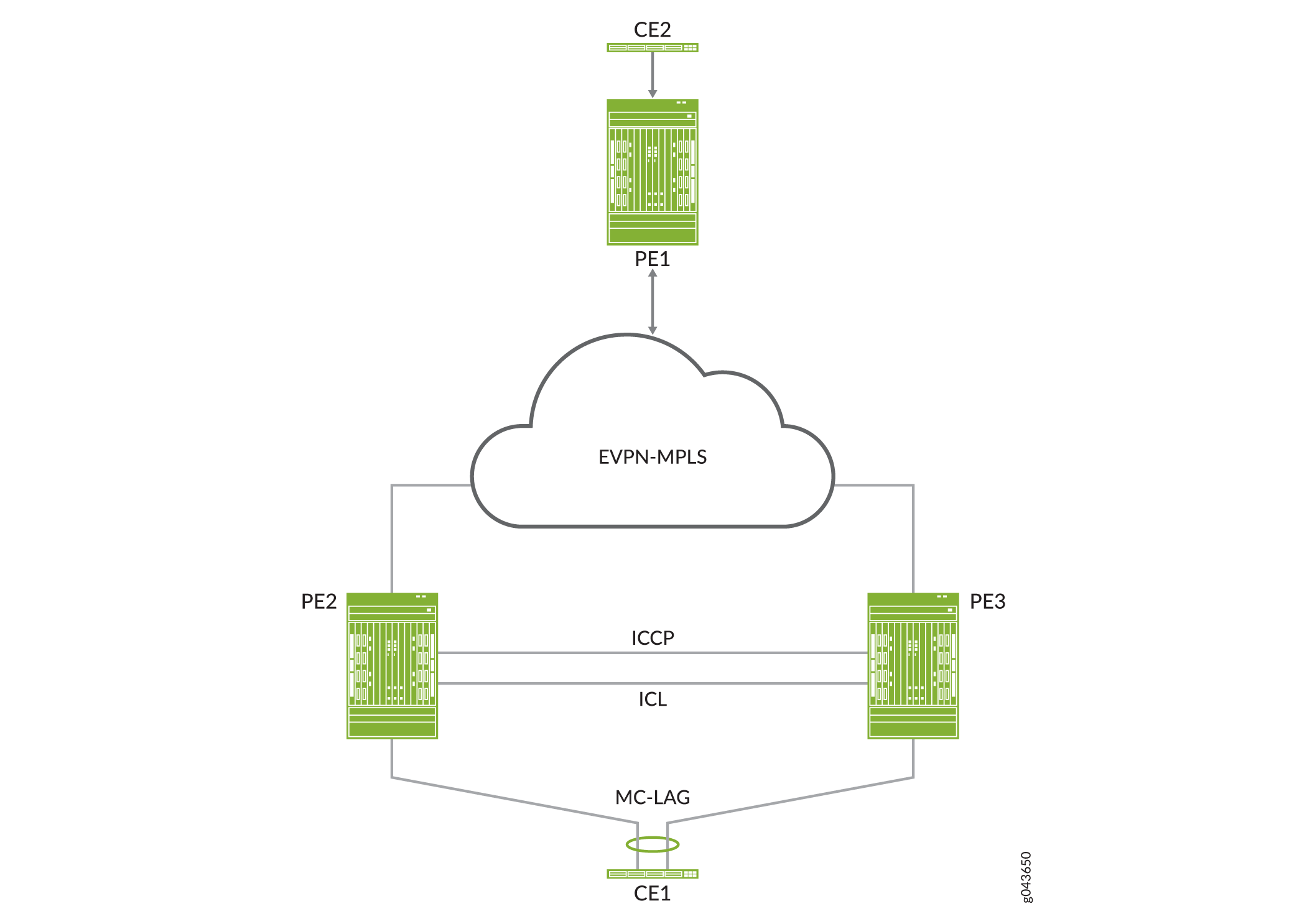

Figure 2 shows an MC-LAG topology in which customer edge (CE) device CE1 is multihomed to PE2 and PE3. PE2 and PE3 use an ICL and ICCP from MC-LAG to connect and maintain the topology. PE1 in the EVPN-MPLS environment interworks with PE2 and PE3 in the MC-LAG environment.

Throughout this topic, Figure 1 and Figure 2 serve as references to illustrate various scenarios and points.

The use cases depicted in Figure 1 and Figure 2 require you to configure both EVPN multihoming in active-active mode and MC-LAG on PE2 and PE3. EVPN active-active multihoming and MC-LAG each have their own forwarding logic for handling traffic, in particular broadcast, unknown unicast, and multicast (BUM) traffic. At times, the two sets of forwarding logic conflict with each other, which causes issues. This topic describes these issues and how the EVPN-MPLS interworking feature resolves them.

Apart from the EVPN-MPLS interworking-specific implementations described in this topic, EVPN-MPLS, Junos Fusion Enterprise, and MC-LAG offer the same functionality and behave the same way as they do as standalone features.

Benefits of Using EVPN-MPLS with Junos Fusion Enterprise and MC-LAG

Use EVPN-MPLS with Junos Fusion Enterprise and MC-LAG to interconnect dispersed campus and data center sites to form a single Layer 2 virtual bridge.

BUM Traffic Handling

In the use cases shown in Figure 1 and Figure 2, PE1, PE2, and PE3 are EVPN peers, and PE2 and PE3 are also MC-LAG peers. Both sets of peers exchange control information and forward traffic to each other, so PE2 and PE3 can exchange the same BUM traffic over more than one path, which causes issues. Table 1 outlines the issues that arise and how Juniper Networks resolves them in its implementation of the EVPN-MPLS interworking feature.

| BUM Traffic Direction | EVPN Interworking with Junos Fusion Enterprise and MC-LAG Logic | Issue | Juniper Networks Implementation Approach |
|---|---|---|---|
| North bound (PE2 receives a BUM packet from a locally attached single- or dual-homed interface). | PE2 floods the BUM packet to its locally attached interfaces, including the ICL, and to its EVPN peers over the EVPN-MPLS path. | Between PE2 and PE3, there are two BUM forwarding paths: the MC-LAG ICL and an EVPN-MPLS path. The multiple forwarding paths result in packet duplication and loops. | |
| South bound (PE1 forwards a BUM packet to PE2 and PE3). | PE2 and PE3 both receive a copy of the BUM packet and flood it out of all of their local interfaces, including the ICL. | PE2 and PE3 both forward the BUM packet out of the ICL, which results in packet duplication and loops. | |
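The duplication described in Table 1 can be illustrated with a small Python model. This is a sketch under simplifying assumptions, not Juniper's implementation: the dictionaries `MCLAG_PEER` and `EVPN_PEERS` and both functions are hypothetical, and each PE is assumed to apply the standalone MC-LAG and EVPN flooding rules with no interworking adjustments.

```python
from collections import Counter

# Hypothetical topology from Figure 1 / Figure 2.
MCLAG_PEER = {"PE2": "PE3", "PE3": "PE2"}  # peers joined by the ICL
EVPN_PEERS = {
    "PE1": ["PE2", "PE3"],
    "PE2": ["PE1", "PE3"],
    "PE3": ["PE1", "PE2"],
}


def naive_copies_northbound(ingress_pe: str) -> Counter:
    """Copies each PE receives when ingress_pe floods a locally received
    BUM packet using both the MC-LAG and EVPN rules unmodified."""
    copies = Counter()
    # MC-LAG rule: flood to all local interfaces, including the ICL.
    copies[MCLAG_PEER[ingress_pe]] += 1
    # EVPN rule: flood to every remote EVPN peer over MPLS.
    for peer in EVPN_PEERS[ingress_pe]:
        copies[peer] += 1
    return copies


def naive_icl_copies_southbound() -> Counter:
    """Copies that cross the ICL when PE1 sends a BUM packet to PE2 and PE3
    and each of them floods it out of all local interfaces."""
    copies = Counter()
    for receiver in EVPN_PEERS["PE1"]:      # PE2 and PE3 each get one copy
        copies[MCLAG_PEER[receiver]] += 1   # ...and each sends it over the ICL
    return copies


print(dict(naive_copies_northbound("PE2")))  # {'PE3': 2, 'PE1': 1}
print(dict(naive_icl_copies_southbound()))   # {'PE3': 1, 'PE2': 1}
```

In the north-bound case, PE3 receives the packet twice (once over the ICL and once over EVPN-MPLS); in the south-bound case, PE2 and PE3 each receive an extra copy over the ICL on top of the one from PE1. These are the duplicates that the Issue column refers to.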

Split Horizon

In the use cases shown in Figure 1 and Figure 2, split horizon prevents multiple copies of a BUM packet from being forwarded to a CE device (satellite device). However, the EVPN-MPLS and MC-LAG split horizon implementations contradict each other, which causes an issue. Table 2 explains the issue and how Juniper Networks resolves it in its implementation of the EVPN-MPLS interworking feature.

| BUM Traffic Direction | EVPN Interworking with Junos Fusion Enterprise and MC-LAG Logic | Issue | Juniper Networks Implementation Approach |
|---|---|---|---|
| North bound (PE2 receives a BUM packet from a locally attached dual-homed interface). | | The EVPN-MPLS and MC-LAG forwarding logic conflict with each other and can prevent BUM traffic from being forwarded to the Ethernet segment (ES). | Support local bias, which ignores the designated forwarder (DF) or non-DF status of the port for locally switched traffic. |
| South bound (PE1 forwards a BUM packet to PE2 and PE3). | Traffic received from PE1 follows the EVPN DF and non-DF forwarding rules for a multihomed ES. | None. | Not applicable. |
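The two rows of Table 2 can be sketched as a single forwarding predicate for one multihomed ES port. The `BumPacket` class, the `forwards_to_es_port` function, and port names such as `ae0` are hypothetical. The sketch covers only the rules stated in the table (local bias for locally received traffic, DF and non-DF rules for traffic from PE1), plus the general bridging assumption that a flooded packet is not sent back out of the port it arrived on.

```python
from dataclasses import dataclass


@dataclass
class BumPacket:
    from_core: bool   # True if received from a remote EVPN peer such as PE1
    source_port: str  # local arrival port; empty string for core traffic


def forwards_to_es_port(pkt: BumPacket, port: str, port_is_df: bool) -> bool:
    """Hypothetical decision: does this PE send a BUM copy out of `port`?"""
    if pkt.from_core:
        # South bound: follow EVPN DF / non-DF rules for the multihomed ES.
        return port_is_df
    # North bound: local bias. Locally switched traffic ignores DF status,
    # but (assumed general bridging rule) never goes back out its arrival port.
    return port != pkt.source_port


south = BumPacket(from_core=True, source_port="")
north = BumPacket(from_core=False, source_port="ae1")
print(forwards_to_es_port(south, "ae0", port_is_df=False))  # False
print(forwards_to_es_port(north, "ae0", port_is_df=False))  # True
```

The design choice to branch on where the packet came from, rather than on the port alone, mirrors the table: the same non-DF port drops core traffic but still forwards locally switched traffic.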

MAC Learning

EVPN and MC-LAG use the same method for learning MAC addresses: a PE device learns MAC addresses from its local interfaces and synchronizes them to its peers. However, because both EVPN and MC-LAG synchronize the addresses, an issue arises.

Table 3 describes the issue and how the EVPN-MPLS interworking implementation prevents the issue. The use cases shown in Figure 1 and Figure 2 illustrate the issue. In both use cases, PE1, PE2, and PE3 are EVPN peers, and PE2 and PE3 are MC-LAG peers.

| MAC Synchronization Use Case | EVPN Interworking with Junos Fusion Enterprise and MC-LAG Logic | Issue | Juniper Networks Implementation Approach |
|---|---|---|---|
| MAC addresses learned locally on single- or dual-homed interfaces on PE2 and PE3. | | PE2 and PE3 function as both EVPN peers and MC-LAG peers, which results in these devices having multiple MAC synchronization paths. | |
| MAC addresses learned locally on single- or dual-homed interfaces on PE1. | Between the EVPN peers, MAC addresses are synchronized using the EVPN BGP control plane. | None. | Not applicable. |
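The multiple synchronization paths in Table 3 can be shown with a hypothetical lookup of which control-plane paths carry a locally learned MAC address from one PE to another. The `SYNC_PATHS` table and `advertisements_received` function are illustrative assumptions: ICCP stands for the MC-LAG synchronization path and "EVPN BGP" for the EVPN control plane.

```python
from collections import defaultdict

# Hypothetical (source PE, destination PE) -> synchronization paths.
SYNC_PATHS = {
    # PE2 and PE3 are both MC-LAG peers (ICCP) and EVPN peers (BGP).
    ("PE2", "PE3"): ["ICCP", "EVPN BGP"],
    ("PE3", "PE2"): ["ICCP", "EVPN BGP"],
    # PE1 is an EVPN peer only.
    ("PE2", "PE1"): ["EVPN BGP"],
    ("PE3", "PE1"): ["EVPN BGP"],
    ("PE1", "PE2"): ["EVPN BGP"],
    ("PE1", "PE3"): ["EVPN BGP"],
}


def advertisements_received(learning_pe: str) -> dict:
    """Map each peer to the paths on which it hears a MAC address that
    learning_pe learned on a local interface."""
    received = defaultdict(list)
    for (src, dst), paths in SYNC_PATHS.items():
        if src == learning_pe:
            received[dst].extend(paths)
    return dict(received)


print(advertisements_received("PE2"))
# {'PE3': ['ICCP', 'EVPN BGP'], 'PE1': ['EVPN BGP']}
print(advertisements_received("PE1"))
# {'PE2': ['EVPN BGP'], 'PE3': ['EVPN BGP']}
```

Only the PE2-PE3 pair hears the same MAC address on two paths, which matches the first row of the table; MAC addresses learned on PE1 arrive over the EVPN BGP control plane alone.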

Handling a Down Link Between Cascade and Uplink Ports in a Junos Fusion Enterprise

This section applies only to EVPN-MPLS interworking with a Junos Fusion Enterprise.

In the Junos Fusion Enterprise shown in Figure 1, assume that aggregation device PE2 receives a BUM packet from PE1 and that the link between the cascade port on PE2 and the corresponding uplink port on satellite device SD1 is down. Regardless of whether the BUM packet is handled by MC-LAG or by EVPN active-active multihoming, the result is the same: PE2 forwards the packet over the ICL to PE3, which forwards it to dual-homed SD1.

To further illustrate how EVPN with multihoming active-active handles this situation with dual-homed SD1, assume that the DF interface resides on PE2 and is associated with the down link and that the non-DF interface resides on PE3. Typically, per EVPN with multihoming active-active forwarding logic, the non-DF interface drops the packet. However, because of the down link associated with the DF interface, PE2 forwards the BUM packet via the ICL to PE3, and the non-DF interface on PE3 forwards the packet to SD1.
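The DF scenario above can be sketched as a function that returns the hops a BUM packet from PE1 takes to reach SD1. The `delivery_path` function and its parameters are hypothetical; the model assumes one DF interface and one non-DF interface toward SD1, and it treats the hop between the two aggregation devices as the ICL.

```python
def delivery_path(df_pe: str, df_link_up: bool, non_df_pe: str) -> list:
    """Hops a BUM packet from PE1 takes to reach dual-homed SD1.

    df_pe hosts the DF interface toward SD1, non_df_pe hosts the non-DF
    interface, and df_link_up is the state of the DF's cascade-to-uplink link.
    """
    if df_link_up:
        # Usual EVPN active-active behavior: the DF delivers the packet,
        # and the non-DF interface drops its copy.
        return ["PE1", df_pe, "SD1"]
    # DF link is down: the DF's PE sends the packet over the ICL to its
    # MC-LAG peer, whose non-DF interface forwards it to SD1.
    return ["PE1", df_pe, non_df_pe, "SD1"]


print(delivery_path("PE2", df_link_up=False, non_df_pe="PE3"))
# ['PE1', 'PE2', 'PE3', 'SD1']
```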

Layer 3 Gateway Support

The EVPN-MPLS interworking feature supports the following Layer 3 gateway functionality for extended bridge domains and VLANs:

- Integrated routing and bridging (IRB) interfaces to forward traffic between the extended bridge domains or VLANs.
- Default Layer 3 gateways to forward traffic from a physical (bare-metal) server in an extended bridge domain or VLAN to a physical server or virtual machine in another extended bridge domain or VLAN.
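From a server's point of view, the default Layer 3 gateway decision can be sketched as follows: traffic to a destination in the server's own VLAN subnet is bridged, and anything else is sent to the IRB address that acts as the default gateway. This models host behavior only, not how the PE implements IRB. The VLAN IDs, the addresses in `IRB_GATEWAYS`, and the `next_hop` function are hypothetical examples.

```python
import ipaddress

# Hypothetical IRB interface (gateway address/prefix) for each extended VLAN.
IRB_GATEWAYS = {
    100: ipaddress.ip_interface("10.1.100.1/24"),
    200: ipaddress.ip_interface("10.1.200.1/24"),
}


def next_hop(src_vlan: int, dst_ip: str) -> str:
    """Bridge within the source VLAN's subnet; otherwise use its IRB gateway."""
    gateway = IRB_GATEWAYS[src_vlan]
    if ipaddress.ip_address(dst_ip) in gateway.network:
        return "bridge"
    return f"route via {gateway.ip}"


print(next_hop(100, "10.1.100.25"))  # bridge
print(next_hop(100, "10.1.200.7"))   # route via 10.1.100.1
```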
