Solution Benefits

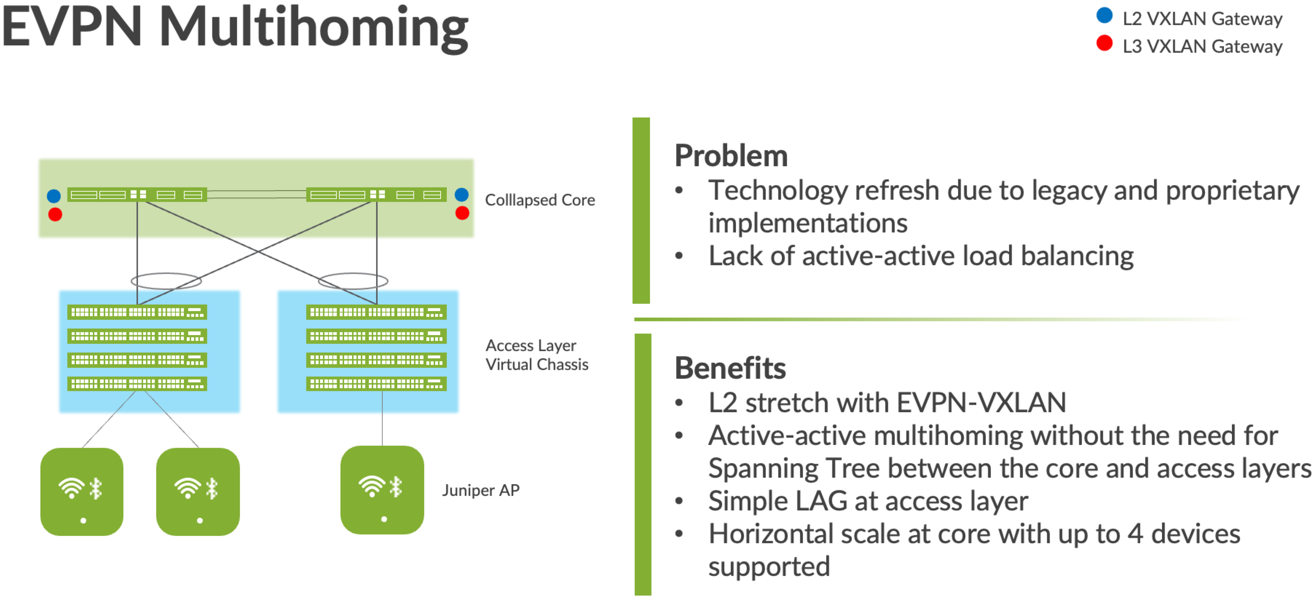

Most traditional campus architectures use single-vendor, chassis-based technologies that work well in small, static campuses with few endpoints. These architectures are too rigid to support the scalability and changing needs of modern large enterprises. Multi-chassis link aggregation group (MC-LAG) is a good example of a single-vendor technology that addresses the collapsed core deployment model. In this model, two chassis-based platforms typically sit in the core of your network and handle all Layer 2 and Layer 3 requirements while providing active/backup resiliency. MC-LAG does not interoperate between vendors, which creates lock-in, and it is limited to two devices.

A Juniper Networks EVPN multihoming solution based on EVPN-VXLAN addresses the collapsed core architecture and is simple, programmable, and built on a standards-based architecture that is common across campuses and data centers. See RFC 8365 for more information on this architecture.

EVPN multihoming uses a Layer 3 IP-based underlay network and an EVPN-VXLAN overlay network between the collapsed core Juniper switches. Broadcast, unknown unicast, and multicast (BUM) traffic is handled natively by EVPN, which eliminates the need for Spanning Tree Protocol (STP/RSTP). A flexible overlay network based on VXLAN tunnels, combined with an EVPN control plane, efficiently provides Layer 3 or Layer 2 connectivity. This architecture decouples the virtual topology from the physical topology, which improves network flexibility and simplifies network management. Endpoints that require Layer 2 adjacency, such as Internet of Things (IoT) devices, can be placed anywhere in the network and remain connected to the same logical Layer 2 network.

An EVPN multihoming deployment supports up to four collapsed core devices, all of which use EVPN-VXLAN. Because this standard is vendor-agnostic, you can keep your existing access layer infrastructure, connected through Link Aggregation Control Protocol (LACP), without the need to retrofit this layer of your network. Connectivity with legacy switches is accomplished with a standards-based Ethernet segment identifier link aggregation group (ESI-LAG), which uses LACP to interconnect with those switches.
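
As an illustration of why an access switch needs no changes, the following minimal Python sketch checks an ESI-LAG definition for consistency. It assumes the common multihoming practice in which every core device attached to the same access switch presents the same 10-octet ESI and the same 6-octet LACP system ID, so the access switch sees a single LACP partner. The class, function, and field names are hypothetical and are not part of any Juniper tool or API.

```python
from dataclasses import dataclass
from typing import List

MAX_CORE_DEVICES = 4  # limit stated in this section for EVPN multihoming


@dataclass(frozen=True)
class EsiLagMember:
    """One core switch's view of a shared ESI-LAG (illustrative fields only)."""
    device: str
    esi: str             # Ethernet segment identifier, colon-separated hex octets
    lacp_system_id: str  # LACP system ID, colon-separated hex octets


def _octet_count(value: str) -> int:
    return len(value.split(":"))


def validate_esi_lag(members: List[EsiLagMember]) -> List[str]:
    """Return a list of problems; an empty list means the definition is consistent."""
    if not members:
        return ["no core devices defined"]
    problems = []
    if len(members) > MAX_CORE_DEVICES:
        problems.append(
            f"{len(members)} core devices exceed the limit of {MAX_CORE_DEVICES}"
        )
    for m in members:
        if _octet_count(m.esi) != 10:
            problems.append(f"{m.device}: ESI must be 10 octets")
        if _octet_count(m.lacp_system_id) != 6:
            problems.append(f"{m.device}: LACP system ID must be 6 octets")
    # Assumption: all members must agree on both identifiers so the
    # access switch bundles its uplinks into one LAG.
    if len({m.esi.lower() for m in members}) > 1:
        problems.append("ESI differs between core devices")
    if len({m.lacp_system_id.lower() for m in members}) > 1:
        problems.append("LACP system ID differs between core devices")
    return problems
```

A check like this could run before configuration is pushed; the ESI and system ID values themselves are chosen by the operator or the management platform.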

Benefits of Campus Fabric EVPN Multihoming

The traditional Ethernet switching approach is inefficient because it relies on broadcast and multicast to announce Media Access Control (MAC) addresses. It is also difficult to manage because you need to manually configure VLANs to extend them to new network ports. The explosive growth of mobile and IoT devices compounds this problem significantly.

EVPN multihoming’s underlay topology is supported by a routing protocol that ensures loopback interface reachability between nodes. For EVPN multihoming, Juniper Mist Wired Assurance supports eBGP between the core switching platforms. These devices function as VXLAN tunnel endpoints (VTEPs) that encapsulate and decapsulate VXLAN traffic. A VTEP is the construct within the switching platform that originates and terminates VXLAN tunnels. These devices also route and bridge packets in and out of VXLAN tunnels as required. EVPN multihoming addresses the collapsed core model traditionally supported by technologies like MC-LAG and Virtual Router Redundancy Protocol (VRRP). You can therefore retain your investment at the access layer, including the fiber or cabling plant, which can terminate on up to four core devices.
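
To make the encapsulation step concrete, the following minimal Python sketch builds and parses the 8-byte VXLAN header defined in RFC 7348, which carries a 24-bit VXLAN network identifier (VNI) in front of the original Ethernet frame. It covers only the VXLAN header, not the outer UDP, IP, and Ethernet headers that a VTEP also adds. The function names are hypothetical and chosen for illustration.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag in RFC 7348: the VNI field is valid
VXLAN_UDP_PORT = 4789        # IANA-assigned destination port for VXLAN


def build_vxlan_header(vni: int) -> bytes:
    """Pack the 8-byte VXLAN header for the given 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) followed by 24 reserved bits.
    # Word 2: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from the first 8 bytes of a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return vni_word >> 8


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the VXLAN header to an Ethernet frame (outer headers omitted)."""
    return build_vxlan_header(vni) + inner_frame


def decapsulate(payload: bytes) -> tuple:
    """Return (vni, inner_frame) from a VXLAN payload."""
    return parse_vni(payload), payload[8:]
```

For example, `decapsulate(encapsulate(frame, 10010))` returns the VNI `10010` together with the original frame, which is the round trip a pair of VTEPs performs across the underlay.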

This architecture provides optimized, seamless, and standards-compliant Layer 2 or Layer 3 connectivity. Juniper Networks EVPN-VXLAN campus networks provide the following benefits:

- Consistent, scalable architecture—Enterprises typically have multiple sites with different size requirements. A common EVPN-VXLAN-based campus architecture is consistent across all sites, irrespective of the size. EVPN-VXLAN scales out or scales in as a site evolves.

- Multi-vendor deployment—The EVPN-VXLAN architecture uses standards-based protocols so enterprises can deploy campus networks using multi-vendor network equipment. There is no single vendor lock-in requirement.

- Reduced flooding and learning—Control plane-based Layer 2 and Layer 3 learning reduces the flood and learn issues associated with data plane learning. Learning MAC addresses in the forwarding plane has an adverse impact on network performance as the number of endpoints grows. The EVPN control plane handles the exchange and learning of routes, so newly learned MAC addresses are not exchanged in the forwarding plane.

- Location-agnostic connectivity—The EVPN-VXLAN campus architecture provides a consistent endpoint experience no matter where the endpoint is located. Some endpoints require Layer 2 reachability, such as legacy building security systems or IoT devices. The Layer 2 VXLAN overlay provides Layer 2 reachability across campuses without any changes to the underlay network. With our standards-based network access control integration, an endpoint can be connected anywhere in the network.

- Underlay agnostic—VXLAN as an overlay is underlay agnostic. With a VXLAN overlay, you can connect multiple campuses with a Layer 2 VPN or Layer 3 VPN service from a WAN provider or by using IPsec over the Internet.

- Consistent network segmentation—A universal EVPN-VXLAN-based architecture across campuses and data centers means consistent end-to-end network segmentation for endpoints and applications.

- Simplified management—Campuses and data centers based on a common EVPN-VXLAN design can use common tools and network teams to deploy and manage campus and data center networks.
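
The reduced flooding and learning benefit listed above can be sketched as a toy Python model. When one VTEP learns a MAC address on a local port, the control plane advertises it to the other VTEPs (in EVPN this is a MAC/IP advertisement route, route type 2), so they can forward known unicast traffic into the right tunnel without flooding first. This is a simplified illustration and not a BGP implementation; all class and function names, and the example addresses, are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Vtep:
    name: str
    loopback: str
    # (vni, mac) -> next hop: a local port name or a remote VTEP loopback
    mac_table: dict = field(default_factory=dict)


class EvpnControlPlane:
    """Stands in for the EVPN sessions between the core switches."""

    def __init__(self):
        self.vteps = []

    def attach(self, vtep: Vtep) -> None:
        self.vteps.append(vtep)

    def advertise_mac(self, origin: Vtep, vni: int, mac: str) -> None:
        # Models a MAC/IP advertisement reaching every other VTEP.
        for vtep in self.vteps:
            if vtep is not origin:
                vtep.mac_table[(vni, mac)] = origin.loopback


def learn_local(fabric: EvpnControlPlane, vtep: Vtep, vni: int, mac: str, port: str) -> None:
    """Record a locally learned MAC and announce it through the control plane."""
    vtep.mac_table[(vni, mac)] = port
    fabric.advertise_mac(vtep, vni, mac)


def lookup(vtep: Vtep, vni: int, mac: str) -> str:
    """Return the next hop, or 'flood' when the MAC is unknown (BUM handling)."""
    return vtep.mac_table.get((vni, mac), "flood")


fabric = EvpnControlPlane()
core1 = Vtep("core1", "192.0.2.1")
core2 = Vtep("core2", "192.0.2.2")
fabric.attach(core1)
fabric.attach(core2)
learn_local(fabric, core1, 10010, "aa:bb:cc:00:00:01", "ae0")
```

After the call to `learn_local`, `core2` already maps the MAC address to the loopback of `core1` even though it has not seen a single frame from that endpoint, while an address that was never advertised still resolves to `"flood"`.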