Using a Remote Server for NorthStar Planner

As of NorthStar 6.2.4, you can install NorthStar Controller with a remote Planner server (a server separate from the NorthStar application server), to distribute the NorthStar Operator and NorthStar Planner server loads. This also helps ensure that the processes of each do not interfere with the processes of the other. Both the web Planner and the desktop Planner application are then run from the remote server. Be aware that to work in NorthStar Planner, you must still log in from the NorthStar Controller web UI login page.

Using a remote server for NorthStar Planner does not make NorthStar Planner independent of NorthStar Controller. As of now, there is no standalone Planner.

We recommend using a remote Planner server if any of the following are true:

- Your network has more than 250 nodes

- You typically run multiple concurrent Planner users and/or multiple concurrent Planner sessions

- You work extensively with Planner simulations

In NorthStar HA cluster setups, there is one remote Planner server for the whole cluster. It is configured to communicate with the virtual IP of the cluster.

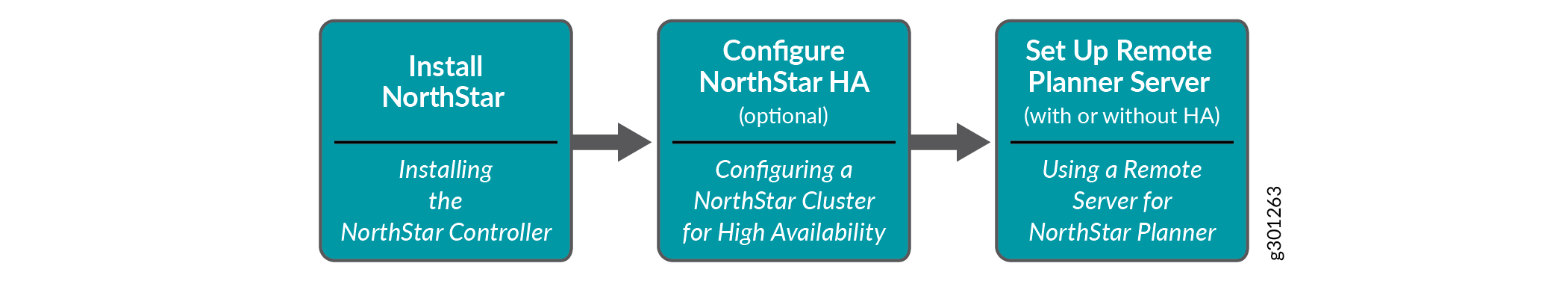

Process Overview: Installing and Configuring Remote Planner Server

Figure 1 shows the high-level process you would follow to install NorthStar Controller with a remote Planner server. Installing and configuring NorthStar comes first. If you want a NorthStar HA cluster, you would set that up next. Finally, you would install and configure the remote Planner server. The text in italics indicates the topics in the NorthStar Getting Started Guide that cover the steps.

Setting up the remote Planner server is a two-step process that you perform on the remote server:

1. Run the remote Planner server installation script.

   This script prepares the remote server for the tasks that will be performed by the setup utility.

2. Run the remote Planner server setup utility.

   This utility copies your NorthStar license file from the NorthStar Controller to the remote Planner, and restarts the necessary NorthStar processes on the Controller. It disables Planner components on the NorthStar Controller application server so they can be run from the remote server instead. It also ensures that communication between the application server and the remote Planner server works. In an HA scenario, you run the setup utility once for each application server in the cluster, ending with the active node.

The setup utility requires you to provide the IP address of the NorthStar application server (the physical IP address or, in an HA deployment, both the physical and virtual IP addresses) and the IP address of the remote Planner server. You must also enter your server root password for access to the application server.

Download the Software to the Remote Planner Server

The NorthStar Controller software download page is available at https://www.juniper.net/support/downloads/?p=northstar#sw.

From the Version drop-down list, select the version number. Versions 6.2.4 and later support remote Planner server.

Click the NorthStar Application (which includes the RPM bundle) to download it. You do not need to download the Junos VM to the remote Planner server.

Install the Remote Planner Server

On the remote Planner server, execute the following commands:

[root@remote-server~]# yum install <rpm-filename>
[root@remote-server~]# cd /opt/northstar/northstar_bundle_x.x.x/
[root@remote-server~]# ./install-remote_planner.sh

Run the Remote Planner Server Setup Utility

This utility (setup_remote_planner.py) configures the NorthStar application server and the remote Planner server so the processes are distributed correctly between the two and so the servers can communicate with each other.

Three scenarios are described here. The effects on certain NorthStar components on the NorthStar Controller (application server) are as follows:

| Scenario | Effect on Cassandra Database | Effect on Web Processes |
|---|---|---|
| Non-HA Setup: Use this procedure if you do not have a NorthStar HA cluster configured. | modified and restarted | modified and restarted |
| HA Active Node Setup: Use this procedure to configure the active node in your NorthStar HA cluster. | not modified and not restarted | modified and restarted |
| HA Standby Node Setup: Use this procedure to configure the standby nodes in your NorthStar HA cluster. | not modified and not restarted | modified, but not restarted |
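When planning a change, it can be handy to have these effects available as data. The following Python sketch transcribes the table; the scenario keys (`non-ha`, `ha-active`, `ha-standby`) and the helper names are illustrative labels chosen for this example, not identifiers used by NorthStar.

```python
# Effects of setup_remote_planner.py on the NorthStar Controller
# (application server), transcribed from the table in this topic.
# The dictionary keys are hypothetical labels for the three scenarios.
EFFECTS = {
    "non-ha": {
        "cassandra": "modified and restarted",
        "web": "modified and restarted",
    },
    "ha-active": {
        "cassandra": "not modified and not restarted",
        "web": "modified and restarted",
    },
    "ha-standby": {
        "cassandra": "not modified and not restarted",
        "web": "modified, but not restarted",
    },
}


def is_restarted(effect):
    """Return True only when the effect text says the component restarts."""
    return effect.endswith("restarted") and "not restarted" not in effect


def restarted_components(scenario):
    """List the components that the utility restarts in a given scenario."""
    return sorted(
        name for name, effect in EFFECTS[scenario].items() if is_restarted(effect)
    )
```

Calling `restarted_components("non-ha")` lists both components, which is why the non-HA procedure is the one that warrants saving and closing Planner sessions first.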

Non-HA Setup Procedure

The following procedure guides you through using the setup utility in interactive mode. As an alternative to interactive mode, you can launch the utility and enter the two required IP addresses directly in the CLI. The setup utility is located in /opt/northstar/utils/. To launch the utility:

[root@hostname~]# ./setup_remote_planner.py remote-planner-ip controller-ip

You will be required to enter your server root password to continue.
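If you script direct CLI mode, a small wrapper can catch mistyped addresses before the utility runs. The sketch below is a hypothetical helper (`build_setup_argv` is not part of NorthStar). It only validates the addresses with Python's standard `ipaddress` module and assembles them in the positional order shown in the usage line. The optional third address corresponds to the cluster virtual IP that HA nodes require.

```python
import ipaddress


def build_setup_argv(remote_planner_ip, controller_ip, controller_vip=None):
    """Return the argument list for direct CLI mode.

    Positional order follows the usage lines in this topic:
    remote Planner IP, controller (physical) IP, and then the cluster
    virtual IP for HA nodes only.
    """
    ips = [remote_planner_ip, controller_ip]
    if controller_vip is not None:
        ips.append(controller_vip)
    for ip in ips:
        # ip_address() raises ValueError for a malformed address.
        ipaddress.ip_address(ip)
    return ["./setup_remote_planner.py"] + ips
```

The returned list could be passed to `subprocess.run(argv, cwd="/opt/northstar/utils")`. Because the helper does not handle credentials, the utility would still prompt for the root password.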

If you use interactive mode:

On the remote Planner server, launch the remote Planner server setup utility:

[root@hostname~]# ./setup_remote_planner.py
Remote Planner requires Controller to have port 6379 and 9042 opened.

Respond to the prompts. You’ll need to provide the IP addresses for the NorthStar application server and the remote Planner server, and your server root password. For example:

Is Controller running in HA setup? (Y/N) N
Controller IP Address for Remote Planner to connect to: 10.53.65.245
Remote Planner IP Address for Controller to connect to: 10.53.65.244
Cassandra on Controller will be restarted. Are you sure you want to proceed? (Y/N) Y
Controller 10.53.65.245 root password:
Configuring Controller...

The setup utility proceeds to configure the NorthStar Controller application server, configure the remote Planner server, test the Cassandra database connection, and check the Redis connection. The following sample output shows the progress you would see and indicates which processes end up on the application server and which on the remote Planner server. All processes should be RUNNING when the setup is complete.

Configuring Controller...
bmp:bmpMonitor RUNNING pid 20150, uptime 12 days, 20:30:10
collector:worker1 RUNNING pid 21794, uptime 12 days, 20:28:25
collector:worker2 RUNNING pid 21796, uptime 12 days, 20:28:25
collector:worker3 RUNNING pid 21795, uptime 12 days, 20:28:25
collector:worker4 RUNNING pid 21797, uptime 12 days, 20:28:25
collector_main:beat_scheduler RUNNING pid 22721, uptime 12 days, 20:26:39
collector_main:es_publisher RUNNING pid 22722, uptime 12 days, 20:26:39
collector_main:task_scheduler RUNNING pid 22723, uptime 12 days, 20:26:39
config:cmgd RUNNING pid 22087, uptime 12 days, 20:28:08
config:cmgd-rest RUNNING pid 22088, uptime 12 days, 20:28:08
docker:dockerd RUNNING pid 20167, uptime 12 days, 20:30:10
epe:epeplanner RUNNING pid 20151, uptime 12 days, 20:30:10
infra:cassandra RUNNING pid 26573, uptime 0:00:25
infra:ha_agent RUNNING pid 20160, uptime 12 days, 20:30:10
infra:healthmonitor RUNNING pid 21760, uptime 12 days, 20:28:40
infra:license_monitor RUNNING pid 20161, uptime 12 days, 20:30:10
infra:prunedb RUNNING pid 20157, uptime 12 days, 20:30:10
infra:rabbitmq RUNNING pid 20159, uptime 12 days, 20:30:10
infra:redis_server RUNNING pid 20163, uptime 12 days, 20:30:10
infra:zookeeper RUNNING pid 20158, uptime 12 days, 20:30:10
ipe:ipe_app RUNNING pid 22720, uptime 12 days, 20:26:39
listener1:listener1_00 RUNNING pid 21788, uptime 12 days, 20:28:29
netconf:netconfd_00 RUNNING pid 22719, uptime 12 days, 20:26:39
northstar:anycastGrouper RUNNING pid 22714, uptime 12 days, 20:26:40
northstar:configServer RUNNING pid 22718, uptime 12 days, 20:26:39
northstar:mladapter RUNNING pid 22716, uptime 12 days, 20:26:40
northstar:npat RUNNING pid 22717, uptime 12 days, 20:26:39
northstar:pceserver RUNNING pid 22566, uptime 12 days, 20:27:56
northstar:privatetlvproxy RUNNING pid 22582, uptime 12 days, 20:27:46
northstar:prpdclient RUNNING pid 22713, uptime 12 days, 20:26:40
northstar:scheduler RUNNING pid 22715, uptime 12 days, 20:26:40
northstar:topologyfilter RUNNING pid 22711, uptime 12 days, 20:26:40
northstar:toposerver RUNNING pid 22712, uptime 12 days, 20:26:40
northstar_pcs:PCServer RUNNING pid 22602, uptime 12 days, 20:27:35
northstar_pcs:PCViewer RUNNING pid 22601, uptime 12 days, 20:27:35
northstar_pcs:SRPCServer RUNNING pid 22603, uptime 12 days, 20:27:35
web:app RUNNING pid 26877, uptime 0:00:12
web:gui RUNNING pid 26878, uptime 0:00:12
web:notification RUNNING pid 26876, uptime 0:00:12
web:proxy RUNNING pid 26879, uptime 0:00:12
web:restconf RUNNING pid 19271, uptime 0:00:28
web:resthandler RUNNING pid 19275, uptime 0:00:12
Controller configuration completed!
Configuring Remote Planner...
infra:rabbitmq RUNNING pid 3587, uptime 3 days, 2:53:21
northstar:npat RUNNING pid 3585, uptime 3 days, 2:53:21
web:planner RUNNING pid 6557, uptime 0:00:11
Remote Planner configuration completed!
Testing Cassandra connection...
Connected to Test Cluster at 10.49.128.199:9042.
Cassandra connection OK.
Checking Redis connection...
Redis port is listening.
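With this many processes, scanning the output by eye for anything that is not RUNNING is error-prone. The following Python sketch shows one way to check a captured copy of the output. It assumes the utility prints one process per line in the `group:process STATE ...` form shown in the sample, and that each section starts with a `Configuring ...` line. The function names are hypothetical.

```python
def parse_status(output):
    """Map (section, 'group:process') to the reported state.

    The section is taken from the most recent 'Configuring <name>...' line,
    so that a process name reported for both servers (for example
    northstar:npat) is tracked separately for each.
    """
    states = {}
    section = ""
    for raw_line in output.splitlines():
        line = raw_line.strip()
        if line.startswith("Configuring ") and line.endswith("..."):
            section = line[len("Configuring "):-3]
            continue
        fields = line.split()
        # Status lines begin with a 'group:process' token followed by an
        # upper-case state; progress messages do not match this shape.
        if len(fields) >= 2 and ":" in fields[0] and fields[1].isupper():
            states[(section, fields[0])] = fields[1]
    return states


def not_running(output):
    """Return the (section, process) pairs whose state is not RUNNING."""
    return sorted(
        key for key, state in parse_status(output).items() if state != "RUNNING"
    )
```

An empty list from `not_running()` matches the expectation for a non-HA setup or an HA active node. On an HA standby node, a non-empty list is normal because some processes are expected to be STOPPED there.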

HA Standby Node Setup

As in the non-HA scenario, you don’t have to use interactive mode for the setup script. You can launch the utility and enter the required IP addresses directly in the CLI as follows (three IP addresses in the case of cluster nodes):

[root@hostname~]# ./setup_remote_planner.py remote-planner-ip controller-physical-ip controller-vip

This is the same for both active and standby nodes. The setup utility automatically distinguishes between the two.

You will be required to enter your server root password to continue.

Whether you use interactive or direct CLI mode, you run the utility once for each standby node in the HA cluster, using the appropriate IP addresses for each node.
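Because the standby nodes are configured before the active node, it can help to generate the full command sequence up front. The following Python sketch assumes you already know which node is active. The helper name and its parameters are hypothetical.

```python
def ha_setup_commands(remote_planner_ip, cluster_vip, standby_ips, active_ip):
    """Return one direct-CLI command per cluster node, active node last."""
    if active_ip in standby_ips:
        raise ValueError("the active node must not also be listed as a standby")
    # Standby nodes first, in the order given, then the active node.
    ordered = list(standby_ips) + [active_ip]
    return [
        f"./setup_remote_planner.py {remote_planner_ip} {physical_ip} {cluster_vip}"
        for physical_ip in ordered
    ]
```

Each returned string follows the three-address usage line above, and each run would still prompt for the root password of the node being configured.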

If you use interactive mode:

On the remote Planner server, launch the remote Planner server setup utility:

[root@hostname~]# ./setup_remote_planner.py
Remote Planner requires Controller to have port 6379 and 9042 opened.

Respond to the prompts. You’ll need to provide both the virtual IP and physical IP addresses for the standby application node, the IP address for the remote Planner server, and your server root password. For example:

Remote Planner requires Controller to have port 6379 and 9042 opened.
Is Controller running in HA setup? (Y/N) Y
Controller Cluster Virtual IP Address for Remote Planner to connect to: 10.51.132.196
Controller Cluster Physical IP Address for Remote Planner to connect to: 10.51.132.206
Remote Planner IP Address for Controller to connect to: 10.51.132.206
Controller 10.49.237.69 root password:

The setup utility proceeds to configure the NorthStar Controller application server, configure the remote Planner server, test the Cassandra database connection, and check the Redis connection. The following sample output shows the progress you would see and indicates which processes end up on the application server and which on the remote Planner server. Because this is a standby node in an HA cluster configuration, it is normal and expected for some processes to be STOPPED when the setup is complete.

Configuring Controller...
analytics:elasticsearch RUNNING pid 7942, uptime 2:27:50
analytics:esauthproxy RUNNING pid 7946, uptime 2:27:50
analytics:logstash RUNNING pid 7945, uptime 2:27:50
analytics:netflowd RUNNING pid 7944, uptime 2:27:50
analytics:pipeline RUNNING pid 7943, uptime 2:27:50
bmp:bmpMonitor RUNNING pid 14342, uptime 3:48:40
collector:worker1 RUNNING pid 7172, uptime 2:32:31
collector:worker2 RUNNING pid 7174, uptime 2:32:31
collector:worker3 RUNNING pid 7173, uptime 2:32:31
collector:worker4 RUNNING pid 7175, uptime 2:32:31
collector_main:beat_scheduler STOPPED Sep 28 01:43 PM
collector_main:es_publisher STOPPED Sep 28 01:43 PM
collector_main:task_scheduler STOPPED Sep 28 01:42 PM
config:cmgd STOPPED Sep 28 01:43 PM
config:cmgd-rest STOPPED Sep 28 01:43 PM
config:ns_config_monitor STOPPED Sep 28 01:43 PM
docker:dockerd RUNNING pid 15181, uptime 3:48:22
epe:epeplanner RUNNING pid 18685, uptime 3:38:46
infra:cassandra RUNNING pid 3919, uptime 2:55:54
infra:ha_agent RUNNING pid 6972, uptime 2:33:50
infra:healthmonitor RUNNING pid 7131, uptime 2:32:45
infra:license_monitor RUNNING pid 3916, uptime 2:55:54
infra:prunedb STOPPED Sep 28 02:20 PM
infra:rabbitmq RUNNING pid 3914, uptime 2:55:54
infra:redis_server RUNNING pid 3918, uptime 2:55:54
infra:zookeeper RUNNING pid 3907, uptime 2:55:54
ipe:ipe_app STOPPED Sep 28 01:43 PM
listener1:listener1_00 RUNNING pid 7166, uptime 2:32:35
netconf:netconfd_00 STOPPED Sep 28 01:42 PM
northstar:anycastGrouper STOPPED Sep 28 01:43 PM
northstar:configServer STOPPED Sep 28 01:36 PM
northstar:mladapter STOPPED Sep 28 01:36 PM
northstar:npat STOPPED Sep 28 01:36 PM
northstar:pceserver STOPPED Sep 28 01:42 PM
northstar:prpdclient STOPPED Sep 28 01:36 PM
northstar:scheduler STOPPED Sep 28 01:36 PM
northstar:toposerver STOPPED Sep 28 01:36 PM
northstar_pcs:PCServer STOPPED Sep 28 01:36 PM
northstar_pcs:PCViewer STOPPED Sep 28 01:36 PM
web:app STOPPED Not started
web:gui STOPPED Not started
web:notification STOPPED Not started
web:proxy STOPPED Not started
web:resthandler STOPPED Not started
Controller configuration completed!
Configuring Remote Planner...
infra:rabbitmq RUNNING pid 3587, uptime 3 days, 3:03:30
northstar:npat RUNNING pid 3585, uptime 3 days, 3:03:30
web:planner RUNNING pid 6650, uptime 0:00:10
Remote Planner configuration completed!
Testing Cassandra connection...
Connected to NorthStar Cluster at 10.49.237.68:9042.
Cassandra connection OK.
Checking Redis connection...
Redis port is listening.

HA Active Node Setup

After you have completed the remote server setup for all the standby nodes, you are ready to run setup_remote_planner.py for the active node in the cluster.

If you launch the utility and enter the three required IP addresses directly in the CLI, it looks like this:

[root@hostname~]# ./setup_remote_planner.py remote-planner-ip controller-physical-ip controller-vip

This is the same for both active and standby nodes. The setup utility automatically distinguishes between the two.

You will be required to enter your server root password to continue.

If you use interactive mode:

On the remote Planner server, launch the remote Planner server setup utility:

[root@hostname~]# ./setup_remote_planner.py
Remote Planner requires Controller to have port 6379 and 9042 opened.

Respond to the prompts. You’ll need to provide both the virtual IP and physical IP addresses for the active application node, the IP address for the remote Planner server, and your server root password. For example:

Controller Cluster Virtual IP Address for Remote Planner to connect to: 10.49.237.68
Controller Cluster Physical IP Address for Remote Planner to connect to: 10.49.237.70
Remote Planner IP Address for Controller to connect to: 10.49.128.198
Controller 10.49.237.70 root password:

The setup utility proceeds to configure the NorthStar Controller application server, configure the remote Planner server, test the Cassandra database connection, and check the Redis connection. The following sample output shows the progress you would see and highlights which processes end up on the application server and which on the remote Planner server. Because this is the active node in an HA cluster configuration, all processes should be RUNNING when the setup is complete.

Configuring Controller...
analytics:elasticsearch RUNNING pid 28516, uptime 2:38:47
analytics:esauthproxy RUNNING pid 28520, uptime 2:38:47
analytics:logstash RUNNING pid 28519, uptime 2:38:47
analytics:netflowd RUNNING pid 28518, uptime 2:38:47
analytics:pipeline RUNNING pid 28517, uptime 2:38:47
bmp:bmpMonitor RUNNING pid 14360, uptime 3:57:22
collector:worker1 RUNNING pid 27521, uptime 2:40:34
collector:worker2 RUNNING pid 27523, uptime 2:40:34
collector:worker3 RUNNING pid 27522, uptime 2:40:34
collector:worker4 RUNNING pid 27525, uptime 2:40:34
collector_main:es_publisher RUNNING pid 27388, uptime 2:40:48
collector_main:task_scheduler RUNNING pid 27389, uptime 2:40:48
config:cmgd RUNNING pid 26496, uptime 2:42:18
config:cmgd-rest RUNNING pid 26498, uptime 2:42:18
config:ns_config_monitor RUNNING pid 26497, uptime 2:42:18
docker:dockerd RUNNING pid 15199, uptime 3:57:02
epe:epeplanner RUNNING pid 6764, uptime 3:47:03
infra:cassandra RUNNING pid 27016, uptime 3:10:43
infra:ha_agent RUNNING pid 26101, uptime 2:43:27
infra:healthmonitor RUNNING pid 26074, uptime 2:43:38
infra:license_monitor RUNNING pid 27010, uptime 3:10:43
infra:prunedb RUNNING pid 26059, uptime 2:43:49
infra:rabbitmq RUNNING pid 27007, uptime 3:10:43
infra:redis_server RUNNING pid 27012, uptime 3:10:43
infra:zookeeper RUNNING pid 27006, uptime 3:10:43
ipe:ipe_app RUNNING pid 27387, uptime 2:40:48
listener1:listener1_00 RUNNING pid 29161, uptime 3:01:56
netconf:netconfd_00 RUNNING pid 27386, uptime 2:40:48
northstar:anycastGrouper RUNNING pid 19762, uptime 0:01:53
northstar:configServer RUNNING pid 27385, uptime 2:40:48
northstar:mladapter RUNNING pid 27383, uptime 2:40:48
northstar:npat RUNNING pid 27384, uptime 2:40:48
northstar:pceserver RUNNING pid 27154, uptime 2:41:54
northstar:prpdclient RUNNING pid 27381, uptime 2:40:48
northstar:scheduler RUNNING pid 27382, uptime 2:40:48
northstar:toposerver RUNNING pid 27380, uptime 2:40:48
northstar_pcs:PCServer RUNNING pid 27192, uptime 2:41:44
northstar_pcs:PCViewer RUNNING pid 27191, uptime 2:41:44
web:app RUNNING pid 16817, uptime 0:00:11
web:gui RUNNING pid 16818, uptime 0:00:11
web:notification RUNNING pid 16816, uptime 0:00:11
web:proxy RUNNING pid 16819, uptime 0:00:11
web:resthandler RUNNING pid 16820, uptime 0:00:11
Controller configuration completed!
Configuring Remote Planner...
infra:rabbitmq RUNNING pid 3587, uptime 3 days, 3:12:12
northstar:npat RUNNING pid 3585, uptime 3 days, 3:12:12
web:planner RUNNING pid 6777, uptime 0:00:10
Remote Planner configuration completed!
Testing Cassandra connection...
Connected to NorthStar Cluster at 10.49.237.68:9042.
Cassandra connection OK.
Checking Redis connection...
Redis port is listening.

Installing Remote Planner Server at a Later Time

As an alternative to installing and setting up the remote Planner server when you initially install or upgrade NorthStar, you can add the remote Planner server later. In this case, be aware that running setup_remote_planner.py in a non-HA scenario includes a restart of the Cassandra database, so all existing Planner sessions should be saved and closed before you start the process. We recommend performing the setup during a maintenance window. In the case of a NorthStar cluster, the Cassandra database is not restarted.

In a network where a remote Planner server is already installed and functioning, you might need to switch from one NorthStar Controller application server to another, for example, if the server goes down. It's also possible for the IP address of the application server to change, which would interrupt communication between the application server and the remote Planner server. In those situations, you need only run the setup_remote_planner.py utility again from the remote Planner server, entering the new IP address of the application server.
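Before rerunning the utility against a new address, you may want to confirm that the remote Planner server can reach the application server on the two ports the utility names (6379 for Redis and 9042 for Cassandra). The following Python sketch performs a plain TCP connect test only; it does not authenticate or speak either protocol. The function name is hypothetical.

```python
import socket

# Ports named in the setup utility's banner message.
REQUIRED_PORTS = {"Redis": 6379, "Cassandra": 9042}


def check_ports(host, ports=REQUIRED_PORTS, timeout=3.0):
    """Return {service: True/False} for a TCP connect attempt to each port."""
    results = {}
    for service, port in ports.items():
        try:
            # create_connection() raises OSError on refusal or timeout.
            with socket.create_connection((host, port), timeout=timeout):
                results[service] = True
        except OSError:
            results[service] = False
    return results
```

Run from the remote Planner server, `check_ports("<new-controller-ip>")` returning `False` for either service suggests a firewall or addressing problem to resolve before you run setup_remote_planner.py again.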