Installing the NorthStar Controller

You can use the procedures described in the following sections if you are performing a fresh install of NorthStar Controller or upgrading from an earlier release, unless you are using NorthStar analytics and are upgrading from a release older than NorthStar 4.3. Steps that are not required if upgrading are noted. Before performing a fresh install of NorthStar, you must first use the ./uninstall_all.sh script to uninstall any older versions of NorthStar on the device. See Uninstalling the NorthStar Controller Application.

If you are upgrading from a release earlier than NorthStar 4.3 and you are using NorthStar analytics, you must upgrade NorthStar manually using the procedure described in Upgrading from Pre-4.3 NorthStar with Analytics.

If you are upgrading NorthStar from a release earlier than NorthStar 6.0.0, you must redeploy the analytics settings after you upgrade the NorthStar application nodes. Do this from the Analytics Data Collector Configuration Settings menu described in Installing Data Collectors for Analytics. Redeploying ensures that netflowd can communicate with cMGD, which is required for the NorthStar CLI available starting in NorthStar 6.1.0.

We also recommend that you uninstall any pre-existing older versions of Docker before you install NorthStar. Installing NorthStar will install a current version of Docker.

The NorthStar software and data are installed in the /opt directory, so be sure to allocate sufficient disk space there. See NorthStar Controller System Requirements for our disk space and memory recommendations.

When upgrading NorthStar Controller, ensure that the /tmp directory has enough free space to save the contents of the /opt/pcs/data directory because the /opt/pcs/data directory contents are backed up to /tmp during the upgrade process.
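Before an upgrade, you can compare the size of /opt/pcs/data with the free space in /tmp. The following is a minimal sketch, not a NorthStar-provided tool: the function name check_backup_space is hypothetical, and it assumes GNU coreutils du and df as shipped with CentOS/RHEL 7. It succeeds only if /tmp has strictly more free space than the data occupies, with no extra safety margin.

```shell
#!/usr/bin/env bash
# check_backup_space [SRC_DIR] [DEST_DIR]  (hypothetical helper)
# Returns 0 if DEST_DIR's filesystem has more free space than SRC_DIR uses.
check_backup_space() {
  local src="${1:-/opt/pcs/data}" dest="${2:-/tmp}" need_kb free_kb
  # Disk usage of the data to be backed up, in KiB.
  need_kb=$(du -sk "$src" 2>/dev/null | awk '{print $1}')
  # Available KiB on the filesystem holding DEST (POSIX df output, column 4).
  free_kb=$(df -Pk "$dest" 2>/dev/null | awk 'NR == 2 {print $4}')
  if [ -z "$need_kb" ] || [ -z "$free_kb" ]; then
    echo "cannot read sizes for $src or $dest" >&2
    return 1
  fi
  echo "need=${need_kb}KiB free=${free_kb}KiB"
  [ "$free_kb" -gt "$need_kb" ]
}
```

Run check_backup_space with no arguments to check the default paths; if it returns a nonzero status, free up space in /tmp before you start the upgrade.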

If you are installing NorthStar for a high availability (HA) cluster, ensure that:

- You configure each server individually using these instructions before proceeding to HA setup.
- The database and rabbitmq passwords are the same for all servers that will be in the cluster.
- All server time is synchronized by NTP using the following procedure:

  1. Install NTP.

     yum -y install ntp

  2. Specify the preferred NTP server in ntp.conf.

  3. Verify the configuration.

     ntpq -p

Note: All cluster nodes must have the same time zone and system time settings. This is important to prevent inconsistencies in the database storage of SNMP and LDP task collection delta values.
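The NTP steps above can be scripted on each cluster node. This is a sketch under stated assumptions: the helper set_preferred_ntp_server is hypothetical, ntp.example.net is a placeholder server name, and the sketch comments out the existing server lines in /etc/ntp.conf so that every node follows the same source (keep them if your site policy differs). It relies on GNU sed -i, as shipped with CentOS/RHEL 7.

```shell
#!/usr/bin/env bash
# set_preferred_ntp_server SERVER [CONF_FILE]  (hypothetical helper)
set_preferred_ntp_server() {
  local server="$1" conf="${2:-/etc/ntp.conf}"
  if [ -z "$server" ]; then
    echo "usage: set_preferred_ntp_server SERVER [CONF_FILE]" >&2
    return 1
  fi
  # Comment out existing server lines so all nodes use the same time source.
  sed -i 's/^server /#server /' "$conf" || return 1
  # Add the preferred server; iburst speeds up initial synchronization.
  echo "server ${server} prefer iburst" >> "$conf"
}
```

On each node, a typical sequence would be yum -y install ntp, set_preferred_ntp_server ntp.example.net, systemctl enable ntpd, systemctl start ntpd, and then ntpq -p; once the node has synchronized, ntpq -p marks the selected server with an asterisk. If chronyd is running, disable it first, because only one time service should discipline the clock.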

To upgrade NorthStar Controller in an HA cluster environment, see Upgrade the NorthStar Controller Software in an HA Environment.

For HA setup after all the servers that will be in the cluster have been configured, see Configuring a NorthStar Cluster for High Availability.

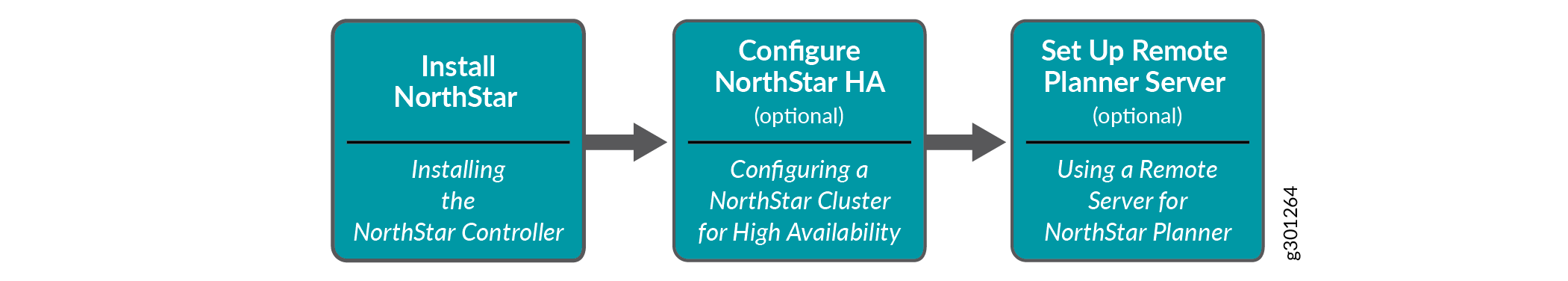

To set up a remote server for NorthStar Planner, see Using a Remote Server for NorthStar Planner.

Figure 1 shows the high-level order of tasks. Install and configure NorthStar first. If you want a NorthStar HA cluster, set it up next. Finally, if you want to use a remote server for NorthStar Planner, install and configure that server. The text in italics indicates the topics in the NorthStar Getting Started Guide that cover each step.

The following sections describe the download, installation, and initial configuration of NorthStar.

The NorthStar software includes a number of third-party packages. To avoid possible conflicts, we recommend that you install these packages only as part of the NorthStar Controller RPM bundle installation rather than installing them manually.

Activate Your NorthStar Software

To obtain your serial number certificate and license key, see Obtain Your License Keys and Software for the NorthStar Controller.

Download the Software

The NorthStar Controller software download page is available at https://www.juniper.net/support/downloads/?p=northstar#sw.

- From the Version drop-down list, select the version number.

- Click the NorthStar Application (which includes the RPM bundle and the Ansible playbook) and the NorthStar JunosVM to download them.

If Upgrading, Back Up Your JunosVM Configuration and iptables

If you are doing an upgrade from a previous NorthStar release, and you previously installed NorthStar and Junos VM together, back up your JunosVM configuration before installing the new software. Restoration of the JunosVM configuration is performed automatically after the upgrade is complete as long as you use the net_setup.py utility to save your backup.
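The JunosVM configuration backup is handled by net_setup.py (/opt/northstar/utils/net_setup.py). For the iptables half of this step, a minimal sketch might save the current rules with the standard iptables-save command. The function name backup_iptables, the destination directory, and the timestamped file name are assumptions for illustration; the IPTABLES_SAVE variable exists only so that the command can be swapped out for testing.

```shell
#!/usr/bin/env bash
# backup_iptables DEST_DIR  (hypothetical helper)
# Saves the current iptables rules to a timestamped file and prints its path.
backup_iptables() {
  local dest_dir="$1" save_cmd="${IPTABLES_SAVE:-iptables-save}" file
  if [ -z "$dest_dir" ]; then
    echo "usage: backup_iptables DEST_DIR" >&2
    return 1
  fi
  mkdir -p "$dest_dir" || return 1
  file="${dest_dir}/iptables-$(date +%Y%m%d-%H%M%S).rules"
  # iptables-save writes the complete rule set to stdout.
  "$save_cmd" > "$file" || return 1
  echo "$file"
}
```

For example, backup_iptables /root/ns-upgrade-backup (a placeholder directory) prints the path of the saved file. After the upgrade, you can reload it with iptables-restore < FILE if the new installation changed your rules.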

If Upgrading from an Earlier Service Pack Installation

You cannot upgrade directly to NorthStar Release 6.2.4 from an earlier NorthStar release that has service packs installed; for example, you cannot upgrade directly to NorthStar Release 6.2.4 from a NorthStar 6.2.0 SP1 or 6.1.0 SP5 installation. To upgrade to NorthStar Release 6.2.4 from such an installation, you must either roll back the service packs or run the upgrade_NS_with_patches.sh script, which allows a newer NorthStar version to be installed over the service packs.

To upgrade to NorthStar Release 6.2.4, before proceeding with the installation:

Install NorthStar Controller

You can either install the RPM bundle on a physical server or use a two-VM installation method in an OpenStack environment, in which the JunosVM is not bundled with the NorthStar Controller software.

The following optional parameters are available for use with the install.sh command:

| Option | Description |
| --- | --- |
| --vm | Same as ./install-vm.sh; creates a two-VM installation. |
| --crpd | Creates a cRPD installation. |
| --skip-bridge | For a physical server installation, skips checking whether the external0 and mgmt0 bridges exist. |

The default bridges are external0 and mgmt0. If the physical server has two interfaces, such as eth0 and eth1, you must bind the bridges to those interfaces. You can also define any bridge names relevant to your deployment.

We recommend that you configure the bridges before running install.sh.

Bridges are not used with cRPD installations.
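As an illustration of binding a bridge to a physical interface, the following sketch writes CentOS/RHEL 7 network-scripts files so that a bridge such as external0 is attached to an interface such as eth0. It assumes the legacy network service (ifcfg files under /etc/sysconfig/network-scripts) and placeholder addressing from the documentation ranges; write_bridge_ifcfg is a hypothetical helper, not part of NorthStar. It overwrites any existing ifcfg files with the same names, so back those up first.

```shell
#!/usr/bin/env bash
# write_bridge_ifcfg BRIDGE IFACE IPADDR NETMASK [NETDIR]  (hypothetical helper)
write_bridge_ifcfg() {
  if [ "$#" -lt 4 ]; then
    echo "usage: write_bridge_ifcfg BRIDGE IFACE IPADDR NETMASK [NETDIR]" >&2
    return 1
  fi
  local bridge="$1" iface="$2" ipaddr="$3" netmask="$4"
  local netdir="${5:-/etc/sysconfig/network-scripts}"

  # The bridge carries the host IP address.
  printf '%s\n' \
    "DEVICE=${bridge}" \
    "TYPE=Bridge" \
    "ONBOOT=yes" \
    "BOOTPROTO=none" \
    "IPADDR=${ipaddr}" \
    "NETMASK=${netmask}" > "${netdir}/ifcfg-${bridge}" || return 1

  # The physical interface is attached to the bridge and has no address of its own.
  printf '%s\n' \
    "DEVICE=${iface}" \
    "ONBOOT=yes" \
    "BOOTPROTO=none" \
    "BRIDGE=${bridge}" > "${netdir}/ifcfg-${iface}"
}
```

For example, run write_bridge_ifcfg external0 eth0 192.0.2.10 255.255.255.0 and write_bridge_ifcfg mgmt0 eth1 198.51.100.10 255.255.255.0, then apply the change with systemctl restart network before running install.sh.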

For a physical server installation, execute the following commands to install NorthStar Controller:

[root@hostname~]# yum install <rpm-filename>
[root@hostname~]# cd /opt/northstar/northstar_bundle_x.x.x/
[root@hostname~]# ./install.sh

Note: yum install works for both upgrades and fresh installations.

For a two-VM installation, execute the following commands to install NorthStar Controller:

[root@hostname~]# yum install <rpm-filename>
[root@hostname~]# cd /opt/northstar/northstar_bundle_x.x.x/
[root@hostname~]# ./install-vm.sh

Note: yum install works for both upgrades and fresh installations.

The script offers the opportunity to change the JunosVM IP address from the system default of 172.16.16.2.

Checking current disk space
INFO: Current available disk space for /opt/northstar is 34G. Will proceed with installation.
System currently using 172.16.16.2 as NTAD/junosvm ip
Do you wish to change NTAD/junosvm ip (Y/N)? y
Please specify junosvm ip:

For a cRPD installation, you must have:

- CentOS or Red Hat Enterprise Linux 7.x. Earlier versions are not supported.
- A Junos cRPD license.

The license is installed during NorthStar installation. Verify that the cRPD license is installed by running the show system license command in the cRPD container.

Note: If you require multiple BGP-LS peerings on different subnets for different AS domains at the same time, choose the default JunosVM approach. cRPD does not support this configuration.

For a cRPD installation, execute the following commands to install NorthStar Controller:

[root@hostname~]# yum install <rpm-filename>
[root@hostname~]# cd /opt/northstar/northstar_bundle_x.x.x/
[root@hostname~]# ./install.sh --crpd

Note: yum install works for both upgrades and fresh installations.
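The three installation methods differ only in the script invoked from the bundle directory. A minimal sketch that captures this mapping follows; the type labels physical, two-vm, and crpd and both function names are hypothetical, and the bundle directory is passed in because its version-specific name (northstar_bundle_x.x.x) depends on the release you downloaded.

```shell
#!/usr/bin/env bash
# Print the install command for a (hypothetical) installation type label.
northstar_install_cmd() {
  case "$1" in
    physical) echo "./install.sh" ;;
    two-vm)   echo "./install-vm.sh" ;;     # same as ./install.sh --vm
    crpd)     echo "./install.sh --crpd" ;;
    *)        echo "unknown installation type: $1" >&2; return 1 ;;
  esac
}

# install_northstar TYPE RPM_FILE BUNDLE_DIR  (hypothetical wrapper)
# Installs the RPM, then runs the matching script from the bundle directory.
install_northstar() {
  local type="$1" rpm="$2" bundle_dir="$3" cmd
  cmd=$(northstar_install_cmd "$type") || return 1
  yum install "$rpm" || return 1
  # The subshell keeps the caller's working directory unchanged.
  # $cmd is intentionally unquoted so "./install.sh --crpd" splits into two words.
  ( cd "$bundle_dir" && $cmd )
}
```

The install scripts prompt interactively (for example, for passwords and the JunosVM IP address), so run the wrapper from an interactive root shell.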

Configure Support for Different JunosVM Versions

This procedure is not applicable to cRPD installations.

If you are using a two-VM installation, in which the JunosVM is not bundled with the NorthStar Controller, you might need to edit the northstar.cfg file to make the NorthStar Controller compatible with the external VM by changing the version of NTAD used. For a NorthStar cluster configuration, you must change the NTAD version in the northstar.cfg file on every node in the cluster. NTAD is a 32-bit process, so the JunosVM device that runs NTAD must be configured accordingly. You can copy the default JunosVM configuration provided with the NorthStar release (for use in a nested installation). At a minimum, ensure that the force-32-bit flag is set:

[northstar@jvm1]# set system processes routing force-32-bit

To change the NTAD version in the northstar.cfg file:

Create Passwords

This step is not required if you are doing an upgrade rather than a fresh installation.

When prompted, enter new database/rabbitmq, web UI Admin, and cMGD root passwords.

Enable the NorthStar License

This step is not required if you are doing an upgrade rather than a fresh installation.

You must enable the NorthStar license as follows, unless you are performing an upgrade and you have an activated license.

Adjust Firewall Policies

The iptables default rules could interfere with NorthStar-related traffic. If necessary, adjust the firewall policies.

Refer to NorthStar Controller System Requirements for a list of ports that must be allowed by iptables and firewalls.
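If you manage the host firewall with iptables directly, a sketch like the following can generate ACCEPT rules for the TCP ports listed in NorthStar Controller System Requirements. It only prints the commands so that you can review them before applying them; allow_tcp_ports_cmds is a hypothetical helper, and it covers TCP only (add UDP rules separately if the requirements list any).

```shell
#!/usr/bin/env bash
# allow_tcp_ports_cmds PORT...  (hypothetical helper)
# Prints one iptables ACCEPT rule per TCP port; applies nothing itself.
allow_tcp_ports_cmds() {
  local port
  for port in "$@"; do
    # Reject anything that is not a decimal port number in 1-65535.
    case "$port" in
      ''|*[!0-9]*) echo "invalid port: $port" >&2; return 1 ;;
    esac
    if [ "$port" -lt 1 ] || [ "$port" -gt 65535 ]; then
      echo "invalid port: $port" >&2
      return 1
    fi
    echo "iptables -I INPUT -p tcp --dport ${port} -j ACCEPT"
  done
}
```

For example, allow_tcp_ports_cmds 4189 prints a rule for the IANA-assigned PCEP port; after reviewing the output, pipe it to sh to apply it. On CentOS/RHEL 7 with the iptables-services package, service iptables save persists the running rules.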

Launch the Net Setup Utility

This step is not required if you are doing an upgrade rather than a fresh installation.

For installations that include a remote Planner server, the Net Setup utility is used only on the Controller server and not on the Remote Planner Server. Instead, the install-remote_planner.sh installation script launches a different setup utility, called setup_remote_planner.py. See Using a Remote Server for NorthStar Planner.

Launch the Net Setup utility to perform host server configuration.

[root@northstar]# /opt/northstar/utils/net_setup.py

The main menu that appears is slightly different depending on whether your installation uses Junos VM or is a cRPD installation.

For Junos VM installations (installation on a physical server or a two-VM installation), the main menu looks like this:

Main Menu:
.............................................
A.) Host Setting
.............................................
B.) JunosVM Setting
.............................................
C.) Check Network Setting
.............................................
D.) Maintenance & Troubleshooting
.............................................
E.) HA Setting
.............................................
F.) Collect Trace/Log
.............................................
G.) Analytics Data Collector Setting
(External standalone/cluster analytics server)
.............................................
H.) Setup SSH Key for external JunosVM setup
.............................................
I.) Internal Analytics Setting (HA)
.............................................
X.) Exit
.............................................
Please select a letter to execute.

For cRPD installations, the main menu looks like this:

Main Menu:
.............................................
A.) Host Setting
.............................................
B.) Junos CRPD Setting
.............................................
C.) Check Network Setting
.............................................
D.) Maintenance & Troubleshooting
.............................................
E.) HA Setting
.............................................
F.) Collect Trace/Log
.............................................
G.) Analytics Data Collector Setting
(External standalone/cluster analytics server)
.............................................
I.) Internal Analytics Setting (HA)
.............................................
X.) Exit
.............................................
Please select a letter to execute.

Notice that option B is specific to cRPD, and option H is not available because it is not relevant to cRPD.

Configure the Host Server

This step is not required if you are doing an upgrade rather than a fresh installation.

Configure the JunosVM and its Interfaces

This section applies to physical server or two-VM installations that use Junos VM. If you are installing NorthStar using cRPD, skip this section and proceed to Configure Junos cRPD Settings.

This step is not required if you are doing an upgrade rather than a fresh installation.

From the Setup Main Menu, configure the JunosVM and its interfaces. Ping the JunosVM to ensure that it is up before attempting to configure it. The net_setup script uses IP 172.16.16.2 to access the JunosVM using the login name northstar.
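Because the JunosVM can take some time to come up, you might poll it before opening the JunosVM Setting menu. The helper below is a hypothetical sketch that retries ping (iputils options -c for count and -W for timeout in seconds) against the default JunosVM address 172.16.16.2; the attempt count and interval are arbitrary defaults.

```shell
#!/usr/bin/env bash
# wait_for_host HOST [ATTEMPTS] [INTERVAL_SECONDS]  (hypothetical helper)
# Returns 0 as soon as HOST answers one ping, 1 if it never does.
wait_for_host() {
  local host="$1" attempts="${2:-10}" interval="${3:-5}" i
  for (( i = 1; i <= attempts; i++ )); do
    if ping -c 1 -W 2 "$host" >/dev/null 2>&1; then
      echo "$host is reachable (attempt $i)"
      return 0
    fi
    # Wait before the next attempt, but not after the last one.
    if (( i < attempts )); then
      sleep "$interval"
    fi
  done
  echo "$host did not answer after $attempts attempts" >&2
  return 1
}
```

For example, wait_for_host 172.16.16.2 && /opt/northstar/utils/net_setup.py launches the Net Setup utility only after the JunosVM responds.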

Configure Junos cRPD Settings

From the Setup Main Menu, configure the Junos cRPD settings. This section applies only to cRPD installations (not to installations that use Junos VM).

Set Up the SSH Key for External JunosVM

This section only applies to two-VM installations. Skip this section if you are installing NorthStar using cRPD.

This step is not required if you are doing an upgrade rather than a fresh installation.

For a two-VM installation, you must set up the SSH key for the external JunosVM.

From the Main Menu, enter H (Setup SSH Key for external JunosVM setup).

Follow the prompts to provide your JunosVM username and router login class (super-user, for example). The script verifies your login credentials, downloads the JunosVM SSH key file, and returns you to the main menu.

For example:

Main Menu:
.............................................
A.) Host Setting
.............................................
B.) JunosVM Setting
.............................................
C.) Check Network Setting
.............................................
D.) Maintenance & Troubleshooting
.............................................
E.) HA Setting
.............................................
F.) Collect Trace/Log
.............................................
G.) Analytics Data Collector Setting
(External standalone/cluster analytics server)
.............................................
H.) Setup SSH Key for external JunosVM setup
.............................................
I.) Internal Analytics Setting (HA)
.............................................
X.) Exit
.............................................
Please select a letter to execute.
H
Please provide JunosVM login:
admin
2 VMs Setup is detected
Script will create user: northstar. Please provide user northstar router login class e.g super-user, operator:
super-user
The authenticity of host '10.49.118.181 (10.49.118.181)' can't be established.
RSA key fingerprint is xx:xx:xx:xx:xx:xx:xx:xx:xx:xx:xx:xx:xx:xx:xx:xx.
Are you sure you want to continue connecting (yes/no)? yes
Applying user northstar login configuration
Downloading JunosVM ssh key file. Login to JunosVM
Checking md5 sum. Login to JunosVM
SSH key has been sucessfully updated

Main Menu:
.............................................
A.) Host Setting
.............................................
B.) JunosVM Setting
.............................................
C.) Check Network Setting
.............................................
D.) Maintenance & Troubleshooting
.............................................
E.) HA Setting
.............................................
F.) Collect Trace/Log
.............................................
G.) Analytics Data Collector Setting
(External standalone/cluster analytics server)
.............................................
H.) Setup SSH Key for external JunosVM setup
.............................................
I.) Internal Analytics Setting (HA)
.............................................
X.) Exit
.............................................
Please select a letter to execute.

Upgrade the NorthStar Controller Software in an HA Environment

There are some special considerations for upgrading NorthStar Controller when you have an HA cluster configured. Use the following procedure:

The newly upgraded software automatically inherits the net_setup settings, HA configurations, and all credentials from the previous installation. Therefore, it is not necessary to re-run net_setup unless you want to change settings, HA configurations, or password credentials.