Configure a vSRX Virtual Firewall Chassis Cluster in Junos OS

Chassis Cluster Overview

Chassis cluster groups a pair of the same kind of vSRX Virtual Firewall instances into a cluster to provide network node redundancy. The devices must be running the same Junos OS release. You connect the control virtual interfaces on the respective nodes to form a control plane that synchronizes the configuration and Junos OS kernel state. The control link (a virtual network or vSwitch) facilitates the redundancy of interfaces and services. Similarly, you connect the data plane on the respective nodes over the fabric virtual interfaces to form a unified data plane. The fabric link (a virtual network or vSwitch) allows for the management of cross-node flow processing and for the management of session redundancy.

The control plane software operates in active/passive mode. When configured as a chassis cluster, one node acts as the primary device and the other as the secondary device to ensure stateful failover of processes and services in the event of a system or hardware failure on the primary device. If the primary device fails, the secondary device takes over processing of control plane traffic.

If you configure a chassis cluster on vSRX Virtual Firewall nodes across two physical hosts, disable igmp-snooping on the host bridge to which the control vNICs and the host physical interface belong. This ensures that the control link heartbeat is received by both nodes in the chassis cluster.

The chassis cluster data plane operates in active/active mode. In a chassis cluster, the data plane updates session information as traffic traverses either device, and it transmits information between the nodes over the fabric link to guarantee that established sessions are not dropped when a failover occurs. In active/active mode, traffic can enter the cluster on one node and exit from the other node.

Chassis cluster functionality includes:

- Resilient system architecture, with a single active control plane for the entire cluster and multiple Packet Forwarding Engines. This architecture presents a single device view of the cluster.

- Synchronization of configuration and dynamic runtime states between nodes within a cluster.

- Monitoring of physical interfaces, and failover if the failure parameters cross a configured threshold.

- Support for generic routing encapsulation (GRE) and IP-over-IP (IP-IP) tunnels used to route encapsulated IPv4 or IPv6 traffic by means of two internal interfaces, gr-0/0/0 and ip-0/0/0, respectively. Junos OS creates these interfaces at system startup and uses these interfaces only for processing GRE and IP-IP tunnels.

At any given instant, a cluster node can be in one of the following states: hold, primary, secondary-hold, secondary, ineligible, or disabled. Multiple event types, such as interface monitoring, Services Processing Unit (SPU) monitoring, failures, and manual failovers, can trigger a state transition.
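One way to check which of these states each node currently reports is the operational-mode status command. The following is a minimal sketch, assuming a cluster that has already formed and the hostname vsrx0 used elsewhere in this topic. Redundancy group 1 is an assumed example. Output is omitted because it varies by release and deployment.

```
# Show the state and priority of each node for every redundancy group.
user@vsrx0> show chassis cluster status
# Limit the output to one redundancy group (group 1 is an assumed example).
user@vsrx0> show chassis cluster status redundancy-group 1
```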

Enable Chassis Cluster Formation

- Chassis Cluster Provisioning on vSRX Virtual Firewall

- Interface Naming and Mapping

- Enabling Chassis Cluster Formation

Chassis Cluster Provisioning on vSRX Virtual Firewall

Setting up connectivity for a chassis cluster on vSRX Virtual Firewall instances is similar to setting it up on physical SRX Series Firewalls. Each vSRX Virtual Firewall VM connects its virtual NICs (such as VMXNET3 or virtio) to a virtual network (or vSwitch).

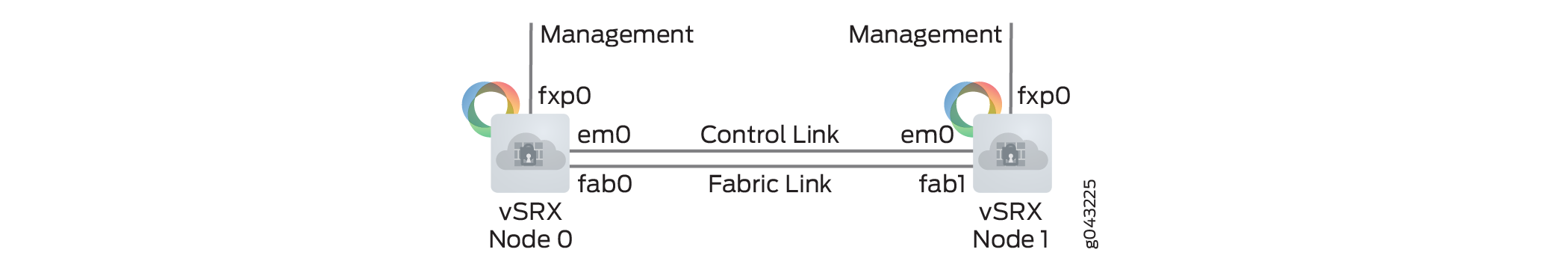

Chassis cluster requires the following direct connections between the two vSRX Virtual Firewall instances:

- Control link, or virtual network, which acts in active/passive mode for the control plane traffic between the two vSRX Virtual Firewall instances

- Fabric link, or virtual network, which is used for real-time session synchronization between the nodes. In active/active mode, this link is also used for carrying data traffic between the two vSRX Virtual Firewall instances.

Note: You can optionally create two fabric links for more redundancy.

The vSRX Virtual Firewall cluster uses the following interfaces:

- Out-of-band Management interface (fxp0)

- Cluster control interface (em0)

- Cluster fabric interface (fab0 on node0, fab1 on node1)

The control interface must be the second vNIC. For the fabric link you can use any revenue port (ge- ports). You can optionally configure a second fabric link for increased redundancy.

Figure 1 shows chassis cluster formation with vSRX Virtual Firewall instances.

- When you enable chassis cluster, you must also enable jumbo frames (MTU size = 9000) to support the fabric link on the virtio network interface.

- If you configure a chassis cluster across two physical hosts, disable igmp-snooping on each host physical interface that the vSRX Virtual Firewall control link uses to ensure that the control link heartbeat is received by both nodes in the chassis cluster.

  hostOS# echo 0 > /sys/devices/virtual/net/<bridge-name>/bridge/multicast_snooping

- After chassis cluster is enabled, the vSRX Virtual Firewall instance maps the second vNIC to the control link automatically, and its name will be changed from ge-0/0/0 to em0.

- You can use any other vNICs for the fabric link/links. (See Interface Naming and Mapping)

For virtio interfaces, link status update is not supported. The link status of virtio interfaces is always reported as Up. For this reason, a vSRX Virtual Firewall instance using virtio and chassis cluster cannot receive link up and link down messages from virtio interfaces.

The virtual network MAC aging time determines the amount of time that an entry remains in the MAC table. We recommend that you reduce the MAC aging time on the virtual networks to minimize the downtime during failover.

For example, you can use the brctl setageing bridge 1 command to set aging to 1 second for the Linux bridge.

You configure the virtual networks for the control and fabric links, then create and connect the control interface to the control virtual network and the fabric interface to the fabric virtual network.

Interface Naming and Mapping

Each network adapter defined for a vSRX Virtual Firewall is mapped to a specific interface, depending on whether the vSRX Virtual Firewall instance is a standalone VM or one of a cluster pair for high availability. The interface names and mappings in vSRX Virtual Firewall are shown in Table 1 and Table 2.

Note the following:

- In standalone mode:

  - fxp0 is the out-of-band management interface.

  - ge-0/0/0 is the first traffic (revenue) interface.

- In cluster mode:

  - fxp0 is the out-of-band management interface.

  - em0 is the cluster control link for both nodes.

  - Any of the traffic interfaces can be specified as the fabric links, such as ge-0/0/0 for fab0 on node 0 and ge-7/0/0 for fab1 on node 1.

Table 1 shows the interface names and mappings for a standalone vSRX Virtual Firewall VM, and Table 2 shows them for a vSRX Virtual Firewall VM in cluster mode. In cluster mode, the em0 port is inserted between the fxp0 and ge-0/0/0 positions, so each revenue port shifts up by one vNIC position.

Table 1: Interface names for a standalone vSRX Virtual Firewall VM

| Network Adapter | Interface Names |
|---|---|
| 1 | fxp0 |
| 2 | ge-0/0/0 |
| 3 | ge-0/0/1 |
| 4 | ge-0/0/2 |
| 5 | ge-0/0/3 |
| 6 | ge-0/0/4 |
| 7 | ge-0/0/5 |
| 8 | ge-0/0/6 |

Table 2: Interface names for a vSRX Virtual Firewall VM in cluster mode

| Network Adapter | Interface Names |
|---|---|
| 1 | fxp0 (node 0 and 1) |
| 2 | em0 (node 0 and 1) |
| 3 | ge-0/0/0 (node 0), ge-7/0/0 (node 1) |
| 4 | ge-0/0/1 (node 0), ge-7/0/1 (node 1) |
| 5 | ge-0/0/2 (node 0), ge-7/0/2 (node 1) |
| 6 | ge-0/0/3 (node 0), ge-7/0/3 (node 1) |
| 7 | ge-0/0/4 (node 0), ge-7/0/4 (node 1) |
| 8 | ge-0/0/5 (node 0), ge-7/0/5 (node 1) |

Enabling Chassis Cluster Formation

You create two vSRX Virtual Firewall instances to form a chassis cluster, and then you set the cluster ID and node ID on each instance to join the cluster. When a vSRX Virtual Firewall VM joins a cluster, it becomes a node of that cluster. With the exception of unique node settings and management IP addresses, nodes in a cluster share the same configuration.

You can deploy up to 255 chassis clusters in a Layer 2 domain. Clusters and nodes are identified in the following ways:

- The cluster ID (a number from 1 to 255) identifies the cluster.

- The node ID (a number from 0 to 1) identifies the cluster node.

On SRX Series Firewalls, the cluster ID and node ID are written into EEPROM. On the vSRX Virtual Firewall VM, vSRX Virtual Firewall stores and reads the IDs from boot/loader.conf and uses the IDs to initialize the chassis cluster during startup.

Ensure that your vSRX Virtual Firewall instances comply with the following prerequisites before you enable chassis clustering:

- You have committed a basic configuration to both vSRX Virtual Firewall instances that form the chassis cluster. See Configuring vSRX Using the CLI.

- Use show version in Junos OS to ensure that both vSRX Virtual Firewall instances have the same software version.

- Use show system license in Junos OS to ensure that both vSRX Virtual Firewall instances have the same licenses installed.

- You must set the same chassis cluster ID on each vSRX Virtual Firewall node and reboot the vSRX Virtual Firewall VM to enable chassis cluster formation.

The chassis cluster formation commands for node 0 and node 1 are as follows:

- On vSRX Virtual Firewall node 0:

  user@vsrx0> set chassis cluster cluster-id number node 0 reboot

- On vSRX Virtual Firewall node 1:

  user@vsrx1> set chassis cluster cluster-id number node 1 reboot

The vSRX Virtual Firewall interface naming and mapping to vNICs changes when you enable chassis clustering. Use the same cluster ID number for each node in the cluster.

When multiple clusters are connected to the same Layer 2 domain, use a unique cluster ID for each cluster. Otherwise, you might get duplicate MAC addresses on the network, because the cluster ID is used to form the virtual interface MAC addresses.

After reboot, on node 0, configure the fabric (data) ports of the cluster that are used to pass real-time objects (RTOs):

user@vsrx0# set interfaces fab0 fabric-options member-interfaces ge-0/0/0

user@vsrx0# set interfaces fab1 fabric-options member-interfaces ge-7/0/0
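The fabric configuration does not take effect until it is committed, and it can be useful to confirm afterward that the control and fabric links are reported as expected. The following is a minimal sketch, assuming the same node 0 session as in the commands above. Output is not shown because it depends on the release and deployment.

```
# Commit the fabric configuration from node 0.
user@vsrx0# commit
# Leave configuration mode, then inspect the control and fabric link state.
user@vsrx0# exit
user@vsrx0> show chassis cluster interfaces
```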

Chassis Cluster Quick Setup with J-Web

To configure chassis cluster from J-Web:

Manually Configure a Chassis Cluster with J-Web

You can use the J-Web interface to configure the primary node 0 vSRX Virtual Firewall instance in the cluster. Once you have set the cluster and node IDs and rebooted each vSRX Virtual Firewall, the following configuration will automatically be synced to the secondary node 1 vSRX Virtual Firewall instance.

Select Configure>Chassis Cluster>Cluster Configuration. The Chassis Cluster configuration page appears.

Table 3 explains the contents of the HA Cluster Settings tab.

Table 4 explains how to edit the Node Settings tab.

Table 5 explains how to add or edit the HA Cluster Interfaces table.

Table 6 explains how to add or edit the HA Cluster Redundancy Groups table.

Table 3: HA Cluster Settings tab

| Field | Function |
|---|---|
| Node Settings | |
| Node ID | Displays the node ID. |
| Cluster ID | Displays the cluster ID configured for the node. |
| Host Name | Displays the name of the node. |
| Backup Router | Displays the router used as a gateway while the Routing Engine is in secondary state for redundancy-group 0 in a chassis cluster. |
| Management Interface | Displays the management interface of the node. |
| IP Address | Displays the management IP address of the node. |
| Status | Displays the state of the redundancy group. |
| Chassis Cluster>HA Cluster Settings>Interfaces | |
| Name | Displays the physical interface name. |
| Member Interfaces/IP Address | Displays the member interface name or IP address configured for an interface. |
| Redundancy Group | Displays the redundancy group. |
| Chassis Cluster>HA Cluster Settings>Redundancy Group | |
| Group | Displays the redundancy group identification number. |
| Preempt | Displays the selected preempt option. |
| Gratuitous ARP Count | Displays the number of gratuitous Address Resolution Protocol (ARP) requests that a newly elected primary device in a chassis cluster sends out to announce its presence to the other network devices. |
| Node Priority | Displays the assigned priority for the redundancy group on that node. The eligible node with the highest priority is elected as primary for the redundancy group. |

Table 4: Node Settings tab

| Field | Function | Action |
|---|---|---|
| Node Settings | | |
| Host Name | Specifies the name of the host. | Enter the name of the host. |
| Backup Router | Displays the device used as a gateway while the Routing Engine is in the secondary state for redundancy-group 0 in a chassis cluster. | Enter the IP address of the backup router. |
| Destination | | |
| IP | Adds the destination address. | Click Add. |
| Delete | Deletes the destination address. | Click Delete. |
| Interface | | |
| Interface | Specifies the interfaces available for the router. Note: Allows you to add and edit two interfaces for each fabric link. | Select an option. |
| IP | Specifies the interface IP address. | Enter the interface IP address. |
| Add | Adds the interface. | Click Add. |
| Delete | Deletes the interface. | Click Delete. |
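For readers who prefer the CLI, the node-specific settings in this table roughly correspond to per-node configuration groups. The following is a sketch only. The hostnames, the 192.0.2.0/24 management addresses, the backup router address, and the destination prefix are hypothetical placeholders, not values from this topic.

```
# Hypothetical values throughout; substitute your own names and addressing.
set groups node0 system host-name vsrx-node0
set groups node0 system backup-router 192.0.2.254 destination 198.51.100.0/24
set groups node0 interfaces fxp0 unit 0 family inet address 192.0.2.1/24
set groups node1 system host-name vsrx-node1
set groups node1 system backup-router 192.0.2.254 destination 198.51.100.0/24
set groups node1 interfaces fxp0 unit 0 family inet address 192.0.2.2/24
# Apply the group that matches the local node.
set apply-groups "${node}"
```

Keeping these statements in node0 and node1 groups fits the rule stated earlier: apart from unique node settings and management IP addresses, both nodes share the same configuration.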

Table 5: HA Cluster Interfaces table

| Field | Function | Action |
|---|---|---|
| Fabric Link > Fabric Link 0 (fab0) | | |
| Interface | Specifies fabric link 0. | Enter the interface IP for fabric link 0. |
| Add | Adds fabric interface 0. | Click Add. |
| Delete | Deletes fabric interface 0. | Click Delete. |
| Fabric Link > Fabric Link 1 (fab1) | | |
| Interface | Specifies fabric link 1. | Enter the interface IP for fabric link 1. |
| Add | Adds fabric interface 1. | Click Add. |
| Delete | Deletes fabric interface 1. | Click Delete. |
| Redundant Ethernet | | |
| Interface | Specifies a logical interface consisting of two physical Ethernet interfaces, one on each chassis. | Enter the logical interface. |
| IP | Specifies a redundant Ethernet IP address. | Enter a redundant Ethernet IP address. |
| Redundancy Group | Specifies the redundancy group ID number in the chassis cluster. | Select a redundancy group from the list. |
| Add | Adds a redundant Ethernet IP address. | Click Add. |
| Delete | Deletes a redundant Ethernet IP address. | Click Delete. |
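The redundant Ethernet fields above map onto a handful of configuration statements. The following is a minimal sketch, assuming one redundant Ethernet interface (reth0) built from ge-0/0/1 on node 0 and ge-7/0/1 on node 1 and placed in redundancy group 1. The interface choice, the group number, and the 203.0.113.1/24 address are illustrative assumptions.

```
# Number of redundant Ethernet interfaces the cluster may create (assumed: 1).
set chassis cluster reth-count 1
# Bind one physical interface from each node to reth0 (assumed interfaces).
set interfaces ge-0/0/1 gigether-options redundant-parent reth0
set interfaces ge-7/0/1 gigether-options redundant-parent reth0
# Place reth0 in redundancy group 1 and give it a hypothetical address.
set interfaces reth0 redundant-ether-options redundancy-group 1
set interfaces reth0 unit 0 family inet address 203.0.113.1/24
```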

Table 6: HA Cluster Redundancy Groups table

| Field | Function | Action |
|---|---|---|
| Redundancy Group | Specifies the redundancy group name. | Enter the redundancy group name. |
| Allow preemption of primaryship | Allows a node with a better priority to initiate a failover for a redundancy group. Note: By default, this feature is disabled. When disabled, a node with a better priority does not initiate a redundancy group failover (unless some other factor, such as faulty network connectivity identified for monitored interfaces, causes a failover). | – |
| Gratuitous ARP Count | Specifies the number of gratuitous Address Resolution Protocol requests that a newly elected primary sends out on the active redundant Ethernet interface child links to notify network devices of a change in primary role on the redundant Ethernet interface links. | Enter a value from 1 to 16. The default is 4. |
| node0 priority | Specifies the priority value of node0 for a redundancy group. | Enter the node priority number as 0. |
| node1 priority | Specifies the priority value of node1 for a redundancy group. | Select the node priority number as 1. |
| Interface Monitor | | |
| Interface | Specifies the number of redundant Ethernet interfaces to be created for the cluster. | Select an interface from the list. |
| Weight | Specifies the weight for the interface to be monitored. | Enter a value from 1 to 125. |
| Add | Adds interfaces to be monitored by the redundancy group along with their respective weights. | Click Add. |
| Delete | Deletes interfaces to be monitored by the redundancy group along with their respective weights. | Select the interface from the configured list and click Delete. |
| IP Monitoring | | |
| Weight | Specifies the global weight for IP monitoring. | Enter a value from 0 to 255. |
| Threshold | Specifies the global threshold for IP monitoring. | Enter a value from 0 to 255. |
| Retry Count | Specifies the number of retries needed to declare reachability failure. | Enter a value from 5 to 15. |
| Retry Interval | Specifies the time interval in seconds between retries. | Enter a value from 1 to 30. |
| IPV4 Addresses to Be Monitored | | |
| IP | Specifies the IPv4 addresses to be monitored for reachability. | Enter the IPv4 addresses. |
| Weight | Specifies the weight for the redundancy group interface to be monitored. | Enter the weight. |
| Interface | Specifies the logical interface through which to monitor this IP address. | Enter the logical interface address. |
| Secondary IP address | Specifies the source address for monitoring packets on a secondary link. | Enter the secondary IP address. |
| Add | Adds the IPv4 address to be monitored. | Click Add. |
| Delete | Deletes the IPv4 address to be monitored. | Select the IPv4 address from the list and click Delete. |
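As a CLI counterpart to the redundancy group fields, the sketch below sets node priorities, preemption, the gratuitous ARP count, one monitored interface, and IP monitoring for redundancy group 1. The group number, priorities, weights, addresses, and the reth0.0 interface are illustrative assumptions rather than values taken from the table. The CLI may accept different ranges than J-Web does, so check the limits on your release.

```
# Node priorities (assumed values); the node with the higher priority is preferred as primary.
set chassis cluster redundancy-group 1 node 0 priority 100
set chassis cluster redundancy-group 1 node 1 priority 1
# Allow a node with a better priority to initiate a failover.
set chassis cluster redundancy-group 1 preempt
set chassis cluster redundancy-group 1 gratuitous-arp-count 4
# Monitor one physical interface with an assumed weight.
set chassis cluster redundancy-group 1 interface-monitor ge-0/0/1 weight 255
# IP monitoring: global settings first, then one hypothetical monitored address.
set chassis cluster redundancy-group 1 ip-monitoring global-weight 100
set chassis cluster redundancy-group 1 ip-monitoring global-threshold 200
set chassis cluster redundancy-group 1 ip-monitoring retry-count 10
set chassis cluster redundancy-group 1 ip-monitoring retry-interval 3
set chassis cluster redundancy-group 1 ip-monitoring family inet 203.0.113.10 weight 100 interface reth0.0 secondary-ip-address 203.0.113.2
```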