
IP Storage Networking

Traditional storage networks running over Fibre Channel have long been the mainstay of the data center, but they can be expensive to maintain and grow. An all-IP storage infrastructure offers compelling performance per dollar. As the cost of 25G and 100G Ethernet continues to fall and 400G spine switches become more widespread, IP storage infrastructure can deliver both a performance boost and overall cost savings.

NVMe over Fabrics (NVMe-oF), which extends the Non-Volatile Memory Express (NVMe) protocol across a network, is a powerful enabler for IP storage networks. It uses NVMe/RoCEv2 for high-performance storage access and NVMe/TCP for general-purpose storage access. NVMe/RoCEv2, with its low latency and high throughput, is ideal for intensive workloads such as artificial intelligence/machine learning (AI/ML) and big data. NVMe/TCP is attractive because it can coexist with your existing network without specific network configuration requirements, and it does not require dedicated RDMA-capable host adapters.
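On a Linux host, both transports are commonly attached with the `nvme connect` command from the nvme-cli tool; at the host command line only the transport type changes, while the network and adapter requirements differ as described above. The minimal sketch below only builds the argument list rather than running it. It assumes nvme-cli is installed and the `nvme-tcp` or `nvme-rdma` kernel module is loaded; the helper name `build_nvme_connect`, the target address, and the subsystem NQN are hypothetical, and port 4420 is the port conventionally used for NVMe-oF I/O.

```python
# Minimal sketch: build (not run) an nvme-cli "connect" command line.
# Assumes nvme-cli is installed and the nvme-tcp or nvme-rdma kernel module
# is loaded. The helper name, target address, and NQN are hypothetical.

NVMEOF_IO_PORT = 4420  # port conventionally used for NVMe-oF I/O


def build_nvme_connect(transport: str, traddr: str, subnqn: str,
                       trsvcid: int = NVMEOF_IO_PORT) -> list[str]:
    """Return argv for `nvme connect` over TCP ("tcp") or RDMA/RoCEv2 ("rdma")."""
    if transport not in ("tcp", "rdma"):
        raise ValueError(f"unsupported transport: {transport!r}")
    return [
        "nvme", "connect",
        f"--transport={transport}",
        f"--traddr={traddr}",
        f"--trsvcid={trsvcid}",
        f"--nqn={subnqn}",
    ]


if __name__ == "__main__":
    target = "192.0.2.10"                              # documentation address
    nqn = "nqn.2024-01.org.example:storage-array-1"    # hypothetical subsystem NQN
    for transport in ("tcp", "rdma"):
        print(" ".join(build_nvme_connect(transport, target, nqn)))
```

To actually connect, the list could be passed to `subprocess.run(argv, check=True)` with root privileges; keeping command construction separate makes it easy to review or log the command first.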

How Juniper can help

Juniper’s broad range of data center-class switches can be used for IP storage connectivity, including switches with enhanced features designed specifically for NVMe/RoCEv2 workloads. They are ideal for building converged data and storage networks or high-performance RDMA networks for workloads such as AI/ML. Juniper Apstra® can easily provision and manage VXLAN tunnels that isolate storage traffic, and it provides rich telemetry, including network I/O throughput and traffic heat maps.

High-Performance Storage for AI/ML

AI and ML workloads are pushing the boundaries of what’s possible in compute and storage, and networking is no exception. Large datasets must move as fast as possible, which makes a non-blocking, high-speed, low-latency network essential. Juniper QFX Series Switches are ideal for NVMe/RoCEv2 transport, with 100G and 400G speeds and support for Priority Flow Control (PFC) and Explicit Congestion Notification (ECN).
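Whether a fabric is non-blocking can be checked with simple arithmetic: a leaf switch is non-blocking when its uplink (fabric-facing) bandwidth is at least its downlink (server-facing) bandwidth, that is, an oversubscription ratio of 1:1 or lower. The sketch below assumes a hypothetical leaf with 32 x 100G server ports and compares four versus eight 400G uplinks; the port counts are illustrative and do not describe a specific QFX model.

```python
# Minimal sketch: oversubscription ratio of a leaf switch.
# A ratio of 1.0 or lower means the uplinks can carry all server-facing
# traffic at line rate (non-blocking). Port counts are hypothetical.

from dataclasses import dataclass


@dataclass(frozen=True)
class PortGroup:
    count: int
    speed_gbps: int

    @property
    def bandwidth_gbps(self) -> int:
        return self.count * self.speed_gbps


def oversubscription_ratio(downlinks: PortGroup, uplinks: PortGroup) -> float:
    """Server-facing bandwidth divided by fabric-facing bandwidth."""
    if uplinks.bandwidth_gbps == 0:
        raise ValueError("leaf has no uplink bandwidth")
    return downlinks.bandwidth_gbps / uplinks.bandwidth_gbps


if __name__ == "__main__":
    servers = PortGroup(count=32, speed_gbps=100)   # 3,200G toward hosts
    for n_uplinks in (4, 8):
        uplinks = PortGroup(count=n_uplinks, speed_gbps=400)
        ratio = oversubscription_ratio(servers, uplinks)
        status = "non-blocking" if ratio <= 1.0 else "oversubscribed"
        print(f"{n_uplinks} x 400G uplinks -> {ratio:.1f}:1 ({status})")
    # 4 x 400G uplinks -> 2.0:1 (oversubscribed)
    # 8 x 400G uplinks -> 1.0:1 (non-blocking)
```

A 1:1 leaf does not by itself prevent congestion: many senders converging on one receiver (incast) can still fill a queue, which is why PFC and ECN remain important for NVMe/RoCEv2.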

Block Storage with NVMe/TCP

Enterprises continue to need reliable, high-performance I/O for block-based storage, combined with the ability to run that storage either as a dedicated storage network or converged with the data network. With the proliferation of 25G server connectivity and the reduced cost of 100G top-of-rack and 100/400G network infrastructure, NVMe/TCP, combined with EVPN-VXLAN, is an ideal storage transport solution for the modern data center.
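EVPN-VXLAN carries each storage frame inside a UDP/IP packet with an 8-byte VXLAN header defined in RFC 7348, and that encapsulation is why the underlay MTU must be larger than the host MTU. The minimal sketch below packs and parses the header and computes the extra headroom for an IPv4 underlay, assuming the inner frame carries no 802.1Q tag. It models only the data-plane encapsulation, not the EVPN (BGP) control plane, and the storage VNI value is hypothetical.

```python
# Minimal sketch of the 8-byte VXLAN header (RFC 7348) and the MTU headroom
# it implies. Data plane only: the EVPN (BGP) control plane is not modeled.
# VXLAN packets use UDP destination port 4789 (IANA-assigned).

import struct

VXLAN_FLAG_I = 0x08     # "I" flag: the VNI field is valid

# Bytes added on top of the inner IP packet with an IPv4 underlay, assuming
# an untagged inner frame: inner Ethernet 14 + VXLAN 8 + UDP 8 + outer IPv4 20.
VXLAN_IPV4_OVERHEAD = 14 + 8 + 8 + 20


def vxlan_header(vni: int) -> bytes:
    """Flags(8) | reserved(24) | VNI(24) | reserved(8), network byte order."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI, rejecting headers without the I flag."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("I flag not set")
    return vni_word >> 8


def required_underlay_mtu(host_ip_mtu: int) -> int:
    """Smallest underlay IP MTU that avoids fragmenting encapsulated frames."""
    return host_ip_mtu + VXLAN_IPV4_OVERHEAD


if __name__ == "__main__":
    storage_vni = 5000                        # hypothetical storage VNI
    hdr = vxlan_header(storage_vni)
    print(hdr.hex())                          # 0800000000138800
    print(parse_vni(hdr))                     # 5000
    print(required_underlay_mtu(9000))        # 9050
```

Under these assumptions, hosts using a 9000-byte MTU for NVMe/TCP would need an underlay IP MTU of at least 9050 bytes; an IPv6 underlay needs 20 bytes more.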

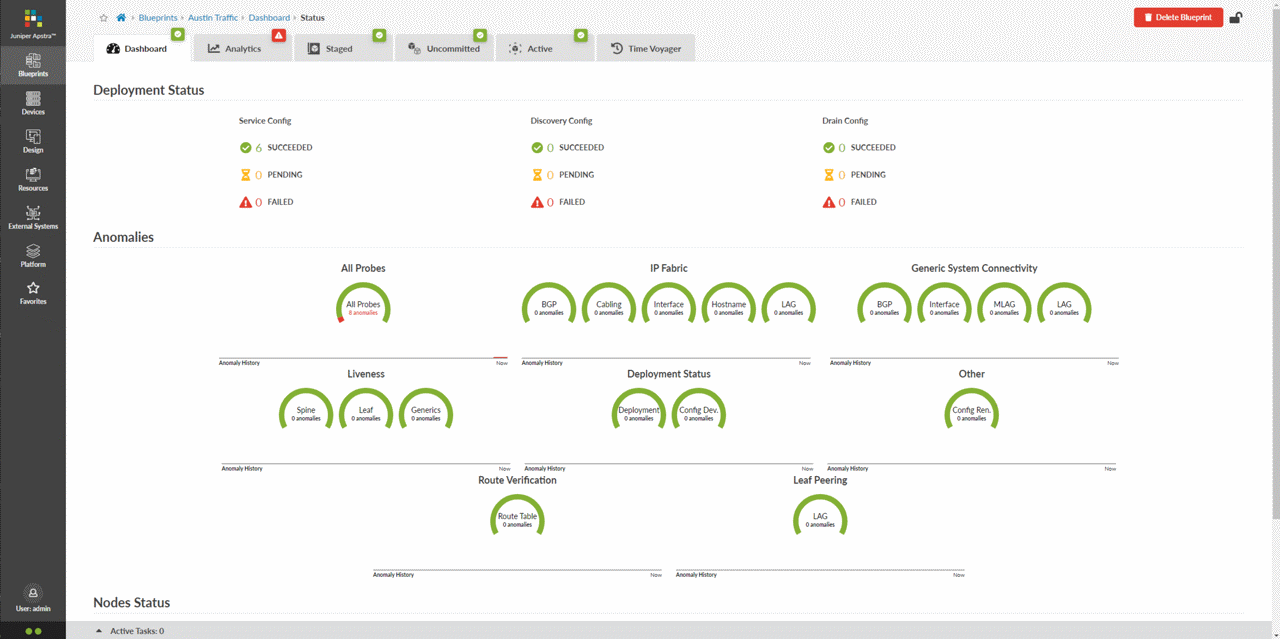

Apstra Intent-Based Networking

Apstra makes deploying modern networks easy by automating network design, deployment, and operation. Apstra can also provision and manage VXLAN tunnels for storage traffic using tags and configlets, and it makes storage traffic usage and hotspots visible throughout your network.

Juniper Networks and WEKA solution

Juniper Networks and WEKA together provide scalable, high-performance data center solutions designed for AI, maximizing GPU performance and efficiency for accelerated AI/ML training and inference.

Related Solutions

Data center networks

Simplify operations and ensure reliability with the modern, automated data center. Juniper helps you automate and continuously validate the entire network life cycle to ease design, deployment, and operations.

Data Center Interconnect

Juniper’s DCI solutions enable seamless interconnectivity that breaks through traditional scalability limitations, vendor lock-in, and interoperability challenges.

AI Data Center

Deploy high-performing AI training, inference, and storage clusters with speed and flexibility. Juniper Validated Designs offer confidence and speed, giving you up to 10x better reliability.

IP Storage Networking FAQs

What is IP storage networking?

IP storage networking is a general term used to describe a group of technologies that allow block-level storage and file/object storage to be transmitted over an IP-based network. Recent technologies such as NVMe-oF, and specifically NVMe/RoCEv2 and NVMe/TCP, allow extremely high-performance storage area networks to operate with ease over IP/Ethernet.

What are the benefits of IP storage networking?

Users can either deploy separate storage networks at lower cost or use existing resources and technologies such as NVMe/TCP to collapse their data and storage networks into a single network, eliminating the need to maintain separate networks. With the growth of 100G and even 400G Ethernet, IP storage networks can be both faster than previous technologies such as Fibre Channel SANs (FC-SAN) and less expensive to maintain.

What use cases does IP storage networking support?

IP storage networking supports a wide variety of use cases, from the local storage needs of small and medium-sized businesses using NVMe/TCP to advanced AI/ML and big data workloads using NVMe/RoCEv2. A significant driver behind the adoption of RDMA-based storage technologies such as NVMe/RoCEv2 is the explosive growth in AI/ML, big data, and HPC-like workloads that require extreme storage performance over the network.

IP storage networking can also be used for traditional iSCSI storage needs, file I/O such as unstructured data repositories, and large-scale object stores for data lakes. In fact, IP storage networking can be used for almost any storage use case.

What are the components of IP storage networking?

At its simplest, IP storage networking requires only an Ethernet switch and network for basic networked storage, such as iSCSI, file-based access like NFS, or S3-compatible object stores. For more advanced, high-performance use cases, a lossless, non-blocking IP fabric with low-latency switches is highly desirable.
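A lossless Ethernet fabric for RoCEv2 typically relies on Priority Flow Control (PFC, IEEE 802.1Qbb), which lets a switch pause a single traffic priority instead of the whole link. To show what that involves on the wire, the minimal sketch below builds a PFC frame and converts pause quanta to time, where one quantum is 512 bit times. The helper names, the source MAC address, and pausing priority 3 are assumptions for illustration: the priority used for storage traffic is a per-deployment choice, and switches and NICs generate these frames in hardware.

```python
# Minimal sketch of an IEEE 802.1Qbb Priority Flow Control (PFC) frame.
# Switches and NICs generate these in hardware; this is for illustration only.
# Helper names, the source MAC, and the paused priority are hypothetical.

import struct

PFC_DEST_MAC = bytes.fromhex("0180c2000001")  # reserved MAC Control address
MAC_CONTROL_ETHERTYPE = 0x8808
PFC_OPCODE = 0x0101                            # 0x0001 would be 802.3x PAUSE
MIN_FRAME_LEN = 60                             # minimum frame length, excluding FCS


def build_pfc_frame(src_mac: bytes, pause_quanta: dict[int, int]) -> bytes:
    """Build a PFC frame pausing each given priority (0-7) for N quanta."""
    if len(src_mac) != 6:
        raise ValueError("source MAC must be 6 bytes")
    enable_vector = 0
    times = [0] * 8
    for priority, quanta in pause_quanta.items():
        if not 0 <= priority <= 7 or not 0 <= quanta <= 0xFFFF:
            raise ValueError("priority must be 0-7 and quanta 0-65535")
        enable_vector |= 1 << priority   # mark this priority's timer as valid
        times[priority] = quanta
    body = struct.pack("!HHH8H", MAC_CONTROL_ETHERTYPE, PFC_OPCODE,
                       enable_vector, *times)
    # Pad with zeros up to the minimum Ethernet frame length.
    return (PFC_DEST_MAC + src_mac + body).ljust(MIN_FRAME_LEN, b"\x00")


def quanta_to_ns(quanta: int, link_gbps: float) -> float:
    """One pause quantum is 512 bit times; bits / (Gb/s) gives nanoseconds."""
    return quanta * 512 / link_gbps


if __name__ == "__main__":
    src = bytes.fromhex("020000000001")        # hypothetical, locally administered
    frame = build_pfc_frame(src, {3: 0xFFFF})  # pause priority 3 for the maximum
    print(len(frame), frame[12:18].hex())      # 60 880801010008
    print(quanta_to_ns(0xFFFF, 100))           # 335539.2
```

At 100 Gb/s a single quantum lasts 5.12 ns, so the maximum value of 65535 quanta pauses a priority for roughly 335.5 µs.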

NVMe/TCP drivers are included in the Linux kernel, and no specific network configuration is mandatory, although class-of-service (CoS) optimizations can be beneficial. NVMe/RoCEv2 deployments benefit from congestion management features such as Priority Flow Control (PFC), Explicit Congestion Notification (ECN), and Data Center Quantized Congestion Notification (DCQCN). Finally, a data processing unit (DPU) or similar SmartNIC is useful for high-performance NVMe/TCP workloads, and NVMe/RoCEv2 fabrics require an RDMA-capable adapter in every host connected to the storage fabric.
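DCQCN combines ECN marking in the switches with rate control in the sender's RDMA adapter. The sketch below is a simplified, illustrative model based on the DCQCN algorithm published by Zhu et al. (SIGCOMM 2015): the switch marks packets with a RED-like probability between two queue thresholds, and the sender cuts its rate when a Congestion Notification Packet (CNP) arrives, then recovers in fast-recovery and additive-increase steps. The hyper-increase stage, the receiver's CNP generation, and the real timers and byte counters are omitted; the class and function names and all parameter values are assumptions, not Juniper or NIC vendor defaults.

```python
# Simplified, illustrative model of DCQCN; not a NIC or switch implementation.
# Names and parameter values below are assumptions chosen for readability.


def ecn_mark_probability(queue_kb: float, kmin_kb: float, kmax_kb: float,
                         pmax: float) -> float:
    """Switch (congestion point): RED-like ECN marking probability."""
    if queue_kb <= kmin_kb:
        return 0.0
    if queue_kb > kmax_kb:
        return 1.0
    return pmax * (queue_kb - kmin_kb) / (kmax_kb - kmin_kb)


class ReactionPoint:
    """Sender RDMA adapter (reaction point): adjusts its rate on CNPs."""

    def __init__(self, line_rate_gbps: float, g: float = 1 / 256,
                 rai_gbps: float = 0.04, fast_recovery_steps: int = 5) -> None:
        self.line_rate = line_rate_gbps
        self.g = g                        # gain for the alpha estimate
        self.rai = rai_gbps               # additive-increase step
        self.fast_recovery_steps = fast_recovery_steps
        self.rc = line_rate_gbps          # current sending rate
        self.rt = line_rate_gbps          # target rate to recover toward
        self.alpha = 1.0                  # estimate of congestion severity
        self.steps = 0                    # increase events since last CNP

    def on_cnp(self) -> None:
        """CNP received: remember the current rate as target, then cut."""
        self.rt = self.rc
        self.rc *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g
        self.steps = 0

    def on_alpha_timer(self) -> None:
        """No CNP during the last alpha window: decay the estimate."""
        self.alpha *= 1 - self.g

    def on_increase_event(self) -> None:
        """Timer or byte-counter event: move the current rate toward target."""
        self.steps += 1
        if self.steps > self.fast_recovery_steps:
            # Additive increase: raise the target, capped at line rate.
            self.rt = min(self.rt + self.rai, self.line_rate)
        # Both stages then halve the gap between current and target rate.
        self.rc = (self.rt + self.rc) / 2


if __name__ == "__main__":
    print(ecn_mark_probability(150, kmin_kb=100, kmax_kb=200, pmax=0.1))
    rp = ReactionPoint(line_rate_gbps=100.0)
    rp.on_cnp()
    print(rp.rc)                          # 50.0
    for _ in range(5):
        rp.on_increase_event()
    print(rp.rc)                          # 98.4375
```

A sustained stream of CNPs keeps alpha near 1, so each cut approaches halving the rate, while quiet periods let alpha decay so that later cuts are gentler.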