Assisted Replication Multicast Optimization in EVPN Networks

Assisted replication (AR) helps to optimize multicast traffic flow in EVPN networks by offloading traffic replication to devices that can more efficiently handle the task.

Assisted Replication in EVPN Networks

Assisted replication (AR) is a Layer 2 (L2) multicast traffic optimization feature supported in EVPN networks. With AR enabled, an ingress device replicates a multicast stream to another device in the EVPN network that can more efficiently handle replicating and forwarding the stream to the other devices in the network. For backward compatibility, ingress devices that don’t support AR operate transparently with other devices that have AR enabled. These devices use ingress replication to distribute the traffic to other devices in the EVPN network.

AR provides an overlay multicast optimization L2 solution. AR does not require PIM to be enabled in the underlay.

This documentation describes AR functions in terms of multicast traffic replication and forwarding, but applies to any broadcast, unknown unicast, and multicast (BUM) traffic in general.

In releases before Junos OS Release 22.2R1, you can enable AR only in the default switch EVPN instance on supported Junos OS devices. Starting in Junos OS Release 22.2R1, you can also enable AR on supported Junos OS devices in MAC-VRF EVPN instances.

Starting in Junos OS Evolved Release 22.2R1, you can enable AR on supported Junos OS Evolved devices in MAC-VRF EVPN instances.

As of Junos OS and Junos OS Evolved Release 22.2R1, you can also configure both AR and optimized intersubnet multicast (OISM) in the same EVPN-VXLAN fabric.

On Junos OS Evolved devices, we support EVPN-VXLAN using EVPN configurations with MAC-VRF instances only (and not in the default switch instance). As a result, on these devices we support AR only in MAC-VRF EVPN instances.

Benefits of Assisted Replication

- In an EVPN network with a significant volume of broadcast, unknown unicast, and multicast (BUM) traffic, AR helps to optimize BUM traffic flow by passing replication and forwarding tasks to other devices that have more capacity to better handle the load.

- In an EVPN-VXLAN environment, AR reduces the number of hardware next hops to multiple remote virtual tunnel endpoints (VTEPs) over traditional multicast ingress replication.

- AR can operate with other multicast optimizations such as IGMP snooping, MLD snooping, and selective multicast Ethernet tag (SMET) forwarding.

- AR operates transparently with devices that do not support AR for backward compatibility in existing EVPN networks.

AR Device Roles

To enable AR, you configure devices in the EVPN network into AR replicator and AR leaf roles. For backward compatibility, your EVPN network can also include devices that don’t support AR. Devices that don't support AR operate in a regular network virtualization edge (NVE) device role.

Table 1 summarizes these roles:

| Role | Description | Supported Platforms |
|---|---|---|
| AR leaf device | Device in an EVPN network that offloads multicast ingress replication tasks to another device in the EVPN network to handle the replication and forwarding load. | Starting in Junos OS Releases 18.4R2 and 19.4R1: QFX5110, QFX5120, and QFX10000 line of switches. Starting in Junos OS Release 22.2R1: EX4650. Starting in Junos OS Evolved Release 22.2R1: QFX5130-32CD and QFX5700. |
| AR replicator device | Device in an EVPN network that helps perform multicast ingress replication and forwarding for traffic received from AR leaf devices on an AR overlay tunnel to other ingress replication overlay tunnels. | Starting in Junos OS Releases 18.4R2 and 19.4R1: QFX10000 line of switches. Starting in Junos OS Evolved Release 22.2R1: QFX5130-32CD and QFX5700 (and with OISM, in standalone mode only; see AR with Optimized Intersubnet Multicast (OISM) for details). |
| Regular NVE device | Device that does not support AR or that you did not configure into an AR role in an EVPN network. The device replicates and forwards multicast traffic using the usual EVPN network ingress replication. | N/A |

If you enable other supported multicast optimization features in your EVPN network, such as IGMP snooping, MLD snooping, and SMET forwarding, AR replicator and AR leaf devices forward traffic in the EVPN core or on the access side only toward interested receivers.

If you want to enable AR in a fabric with OISM, see AR with Optimized Intersubnet Multicast (OISM) for considerations when planning which devices to configure as AR replicators and AR leaf devices in that case.

With supported EX Series and QFX Series AR devices in EVPN-VXLAN networks, AR replicator and AR leaf devices must operate in extended AR mode. In this mode, AR leaf devices that have multihomed Ethernet segments share some of the replication load with the AR replicator devices, which enables them to use split horizon and local bias rules to prevent traffic loops and duplicate forwarding. See Extended AR Mode for Multihomed Ethernet Segments. For details on how devices in an EVPN-VXLAN environment forward traffic to multihomed Ethernet segments using local bias and split horizon filtering, see EVPN-over-VXLAN Supported Functionality.

How AR Works

In general, devices in the EVPN network use ingress replication to distribute BUM traffic. The ingress device (where the source traffic enters the network) replicates and sends the traffic on overlay tunnels to all other devices in the network. For EVPN topologies with EVPN multihoming (ESI-LAGs), network devices employ local bias or designated forwarder (DF) rules to avoid duplicating forwarded traffic to receivers on multihomed Ethernet segments. With multicast optimizations like IGMP snooping or MLD snooping and SMET forwarding enabled, forwarding devices avoid sending unnecessary traffic by replicating the traffic only to other devices that have active listeners.

AR operates within the existing EVPN network overlay and multicast forwarding mechanisms. However, AR also defines special AR overlay tunnels over which AR leaf devices pass traffic from multicast sources to AR replicator devices. AR replicator devices treat incoming source traffic on AR overlay tunnels as requests to replicate the traffic toward other devices in the EVPN network on behalf of the sending AR leaf device.

AR basically works as follows:

- Each AR replicator device advertises its replicator capabilities and AR IP address to the EVPN network using EVPN Type 3 (inclusive multicast Ethernet tag [IMET]) routes. You configure a secondary IP address on the loopback interface (lo0) as an AR IP address when you assign the replicator role to the device. AR leaf devices use the secondary IP address for the AR overlay tunnel.

- An AR leaf device receives these advertisements to learn about available AR replicator devices and their capabilities.

- AR leaf devices advertise EVPN Type 3 routes for ingress replication that include the AR leaf device ingress replication tunnel IP address.

- The AR leaf device forwards multicast source traffic to a selected AR replicator on its AR overlay tunnel.

  When the network has multiple AR replicator devices, AR leaf devices automatically load-balance among them. (See AR Leaf Device Load Balancing with Multiple Replicators.)

- The AR replicator device receives multicast source traffic from an AR leaf device on an AR overlay tunnel and replicates it to the other devices in the network using the established ingress replication overlay tunnels. It also forwards the traffic toward local receivers and external gateways, including those on multihomed Ethernet segments, using the same local bias or DF rules it would use for traffic received from directly connected sources or regular NVE devices.

  Note: When AR operates in extended AR mode, an AR leaf device with a multihomed source handles the replication to its EVPN multihoming peers, and the AR replicator device receiving source traffic from that AR leaf device skips forwarding to the multihomed peers. (See Extended AR Mode for Multihomed Ethernet Segments; Figure 7 illustrates this use case.)
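The steps above can be sketched as a small simulation. This is an illustrative model only, not Junos code: the `Device` class, the `first_hop` and `replicate_on_behalf` helpers, and the device names are hypothetical, and the sketch ignores multihoming, local receivers, and SMET pruning.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    role: str  # "replicator", "leaf", or "nve" (regular NVE device)


def first_hop(ingress, devices, learned_replicators):
    """Return (tunnel_type, targets) for source traffic entering at `ingress`."""
    if ingress.role == "leaf" and learned_replicators:
        # Simplification: pick the first learned replicator. Real AR leaf
        # devices load-balance among the available replicators.
        return "AR", [learned_replicators[0].name]
    # Regular NVE devices, replicators, and AR leaf devices that see no
    # replicator all use plain ingress replication to every other device.
    return "IR", [d.name for d in devices if d != ingress]


def replicate_on_behalf(replicator, source_leaf, devices):
    """Targets an AR replicator copies to for traffic received on an AR tunnel."""
    # The replicator skips itself and the AR leaf that sent the traffic.
    return [d.name for d in devices if d not in (replicator, source_leaf)]


spine1 = Device("spine1", "replicator")
spine2 = Device("spine2", "replicator")
leaf4 = Device("leaf4", "leaf")
nve1 = Device("nve1", "nve")
fabric = [spine1, spine2, Device("leaf1", "leaf"), Device("leaf2", "leaf"),
          Device("leaf3", "leaf"), leaf4, nve1]

print(first_hop(leaf4, fabric, [spine1, spine2]))   # one copy on an AR tunnel
print(replicate_on_behalf(spine1, leaf4, fabric))   # replicator fans out
print(first_hop(nve1, fabric, []))                  # regular NVE: ingress replication
```

In this model the AR leaf sends a single copy toward the replicator instead of one copy per remote device, which is the replication load that AR offloads.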

Figure 1 shows an example of multicast traffic flow in an EVPN network with two AR replicators, four AR leaf devices, and a regular NVE device.

In Figure 1, AR Leaf 4 forwards multicast source traffic on an AR overlay tunnel to Spine 1, one of the available AR replicators. Spine 1 receives the traffic on the AR tunnel and replicates it on the usual ingress replication overlay tunnels to all other devices in the EVPN network, including AR leaf devices, other AR replicators, and regular NVE devices.

In this example, Spine 1 also forwards the traffic to its local receivers. Applying local bias rules, Spine 1 forwards the traffic to its multihomed receiver regardless of whether it is the DF for that multihomed Ethernet segment. In addition, according to split horizon rules, AR replicator Spine 1 does not forward the traffic to AR Leaf 4. Instead, AR Leaf 4, the ingress device, does local bias forwarding to its attached receiver.

QFX5130-32CD and QFX5700 switches serving as AR replicators don't support replication and forwarding from locally attached sources or to locally attached receivers.

When you enable IGMP snooping or MLD snooping, you also enable SMET forwarding by default. With SMET, the AR replicator skips sending the traffic on ingress replication overlay tunnels to any devices that don’t have active listeners.

Figure 1 shows a simplified view of the control messages and traffic flow with IGMP snooping enabled:

- Receivers attached to AR Leaf 1 (multihomed to AR Leaf 2), AR Leaf 4, and Regular NVE send IGMP join messages to express interest in receiving the multicast stream.

- For IGMP snooping to work with EVPN multihoming and avoid duplicate traffic, multihomed peers AR Leaf 1 and AR Leaf 2 synchronize the IGMP state using EVPN Type 7 and 8 (Join Sync and Leave Sync) routes.

  Spine 1 and Spine 2 do the same for their multihomed receiver, though the figure doesn't show that specifically.

- The EVPN devices hosting receivers (and only the DF for multihomed receivers) advertise EVPN Type 6 routes in the EVPN core (see Overview of Selective Multicast Forwarding), so forwarding devices only send the traffic to the other EVPN devices with interested receivers.
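As a rough illustration of the selective forwarding described above, the following sketch prunes a replication target list using the interest learned from EVPN Type 6 routes. The `smet_prune` helper, the device names, and the assumption that AR Leaf 1 is the DF for its multihomed segment are all hypothetical; the sketch doesn't model exceptions such as forwarding toward border devices.

```python
def smet_prune(targets, type6_interest, group):
    """Keep only the targets that advertised interest in `group`.

    `type6_interest` maps a multicast group address to the set of devices
    that advertised an EVPN Type 6 route for that group.
    """
    interested = type6_interest.get(group, set())
    return [t for t in targets if t in interested]


# Targets the replicator would use with plain ingress replication.
targets = ["spine2", "leaf1", "leaf2", "leaf3", "nve1"]

# Assumed state: leaf1 (as DF) and nve1 advertised Type 6 routes for the group.
type6_interest = {"233.252.0.1": {"leaf1", "nve1"}}

print(smet_prune(targets, type6_interest, "233.252.0.1"))
```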

For IPv6 multicast traffic with MLD and MLD snooping enabled, you see the same behavior as illustrated for IGMP snooping.

Regular NVE devices and other AR replicator devices don’t send source traffic on AR overlay tunnels to an AR replicator device, and AR replicators don’t perform AR tasks when receiving multicast source traffic on regular ingress replication overlay tunnels. AR replicators forward traffic they did not receive on AR tunnels the way they normally would using EVPN network ingress replication.

Similarly, if an AR leaf device doesn't see any available AR replicators, the AR leaf device defaults to using the usual ingress replication forwarding behavior (the same as a regular NVE device). In that case, AR show commands display the AR operational mode as No replicators/Ingress Replication. See the show evpn multicast-snooping assisted-replication replicators command for more details.

AR Route Advertisements

Devices in an EVPN network advertise EVPN Type 3 (IMET) routes for regular ingress replication and AR.

AR replicator devices advertise EVPN Type 3 routes for regular ingress replication that include:

- The AR replicator device ingress replication tunnel IP address

- Tunnel type: IR

- AR device role: AR-REPLICATOR

AR replicators also advertise EVPN Type 3 routes for the special AR overlay tunnels that include:

- An AR IP address you configure on a loopback interface on the AR replicator device (see the replicator configuration statement).

- Tunnel type: AR

- AR device role: AR-REPLICATOR

- EVPN multicast flags extended community with the Extended-MH-AR flag when operating in extended AR mode (see Extended AR Mode for Multihomed Ethernet Segments).

AR leaf devices advertise EVPN Type 3 routes for regular ingress replication that include:

- The AR leaf device ingress replication tunnel IP address

- Tunnel type: IR

- AR device role: AR-LEAF

Regular NVE devices are devices that either don't support AR or that you didn't configure as AR leaf devices. Regular NVE devices ignore AR route advertisements and forward multicast traffic using the usual EVPN network ingress replication rules.
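To make the advertised attributes concrete, here is a minimal sketch that models the Type 3 route contents listed in this section and shows how an AR leaf device might pick out the AR replicators. The `ImetRoute` class, its field names, the ingress replication addresses, the "Regular AR" label, and the assumption that a regular NVE device advertises no AR role are illustrative and not a Junos data structure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ImetRoute:
    originator: str
    tunnel_ip: str
    tunnel_type: str            # "IR" or "AR"
    ar_role: Optional[str]      # "AR-REPLICATOR", "AR-LEAF", or None
    extended_mh_ar: bool = False  # Extended-MH-AR flag in the extended community


def learn_replicators(routes):
    """Map each advertised AR IP address to the mode its replicator signals."""
    return {
        r.tunnel_ip: ("Extended AR" if r.extended_mh_ar else "Regular AR")
        for r in routes
        if r.tunnel_type == "AR" and r.ar_role == "AR-REPLICATOR"
    }


routes = [
    # A replicator advertises both a regular IR route and an AR route.
    ImetRoute("spine1", "192.168.4.1", "IR", "AR-REPLICATOR"),
    ImetRoute("spine1", "192.168.104.1", "AR", "AR-REPLICATOR", True),
    # An AR leaf advertises only a regular IR route.
    ImetRoute("leaf1", "192.168.0.1", "IR", "AR-LEAF"),
    # Assumption: a regular NVE device advertises an IR route with no AR role.
    ImetRoute("nve1", "192.168.0.9", "IR", None),
]

print(learn_replicators(routes))
```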

Extended AR Mode for Multihomed Ethernet Segments

EVPN networks employ split horizon and local bias rules to enable multicast optimizations when the architecture includes multihomed Ethernet segments (see EVPN-over-VXLAN Supported Functionality). These methods include information about the ingress AR leaf device in forwarded packets to ensure that the traffic isn’t unnecessarily forwarded or looped back to the source by way of the ingress device’s multihomed peers.

Some devices serving in the AR replicator role can’t retain the source IP address or Ethernet segment identifier (ESI) label on behalf of the ingress AR leaf device when forwarding the traffic to other overlay tunnels. AR replicator devices with this limitation operate in extended AR mode, where the AR replicator forwards traffic toward other devices in the EVPN network with the AR replicator’s ingress replication IP address as the source IP address.

QFX Series AR devices in an EVPN-VXLAN environment use extended AR mode. We currently only support AR in VXLAN overlay architectures, and only support extended AR mode.

AR replicators include the EVPN multicast flags extended community in the EVPN Type 3 AR route advertisement. The Extended-MH-AR flag indicates the AR operating mode.

When AR devices operate in extended AR mode:

- Besides forwarding the traffic to the AR replicator device, an AR leaf device with multihomed sources also handles replicating the traffic to its multihoming peers.

- An AR replicator device receiving source traffic from an AR leaf device with multihomed sources skips forwarding to the AR leaf device's multihoming peers.

See Source behind an AR Leaf Device (Multihomed Ethernet Segment) with Extended AR Mode, which shows the traffic flow in extended AR mode for an EVPN network with a multihomed source.
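The division of work in extended AR mode can be sketched as follows. This is a simplified model with hypothetical names (`extended_ar_copies`, the device names, and the `mh_peers` mapping); it only shows which device sends copies to which targets.

```python
def extended_ar_copies(ingress_leaf, replicator, devices, mh_peers):
    """Return (leaf_copies, replicator_copies) for extended AR mode.

    `mh_peers` maps an AR leaf to the set of AR leaf devices that share a
    multihomed Ethernet segment with it.
    """
    peers = mh_peers.get(ingress_leaf, set())
    # The ingress leaf sends one copy to the replicator on the AR tunnel and
    # replicates directly to its multihoming peers.
    leaf_copies = [replicator] + sorted(peers)
    # The replicator skips itself, the ingress leaf, and the leaf's peers.
    skip = {replicator, ingress_leaf} | peers
    replicator_copies = [d for d in devices if d not in skip]
    return leaf_copies, replicator_copies


devices = ["spine1", "spine2", "leaf1", "leaf2", "leaf3", "leaf4", "nve1"]
mh_peers = {"leaf1": {"leaf2"}, "leaf2": {"leaf1"}}

print(extended_ar_copies("leaf1", "spine1", devices, mh_peers))
```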

Extended AR mode behavior applies only when AR leaf devices have multihomed Ethernet segments with other AR leaf devices, not with AR replicators from which the AR leaf requests replication assistance. When AR replicator and AR leaf devices share multihomed Ethernet segments in environments that require extended AR mode, AR can't work correctly. As a result, ingress AR leaf devices don't use the AR tunnels and default to using only ingress replication. In this case, AR show commands display the AR operational mode as Misconfiguration/Ingress Replication.

AR replicators that can retain the source IP address or ESI label of the ingress AR leaf device operate in regular AR mode.

See the show evpn multicast-snooping assisted-replication replicators command for more information about AR operational modes.
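The operational modes mentioned in this document can be summarized with a small decision function. This is only a sketch of the rules as described here: the function name, its inputs, and the "Regular AR" label are assumptions, and the actual checks a device performs may differ.

```python
def leaf_operational_mode(replicators, extended_mode, leaf_mh_peers):
    """Return the AR operational mode an AR leaf device would report.

    replicators   -- names of the AR replicators the leaf has learned
    extended_mode -- True if the replicators signal extended AR mode
    leaf_mh_peers -- names of devices sharing a multihomed Ethernet
                     segment with this leaf
    """
    if not replicators:
        return "No replicators/Ingress Replication"
    if extended_mode and set(replicators) & set(leaf_mh_peers):
        # A replicator shares a multihomed segment with the leaf, which
        # extended AR mode can't handle.
        return "Misconfiguration/Ingress Replication"
    return "Extended AR" if extended_mode else "Regular AR"


print(leaf_operational_mode([], True, ["leaf2"]))
print(leaf_operational_mode(["spine1"], True, ["spine1"]))
print(leaf_operational_mode(["spine1", "spine2"], True, ["leaf2"]))
```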

AR Leaf Device Load Balancing with Multiple Replicators

When the EVPN network has more than one advertised AR replicator device, the AR leaf devices automatically load-balance among the available AR replicator devices.

- Default AR Leaf Load-Balancing When Detecting Multiple AR Replicators

- Deterministic Load Balancing and Traffic Steering to AR Replicators

Default AR Leaf Load-Balancing When Detecting Multiple AR Replicators

For EX Series and QFX Series switches in an EVPN-VXLAN network:

-

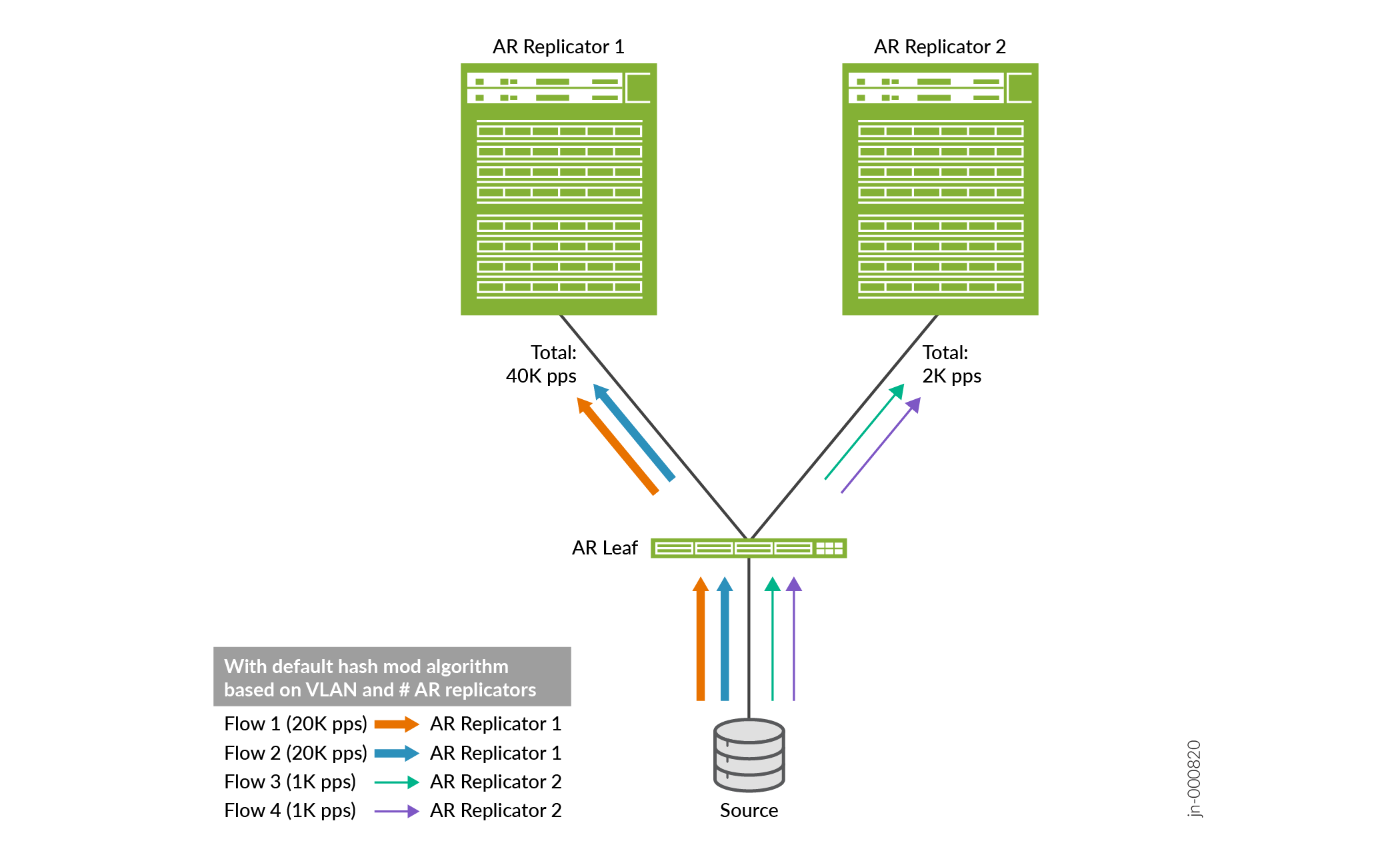

AR leaf devices that are EX4650 switches or switches in the QFX5000 line designate a particular AR replicator device for a VLAN or VXLAN network identifier (VNI) to load-balance among the available AR replicators. This default method on these devices uses a hash mod algorithm based on the VLAN or VNI and the number of AR replicators.

-

AR leaf devices in the QFX10000 line of switches actively load-balance among the available AR replicators based on traffic flow levels (packets per second) within a VLAN or VNI. This method on these devices uses a flow level hash mod algorithm based on the multicast group and the number of AR replicators.
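The two default methods can be illustrated with a generic hash mod sketch. The exact hash functions and replicator ordering that the switches use aren't described here, so the CRC32 hash, the sort by IP address, and the function names below are assumptions chosen only to show the idea.

```python
import ipaddress
import zlib


def replicator_for_vni(vni, replicators):
    """Per-VNI selection: every flow in the VNI uses the same replicator."""
    ordered = sorted(replicators, key=ipaddress.ip_address)
    return ordered[vni % len(ordered)]


def replicator_for_flow(group, replicators):
    """Per-flow selection: hash the multicast group, then take the modulus."""
    ordered = sorted(replicators, key=ipaddress.ip_address)
    flow_hash = zlib.crc32(ipaddress.ip_address(group).packed)
    return ordered[flow_hash % len(ordered)]


replicators = ["192.168.104.1", "192.168.105.1", "192.168.106.1"]

# 1100 mod 3 = 2, so VNI 1100 maps to the third replicator in sorted order.
print(replicator_for_vni(1100, replicators))

# Different groups in the same VNI can land on different replicators.
for group in ("233.252.0.1", "233.252.0.2", "233.252.0.3"):
    print(group, replicator_for_flow(group, replicators))
```

With the per-VNI method, a VNI that carries a few very large flows sends all of them to one replicator, which is the kind of imbalance that deterministic policies are meant to address.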

Deterministic Load Balancing and Traffic Steering to AR Replicators

For AR leaf devices that don't use a flow level load balancing mechanism by default (see Default AR Leaf Load-Balancing When Detecting Multiple AR Replicators), we support a feature with which you can deterministically configure AR leaf devices to send multicast flows to particular AR replicators.

We support setting deterministic AR replicator policies only:

- In EVPN-VXLAN networks running AR with OISM.

- For multicast traffic flows.

  For unknown unicast and broadcast traffic, the device uses the usual bridge domain or VLAN flooding behavior.

With this deterministic AR replicator load balancing method, you:

-

Define routing policies to match one or more multicast flows in the policy

fromconditions.For example, you can set a condition to match a particular multicast group address using the

route-filter multicast-group-addressstatement at the[edit policy-options policy-statement name from]hierarchy. -

In the

thenclause of each routing policy, for the policy action, specify the AR replicator(s) to which the AR leaf device sends the matching flows.You set the policy action with the

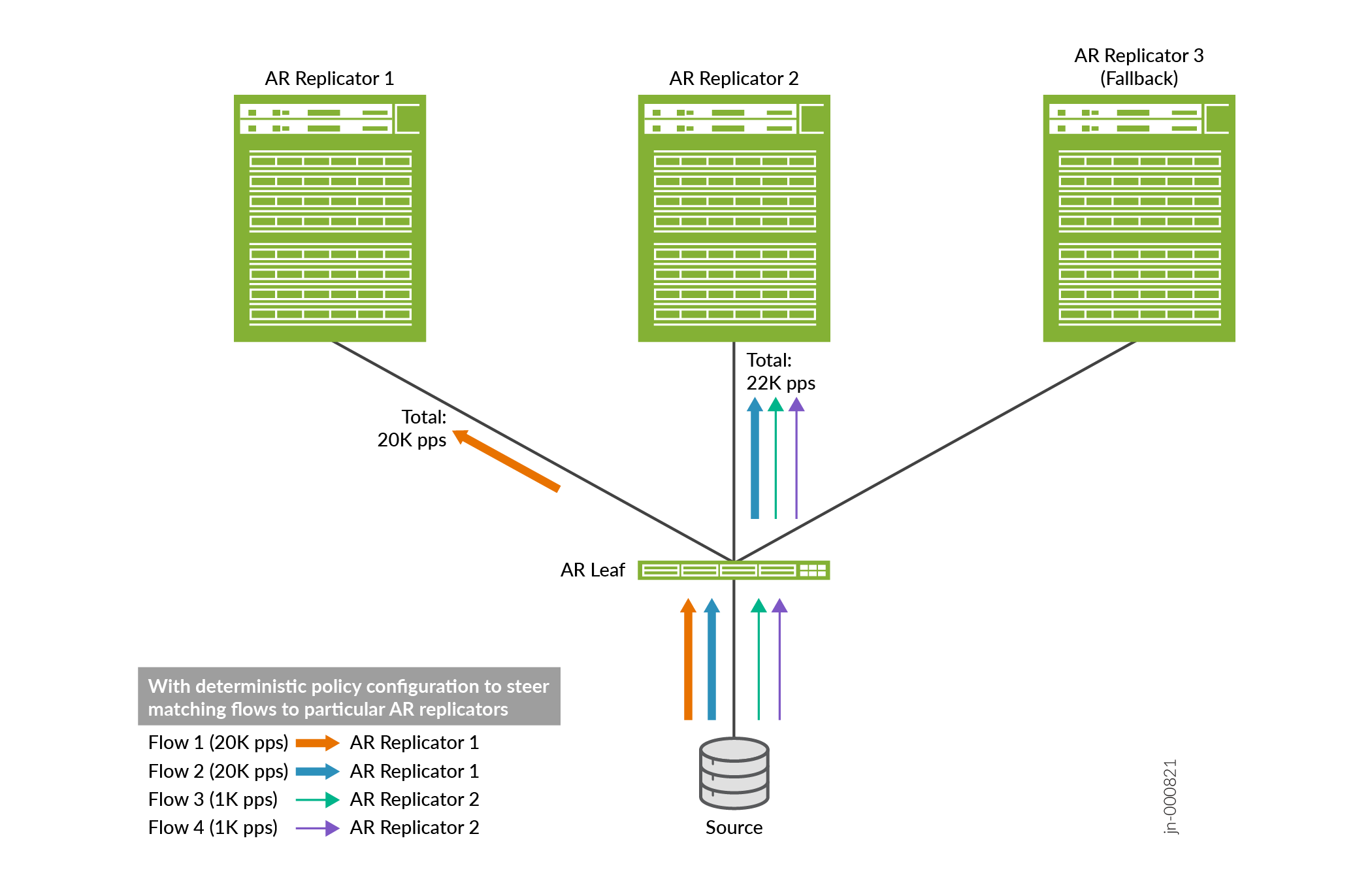

assisted-replication replicator-ip replicator-ip-addrstatement at the[edit policy-options policy-statement name then]hierarchy. (See assisted-replication (Deterministic AR Replicator Policy Actions).)You have options to strictly steer matching flows only to a preferred AR replicator, or also include a fallback AR replicator to use in case the preferred one goes down.

-

Assign the routing policy or policies to an EVPN instance on the AR leaf device using the

deterministic-ar-policystatement at the[edit routing-instances name protocols evpn assisted-replication leaf]hierarchy level. (See deterministic-ar-policy.)

An example use case where you might want to deterministically steer AR leaf traffic to particular replicators is when an AR leaf device serves a source device where you know particular multicast flows are usually significantly larger or smaller. Figure 2 shows how the AR leaf device might load-balance the flows using the default load balancing method that results in a very unbalanced traffic distribution.

Figure 3 illustrates how you can configure deterministic load balancing to more equally distribute (steer) the flows among the available replicators.

- AR Load Balancing Routing Policy Options

- Behavior Notes on Deterministic AR Load Balancing Policies

- Assign a Deterministic AR Policy to an EVPN Instance on an AR Leaf Device

- Sample Deterministic AR Policy Configurations

AR Load Balancing Routing Policy Options

Use the guidelines here to define and assign AR load balancing routing policies.

You can include routing policy match conditions for IPv4 or IPv6 multicast traffic flows associated with IGMP or MLD multicast groups as follows:

- With IGMPv2 or MLDv1, you can match a multicast flow based on either or both of the following:

  - A multicast group.

  - A bridge domain or VLAN-mapped virtual network identifier (VNI).

  Note: This feature introduces the bridge-domain bridge-domain-name option in the policy statement from clause so you can specify a bridge domain or VLAN-mapped VNI as a match condition.

- With the source-specific multicast (SSM) protocols IGMPv3 or MLDv2, you can match a multicast flow based on any combination of the following:

  - A multicast group.

  - A bridge domain or VLAN-mapped VNI.

  - A multicast source address.

In the routing policy from clause, use the following options to set the match conditions for the desired multicast flows:

- Multicast group: route-filter

- Bridge domain or VLAN-mapped VNI: bridge-domain

- Multicast source: source-address-filter

See Routing Policy Match Conditions for more on the routing policy match options mentioned here.

See Sample Deterministic AR Policy Configurations for some sample policy match condition configurations.

To match MLDv1 or MLDv2 IPv6 multicast flows, include the family inet6 option in the from clause when you specify IPv6 addresses or prefixes with the route-filter and source-address-filter match condition options.
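The match conditions above can be modeled with Python's `ipaddress` module to check which flows a policy would select. This is a simplified sketch, not the Junos policy engine: the `flow_matches` function and the flow dictionary layout are hypothetical, a group is treated as matching when it falls inside any listed prefix, and omitted conditions match everything.

```python
import ipaddress


def flow_matches(flow, group_prefixes=None, bridge_domain=None, source_prefix=None):
    """Return True if `flow` satisfies every condition that is set."""
    if group_prefixes is not None:
        group = ipaddress.ip_address(flow["group"])
        nets = [ipaddress.ip_network(p, strict=False) for p in group_prefixes]
        if not any(group.version == n.version and group in n for n in nets):
            return False
    if bridge_domain is not None and flow["bridge_domain"] != bridge_domain:
        return False
    if source_prefix is not None:
        # Only SSM (IGMPv3 or MLDv2) flows carry a source to match against.
        if flow["source"] is None:
            return False
        src = ipaddress.ip_address(flow["source"])
        net = ipaddress.ip_network(source_prefix, strict=False)
        if src.version != net.version or src not in net:
            return False
    return True


igmp_flow = {"group": "233.252.0.2", "bridge_domain": 1100, "source": None}
mld_flow = {"group": "ffe9::2:5", "bridge_domain": 1100, "source": "2001:db8::1"}

print(flow_matches(igmp_flow, ["233.252.0.2/24"], bridge_domain=1100))
print(flow_matches(mld_flow, ["ffe9::2:1/112", "ffe9::2:2/112"],
                   source_prefix="2001:db8::1/128"))
```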

In the then clause, you can configure the deterministic AR replicator policy action (assisted-replication (Deterministic AR Replicator Policy Actions)) to use either of the following modes, which are mutually exclusive:

- Strict mode: Strictly direct flows to a specified preferred AR replicator. If the preferred AR replicator goes down, the AR leaf device drops the matching flows.

  To configure strict mode, include the strict option with the AR deterministic load balancing policy action when you specify the AR replicator IP address:

      [edit policy-options policy-statement name then]
      user@ar-leaf# set assisted-replication replicator-ip replicator-ip strict

- Loose mode: This is the default mode if you don't include the strict option. In this mode, you can optionally include a fallback AR replicator to use if the specified AR replicator goes down.

  To specify a fallback AR replicator, include the fallback-replicator-ip fallback-replicator-ip option with the AR deterministic load balancing policy action when you specify the AR replicator IP address:

      [edit policy-options policy-statement name then]
      user@ar-leaf# set assisted-replication replicator-ip replicator-ip fallback-replicator-ip fallback-replicator-ip

The AR replicator IP addresses for the primary and fallback AR replicators are the secondary loopback addresses you assign to the AR replicator function when you configure those devices as AR replicators. See Configure an AR Replicator Device for details.

Behavior Notes on Deterministic AR Load Balancing Policies

Note the following behaviors with deterministic AR load balancing policies:

- When you assign a deterministic AR policy to an EVPN instance, the device applies the policy only for advertised EVPN Type 6 routing table entries. The device creates core next-hop multicast snooping entries for those routes.

- Deterministic AR policies match a multicast source based on the presence of the source address in the EVPN Type 6 routes, which are in the EVPN Type 6 advertisements only with SSM IGMPv3 or MLDv2 flows.

- If no local receivers generate EVPN Type 6 routes expressing interest in a multicast group, the device still sends locally sourced multicast flows for the group to the border leaf devices so the traffic can reach any external receivers.

- For the multicast flows that don't match any of the policies you assign on an AR leaf device, the device uses its default AR load balancing method. See Default AR Leaf Load-Balancing When Detecting Multiple AR Replicators for more on the default method on different platforms.

- In loose mode (no strict option), if the preferred AR replicator isn't available and you didn't specify a fallback or the fallback is also down, the AR leaf device uses its default AR replicator load balancing method to distribute any multicast traffic among the remaining available AR replicators. (See Default AR Leaf Load-Balancing When Detecting Multiple AR Replicators.)

  If the AR leaf device doesn't detect any available AR replicators in the network, the device reverts to the default multicast traffic ingress replication behavior (the device doesn't use AR at all).

  Note: Ingress replication is the default multicast traffic forwarding method with AR on:

  - Devices in the network that don't support AR.

  - Leaf devices that support AR but you didn't configure them as AR leaf devices.

  - Leaf devices you configure as AR leaf devices, but those AR leaf devices don't detect any available AR replicators.

- If you apply a deterministic AR policy on multihoming peer AR leaf devices, those devices ignore any such policies when forwarding to each other. Instead, those devices invoke extended AR mode forwarding behavior: they use ingress replication to forward multicast source traffic directly to their peer AR leaf devices in the same multihomed Ethernet segment. See Source behind an AR Leaf Device (Multihomed Ethernet Segment) with Extended AR Mode for details on how this use case works.

- If you change a policy assignment or assigned policy conditions, you might see some multicast traffic loss until the routing tables converge after the policy update.
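The strict and loose mode behaviors described in this document can be summarized as a small decision function for a flow that matches a deterministic AR policy. This is a sketch under stated assumptions: `select_forwarding`, its return values, and the `default_pick` callback (a stand-in for the platform's default load balancing method) are hypothetical names, not Junos internals.

```python
def select_forwarding(preferred, fallback, strict, available, default_pick):
    """Return (action, replicator) for a flow that matches a policy.

    action is "AR" (send to the returned replicator), "DROP", or "IR"
    (revert to ingress replication without AR).
    """
    if preferred in available:
        return "AR", preferred
    if strict:
        # Strict mode: drop matching flows when the preferred replicator is down.
        return "DROP", None
    if fallback is not None and fallback in available:
        return "AR", fallback
    if available:
        # Loose mode with no usable fallback: use default load balancing.
        return "AR", default_pick(available)
    # No AR replicators detected at all: plain ingress replication.
    return "IR", None


available = {"192.168.105.1", "192.168.106.1"}  # 192.168.104.1 is down

print(select_forwarding("192.168.104.1", "192.168.105.1", False, available, min))
print(select_forwarding("192.168.104.1", None, True, available, min))
print(select_forwarding("192.168.104.1", None, False, available, min))
print(select_forwarding("192.168.104.1", None, False, set(), min))
```

Here `min` stands in for the default method only to keep the output deterministic.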

Assign a Deterministic AR Policy to an EVPN Instance on an AR Leaf Device

To enable a deterministic AR policy on an AR leaf device, assign the policy to an EVPN instance on the device using the deterministic-ar-policy statement at the [edit routing-instances name protocols evpn assisted-replication leaf] hierarchy level.

For example, if you define a policy called arpol1, you can assign it to the EVPN instance called evpn-vxlanA as follows:

    set routing-instances evpn-vxlanA protocols evpn assisted-replication leaf deterministic-ar-policy arpol1

You can use the show evpn igmp-snooping proxy command with the deterministic-ar option to see the configured mode (strict or loose), preferred AR replicator, and fallback AR replicator (if any).

Use the show evpn multicast-snooping assisted-replication replicators command to see the available AR replicators and the VTEP interface toward each one.

For example:

- Strict mode example: With a strict mode policy configuration such as the following arpol1, you configure the device to strictly use a preferred AR replicator:

      set policy-options policy-statement arpol1 from route-filter 233.252.0.2/24 orlonger
      set policy-options policy-statement arpol1 from bridge-domain 1100
      set policy-options policy-statement arpol1 then assisted-replication replicator-ip 192.168.104.1 strict
      set policy-options policy-statement arpol1 then accept
      set routing-instances evpn-vxlanA protocols evpn assisted-replication leaf deterministic-ar-policy arpol1

  You can see the mode and preferred AR replicator, and follow the next hops for the flow to see that the device uses the available preferred AR replicator:

      user@ar-leaf1> show evpn igmp-snooping proxy deterministic-ar
      Instance: evpn-vxlanA
        VN Identifier: 1100
          Group        Source   Local  Remote  Corenh  Flood  Deterministic-AR(Mode/Preferred replicator/Fallback)
          233.252.0.1  0.0.0.0  0      1       4375    0      N/A
          233.252.0.2  0.0.0.0  0      1       4372    0      Strict/192.168.104.1/0.0.0.0

      user@ar-leaf1> show evpn multicast-snooping next-hops 4372 detail
      Family: INET
      ID    Refcount  KRefcount  Downstream interface Addr
      4372  6         2          vtep.32778-(11148)
        Flags 0x2100 type 0x18 members 0/0/0/1/0
        Address 0x769b404

      user@ar-leaf1> show evpn multicast-snooping assisted-replication replicators
      Instance: evpn-vxlanA
        AR Role: AR Leaf
        VN Identifier: 1100
          Operational Mode: Extended AR
          Replicator IP  Nexthop Index  Interface   Mode
          192.168.104.1  11148          vtep.32778  Extended AR
          192.168.105.1  11357          vtep.32782  Extended AR
          192.168.106.1  11180          vtep.32780  Extended AR

  Note: With a strict mode deterministic AR policy, the show evpn igmp-snooping proxy deterministic-ar command displays a null fallback replicator address (0.0.0.0).

- Loose mode example: With a loose mode policy configuration such as the following arpol2, you enable the device to use a fallback AR replicator in case the preferred AR replicator becomes unavailable:

      set policy-options policy-statement arpol2 from route-filter 233.252.0.2/24 orlonger
      set policy-options policy-statement arpol2 from bridge-domain 1100
      set policy-options policy-statement arpol2 then assisted-replication replicator-ip 192.168.104.1 fallback-replicator-ip 192.168.105.1
      set policy-options policy-statement arpol2 then accept
      set routing-instances evpn-vxlanA protocols evpn assisted-replication leaf deterministic-ar-policy arpol2

  You can see in the case below that the AR leaf device didn't detect the preferred AR replicator, so the device uses the specified fallback AR replicator instead:

      user@ar-leaf1> show evpn igmp-snooping proxy deterministic-ar
      Instance: evpn-vxlanA
        VN Identifier: 1100
          Group        Source   Local  Remote  Corenh  Flood  Deterministic-AR(Mode/Preferred replicator/Fallback)
          233.252.0.1  0.0.0.0  0      1       4375    0      N/A
          233.252.0.2  0.0.0.0  0      1       4453    0      Loose/192.168.104.1/192.168.105.1

      user@ar-leaf1> show evpn multicast-snooping next-hops 4453 detail
      Family: INET
      ID    Refcount  KRefcount  Downstream interface Addr
      4453  3         1          vtep.32782-(11357)
        Flags 0x2100 type 0x18 members 0/0/0/1/0
        Address 0x55a0ce415604

      user@ar-leaf1> show evpn multicast-snooping assisted-replication replicators
      Instance: evpn-vxlanA
        AR Role: AR Leaf
        VN Identifier: 1100
          Operational Mode: Extended AR
          Replicator IP  Nexthop Index  Interface   Mode
          192.168.105.1  11357          vtep.32782  Extended AR
          192.168.106.1  11180          vtep.32780  Extended AR

Sample Deterministic AR Policy Configurations

Here are a few sample deterministic AR replicator routing policies:

- This sample policy matches IGMP multicast flows for multicast groups under 233.252.0.2/24 and bridge domain or VNI 1100. The configuration steers those flows to AR replicator 192.168.104.1 in strict mode (no fallback or default load balancing):

      policy-options {
          policy-statement arpol1 {
              from {
                  route-filter 233.252.0.2/24 orlonger;
                  bridge-domain 1100;
              }
              then {
                  assisted-replication replicator-ip 192.168.104.1 strict;
                  accept;
              }
          }
      }

- This sample policy matches multicast flows for IGMP multicast groups under 233.252.0.2/24 and any bridge domain or VNI. The configuration steers those flows to AR replicator 192.168.104.1 in loose mode with AR replicator 192.168.105.1 as the fallback:

      policy-options {
          policy-statement arpol2 {
              from {
                  route-filter 233.252.0.2/24 orlonger;
              }
              then {
                  assisted-replication replicator-ip 192.168.104.1 fallback-replicator-ip 192.168.105.1;
                  accept;
              }
          }
      }

- This sample policy matches MLDv2 multicast flows for IPv6 multicast groups under ffe9::2:1/112 and ffe9::2:2/112, any bridge domain or VNI, and source IPv6 address 2001:db8::1/128. The configuration steers those flows to AR replicator 192.168.104.1 in loose mode with AR replicator 192.168.105.1 as the fallback:

  Note: Include family inet6 in the from clause for IPv6 multicast flows with MLD.

      policy-options {
          policy-statement arpol5 {
              from {
                  family inet6;
                  route-filter ffe9::2:1/112 orlonger;
                  route-filter ffe9::2:2/112 orlonger;
                  source-address-filter 2001:db8::1/128 exact;
              }
              then {
                  assisted-replication replicator-ip 192.168.104.1 fallback-replicator-ip 192.168.105.1;
                  accept;
              }
          }
      }

AR Limitations with IGMP Snooping or MLD Snooping

AR replicator devices with IGMP snooping or MLD snooping enabled will silently drop multicast traffic toward AR leaf devices that don’t support IGMP snooping or MLD snooping in an EVPN-VXLAN environment.

If you want to include IGMP snooping or MLD snooping multicast traffic optimization with AR, you can work around this limitation by disabling AR on the EVPN devices that don’t support IGMP snooping or MLD snooping so they don’t function as AR leaf devices. Those devices then behave like regular NVE devices and can receive the multicast traffic by way of the usual EVPN network ingress replication, although without the benefits of AR and IGMP snooping or MLD snooping optimizations. You can configure devices that do support IGMP snooping or MLD snooping in this environment as AR leaf devices with IGMP snooping or MLD snooping.

See Table 1 for more about the behavior differences between AR leaf devices and regular NVE devices.

For best results in EVPN-VXLAN networks with a combination of leaf devices that don’t support IGMP snooping or MLD snooping (such as QFX5100 switches), and leaf devices that do support IGMP snooping or MLD snooping (such as QFX5120 switches), follow these recommendations based on the VTEP scaling you need:

- VTEP scaling with 100 or more VTEPs: Enable AR for all supporting devices in the network. Don’t use IGMP snooping or MLD snooping.

- VTEP scaling with fewer than 100 VTEPs: Enable AR and IGMP snooping or MLD snooping on the AR replicators and other AR leaf devices that support IGMP snooping or MLD snooping. Don't enable AR on leaf devices that don’t support IGMP snooping or MLD snooping, so that those devices act as regular NVE devices and not as AR leaf devices.
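These recommendations can be summarized as a small decision function. The following Python sketch is only a planning aid under stated assumptions: the function and field names are hypothetical, and the 100-VTEP threshold is the only value taken from the recommendations.

```python
def recommend(vtep_count: int, supports_ar: bool, supports_snooping: bool) -> dict:
    """Suggest AR and snooping settings for one device in a mixed fabric."""
    if vtep_count >= 100:
        # Large scale: AR on every device that supports it, no snooping.
        return {"enable_ar": supports_ar, "enable_snooping": False}
    # Smaller scale: AR plus snooping, but only where snooping is supported.
    # Other devices stay regular NVE devices and rely on ingress replication.
    use_both = supports_ar and supports_snooping
    return {"enable_ar": use_both, "enable_snooping": use_both}
```

With this sketch, a leaf device that supports AR but not snooping gets AR in a 150-VTEP fabric and stays a regular NVE device in a 50-VTEP fabric.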

AR with Optimized Intersubnet Multicast (OISM)

OISM is a multicast traffic optimization feature that operates at L2 and Layer 3 (L3) in EVPN-VXLAN edge-routed bridging (ERB) overlay fabrics. With OISM, leaf devices in the fabric route intersubnet multicast traffic locally through IRB interfaces. This design minimizes the amount of traffic the devices send out into the EVPN core and avoids traffic hairpinning. OISM uses IGMP snooping (or MLD snooping) and selective multicast forwarding (SMET) to further limit EVPN core traffic only to destinations with interested listeners. Finally, OISM enables ERB fabrics to effectively support multicast traffic between sources and receivers inside and outside of the fabric.

In contrast, AR focuses on optimizing the L2 functions of replicating and forwarding BUM traffic in the fabric.

OISM optimizes only multicast traffic flow, not broadcast or unknown unicast traffic flows. AR helps to optimize any BUM traffic flows.

Starting in Junos OS and Junos OS Evolved Release 22.2R1, you can enable AR with OISM on supported devices in an ERB overlay fabric. With this support, OISM uses the symmetric bridge domains model, in which you configure the same subnet information symmetrically on all OISM devices.

- AR and OISM Device Roles

- Guidelines to Integrate AR and OISM Device Roles

- How AR and OISM Work Together

AR and OISM Device Roles

With AR, you configure devices to function in the AR replicator or AR leaf role. When you don't assign an AR role to a device, the device functions as a regular NVE device. See Table 1 for more on these roles.

With OISM, you configure devices in an ERB overlay fabric to function in one of the following roles:

- OISM border leaf role.

- OISM server leaf role.

- No OISM role: These devices are usually lean spine devices in the fabric.

See Optimized Intersubnet Multicast in EVPN Networks for details on how OISM works and how to configure OISM devices.

When you integrate AR and OISM functions, we support the AR replicator role in the following modes:

- Collocated: You configure the AR replicator role on the same device as the OISM border leaf role.

- Standalone: The AR replicator function is not collocated with the OISM border leaf role on the same device. In this case, the AR replicator is usually a lean spine device in an ERB fabric running OISM.

Note: On QFX5130-32CD and QFX5700 switches, we support only standalone mode. You can configure the AR replicator role only on a device in the fabric that isn't also an OISM border leaf device.

Guidelines to Integrate AR and OISM Device Roles

Use these guidelines to integrate AR and OISM roles on the devices in the fabric:

- You can configure any OISM leaf devices (border leaf or server leaf) with the AR leaf role.

- Use the following rules when you configure the AR replicator role:

  - We support the AR replicator role on any of the following devices: QFX5130-32CD, QFX5700, QFX10002, QFX10008, and QFX10016.

    Note: QFX5130-32CD and QFX5700 devices support the AR replicator role only in standalone mode.

  - The devices you use as AR replicators must be devices that support OISM, even if you configure an AR replicator in standalone mode (see AR and OISM Device Roles). The AR replicator devices must have the same tenant virtual routing and forwarding (VRF) and VLAN information as the OISM devices (see the next rule).

  - Even if the AR replicator device is in standalone mode, you must configure the device with the same tenant VRF instances, corresponding IRB interfaces, and member VLANs as the OISM leaf devices. The AR replicator requires this information to install the correct L2 multicast states to properly forward the multicast traffic.

    See Configure Common OISM Elements on Border Leaf Devices and Server Leaf Devices for details on the OISM configuration steps you duplicate on standalone AR replicators for these elements.
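As a quick way to check a fabric design against the AR replicator rules, you could encode them as a validation function. The following Python sketch is a hypothetical planning helper and not a Junos OS tool. The platform lists come from the guidelines, and the function name, parameters, and messages are assumptions made for illustration.

```python
# Platform lists are copied from the guidelines for AR with OISM.
AR_REPLICATOR_PLATFORMS = {"QFX5130-32CD", "QFX5700", "QFX10002", "QFX10008", "QFX10016"}
STANDALONE_ONLY_PLATFORMS = {"QFX5130-32CD", "QFX5700"}


def check_ar_replicator(platform: str, is_oism_border_leaf: bool,
                        has_oism_tenant_config: bool) -> list:
    """Return a list of guideline violations (empty if the plan looks fine).

    is_oism_border_leaf: True means collocated mode, False means standalone.
    has_oism_tenant_config: the device has the same tenant VRF instances,
    IRB interfaces, and member VLANs as the OISM leaf devices.
    """
    problems = []
    if platform not in AR_REPLICATOR_PLATFORMS:
        problems.append("platform not listed for the AR replicator role with OISM")
    if is_oism_border_leaf and platform in STANDALONE_ONLY_PLATFORMS:
        problems.append("platform supports the AR replicator role only in standalone mode")
    if not has_oism_tenant_config:
        problems.append("missing tenant VRF instances, IRB interfaces, or member VLANs")
    return problems
```

An empty result means the planned device satisfies the three rules. It doesn't confirm release support, which you still need to check for your platform.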


How AR and OISM Work Together

When you enable AR and OISM together, you can configure higher capacity devices in the fabric to do the replication and lessen the load on the OISM leaf devices. With OISM, the ingress leaf device replicates and sends copies of the packets on the ingress VLAN toward interested receivers, where:

- The ingress leaf device might be an OISM server leaf device or an OISM border leaf device.

- For multicast traffic sourced within the fabric, the ingress VLAN is a revenue VLAN.

- For multicast traffic coming into the fabric from an external multicast source, the ingress VLAN is the supplemental bridge domain (SBD).

When you configure the AR leaf role on the ingress OISM leaf device, the device sends one copy of the traffic toward the AR replicator. The AR replicator takes care of replicating and forwarding the traffic toward the other OISM server leaf or border leaf devices. The OISM leaf devices do local routing to minimize the multicast traffic in the EVPN core.

OISM server leaf and border leaf devices send EVPN Type 6 routes into the EVPN core when their receivers join a multicast group. OISM devices derive the multicast (*,G) states for IGMPv2 or (S,G) states for IGMPv3 from these EVPN Type 6 routes. The devices install these derived states on the OISM SBD and revenue bridge domain VLANs in the MAC-VRF instance for the VLANs that are part of OISM-enabled L3 tenant VRF instances. If you enable AR with OISM, the AR replicator devices use the Type 6 routes to install multicast states in the same way the OISM devices do.

However, QFX5130-32CD and QFX5700 switches configured as AR replicators with OISM might have scaling issues when they install the multicast states in a fabric with many VLANs. As a result, these switches install the derived multicast states only on the OISM SBD VLAN. They don't install these states on all OISM revenue bridge domain VLANs. On these devices when configured as AR replicators, you see multicast group routes only on the SBD in show multicast snooping route command output.

See OISM and AR Scaling with Many VLANs for details.
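The way devices derive multicast states from EVPN Type 6 routes can be sketched as follows. This Python model is a simplified illustration, not the Junos OS implementation: the `Type6Route` type, the `sbd_only` flag, and the VLAN names in the usage example are hypothetical. The flag models the behavior of QFX5130-32CD and QFX5700 switches acting as AR replicators.

```python
from typing import NamedTuple, Optional


class Type6Route(NamedTuple):
    group: str
    source: Optional[str]  # None for an IGMPv2 join, which yields a (*,G) state


def derived_states(routes, sbd, revenue_vlans, sbd_only=False):
    """Return the set of (vlan, source, group) states a device installs.

    sbd_only=True models an AR replicator that installs states only on the
    SBD VLAN instead of on the SBD and all revenue VLANs.
    """
    vlans = [sbd] if sbd_only else [sbd, *revenue_vlans]
    states = set()
    for route in routes:
        source = route.source if route.source is not None else "*"
        for vlan in vlans:
            states.add((vlan, source, route.group))
    return states
```

For two Type 6 routes and two revenue VLANs, the full model yields six states, and the SBD-only model yields two. This difference is the reason the show multicast snooping route output lists groups only on the SBD for those AR replicators.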

See the following illustrations of AR and OISM working together:

See Configure Assisted Replication for details on how to configure AR, including when you integrate AR and OISM device roles.

Multicast Forwarding Use Cases in an EVPN Network with AR Devices

This section shows multicast traffic flow in several common use cases where the source is located behind different AR devices or regular NVE devices in an EVPN network.

Some use cases or aspects of these use cases don't apply based on the following platform limitations with AR:

- QFX5130-32CD and QFX5700 devices serving as AR replicators don't support replication and forwarding from locally attached sources or to locally attached receivers. As a result, with these switches as AR replicators, we don't support the following:

  - The use case in Source behind an AR Replicator Device.

  - Replicating to a single-homed or multihomed receiver locally attached to an AR replicator device as the other use cases show.

- Source behind a Regular NVE Device

- Source behind an AR Replicator Device

- Source behind an AR Leaf Device (Single-Homed Ethernet Segment)

- Source behind an AR Leaf Device (Multihomed Ethernet Segment) with Extended AR Mode

Source behind a Regular NVE Device

Figure 4 shows an EVPN network with multicast traffic from a source attached to Regular NVE, a device that doesn’t support AR.

In this case, forwarding behavior is the same whether you enable AR or not, because the ingress device, Regular NVE, is not an AR leaf device sending traffic for replication to an AR replicator. Regular NVE employs the usual ingress replication forwarding rules as follows:

- Without IGMP snooping or MLD snooping, and without SMET forwarding enabled, Regular NVE floods the traffic to all the other devices in the EVPN network.

- With IGMP snooping or MLD snooping, and with SMET forwarding enabled, Regular NVE forwards the traffic only to other devices in the EVPN network with active listeners. In this case, Regular NVE forwards to all the other devices except AR Leaf 2 and AR Leaf 4.

Spine 1 and Spine 2 replicate and forward the traffic toward their local single-homed or multihomed receivers or external gateways using the usual EVPN network ingress replication local bias or DF behaviors. In this case, Figure 4 shows that Spine 2 is the elected DF and forwards the traffic to a multihomed receiver on an Ethernet segment that the two spine devices share.
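The difference between flooding and selective forwarding in this use case can be expressed as a simple filter. The Python sketch below is an illustration under assumptions: the device list is a guess at the topology in Figure 4 based on the device names that this section mentions, and the function name is hypothetical.

```python
# Assumed topology: device names as used in the use cases in this section.
DEVICES = ["Spine 1", "Spine 2", "AR Leaf 1", "AR Leaf 2",
           "AR Leaf 3", "AR Leaf 4", "Regular NVE"]
# Devices with active listeners: all except AR Leaf 2 and AR Leaf 4.
LISTENERS = set(DEVICES) - {"AR Leaf 2", "AR Leaf 4"}


def ingress_replication_targets(ingress, devices, listeners=None):
    """Return the remote devices that the ingress device sends a copy to.

    listeners=None models no snooping and no SMET (flood to all others).
    Otherwise, only devices with active listeners receive a copy.
    """
    others = [d for d in devices if d != ingress]
    if listeners is None:
        return others
    return [d for d in others if d in listeners]
```

With the assumed topology, Regular NVE sends six copies when it floods and four copies when snooping and SMET forwarding are enabled.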

Source behind an AR Replicator Device

Figure 5 shows an EVPN network with multicast traffic from a source attached to an AR replicator device called Spine 1.

QFX5130-32CD and QFX5700 devices serving as AR replicators don't support replication and forwarding from locally-attached sources or to locally-attached receivers. As a result, this use case doesn't apply when these switches act as AR replicators.

In this case, forwarding behavior is the same whether you enable AR or not, because the ingress device, Spine 1, is not an AR leaf device sending traffic for replication to an AR replicator. Spine 1, although it’s configured as an AR replicator device, does not act as an AR replicator. Instead, it employs the usual ingress replication forwarding rules as follows:

- Without IGMP snooping or MLD snooping, and without SMET forwarding enabled, Spine 1, the ingress device, floods the traffic to all the other devices in the EVPN network.

- With IGMP snooping or MLD snooping, and with SMET forwarding enabled, Spine 1 forwards the traffic only to other devices in the EVPN network with active listeners. In this case, Spine 1 forwards to all the other devices except AR Leaf 2 and AR Leaf 4. The destinations include Spine 2, which has a local interested receiver.

- Spine 1 also replicates the traffic toward local single-homed or multihomed receivers, or external gateways, using the usual EVPN network ingress replication local bias or DF behaviors. In this case, Spine 1 forwards the traffic to a multihomed receiver according to local bias rules (whether it is the DF on that Ethernet segment or not).

Spine 2 forwards the traffic it received from Spine 1 to its local receiver. Spine 2 also does a local bias check for its multihomed receiver, and skips forwarding to the multihomed receiver even though it is the DF for that Ethernet segment.
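The local bias and DF outcomes in the two use cases so far follow one decision rule, sketched here in Python. This is a simplified model of the usual EVPN-VXLAN local bias behavior, not Junos OS code. The function name and parameters are hypothetical, and the Ethernet segment membership and DF election come from the figures as the text describes them.

```python
def forwards_to_shared_es(device, overlay_sender, es_members, df):
    """Decide whether `device` forwards a copy to a multihomed receiver.

    overlay_sender: the device that sent this copy over the overlay, or None
    when `device` itself puts the traffic into the overlay (for example, it
    is the ingress device).
    es_members: devices attached to the receiver's Ethernet segment.
    df: the elected designated forwarder for that Ethernet segment.
    """
    if overlay_sender is None:
        return True   # local bias: forward locally, DF or not
    if overlay_sender in es_members:
        return False  # the sender shares the segment and already delivered
    return device == df  # otherwise only the DF forwards
```

With the source behind Regular NVE, only the DF (Spine 2) forwards to the shared Ethernet segment. With the source behind Spine 1, Spine 1 forwards locally and Spine 2 skips forwarding even though it is the DF.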

Source behind an AR Leaf Device (Single-Homed Ethernet Segment)

Figure 6 shows an EVPN network with multicast traffic from a source attached to the AR leaf device called AR Leaf 4. Traffic flow is the same whether the ingress AR leaf device and AR replicator are operating in extended AR mode or not, because the source isn’t multihomed to any other leaf devices.

Without IGMP snooping or MLD snooping, and without SMET forwarding enabled:

- AR Leaf 4 forwards one copy of the multicast traffic to an advertised AR replicator device, in this case Spine 1, using the AR overlay tunnel secondary IP address that you configured on the loopback interface, lo0, for Spine 1.

- AR Leaf 4 also applies local bias forwarding rules and replicates the multicast traffic to its locally attached receiver.

- Spine 1 receives the multicast traffic on the AR overlay tunnel and replicates and forwards it on behalf of AR Leaf 4 using the usual ingress replication overlay tunnels to all the other devices in the EVPN network, including Spine 2. Spine 1 skips forwarding the traffic back to the ingress device AR Leaf 4 (according to split horizon rules).

With IGMP snooping or MLD snooping, and with SMET forwarding enabled:

- AR replicator Spine 1 further optimizes replication by only forwarding the traffic toward other devices in the EVPN network with active listeners. In this case, Spine 1 skips forwarding to AR Leaf 2.

Spine 1 also replicates and forwards the traffic to its local receivers and toward multihomed receivers or external gateways using the usual EVPN network ingress replication local bias or DF behaviors. In this case, Spine 1 forwards the traffic to a local receiver. Spine 1 uses local bias to forward to a multihomed receiver (although it is not the DF for that Ethernet segment).

Spine 2 forwards the traffic it received from Spine 1 to its local receiver. Spine 2 does a local bias check for its multihomed receiver, and skips forwarding to the multihomed receiver even though it is the DF for that Ethernet segment.
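To show how AR shifts the replication work in this use case, the following Python sketch computes the overlay copies that the AR replicator sends on behalf of the ingress AR leaf device. It is a model under assumptions and not device code: the device list is a guess at the topology in Figure 6, and the function names are hypothetical.

```python
# Assumed topology: device names as used in the use cases in this section.
DEVICES = ["Spine 1", "Spine 2", "AR Leaf 1", "AR Leaf 2",
           "AR Leaf 3", "AR Leaf 4", "Regular NVE"]


def ar_replicator_targets(replicator, ingress_leaf, devices, listeners=None):
    """Return the devices the AR replicator forwards to for an AR leaf.

    The replicator never sends the traffic back to the ingress leaf
    (split horizon). listeners=None models no snooping and no SMET.
    """
    targets = [d for d in devices if d not in (replicator, ingress_leaf)]
    if listeners is not None:
        targets = [d for d in targets if d in listeners]
    return targets


def overlay_copies_from_ingress(devices, uses_ar):
    """Count overlay copies the ingress leaf sends when nothing is pruned."""
    return 1 if uses_ar else len(devices) - 1
```

In the assumed seven-device topology, AR Leaf 4 sends one overlay copy instead of six, and Spine 1 sends five copies without snooping or four copies when AR Leaf 2 has no listeners.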

Source behind an AR Leaf Device (Multihomed Ethernet Segment) with Extended AR Mode

Figure 7 shows an EVPN network with multicast traffic from a source on a multihomed Ethernet segment operating in extended AR mode. The source is multihomed to three AR leaf devices and might send the traffic to any one of them. In this case, AR Leaf 1 is the ingress device.

Without IGMP snooping or MLD snooping, and without SMET forwarding enabled:

- AR Leaf 1 forwards one copy of the multicast traffic to one of the advertised AR replicator devices, in this case, Spine 1, using the AR overlay tunnel secondary IP address that you configured on the loopback interface, lo0, for Spine 1.

- Operating in extended AR mode:

  - AR Leaf 1 also replicates and forwards the multicast traffic to all of its multihoming peers (AR Leaf 2 and AR Leaf 3) for the source Ethernet segment.

  - Spine 1 receives the multicast traffic on the AR overlay tunnel and replicates and forwards it using the usual ingress replication overlay tunnels to the other devices in the EVPN network except AR Leaf 1 and its multihoming peers AR Leaf 2 and AR Leaf 3.

With IGMP snooping or MLD snooping, and with SMET forwarding enabled, the AR leaf and AR replicator devices further optimize multicast replication in extended AR mode, as follows:

- Besides sending to AR replicator Spine 1, AR Leaf 1 also replicates and forwards the multicast traffic only to its multihoming peers that have active listeners (AR Leaf 3).

- Spine 1 replicates traffic for AR Leaf 1 only to other devices in the EVPN network with active listeners. In this case, that includes only the regular NVE device and Spine 2.

Spine 1 also replicates and forwards the traffic to its local receivers and toward multihomed receivers or external gateways using the usual EVPN network ingress replication local bias or DF behaviors. In this case, Spine 1 uses local bias to forward to a multihomed receiver although it is not the DF for that Ethernet segment. Spine 2 receives the traffic from Spine 1 and forwards the traffic to its local receiver. Spine 2 also does a local bias check for its multihomed receiver, and skips forwarding to the multihomed receiver even though it is the DF for that Ethernet segment.
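The division of work between the ingress AR leaf device and the AR replicator in extended AR mode can be modeled as two destination lists. The following Python sketch is an illustration under assumptions: the device list is a guess at the topology in Figure 7, and the function name is hypothetical.

```python
# Assumed topology: device names as used in the use cases in this section.
DEVICES = ["Spine 1", "Spine 2", "AR Leaf 1", "AR Leaf 2",
           "AR Leaf 3", "AR Leaf 4", "Regular NVE"]


def extended_ar_plan(ingress, replicator, mh_peers, devices, listeners=None):
    """Return (leaf_sends, replicator_sends) for extended AR mode.

    The ingress leaf sends one copy to the replicator and serves its own
    multihoming peers. The replicator skips the ingress leaf and those peers.
    listeners=None models no snooping and no SMET.
    """
    def interested(device):
        return listeners is None or device in listeners

    leaf_sends = [replicator] + [p for p in mh_peers if interested(p)]
    skip = {ingress, replicator, *mh_peers}
    replicator_sends = [d for d in devices if d not in skip and interested(d)]
    return leaf_sends, replicator_sends
```

For example, with AR Leaf 1 as the ingress device, AR Leaf 2 and AR Leaf 3 as its multihoming peers, and listeners only behind AR Leaf 3, Spine 2, and the regular NVE device, AR Leaf 1 sends to Spine 1 and AR Leaf 3, and Spine 1 sends to Spine 2 and the regular NVE device.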

Configure Assisted Replication

Assisted replication (AR) helps optimize multicast traffic flow in EVPN networks. To enable AR, you configure devices in the EVPN network to operate as AR replicator and AR leaf devices. Available AR replicator devices that are better able to handle the processing load help perform multicast traffic replication and forwarding tasks for AR leaf devices.

You don’t need to configure any options on AR replicator or AR leaf devices to accommodate devices that don't support AR. We refer to devices that don't support AR as regular network virtualization edge (NVE) devices. Regular NVE devices use the usual EVPN network and overlay tunnel ingress replication independently of AR operation within the same EVPN network. AR replicator devices also don’t need to distinguish between AR leaf and regular NVE devices when forwarding multicast traffic, because AR replicator devices also use the existing ingress replication overlay tunnels for all destinations.

In general, to configure AR:

- You can enable AR on Junos OS devices in default switch EVPN instance configurations.

- (Starting in Junos OS 22.2R1) You can enable AR on Junos OS devices in MAC-VRF EVPN instance configurations with service types vlan-based or vlan-aware.

- (Starting in Junos OS Evolved 22.2R1) You can enable AR on Junos OS Evolved devices only in MAC-VRF EVPN instance configurations with service types vlan-based or vlan-aware.

- Configure IGMP Snooping or MLD Snooping with AR

- Configure an AR Replicator Device

- Configure an AR Leaf Device

Configure IGMP Snooping or MLD Snooping with AR

You can enable IGMP snooping or MLD snooping with AR to further optimize multicast forwarding in the fabric. Use the following guidelines:

- With default switch EVPN instance configurations:

  Enable IGMP snooping or MLD snooping and the relevant options for each VLAN (or all VLANs) at the [edit protocols igmp-snooping] hierarchy.

- With MAC-VRF EVPN instance configurations:

  We support IGMP snooping with MAC-VRF instance configurations. Enable IGMP snooping and the relevant options for each VLAN (or all VLANs) in the MAC-VRF routing instances at the [edit routing-instances mac-vrf-instance-name protocols igmp-snooping] hierarchy.

- When you configure AR and OISM roles on the same device, configure IGMP snooping for each of the OISM revenue VLANs, SBD, and the M-VLAN (if using the M-VLAN method for external multicast).

See Supported IGMP or MLD Versions and Group Membership Report Modes for details on any-source multicast (ASM) and source-specific multicast (SSM) support with IGMP or MLD in EVPN-VXLAN fabrics.

See for more on considerations to configure OISM with AR to support both IGMPv2 receivers and IGMPv3 receivers.

When you enable IGMP snooping or MLD snooping with AR or OISM for multicast traffic in EVPN-VXLAN fabrics, you also automatically enable the selective multicast forwarding (SMET) optimization feature. See Overview of Selective Multicast Forwarding.

Configure an AR Replicator Device

Devices you configure as AR replicator devices advertise their AR capabilities and AR IP address to the EVPN network. The AR IP address is a loopback interface address you configure on the AR replicator device. AR leaf devices receive these advertisements to learn about available AR replicators to which the leaf devices can offload multicast replication and forwarding tasks. AR leaf devices automatically load-balance among multiple available AR replicator devices.

If you want to enable AR and OISM together in your fabric, you can assign the AR replicator role in standalone or collocated mode:

- In standalone mode, you assign the AR replicator role to devices that don't act as OISM border leaf devices. These devices are usually the lean spine devices in the ERB overlay fabrics that support OISM.

  Note: On QFX5130-32CD and QFX5700 switches, we support only standalone mode.

- In collocated mode, you can assign the AR replicator role on OISM border leaf devices.

In either case, the AR replicator device needs the common OISM tenant VRF instances, revenue VLANs, SBD, and corresponding IRB interfaces to properly forward the multicast traffic across the L3 VRFs. You'll need to configure these items as part of the AR configuration on the standalone AR replicator device. (See step 4 below.) In collocated mode, you configure these items as part of the OISM configuration as well as other OISM role-specific configuration.

To configure an AR replicator device:

Configure an AR Leaf Device

Devices you configure as AR leaf devices receive traffic from multicast sources and offload the replication and forwarding to an AR replicator device. AR replicator devices advertise their AR capabilities and AR IP address, and AR leaf devices automatically load-balance among the available AR replicators.

If you want to enable AR and OISM together in your fabric, you can configure the AR leaf role on any devices configured in the OISM border leaf or server leaf roles in the fabric. For details on OISM leaf device configuration, see:

- Configure Common OISM Elements on Border Leaf Devices and Server Leaf Devices

- Configure Border Leaf Device OISM Elements with Classic L3 Interface Method

- Configure Border Leaf Device OISM Elements with Non-EVPN IRB Method

To configure an AR leaf device:

Verify Assisted Replication Setup and Operation

Several commands help verify that AR replicator devices have advertised their AR IP address and capabilities. Some commands verify that AR leaf devices have learned how to reach available AR replicator devices and EVPN multihoming peers to operate in extended AR mode.

Change History Table

Feature support is determined by the platform and release you are using. Use Feature Explorer to determine if a feature is supported on your platform.