Managing Virtual Network Functions Using JDM

Understanding Virtual Network Functions

Virtualized network functions (VNFs) include all virtual entities that can be launched and managed from the Juniper Device Manager (JDM). Currently, virtual machines (VMs) are the only VNF type that is supported.

There are several components in a JDM environment:

- JDM: Manages the life cycle for all service VMs. JDM also provides a CLI with configuration persistence or the ability to use NETCONF for scripting and automation.

- Primary Junos OS VM: A system VM that is the primary virtual device. This VM is always present when the system is running.

- Other Junos OS VMs: These VMs are service VMs and are activated dynamically by an external controller. A typical example of this type of VM is a vSRX Virtual Firewall instance.

- Third-party VNFs: JDM supports the creation and management of third-party VMs such as Ubuntu Linux VMs.

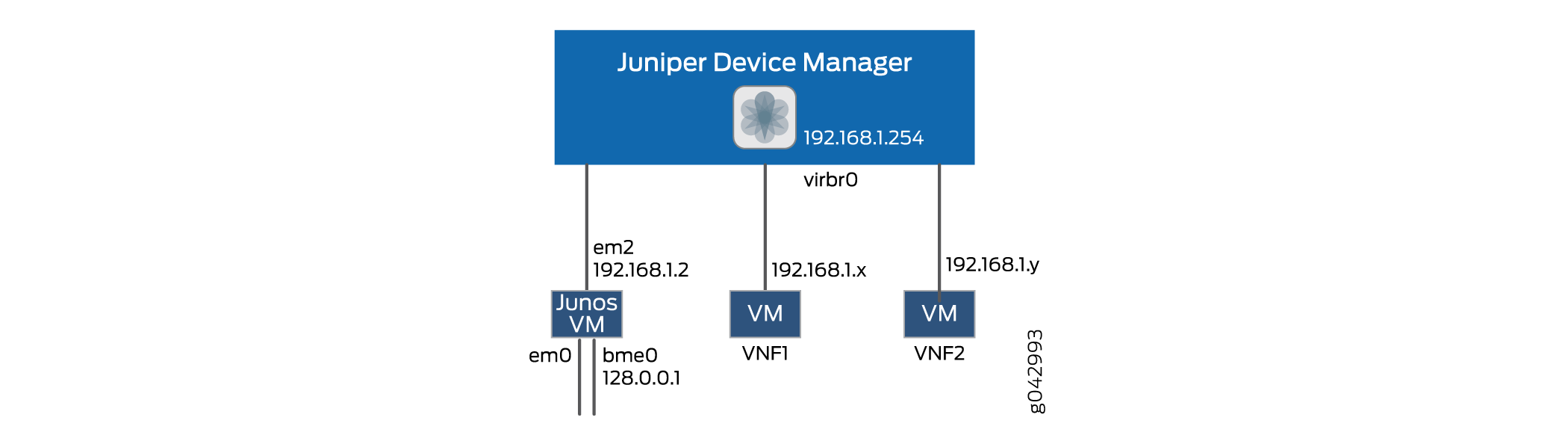

The JDM architecture provides an internal network that connects all VMs to the JDM as shown in Figure 1.

The JDM can reach any VNF using an internal network (192.0.2.1/24).

Up to Junos OS Release 15.1X53-D470, the liveliness IP is in the 192.168.1.0/24 subnet. In all later Junos OS releases, the liveliness IP is in the 192.0.2.0/24 subnet.
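As a quick illustration of the addressing described above, the following Python sketch uses the standard ipaddress module to derive the internal subnet from 192.0.2.1/24 and to test whether an address falls inside it. The helper name on_internal_network and the sample addresses are illustrative assumptions and are not part of JDM.

```python
import ipaddress

# Internal JDM address as given in the text; the subnet is derived from it.
JDM_INTERNAL = ipaddress.ip_interface("192.0.2.1/24")


def on_internal_network(vnf_ip: str) -> bool:
    """Return True if vnf_ip lies in the JDM internal subnet (hypothetical helper)."""
    return ipaddress.ip_address(vnf_ip) in JDM_INTERNAL.network
```

For example, on_internal_network("192.0.2.4") is true, while an address from the older 192.168.1.0/24 liveliness subnet is not in this network.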

A VNF can own or share management ports and NIC ports in the system.

All VMs run in isolation and a state change in one VM does not affect another VM. When the system restarts, the service VMs are brought online as specified in the persistent configuration file. When you gracefully shut down the system, all VMs including the Junos VMs are shut down.

Table 1 provides a glossary of commonly used VNF acronyms and terms.

| Term | Definition |
|---|---|
| JCP | Junos Control Plane (also known as the primary Junos OS VM) |
| JDM | Juniper Device Manager |
| NFV | Network Functions Virtualization |
| VM | Virtual Machine |
| VNF | Virtualized Network Function |

Prerequisites to Onboard Virtual Network Functions on NFX250 Devices

You can onboard and manage Juniper VNFs and third-party VNFs on NFX devices through the Junos Control Plane (JCP).

The number of VNFs that you can onboard on the device depends on the availability of system resources such as the number of CPUs and system memory.

Before you onboard the VNFs, it is recommended to check the available system resources such as CPUs, memory, and storage for VNFs. For more information, see Managing the VNF Life Cycle.

Prerequisites for VNFs

To instantiate VNFs, the NFX devices support:

- KVM-based hypervisor deployment

- OVS or Virtio interface drivers

- raw or qcow2 VNF file types

- (Optional) SR-IOV

- (Optional) CD-ROM and USB configuration drives

- (Optional) Hugepages for memory requirements

Managing the VNF Life Cycle

You can use the JDM CLI to manage the VNF. Additionally, libvirt software offers extensive virtualization features. To ensure that you are not limited by the CLI, JDM provides an option to operate a VNF using an XML descriptor file. Network Configuration Protocol (NETCONF) supports all VNF operations. Multiple VNFs can coexist in a system, and you can configure multiple VNFs using either an XML descriptor file or an image.

Ensure that VNF resources that are specified in the XML descriptor file do not exceed the available system resources.

This topic covers the life-cycle management of a VNF.

- Planning Resources for a VNF

- Managing the VNF Image

- Preparing the Bootstrap Configuration

- Launching a VNF

- Allocating Resources for a VNF

- Managing VNF States

- Managing VNF MAC Addresses

- Managing MTU

- Accessing a VNF from JDM

- Viewing List of VNFs

- Displaying the VNF Details

- Deleting a VNF

Planning Resources for a VNF

Purpose

Before launching a VNF, it is important to check the system inventory and confirm that the resources required by the VNF are available. The VNF must be designed and configured properly so that its resource requirements do not exceed the available capacity of the system.

The output of the show system inventory command displays only the current snapshot of system resource usage. When you start a VNF, the available resources might be less than what was available when you installed the VNF package. Before starting a VNF, you must check the system resource usage.

Some of the physical CPUs are reserved by the system. Except for the following physical CPUs, all others are available for user-defined VNFs:

The following table provides the list of physical CPUs that are reserved for NFX250-LS1.

| CPU Core | Allocation |
|---|---|
| 0 | Host, JDM, and JCP |
| 4 | Host bridge |
| 7 | IPSec |

The following table provides the list of physical CPUs that are reserved for NFX250-S1, NFX250-S2, and NFX250-S1E devices.

| CPU Core | Allocation |
|---|---|
| 0 | Host, JDM, and JCP |
| 6 | Host bridge |
| 7 | IPSec |
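The reserved-core tables can be turned into a simple pre-check before pinning virtual CPUs. The following Python sketch is only an illustration: the dictionary transcribes the two tables above, and the function name reserved_conflicts is a hypothetical helper, not a JDM feature.

```python
# Reserved physical CPU cores per model, transcribed from the tables above.
_S_SERIES = {0: "Host, JDM, and JCP", 6: "Host bridge", 7: "IPSec"}
RESERVED_CPUS = {
    "NFX250-LS1": {0: "Host, JDM, and JCP", 4: "Host bridge", 7: "IPSec"},
    "NFX250-S1": _S_SERIES,
    "NFX250-S2": _S_SERIES,
    "NFX250-S1E": _S_SERIES,
}


def reserved_conflicts(model, pcpus):
    """Return {cpu: allocation} for each requested physical CPU that is reserved."""
    reserved = RESERVED_CPUS[model]
    return {cpu: reserved[cpu] for cpu in pcpus if cpu in reserved}
```

An empty result means that none of the requested physical CPUs is reserved on that model. It does not confirm that the cores are currently free, which still requires checking the system inventory.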


Managing the VNF Image

To load a VNF image on the device from a remote location, use the file-copy command. Alternatively, you can use the NETCONF command file-put to load a VNF image.

You must save the VNF image in the /var/third-party/images directory.

Preparing the Bootstrap Configuration

You can bootstrap a VNF by attaching either a CD or a USB storage device that contains a bootstrap-config ISO file.

A bootstrap configuration file must contain an initial configuration that allows the VNF to be accessible from an external controller, and accepts SSH, HTTP, or HTTPS connections from an external controller for further runtime configurations.

An ISO disk image must be created offline for the bootstrap configuration file as follows:

user@jdm> request genisoimage bootstrap-config-filename iso-filename

Launching a VNF

You can launch a VNF by configuring the VNF name, and specifying either the path to an XML descriptor file or to an image.

While launching a VNF with an image, two VNF interfaces are added by default. These interfaces are required for management and internal network. For those two interfaces, the target Peripheral Component Interconnect (PCI) addresses, such as 0000:00:03:0 and 0000:00:04:0 are reserved.

To launch a VNF using an XML descriptor file:

user@jdm# set virtual-network-functions vnf-name init-descriptor file-path

user@jdm# commit

To launch a VNF using an image:

user@jdm# set virtual-network-functions vnf-name image file-path

user@jdm# commit

To specify a UUID for the VNF:

user@jdm# set virtual-network-functions vnf-name [uuid vnf-uuid]

uuid is an optional parameter, and it is recommended to allow the system to allocate a UUID for the VNF.

You cannot change the init-descriptor or image configuration after you have saved and committed it. To change the init-descriptor or image for a VNF, you must delete the VNF and create it again.

Commit checks are applicable only for VNF configurations that are based on image specification through JDM CLI, and not for VNF configurations that are based on init-descriptor XML file.

For creating VNFs using image files, ensure the following:

- You must use unique files for the image, disk, and USB devices within a VNF and across VNFs. The exception is an iso9660 type file, which can be attached to multiple VNFs.

- A file specified as image in raw format should be a block device with a partition table and a boot partition.

- A file specified as image in qcow2 format should be a valid qcow2 file.
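The file-uniqueness rule above can be checked offline before committing a configuration. The following Python sketch assumes a hypothetical in-memory description of the planned VNFs (a mapping from VNF name to a list of path and file-type pairs); the function name, the data layout, and the file paths in the usage note are illustrative and do not correspond to any JDM API.

```python
from collections import defaultdict


def find_conflicting_files(vnf_files):
    """Return sorted paths that are used more than once but are not shareable.

    vnf_files maps a VNF name to a list of (path, file_type) tuples.
    Only files of type "iso9660" may be attached to multiple VNFs.
    """
    uses = defaultdict(list)  # path -> list of (vnf, file_type)
    for vnf, files in vnf_files.items():
        for path, file_type in files:
            uses[path].append((vnf, file_type))

    conflicts = []
    for path, refs in uses.items():
        shareable = all(file_type == "iso9660" for _, file_type in refs)
        if len(refs) > 1 and not shareable:
            conflicts.append(path)
    return sorted(conflicts)
```

For instance, if two VNFs both reference /var/third-party/images/a.qcow2 and /var/third-party/boot.iso (an iso9660 file), only the qcow2 path is reported.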

Allocating Resources for a VNF

This topic covers the process of allocating various resources to a VNF.

- Specifying CPU for VNF

- Allocating Memory for a VNF

- Configuring VNF Storage Devices

- Configuring VNF Interfaces and VLANs

Specifying CPU for VNF

To specify the number of virtual CPUs that are required for a VNF, type the following command:

user@jdm# set virtual-network-functions vnf-name virtual-cpu count 1-4

To pin a virtual CPU to a physical CPU, type the following command:

user@jdm# set virtual-network-functions vnf-name virtual-cpu vcpu-number physical-cpu pcpu-number

The physical CPU number can either be a number or a range. By default, a VNF is allocated with one virtual CPU that is not pinned to any physical CPU.

You cannot change the CPU configuration of a VNF when the VNF is in running state. Restart the VNF for changes to take effect.

To enable hardware-virtualization or hardware-acceleration for VNF CPUs, type the following command:

user@jdm# set virtual-network-functions vnf-name virtual-cpu features hardware-virtualization

Allocating Memory for a VNF

To specify the maximum primary memory that the VNF can use, enter the following command:

user@jdm# set virtual-network-functions vnf-name memory size size

By default, 1 GB of memory is allocated to a VNF.

You cannot change the memory configuration of a VNF if the VNF is in running state. Restart the VNF for changes to take effect.

To allocate hugepages for a VNF, type the following command:

user@jdm# set virtual-network-functions vnf-name memory features hugepages [page-size page-size]

page-size is an optional parameter. Possible values are 1024 for a page size of 1 GB and 2 for a page size of 2 MB. The default value is 1024.

Configuring hugepages is recommended only if the enhanced orchestration mode is enabled. If the enhanced orchestration mode is disabled and if VNF requires hugepages, the VNF XML descriptor file should contain the XML tag with hugepages configuration.

For VNFs that are created using image files, there is a maximum limit of the total memory that can be configured for all user-defined VNFs including memory based on hugepages and memory not based on hugepages.

Table 4 lists the maximum hugepage memory that can be reserved for the various NFX250 models.

| Model | Memory | Maximum Hugepage Memory (GB) | Maximum Hugepage Memory (GB) for CSO-SDWAN |
|---|---|---|---|
| NFX250-S1 | 16 GB | 8 | - |
| NFX250-S1E | 16 GB | 8 | 13 |
| NFX250-S2 | 32 GB | 24 | 13 |
| NFX250-LS1 | 16 GB | 8 | - |
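To make the hugepage arithmetic concrete, the following Python sketch computes how many hugepages a given amount of memory needs and checks a planned total against the per-model limits in Table 4. It is a rough planning aid under stated assumptions: the function names are hypothetical, the memory argument is assumed to be in MB, and the CSO-SDWAN column is not modeled.

```python
import math

# Maximum hugepage memory in GB per model, from Table 4 (non-CSO-SDWAN column).
MAX_HUGEPAGE_GB = {
    "NFX250-S1": 8,
    "NFX250-S1E": 8,
    "NFX250-S2": 24,
    "NFX250-LS1": 8,
}


def pages_needed(memory_mb, page_size=1024):
    """Number of hugepages needed; page_size is 1024 (1 GB pages) or 2 (2 MB pages)."""
    if page_size not in (1024, 2):
        raise ValueError("page-size must be 1024 or 2")
    return math.ceil(memory_mb / page_size)


def hugepage_total_fits(model, requests_gb):
    """Return True if the summed hugepage requests (GB) fit the model's limit."""
    return sum(requests_gb) <= MAX_HUGEPAGE_GB[model]
```

With these assumptions, 4096 MB needs 4 pages of 1 GB or 2048 pages of 2 MB, and two VNFs requesting 4 GB and 6 GB of hugepage memory would exceed the 8 GB limit of an NFX250-S1.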

Configuring VNF Storage Devices

To add a virtual CD or to update the source file of a virtual CD, enter the following command:

user@jdm# set virtual-network-functions vnf-name storage device-name type cdrom source file file-name

To add a virtual USB storage device, enter the following command:

user@jdm# set virtual-network-functions vnf-name storage device-name type usb source file file-name

To attach an additional hard disk, enter the following command:

user@jdm# set virtual-network-functions vnf-name storage device-name type disk [bus-type virtio | ide] [file-type raw | qcow2] source file file-name

To delete a virtual CD, USB storage device, or a hard disk from the VNF, enter the following command:

user@jdm# delete virtual-network-functions vnf-name storage device-name

After attaching or detaching a CD from a VNF, you must restart the device for changes to take effect. The CD detach operation fails if the device is in use within the VNF.

A VNF supports one virtual CD, one virtual USB storage device, and multiple virtual hard disks.

You can update the source file in a CD or USB storage device while the VNF is in running state.

You must save the source file in the /var/third-party directory and the file must have read and write permission for all users.

For VNFs created using image files, ensure the following:

- A file specified as a hard disk in raw format should be a block device with a partition table.

- A file specified as a hard disk in qcow2 format should be a valid qcow2 file.

- A file specified as USB should be a block device with a partition table, or an iso9660 type file.

- A file specified as CD-ROM should be a block device of type iso9660.

- If a VNF has an image specified with bus-type=ide, it should not have any device attached with name hda.

- If a VNF has an image specified with bus-type=virtio, then it should not have any device attached with name vda.
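The device-count and device-name rules in this section can be expressed as a small validation routine. The following Python sketch is illustrative only: the function name validate_storage and the dictionary layout used to describe devices are assumptions made for this example, not a JDM interface.

```python
def validate_storage(image_bus_type, devices):
    """Return a list of problems found in a planned VNF storage layout.

    image_bus_type is "ide" or "virtio" (the bus type of the VNF image).
    devices is a list of dicts with keys "name" and "type", where type is
    one of "cdrom", "usb", or "disk".
    """
    problems = []

    # A VNF supports one virtual CD and one virtual USB storage device.
    for kind in ("cdrom", "usb"):
        if sum(1 for d in devices if d["type"] == kind) > 1:
            problems.append(f"more than one {kind} device")

    # The image occupies hda (ide) or vda (virtio), so no device may use that name.
    reserved = {"ide": "hda", "virtio": "vda"}.get(image_bus_type)
    if reserved and any(d["name"] == reserved for d in devices):
        problems.append(f"device name {reserved} conflicts with {image_bus_type} image")

    return problems
```

An empty list means that the layout passes these particular checks. The sketch does not inspect file contents, so the partition-table and iso9660 requirements still need to be verified separately.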

Configuring VNF Interfaces and VLANs

You can create a VNF interface and attach it to a physical NIC port, management interface, or VLANs.

Managing VNF States

By default, the VNF is autostarted on committing the VNF config.

Managing VNF MAC Addresses

VNF interfaces that are defined, either using a CLI or specified in an init-descriptor XML file, are assigned a globally-unique and persistent MAC address. A common pool of 64 MAC addresses is used to assign MAC addresses. You can configure a MAC address other than that available in the common pool, and this address will not be overwritten.

To delete or modify the MAC address of a VNF interface, you must stop the VNF, make the necessary changes, and then start the VNF.

The MAC address specified for a VNF interface can be either a system MAC address or a user-defined MAC address.

The MAC address specified from the system MAC address pool must be unique for VNF interfaces.

Managing MTU

The maximum transmission unit (MTU) is the largest data unit that can be forwarded without fragmentation. You can configure either 1500 bytes or 2048 bytes as the MTU size. The default MTU value is 1500 bytes.

- MTU configuration is supported only on VLAN interfaces.

- The maximum number of VLAN interfaces on the OVS that can be configured in the system is 20.

- The maximum size of the MTU for a VNF interface is 2048 bytes.
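The MTU limits above lend themselves to a quick sanity check on a planned configuration. The following Python sketch assumes a hypothetical mapping from VLAN interface name to the requested MTU (None meaning the default); the function and constant names are illustrative and are not JDM commands.

```python
ALLOWED_MTU = (1500, 2048)      # the only MTU sizes the text allows
DEFAULT_MTU = 1500              # default MTU in bytes
MAX_OVS_VLAN_INTERFACES = 20    # system-wide limit on OVS VLAN interfaces


def validate_vlan_mtus(vlan_mtus):
    """Return a list of error strings for a {vlan_interface: mtu_or_None} plan."""
    errors = []
    if len(vlan_mtus) > MAX_OVS_VLAN_INTERFACES:
        errors.append(
            f"{len(vlan_mtus)} VLAN interfaces exceed the limit of {MAX_OVS_VLAN_INTERFACES}"
        )
    for name, mtu in vlan_mtus.items():
        effective = DEFAULT_MTU if mtu is None else mtu
        if effective not in ALLOWED_MTU:
            errors.append(f"{name}: MTU {effective} is not 1500 or 2048")
    return errors
```

A plan such as {"vlan100": None, "vlan200": 2048} produces no errors, whereas a request for an MTU of 9000 is flagged.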

Accessing a VNF from JDM

You can access a VNF from JDM using either SSH or a VNF console.

Use ctrl-] to exit the virtual console.

Do not use a Telnet session to run the command.

Viewing List of VNFs

    user@jdm> show virtual-network-functions
    ID       Name          State       Liveliness
    -----------------------------------------------------------------
    3        vjunos0       running     alive
    -        vsrx          shut off    down

The Liveliness output field of a VNF indicates whether the IP address of the VNF is reachable from JDM. The default IP address of the liveliness bridge is 192.0.2.1/24.

Displaying the VNF Details

To display VNF details:

    user@jdm> show virtual-network-functions vnf-name
    Virtual Machine Information
    ---------------------------
    Name:           vsrx
    IP Address:     192.0.2.4
    Status:         Running
    Liveliness:     Up
    VCPUs:          1
    Maximum Memory: 2000896
    Used Memory:    2000896

    Virtual Machine Block Devices
    -----------------------------
    Target   Source
    ---------------
    hda      /var/third-party/images/vsrx/media-srx-ffp-vsrx-vmdisk-15.1-2015-05-29_X_151_X49.qcow2
    hdf      /var/third-party/test.iso

Deleting a VNF

To delete a VNF:

user@jdm# delete virtual-network-functions vnf-name

The VNF image remains in the disk even after you delete the VNF.

Non-Root User Access for VNF Console

You can use Junos OS to create, modify, or delete VNFs on the NFX Series routers.

Junos OS CLI allows the following management operations on VNFs:

| Operation | CLI |
|---|---|
| start | |
| stop | |
| restart | |
| console access | |
| ssh access | request virtual-network-functions <vnf-name> ssh [user-name <user-name>] |
| telnet access | request virtual-network-functions <vnf-name> ssh [user-name <user-name>] |

Table 6 lists the user access permissions for the VNF management options:

| Operation | | | | |
|---|---|---|---|---|
| start | command available and works | command available and works | command not available | command not available |
| stop | command available and works | command available and works | command not available | command not available |
| restart | command available and works | command available and works | command not available | command not available |
| console access | command available and works | command available; but not supported | command not available | command not available |
| ssh access | command available and works | command available; but not supported | command not available | command not available |
| telnet access | command available and works | command available; but not supported | command not available | command not available |

Starting in Junos OS 24.1R1, the Junos OS CLI allows a non-root user to perform management operations on VNFs.

A new Junos OS user permission, vnf-operation, makes the request virtual-network-functions CLI hierarchy available to Junos OS users that do not belong to the root and the super-user class.

You can add the user permission to a custom user class using the statement vnf-operation at [edit system login class custom-user permissions].

Table 3 lists the VNF management options available for a user belonging to a custom Junos OS user class with vnf-operation permission.

| Operation | | | User of a custom Junos OS user class with |
|---|---|---|---|
| start | command available and works | command available and works | command available and works |
| stop | command available and works | command available and works | command available and works |
| restart | command available and works | command available and works | command available and works |
| console access | command available and works | command available and works | command available and works |
| ssh access | command available and works | command available and works | command available and works |

Accessing the VNF Console

Starting in Junos OS 24.1R1, the following message is displayed when you access the console initially:

    Trying 192.168.1.1...
    Connected to 192.168.1.1.
    Escape character is '^]'.

The messages Trying 192.168.1.1... and Connected to 192.168.1.1. come from the telnet client that is launched using the Junos OS CLI command request virtual-network-functions <vnf-name> console.

The IP addresses present in the message cannot be replaced with the name of the VNF.

Exiting the VNF Console

Starting in Junos OS 24.1R1, when you use the escape sequence ^], the console session terminates and the telnet command prompt is displayed. You must enter quit or close (or the short forms q or c) to exit from the telnet command prompt and return to the Junos OS command prompt.

    su-user@host> request virtual-network-functions testvnf1 console
    Trying 192.168.1.1...
    Connected to 192.168.1.1.
    Escape character is '^]'.

    CentOS Linux 7 (Core)
    Kernel 3.10.0-1160.49.1.el7.x86_64 on an x86_64

    centos2 login:
    telnet> q
    Connection closed.

    su-user@host> exit

Creating the vSRX Virtual Firewall VNF on the NFX250 Platform

vSRX Virtual Firewall is a virtual security appliance that provides security and networking services in virtualized private or public cloud environments. It can be run as a virtual network function (VNF) on the NFX250 platform. For more details on vSRX Virtual Firewall, see the product documentation page on the Juniper Networks website at https://www.juniper.net/.

To activate the vSRX Virtual Firewall VNF from the Juniper Device Manager (JDM) command-line interface:

Configuring the vMX Virtual Router as a VNF on NFX250

The vMX router is a virtual version of the Juniper MX Series 5G Universal Routing Platform. To quickly migrate physical infrastructure and services, you can configure vMX as a virtual network function (VNF) on the NFX250 platform. For more details on the configuration and management of vMX, see vMX Overview.

Before you configure the VNF, check the system inventory and confirm that the required resources are available. vMX as VNF must be designed and configured so that its resource requirements do not exceed the available capacity of the system. Ensure that a minimum of 20 GB space is available on NFX250.

To configure vMX as VNF on NFX250 using the Juniper Device Manager (JDM) command-line interface (CLI):

Virtual Route Reflector on NFX250 Overview

The virtual Route Reflector (vRR) feature allows you to implement route reflector capability using a general purpose virtual machine that can be run on a 64-bit Intel-based blade server or appliance. Because a route reflector works in the control plane, it can run in a virtualized environment. A virtual route reflector on an Intel-based blade server or appliance works the same as a route reflector on a router, providing a scalable alternative to full mesh internal BGP peering.

Starting in Junos OS Release 17.3R1, you can implement the virtual route reflector (vRR) feature on the NFX250 Network Services platform. The Juniper Networks NFX250 Network Services Platform comprises the Juniper Networks NFX250 devices, which are Juniper Networks' secure, automated, software-driven customer premises equipment (CPE) devices that deliver virtualized network and security services on demand. NFX250 devices use the Juniper Device Manager (JDM) for virtual machine (VM) lifecycle and device management, and for a host of other functions. The JDM CLI is similar to the Junos OS CLI in look and provides the same added-value facilities as the Junos OS CLI.

- Starting in vRR Junos OS Release 20.1R1, both Linux Bridge (LB) and enhanced orchestration (EO) modes are supported for vRR. It is recommended to instantiate vRR VNF in EO mode.

- Support for LB mode on NFX250 devices ended in NFX Junos OS Release 18.4.

- Support for NFX-2 software architecture on NFX250 devices ended in NFX Junos OS Release 19.1R1.

- Starting in NFX Host Release 21.4R2 and vRR Junos OS Release 21.4R2, you can deploy a vRR VNF on an NFX250 NextGen device. Only enhanced orchestration (EO) mode is supported for vRR.

Benefits of vRR

vRR has the following benefits:

- Scalability: By implementing the vRR feature, you gain scalability improvements, depending on the server core hardware on which the feature runs. Also, you can implement virtual route reflectors at multiple locations in the network, which helps scale the BGP network with lower cost. The maximum routing information base (RIB) scale with IPv4 routes on NFX250 is 20 million.

- Faster and more flexible deployment: You install the vRR feature on an Intel server, using open source tools, which reduces your router maintenance.

- Space savings: Hardware-based route reflectors require central office space. You can deploy the virtual route reflector feature on any server that is available in the server infrastructure or in the data centers, which saves space.

For more information about vRR, see the Virtual Route Reflector (vRR) Documentation.

Software Requirements for vRR on NFX250

The following software components are required to support vRR on NFX250:

- Juniper Device Manager: The Juniper Device Manager (JDM) is a low-footprint Linux container that supports Virtual Machine (VM) lifecycle management, device management, Network Service Orchestrator module, service chaining, and virtual console access to VNFs including vSRX Virtual Firewall, vjunos, and now vRR as a VNF.

- Junos Control Plane: Junos Control Plane (JCP) is the Junos VM running on the hypervisor. You can use JCP to configure the network ports of the NFX250 device, and JCP runs by default as vjunos0 on NFX250. You can log on to JCP from JDM using the SSH service, and the command-line interface (CLI) is the same as Junos.

Configuring vRR as a VNF on NFX250

You can configure vRR as a VNF in either Linux Bridge (LB) mode or Enhanced Orchestration (EO) mode.

- Configuring vRR VNF on NFX250 in Linux Bridge Mode

- Configuring vRR VNF on NFX250 in Enhanced Orchestration Mode

Configuring vRR VNF on NFX250 in Linux Bridge Mode

- Configuring Junos Device Manager (JDM) for vRR

- Verifying that the Management IP is Configured

- Verifying that the Default Routes are Configured

- Configuring Junos Control Plane (JCP) for vRR

- Launching vRR

- Enabling Liveliness Detection of vRR VNF from JDM

Configuring Junos Device Manager (JDM) for vRR

By default, the Junos Device Manager (JDM) virtual machine comes up after NFX250 is powered on. By default, enhanced orchestration mode is enabled on JDM. While configuring vRR, disable enhanced orchestration mode, remove the interfaces configuration, and reboot the NFX device.

To configure the Junos Device Manager (JDM) virtual machine for vRR, perform the following steps:

Verifying that the Management IP is Configured

Verifying that the Default Routes are Configured

Purpose

Ensure that the default routes are configured for DNS and gateway access.

Action

From configuration mode, enter the show route command.

user@jdm# show route

    destination 172.16.0.0/12 next-hop 10.48.15.254;
    destination 192.168.0.0/16 next-hop 10.48.15.254;
    destination 207.17.136.0/24 next-hop 10.48.15.254;
    destination 10.0.0.0/10 next-hop 10.48.15.254;
    destination 10.64.0.0/10 next-hop 10.48.15.254;
    destination 10.128.0.0/10 next-hop 10.48.15.254;
    destination 10.192.0.0/11 next-hop 10.48.15.254;
    destination 10.224.0.0/12 next-hop 10.48.15.254;
    destination 10.240.0.0/13 next-hop 10.48.15.254;
    destination 10.248.0.0/14 next-hop 10.48.15.254;
    destination 10.252.0.0/15 next-hop 10.48.15.254;
    destination 10.254.0.0/16 next-hop 10.48.15.254;
    destination 66.129.0.0/16 next-hop 10.48.15.254;
    destination 10.48.0.0/15 next-hop 10.48.15.254;

Configuring Junos Control Plane (JCP) for vRR

By default, the Junos Control Plane (JCP) VM comes up after NFX250 is powered on. The JCP virtual machine controls the front panel ports in the NFX250 device. VLANs provide the bridging between the virtual route reflector VM interfaces and the JCP VMs using sxe ports. The front panel ports are configured as part of the same VLAN bridging of the vRR ports. As a result, packets are transmitted or received using these bridging ports between JCP instead of the vRR VNF ports.

To configure JCP for vRR, perform the following steps:

Launching vRR

You can launch the vRR VNF as a virtualized network function (VNF) using the XML configuration templates that are part of the vRR image archive.

Enabling Liveliness Detection of vRR VNF from JDM

Liveliness of a VNF indicates whether the IP address of the VM is accessible to the Juniper Device Manager (JDM). If the liveliness of the VM is down, the VM is not reachable from JDM.

You can view the liveliness of VMs using the show virtual-machines command. By default, the liveliness of vRR VNF is shown as down.

Before creating the vRR VNF, it is recommended that you enable liveliness detection in JDM.

To enable liveliness detection of the vRR VNF from JDM, perform the following steps:

Configuring vRR VNF on NFX250 in Enhanced Orchestration Mode

Before you configure the vRR VNF, check the system inventory and confirm that the required resources are available by using the show system visibility command. vRR as VNF must be designed and configured so that its resource requirements do not exceed the available capacity of the system.

You can instantiate the vRR VNF in Enhanced Orchestration (EO) mode by using the JDM CLI configuration and without using the XML descriptor file. EO mode uses Open vSwitch (OVS) as NFV backplane for bridging the interfaces.

To activate the vRR VNF from the Juniper Device Manager (JDM) CLI:

Configuring Cross-connect

The Cross-connect feature enables traffic switching between any two OVS interfaces such as VNF interfaces or physical interfaces such as hsxe0 and hsxe1 that are connected to the OVS. You can bidirectionally switch either all traffic or traffic belonging to a particular VLAN between any two OVS interfaces.

This feature does not support unidirectional traffic flow.

The Cross-connect feature supports the following:

- Unconditional cross-connect between two VNF interfaces for all network traffic.

- VLAN-based traffic forwarding between VNF interfaces, which supports the following functions:

  - Provides an option to switch traffic based on a VLAN ID.

  - Supports network traffic flow from trunk to access port.

  - Supports network traffic flow from access to trunk port.

  - Supports VLAN PUSH, POP, and SWAP operations.

To configure cross-connect:

Configuring Analyzer VNF and Port-mirroring

The Port-mirroring feature allows you to monitor network traffic. If the feature is enabled on a VNF interface, the OVS system bridge sends a copy of all network packets of that VNF interface to the analyzer VNF for analysis. You can use the port-mirroring or analyzer JDM commands for analyzing the network traffic.

- Port-mirroring is supported only on VNF interfaces that are connected to an OVS system bridge.

- VNF interfaces must be configured before configuring port-mirroring options.

- If the analyzer VNF is already active when you configure it, you must restart the VNF for changes to take effect.

- You can configure up to four input ports and only one output port for an analyzer rule.

- Output ports must be unique in all analyzer rules.

- After changing the configuration of the input VNF interfaces, you must deactivate and then activate the analyzer rules that reference them, and restart the analyzer VNF.

To configure the analyzer VNF and enable port-mirroring:

Change History Table

Feature support is determined by the platform and release you are using. Use Feature Explorer to determine if a feature is supported on your platform.