Configuration Walkthrough

This walkthrough provides the steps required to configure the Collapsed Fabric with Access Switches and Juniper Apstra JVDE. For more detailed step-by-step configuration information for any procedure, see the Juniper Apstra User Guide. This walkthrough includes notes that provide the configuration steps as well as additional configuration guidance.

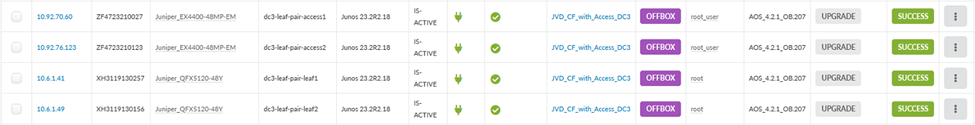

This walkthrough details the configuration of the baseline design, as used during validation in the Juniper data center validation test lab. The baseline design consists of two QFX5120-48Y switches in the collapsed spine role, and two EX4400-48MP switches in the access switch role. The goal of this JVDE is to provide options so that the baseline switch platform can be replaced with any validated switch platform for that role, as described in the Juniper Hardware Components section. To provide this JVDE configuration while keeping this walkthrough a manageable length, only the baseline design platform is used during this configuration walkthrough.

Throughout this guide you will note that several objects within Apstra are prepended with “DC3”. This nomenclature is simply an artefact of our test environment. There is no requirement for you to use identical nomenclature in your deployment. Juniper Apstra can manage multiple networks from a single instance by using multiple blueprints. The network design references in this document simply happen to be the third in the set of network designs we maintain for regular testing.

Apstra: Configure Apstra Server and Add Switches

This document does not cover the installation of Juniper Apstra. For more information about Juniper Apstra installation, see the Juniper Apstra Installation and Upgrade Guide or the Installing Juniper Apstra Quick Start Guide.

The first step for installing Juniper Apstra is to configure the Apstra Server VM. After setting up this VM and establishing a connection to it, a configuration wizard launches. You configure the Apstra server password, the Apstra UI password, and other network configuration parameters using this wizard.

Apstra: Management of Junos OS Device

There are two methods of adding Juniper devices into Apstra:

To add devices manually (recommended):

- In the Apstra UI, navigate to Devices > Agents > Create Offbox Agents. This requires the devices to be configured with a minimum of the root password and management IP.

To add devices through ZTP:

- To add devices from the Apstra ZTP server, see the Apstra ZTP section of the Juniper Apstra User Guide.

For the purposes of this JVDE setup, a root password and management IPs were already configured on all switches prior to adding the devices to Apstra. To add switches to Apstra, first log in to the Apstra Web UI, choose a method of device addition as described above, and provide the username and password that you preconfigured on those devices during their initial setup.

Apstra pulls the configuration from Juniper devices; this baseline is called the pristine config. The Junos OS configuration ‘groups’ stanza is ignored when importing the pristine configuration, and Apstra does not validate any group configuration listed in the inheritance model (see Use Configuration Groups to Quickly Configure Devices). However, it is best practice to avoid configuring loopbacks, interfaces (except the management interface), and routing instances (except the management instance) in the pristine configuration. Apstra sets the LLDP and RSTP protocols when a device is successfully acknowledged.
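The guidance above can be checked before a pristine configuration is collected. The following is a minimal sketch, not an Apstra feature: it scans a configuration exported in Junos `set` format and lists lines that configure interfaces or routing instances other than the management ones. The function name, the set of management interface names, and the sample addresses are assumptions made for illustration.

```python
# Assumed names of management interfaces; adjust for your platforms.
MGMT_INTERFACES = {"em0", "em1", "fxp0", "vme", "me0"}
# The Junos management routing instance.
MGMT_INSTANCES = {"mgmt_junos"}


def pristine_warnings(set_config: str) -> list[str]:
    """Return 'set' lines that probably do not belong in a pristine config."""
    warnings = []
    for line in set_config.splitlines():
        words = line.split()
        if len(words) < 3 or words[0] != "set":
            continue
        stanza, name = words[1], words[2]
        # Loopbacks (lo0) are interfaces, so this check covers them too.
        if stanza == "interfaces" and name not in MGMT_INTERFACES:
            warnings.append(line.strip())
        elif stanza == "routing-instances" and name not in MGMT_INSTANCES:
            warnings.append(line.strip())
    return warnings


# Hypothetical sample configuration, for illustration only.
sample = """
set system host-name dc3-leaf1
set interfaces em0 unit 0 family inet address 10.0.0.11/24
set interfaces lo0 unit 0 family inet address 192.168.253.1/32
set routing-instances mgmt_junos routing-options static route 0.0.0.0/0 next-hop 10.0.0.1
set routing-instances Blue instance-type vrf
"""

for warning in pristine_warnings(sample):
    print("review before collecting pristine config:", warning)
```

With this sample, the loopback line and the Blue routing instance line are reported, while the management interface and management instance lines are accepted.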

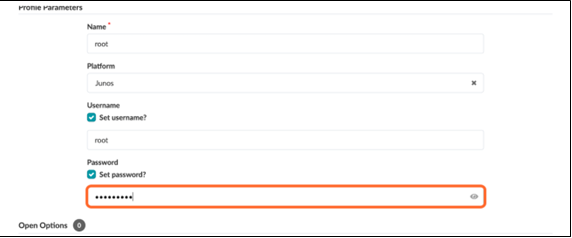

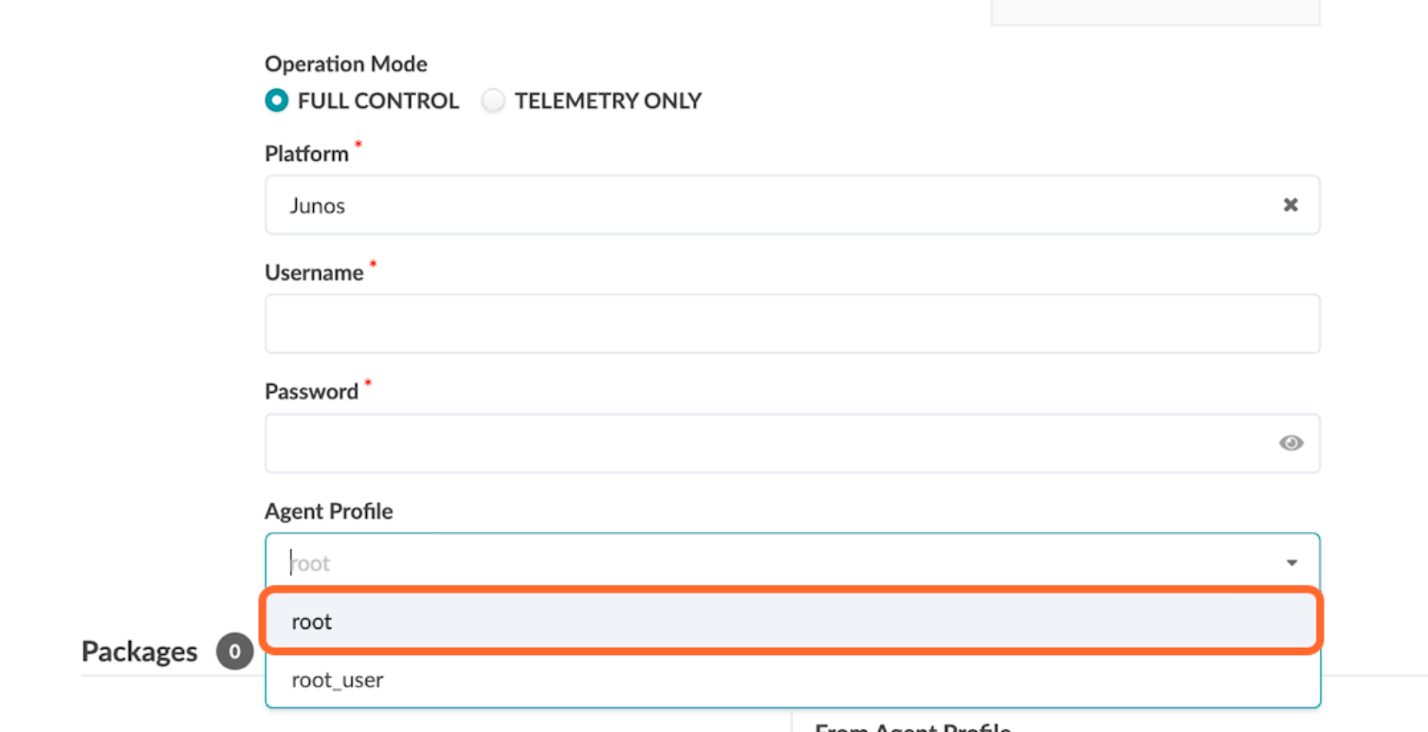

Create Agent Profile

For the purposes of this JVDE, the root user and password are the same across all devices; hence, an agent profile is created, as shown below. Note that this password is obscured, which keeps it secure.

- Navigate to Devices > Agent Profiles.

- Click Create Agent Profile.

- Create an agent profile named root with the platform set to Junos.

- Add the username and password used to log into your switches.

Create Offbox Agent

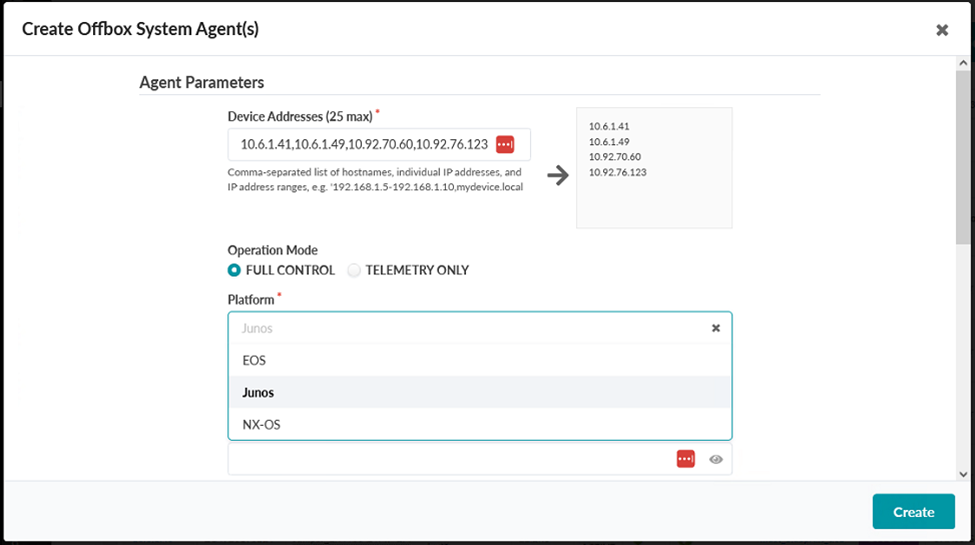

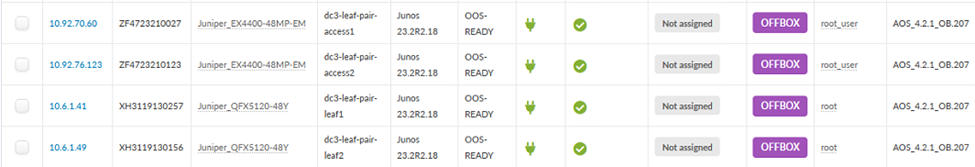

For switches that do not support the provisioning of an Apstra management agent onto the switches themselves, offbox agents are required to manage those switches. An IP address range can be provided to bulk-add devices into Apstra. This will create the requisite offbox agents to manage those devices.

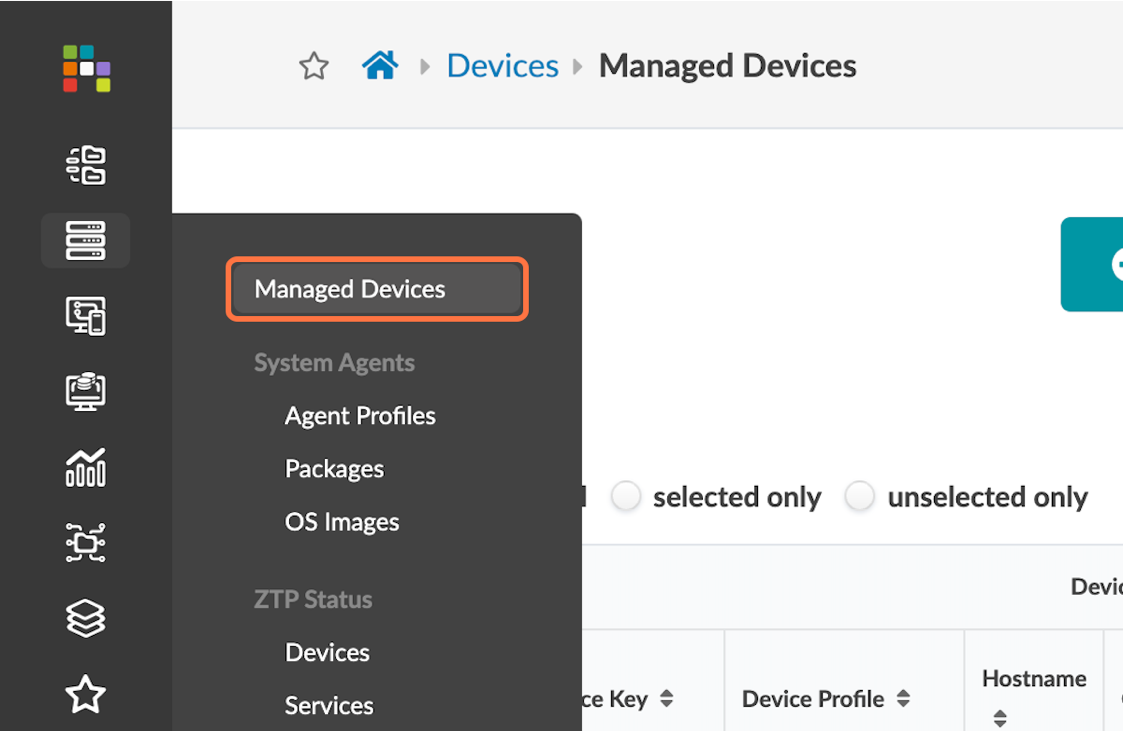

- Navigate to Devices > Managed Devices.

- Click on Create Offbox Agents.

- Add the management addresses of the switches, separated by commas, in the Create Offbox Agents pop-up. You can enter an IP range instead if you prefer.

- Select Junos as the platform and full control as the operation mode.

Figure 3: Create Offbox Agents Pop-up with the Platform Option Selecting Junos

- Select the agent profile root created in the previous step.

Figure 4: Create Offbox Agents Pop-up with the Agent Profile Option Selecting Root

- Press Create and wait for the systems to populate in the Managed Devices table.
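If you keep management addresses in an inventory, the comma-separated list for the pop-up can be generated rather than typed. The sketch below uses only the Python standard library; the function name and the example addresses are hypothetical, and the exact range syntax the Apstra UI accepts is not shown in this document, so the example produces the comma-separated form only.

```python
import ipaddress


def expand_range(first: str, last: str) -> list[str]:
    """Expand an inclusive IPv4 range into a list of address strings."""
    start = ipaddress.IPv4Address(first)
    end = ipaddress.IPv4Address(last)
    if end < start:
        raise ValueError("last address precedes first address")
    return [str(ipaddress.IPv4Address(i)) for i in range(int(start), int(end) + 1)]


# Hypothetical management addresses for the four switches in the baseline design.
addresses = expand_range("10.0.0.11", "10.0.0.14")
print(",".join(addresses))
```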

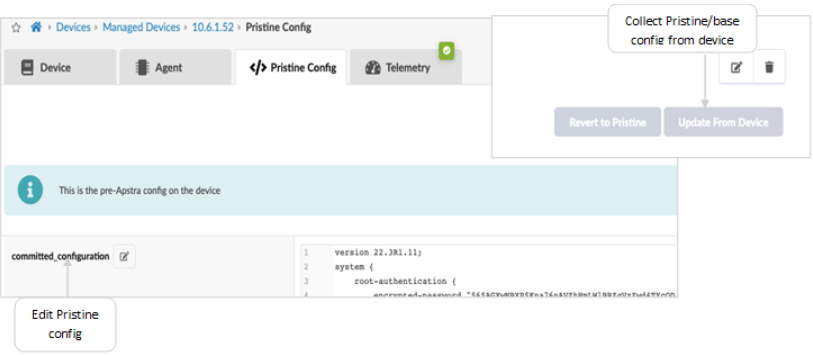

Add Pristine Config

Pristine configurations are collected from devices during provisioning to establish a baseline configuration for each device. This baseline gives you something to compare the Apstra-generated configuration against. Click each of the newly created systems in the Devices > Managed Devices table, and then add a pristine configuration by either collecting it from the device or pushing it from Apstra.

The configuration applied as part of the pristine configuration should be the base configuration or minimal configuration required to reach the devices with the addition of any users, static routes to the management switch, and possible other essential connectivity configuration. The pristine config creates a backup of the base configuration in Apstra and allows devices to be reverted to the pristine configuration when issues are experienced.

If the pristine configuration is updated using Apstra as shown in the above figure, then run Revert to Pristine.

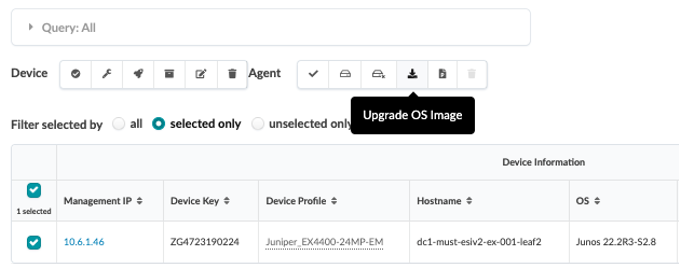

Upgrade Junos OS

If your switches are not running the operating system release recommended by this JVD, you should upgrade them to the recommended version. For this JVDE, the recommended Junos OS version is 22.2R3-S3.
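A quick way to check a fleet against the recommended release is to compare version strings. The following is a minimal sketch that assumes standard-release version strings of the form `major.minorRn-Sn`, optionally followed by a build suffix; the function names are hypothetical, and other release types are not handled.

```python
import re

RECOMMENDED = "22.2R3-S3"
_PATTERN = re.compile(r"^(\d+)\.(\d+)R(\d+)(?:-S(\d+))?")


def parse_junos_version(version: str) -> tuple[int, int, int, int]:
    """Split a version such as 22.2R3-S3 into (major, minor, release, service)."""
    match = _PATTERN.match(version.strip())
    if match is None:
        raise ValueError(f"unrecognized Junos OS version: {version!r}")
    major, minor, release, service = match.groups()
    # A version without a service release is treated as service release 0.
    return int(major), int(minor), int(release), int(service or 0)


def matches_recommended(running: str, recommended: str = RECOMMENDED) -> bool:
    """True when the running release equals the recommended release."""
    return parse_junos_version(running) == parse_junos_version(recommended)


for running in ("22.2R3-S3", "21.4R3-S5"):
    state = "ok" if matches_recommended(running) else "upgrade recommended"
    print(running, state)
```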

Important: A maintenance window is required to perform any device upgrade, as upgrades can be disruptive.

Best practice recommendations for upgrade:

- Upgrade devices using the Junos OS CLI as outlined in the Junos OS Software Installation and Upgrade Guide , along with the Junos OS version release notes, as Apstra currently only performs basic upgrade checks. However, this JVD summarizes the steps to upgrade if Apstra is intended to be used for upgrades.

- If a device has already been added to a blueprint, set it to undeploy, unassign its serial number from the blueprint, and commit the changes, which reverts the device to its pristine config. Then proceed with the upgrade. Once the upgrade is complete, add the device back to the blueprint.

Apstra allows device upgrades. However, our current best practice recommendation is to upgrade devices using the Junos OS CLI as outlined in the Junos OS Software Installation and Upgrade Guide or in the Junos OS release notes. We recommend upgrading Junos OS outside Juniper Apstra because the Juniper Apstra upgrade process only performs basic upgrade checks.

If you want to upgrade the device within Apstra, here is how you do it:

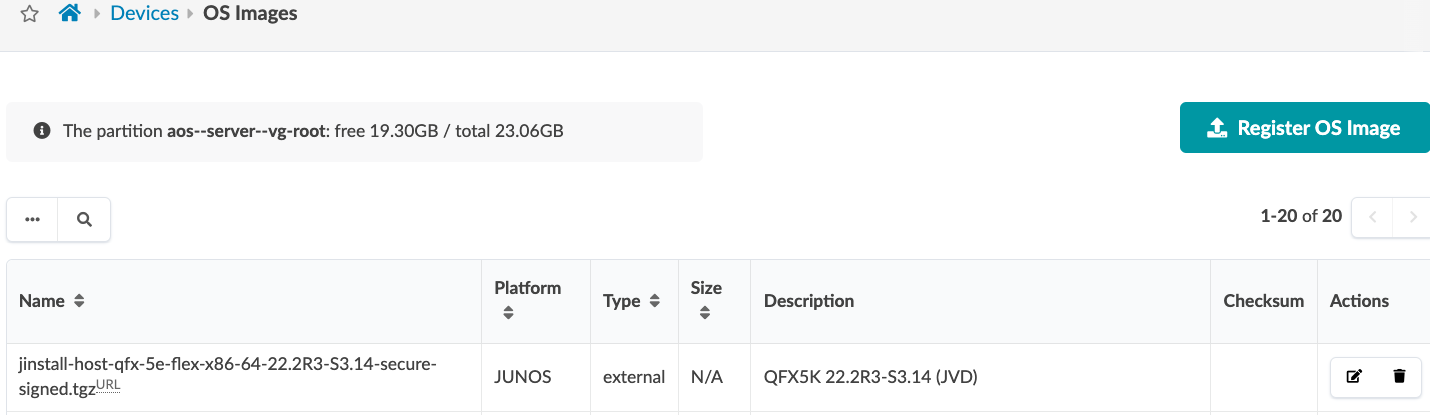

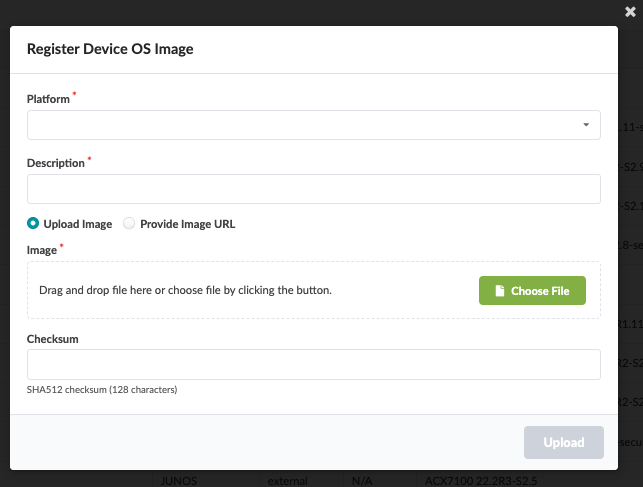

First, you need to register an image with Apstra so that it can deploy that image to devices. To register a Junos OS image on Apstra, either provide a link to the corporate repository where all OS images are stored or upload the OS image as shown below.

In the Apstra UI, navigate to Devices > OS Images and click Register OS Image.

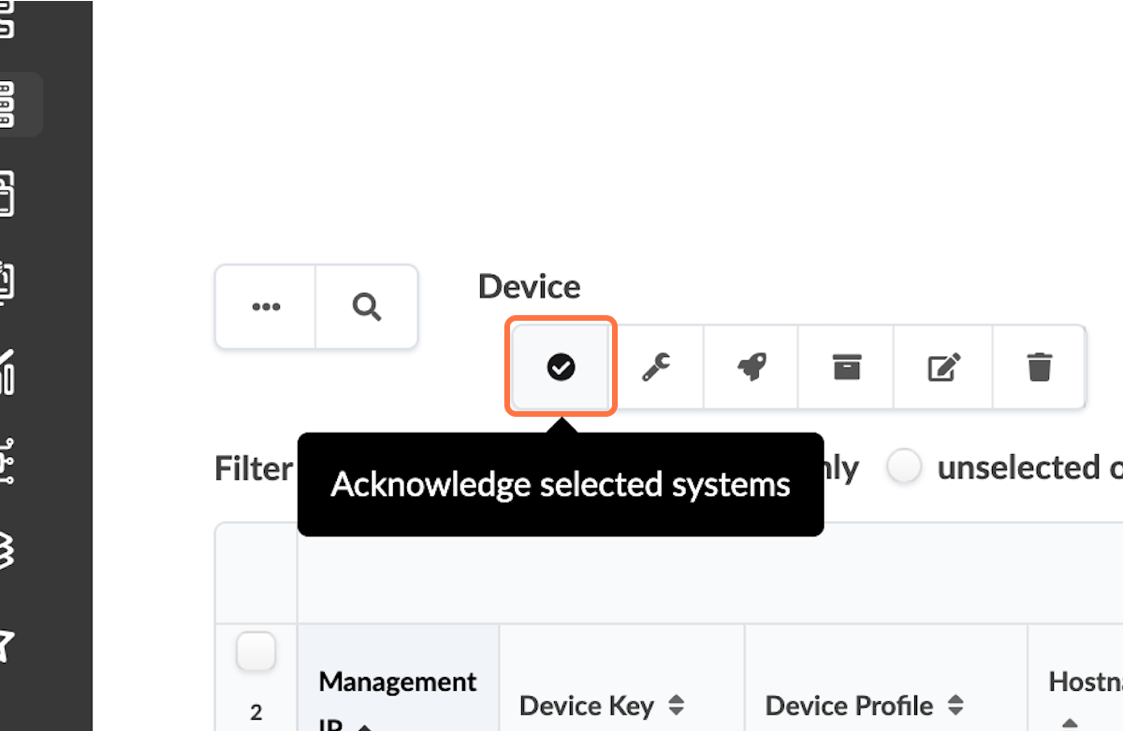

Acknowledge Devices

- Navigate to Devices > Managed Devices.

- Check Discovered Devices and Acknowledge the Devices.

- Click the checkbox interface to select all the devices once the offbox agent is added and the device information is collected.

- Click Acknowledge.

The switch is now under the management of Juniper Apstra.

Figure 10: Managed Devices Table Control Panel with the Acknowledge Selected Systems Highlighted

- Once a switch is acknowledged, the status icon under the Acknowledged? table header changes from a red “no entry” symbol to a green checkmark. Verify this change for all switches. If there are no changes, repeat the procedure to acknowledge the switches again.

After a device is managed by Apstra, all device configuration changes should be performed using Apstra. Do not perform configuration changes on devices outside of Apstra, as Apstra might revert those changes.

Note: The device profiles covered in this JVD are not for modular chassis-based devices. For modular chassis-based devices such as the QFX5700, linecard profiles and chassis profiles are available in Apstra and are linked to the device profile. These cannot be edited; however, they can be cloned, and custom linecard, chassis, and device profiles can be created, as shown below in Figure 13 and Figure 14.

Once the devices are successfully acknowledged, perform the collect pristine config step detailed above to ensure the LLDP and RSTP protocol configurations are added to the pristine switch configurations.

Fabric Provisioning

Fabric provisioning in Juniper Apstra involves creating a number of logical abstractions that represent the desired network fabric configuration. Once the logical fabric is created, Apstra provisions the desired configuration onto the switches that were added in the preceding portion of this walkthrough. The major concepts of fabric provisioning are outlined in the Juniper Apstra Overview section earlier in this document. You are expected to be familiar with these Apstra concepts to complete this walkthrough.

Identify and Create Logical Devices, Interface Maps with Device Profiles

The following steps define the Collapsed Fabric with Access Switches and Juniper Apstra JVDE baseline architecture and devices.

Before provisioning a blueprint, a replica of the topology is created. We define the data center reference architecture and devices in the following steps.

This setup process involves selecting logical devices for both the collapsed spine and the access switches. Generic devices are also created to represent two servers and a router. One server is connected to the collapsed spine layer and the other server is connected to the access switch layer. Connecting servers to both switch layers is intentional in this topology; it demonstrates that devices can be connected to either switch layer based on need.

Logical devices are abstractions of physical devices that specify common device form factors such as the number, speed, and roles of ports. Vendor-specific device information is not included in the logical device definitions, which permits building the network definition before selecting vendors and hardware device models.

The Apstra software installation includes many predefined logical devices that can be used to create any variation of a logical device. After initial creation, logical devices are mapped to device profiles using interface maps. The ports mapped in the interface maps match the device profile and physical connections. In the final configuration step, the racks and templates are defined using the configured logical devices and device profiles. These logical devices and device profiles are then used to create a blueprint.

The Device Configuration Lifecycle section of the Juniper Apstra User Guide explains the device lifecycle, which must be understood when working with Apstra blueprints and devices.

Create Device Profile

For all devices covered in this document, the device profiles (defined in Apstra and found under Devices > Device Profiles) were exactly matched by Apstra when adding devices into Apstra, as covered in Apstra: Management of Junos OS Device.

During the validation of supported devices, some device profiles had to be custom-made to suit the linecard setup on the device. For example, the setup of the EX4400-48MP includes multiple “panels” within a given switch, representing the configuration of different port groups for that device. This panel creation process is similar to how line cards are set up for a device. For more information on device profiles, see Apstra User Guide for Device Profiles.

To create the device profiles:

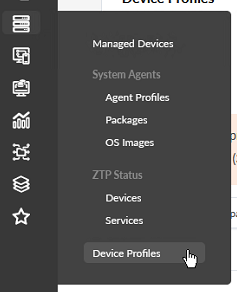

- Navigate to Devices > Device Profiles. Review the devices listed based on the number and speed of ports.

- Select the device that most closely resembles the switch for which you want to create a device profile.

Figure 12: Devices Menu with the Device Profiles Button Highlighted

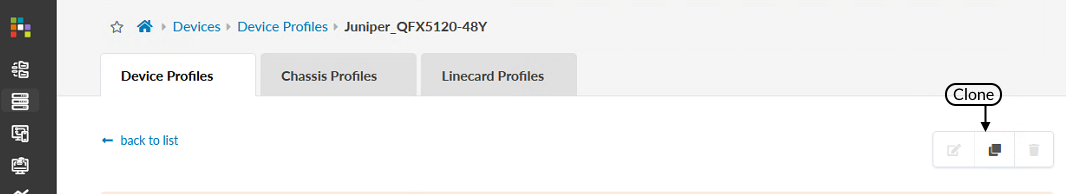

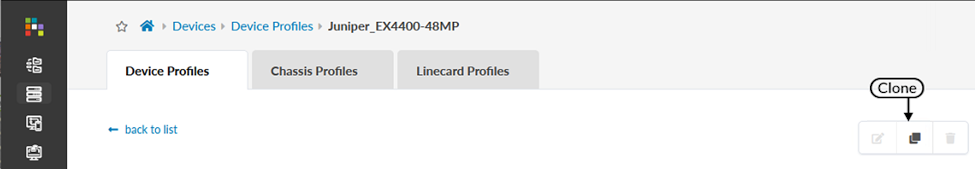

- Press the Clone button once you are confident that the device profile you selected most closely resembles your switch. Do this first for the switch model you have selected for use in the collapsed spine role. For the purposes of this document, this is the QFX5120-48Y.

Figure 13: Device Profile Page for the QFX5120-48Y with the Clone Button Pointed Out

Note: Default logical devices, and devices which have already been added to the system, cannot be changed.

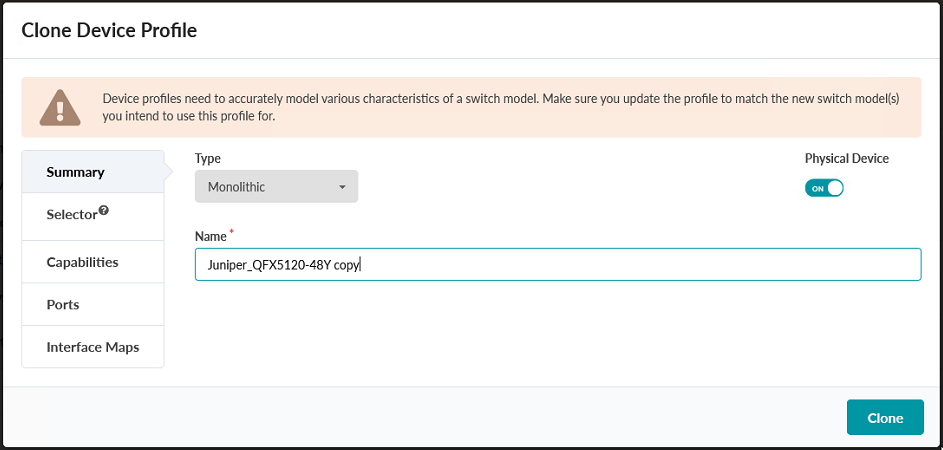

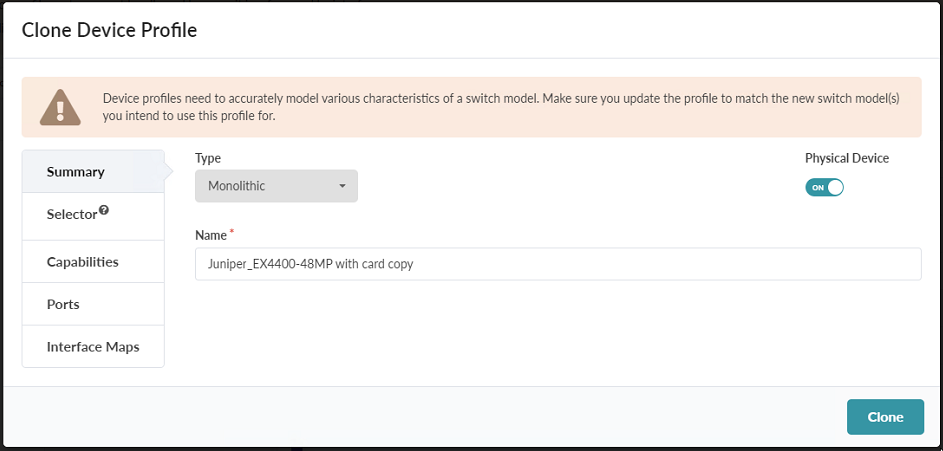

- Name the cloned profile that you will use for this blueprint.

Figure 14: Clone Device Profile Pop-Up

-

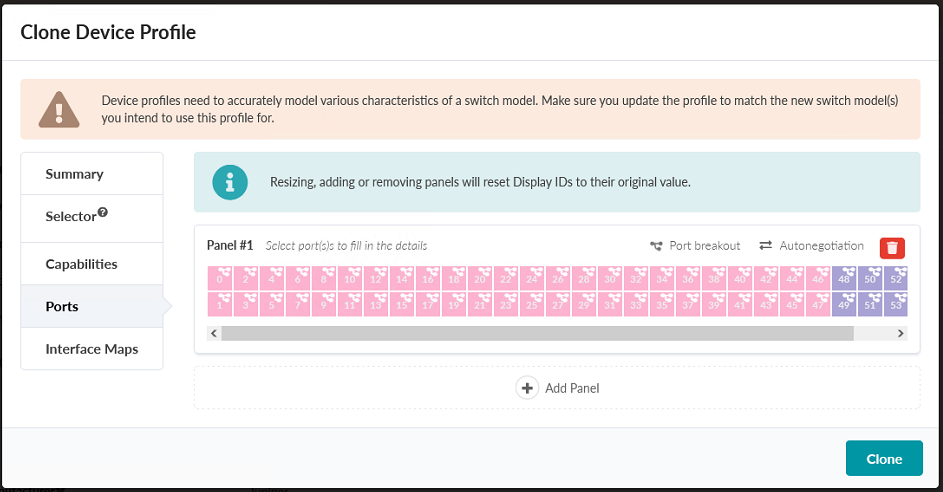

Click Ports to verify that the port selection matches your device. Apstra 4.1.2 comes preloaded with a device profile for the 5120-48Y that is an appropriate device profile setup starting point in most implementations of this topology. This device profile has 48 1/10/25 gigabit ports, and 8x 100 gigabit ports all in a single panel. How to modify or add a panel will be reviewed below when detailing the EX4400-48MP configuration.

Note:It may be advisable for your switch to be broken out into multiple panels based on functionality, location, or whether or not they are part of a line card. In this case, add and populate panels as appropriate. For this document all ports for the QFX5120-48Y will be added to a single panel, as they are both physically contiguous, and no ports support PoE.

Figure 15: Clone Device Profile Pop-up showing the port map for the 5120-48Y

- Once you are satisfied that the device profile accurately reflects the physical device you have chosen, press Clone.

- Repeat the cloning process for the access switches, which should be based on the device profile for the EX4400-48MP switch.

Figure 16: Device Profile Page for the EX4400-48MP with the clone button showing on the right

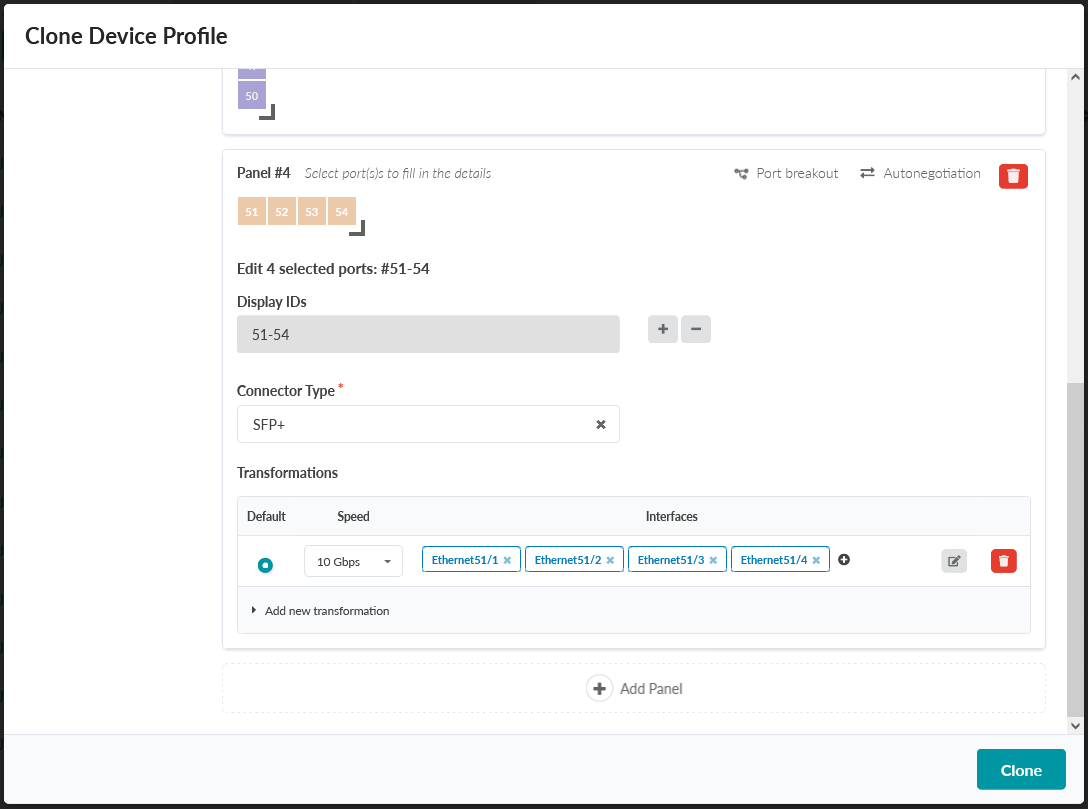

Figure 17: Clone Device Profile Popup


- For the purposes of this document, we assume the EX4400-48MP has the optional 4x 10-gigabit line card installed. Apstra comes preloaded with an EX4400-48MP device profile; however, this profile does not include the 4x 10-gigabit card. We therefore add a panel to our clone of the default profile, which also shows how panels are configured and set up.

  The default EX4400-48MP profile has 12x 100M/1/2.5/5/10-gigabit ports, 36x 100M/1/2.5-gigabit PoE ports, and 2x 100-gigabit ports, configured in three separate panels. These ports are separated into different panels by default because the 10-gigabit ports do not support PoE and the 100-gigabit ports are in a different physical location on the switch. We configure an additional panel to represent the 4x 10-gigabit card.

  To add this panel, click the Ports tab under Summary, scroll to the bottom of the interaction window, and click Add Panel.

Note: The recommended configuration for the EX4400-48MP in the access switch role is to use 10-gigabit ports to provide connectivity between the two access switches, and to use the 100-gigabit uplink ports on the back of the switches to provide connectivity to the collapsed spine layer.

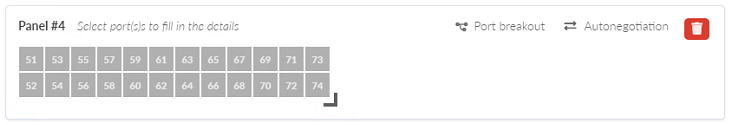

- Modify the port layout by clicking on the right-angle icon on the lower right corner of the port map. Drag the icon until the port map represents the number of ports on your switch.

Figure 18: An additional panel, showing the port map with the right-angle icon used to modify the port count

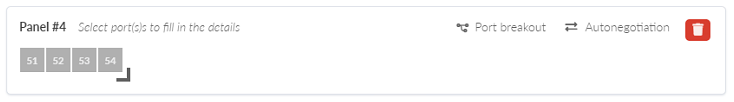

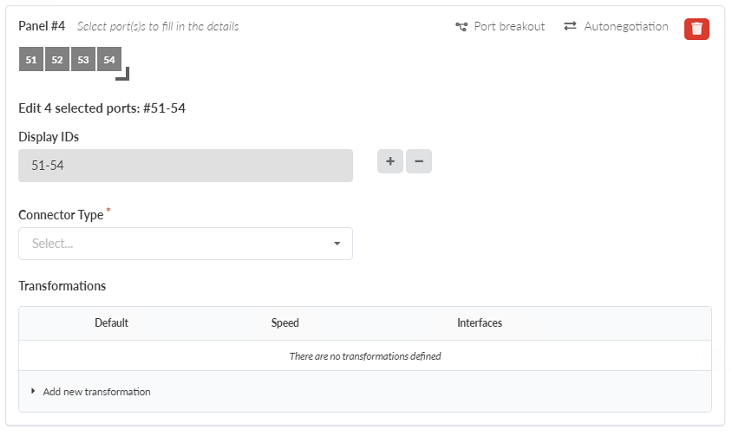

- Since we are adding a panel representing the 4x 10-gigabit add-in card, drag the right-angle icon until the panel shows 4 ports.

Figure 19: An additional panel, modified to have 4 ports

- Select one port by clicking on it. Drag the icon until the appropriate number representing a single group of ports with identical capabilities is selected. As you drag the icon, the Clone Device Profile pop-up expands, allowing you to configure the port speed and interface type.

Figure 20: Device Profile Page for the EX4400-48MP with the ports of the 4th panel selected for configuration

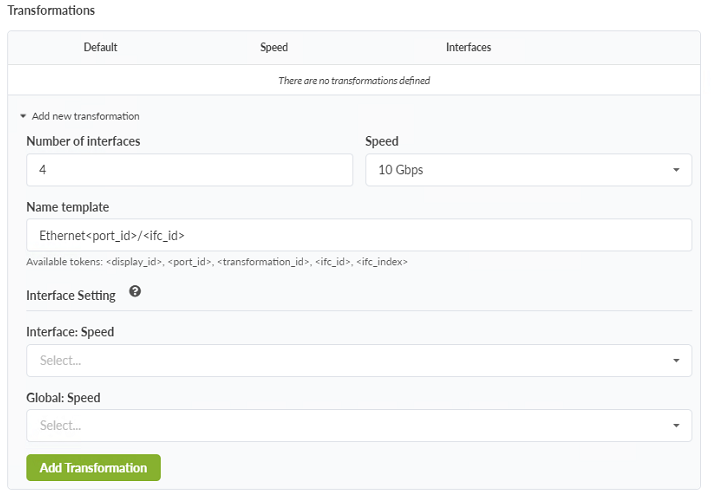

- Select SFP+ under Connector Type.

- Click Add New Transformation.

- Set Number of Interfaces to 4 and Speed to 10 Gbps.

Figure 21: Device Profile Page showing the add new transformation option having been selected

- Click the Add Transformation button.

Figure 22: Device Profile Page for the EX4400-48MP with the 4th panel added and configured

- Click Clone.
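The panel layout of the cloned EX4400-48MP device profile can be summarized as data, which makes it easy to sanity-check the total port count before moving on. This is an illustrative sketch only: the class and field names are hypothetical and do not reflect Apstra's internal device profile schema, and the port counts, speeds, and PoE flags are the ones stated in this walkthrough.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Panel:
    description: str
    port_count: int
    max_speed_gbps: float
    poe: bool  # as described in this walkthrough


# Three default panels plus the panel added for the 4x 10-gigabit card.
EX4400_48MP_PANELS = [
    Panel("100M/1/2.5/5/10-gigabit ports", 12, 10, False),
    Panel("100M/1/2.5-gigabit PoE ports", 36, 2.5, True),
    Panel("100-gigabit uplink ports", 2, 100, False),
    Panel("4x 10-gigabit add-in card (SFP+)", 4, 10, False),
]

total_ports = sum(panel.port_count for panel in EX4400_48MP_PANELS)
print(f"{len(EX4400_48MP_PANELS)} panels, {total_ports} ports")
```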

Create Logical Devices

Logical devices must be created to provide Apstra with a software abstraction of the physical hardware devices. Where device profiles describe the physical device’s capabilities (such as the physical capabilities of a port), logical devices describe how those physical capabilities will actually be used (such as the speed of devices connected to that port).

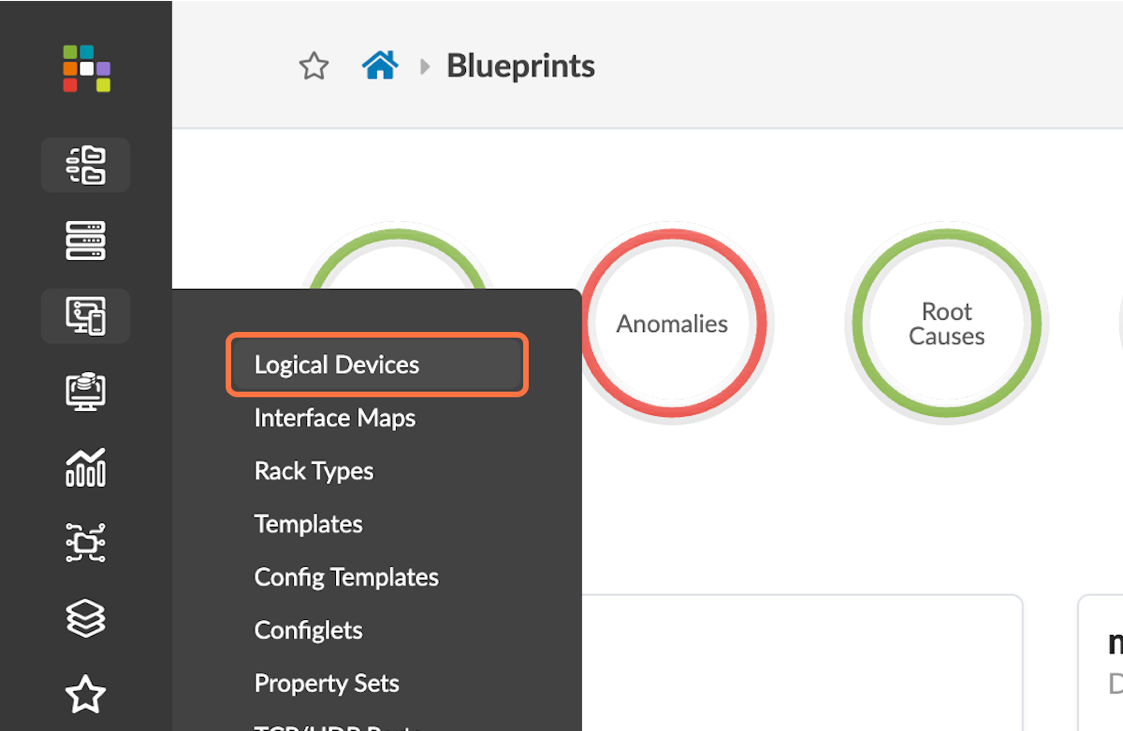

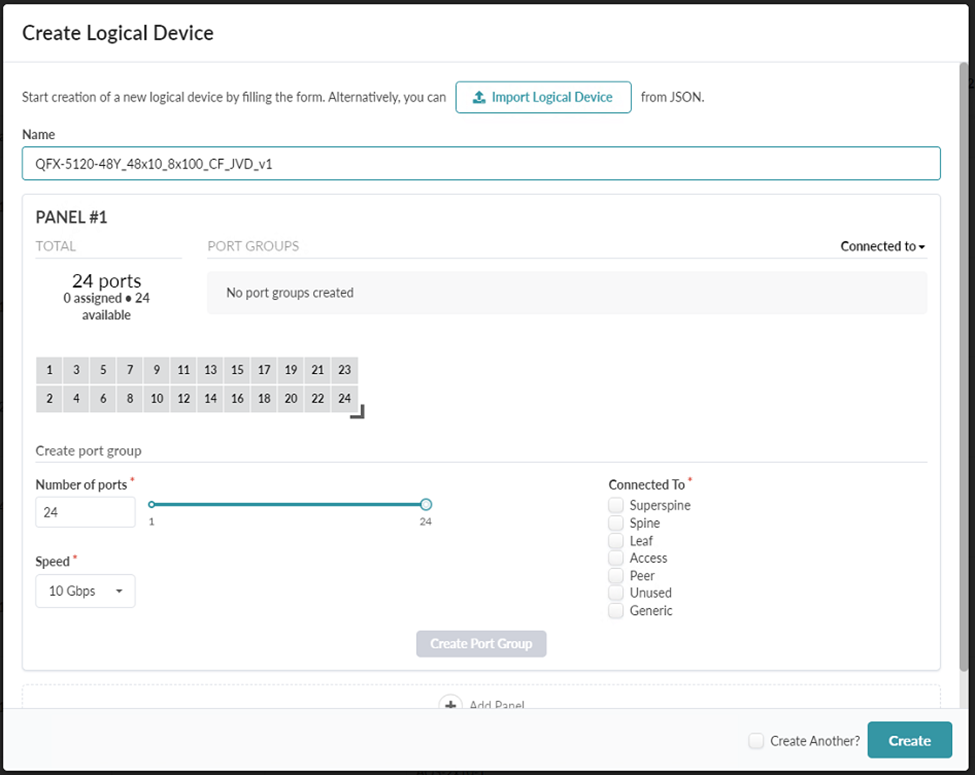

- Navigate to Design > Logical Devices. Select the Create Logical Device button in the upper-right corner.

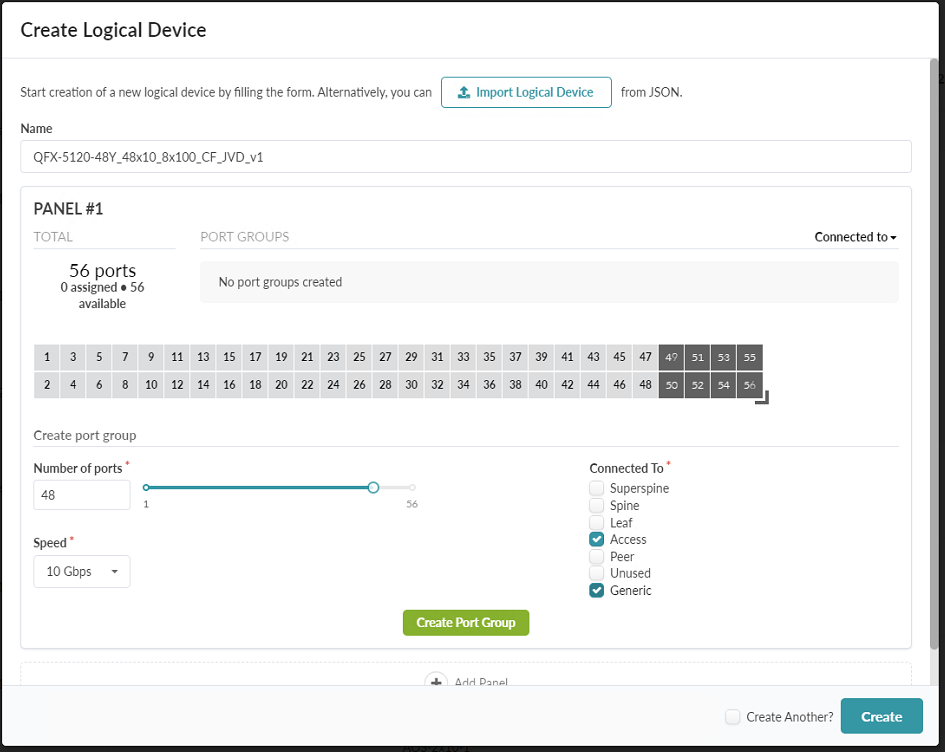

- Create and name the logical device for the QFX5120-48Y. This document uses the name JVD_QFX5120-48Y_48x10_8x100_CF_JVD_v1.

Figure 24: The Create Logical Device popup

- Expand the number of ports by clicking and dragging the right-angle icon on the bottom right of the logical port panel.

- Enter 48 in the box labelled Number of ports, set Speed to 10 Gbps, and ensure that only Access and Generic are selected among the Connected to options. These ports will be used to connect devices directly to the collapsed spine.

Figure 25: The Create Logical Device popup showing the panel expanded to 56 ports, with the first 48 ports selected and configured

- Click Create Port Group. The interface will then switch to defining the second group of ports.

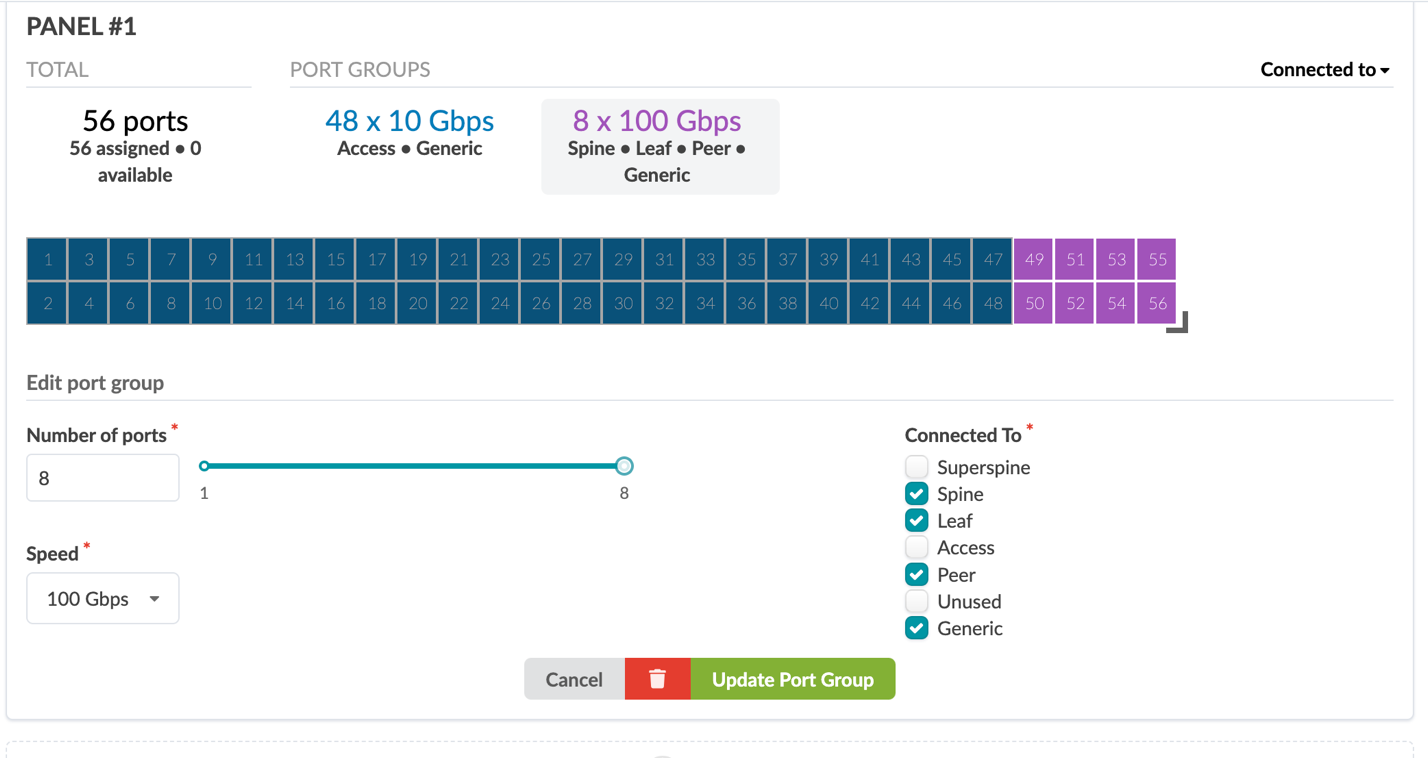

- For this second group of ports set Number of ports to 8, Speed to 100 Gbps, and select the checkboxes Spine, Leaf, Peer, and Generic as Connected to options.

- Click Create Port Group.

Figure 26: The Create Logical Device popup showing the second port group for the 5120-48Y

- Click Create. You have now created a logical device that represents the 5120-48Y switch.

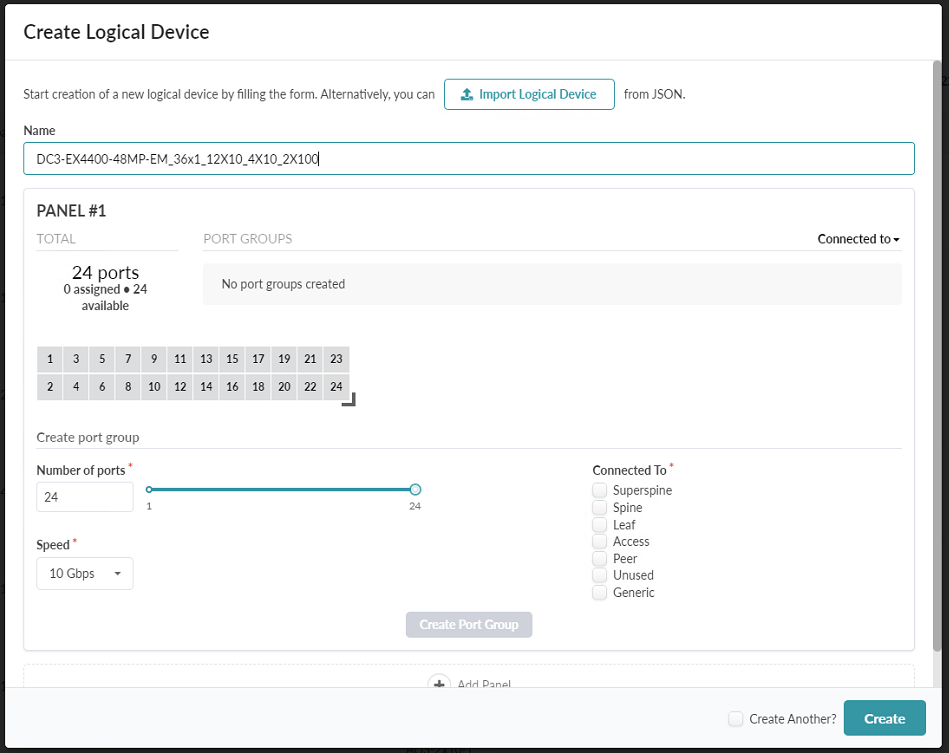

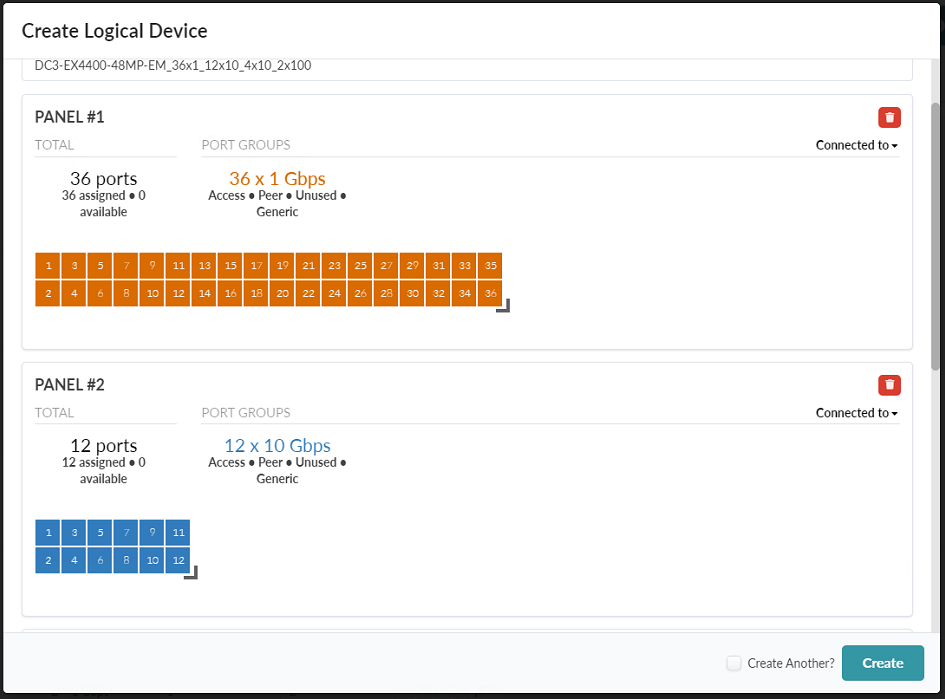

- Create a logical device for the EX4400-48MP switch. This document uses the name DC3-EX4400-48MP-EM_36x1_12x10_4x10_2x100 for this logical device.

Figure 27: The Create Logical Device popup showing the initial setup for the EX4400-48MP

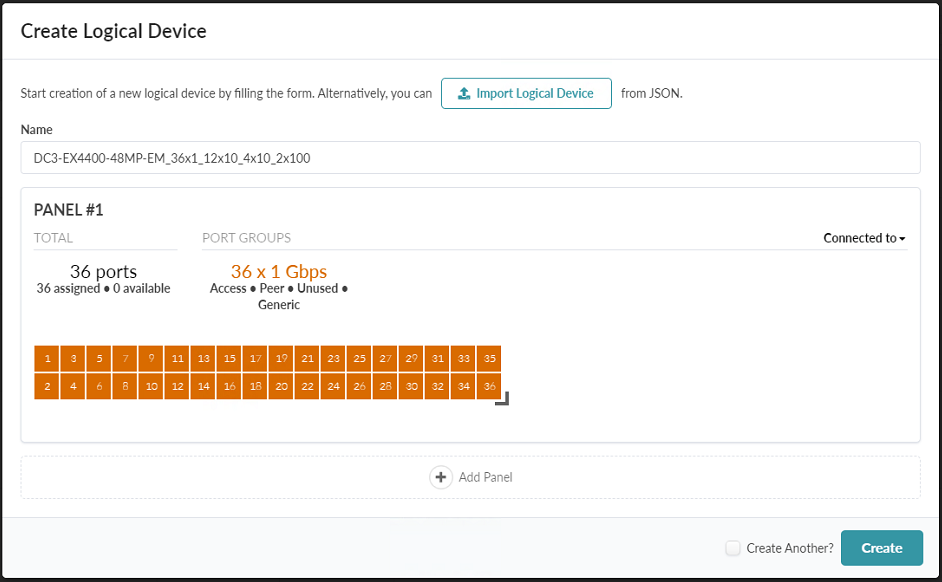

- This logical device will have four panels. Configure the first panel with 36x 1 Gbps ports set for Access, Peer, Unused, and Generic. Click Create Port Group.

Figure 28: The Create Logical Device popup showing the first panel for the EX4400-48MP

- Click Add Panel. Configure the second panel with 12x 10 Gbps ports set for Access, Peer, Unused, and Generic. Click Create Port Group.

Figure 29: The Create Logical Device popup showing the first and second panels for the EX4400-48MP

-

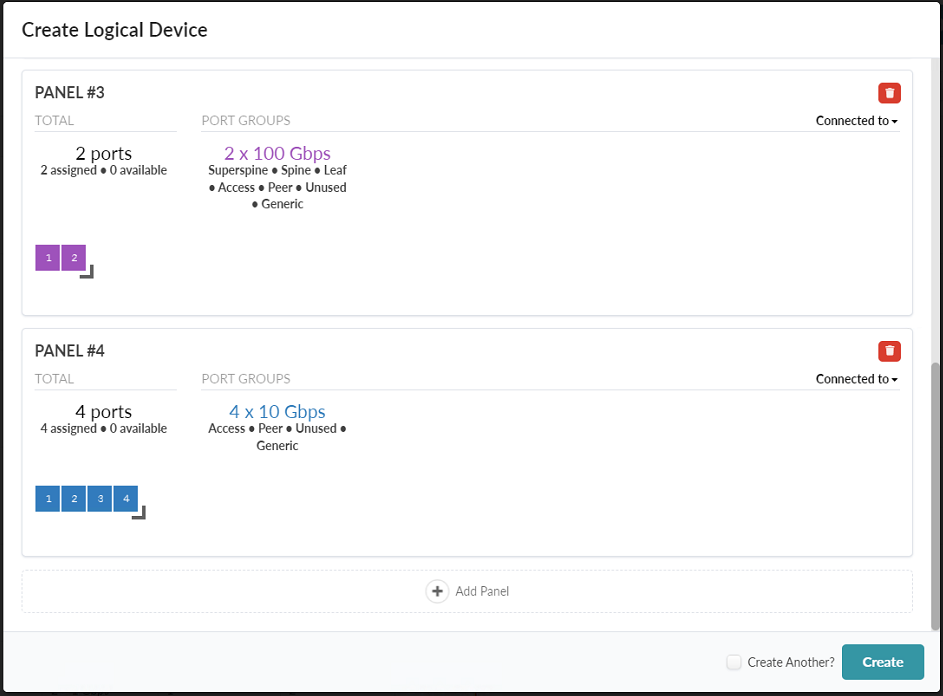

Click Add Panel, configure the third panel with 2x 100 Gbps ports set for Superspine, Spine, Leaf, Access, Peer, Unused, and Generic.

Click Create Port Group.

- Click Add Panel. Configure the fourth panel with 4x 10 Gbps ports set for Access, Peer, Unused, and Generic. Click Create Port Group.

Figure 30: The Create Logical Device popup showing the third and fourth panels for the EX4400-48MP

- Click Create.
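The two logical devices defined above can be written down as port groups to confirm the port totals and to see which ports are allowed to face which role. This is a sketch for illustration: the class, the helper functions, and the lowercase role strings are hypothetical, while the counts, speeds, and Connected to selections come from the steps above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortGroup:
    count: int
    speed_gbps: int
    roles: frozenset  # "Connected to" options selected for the group


QFX5120_48Y = [
    PortGroup(48, 10, frozenset({"access", "generic"})),
    PortGroup(8, 100, frozenset({"spine", "leaf", "peer", "generic"})),
]

_EDGE = frozenset({"access", "peer", "unused", "generic"})
EX4400_48MP = [
    PortGroup(36, 1, _EDGE),
    PortGroup(12, 10, _EDGE),
    PortGroup(2, 100, _EDGE | {"superspine", "spine", "leaf"}),
    PortGroup(4, 10, _EDGE),
]


def total_ports(groups: list[PortGroup]) -> int:
    """Total number of ports across all port groups."""
    return sum(group.count for group in groups)


def ports_for_role(groups: list[PortGroup], role: str) -> int:
    """Number of ports that may be connected to the given role."""
    return sum(group.count for group in groups if role in group.roles)


print("QFX5120-48Y ports:", total_ports(QFX5120_48Y))
print("EX4400-48MP ports:", total_ports(EX4400_48MP))
print("EX4400-48MP ports that can face a leaf:", ports_for_role(EX4400_48MP, "leaf"))
```

The EX4400-48MP result shows that only the two 100 Gbps ports can face the collapsed spine ("leaf") layer, which matches the uplink design used later in the rack type.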

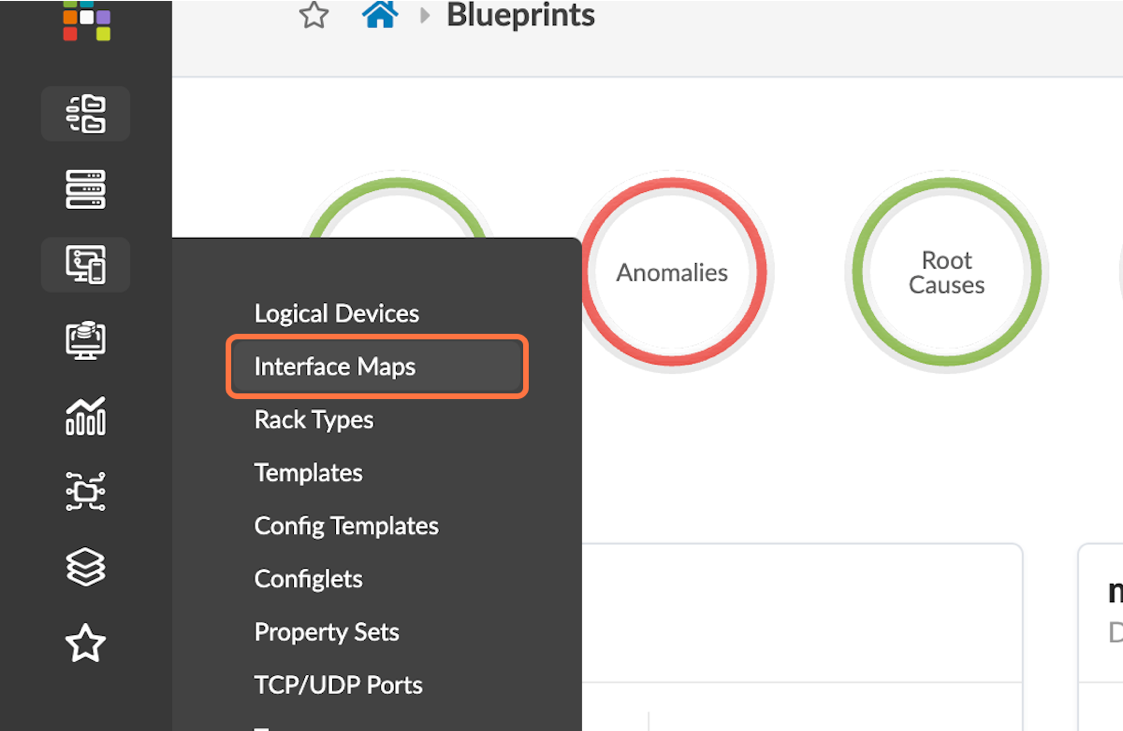

Create Interface Map

Interface maps bind logical devices to device profiles.

- Navigate to Design > Interface Maps. Select the Create Interface Map button in the upper-right corner.

Figure 31: Design Menus with the Interface Maps Button Highlighted

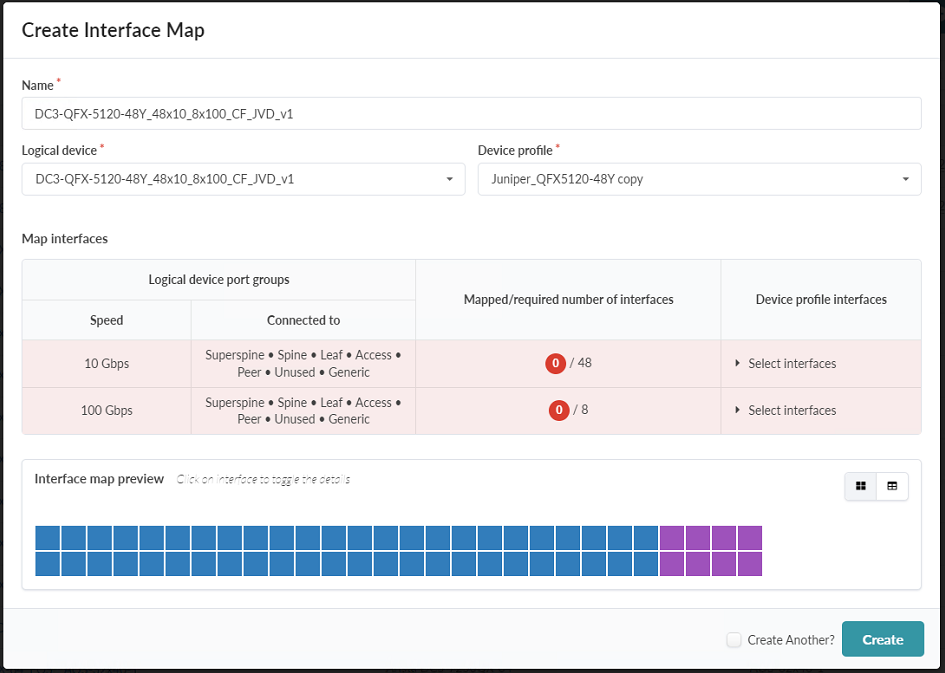

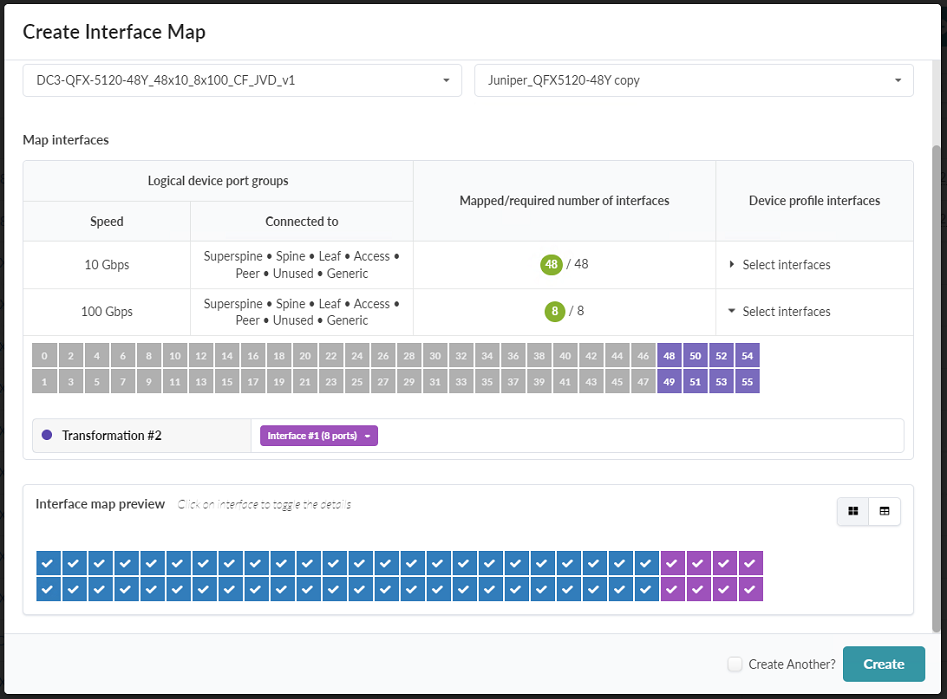

- Name the interface map DC3-QFX-5120-48Y_48x10_8x100_CF_JVD_v1.

- Select the logical device and the device profile for the QFX5120-48Y switch that were created in the earlier procedures.

Figure 32: Create Interface Map Pop-up Showing the Interface Map Preview

- Under the Device profile interfaces column, click Select Interfaces. Assign all 48x 10 Gbps ports and 8x 100 Gbps ports as appropriate by selecting one port and dragging it until you have selected all ports of that type.

Figure 33: Create Interface Map Pop-up Showing the Interface Map Preview for the QFX5120-48Y

- Click Create.

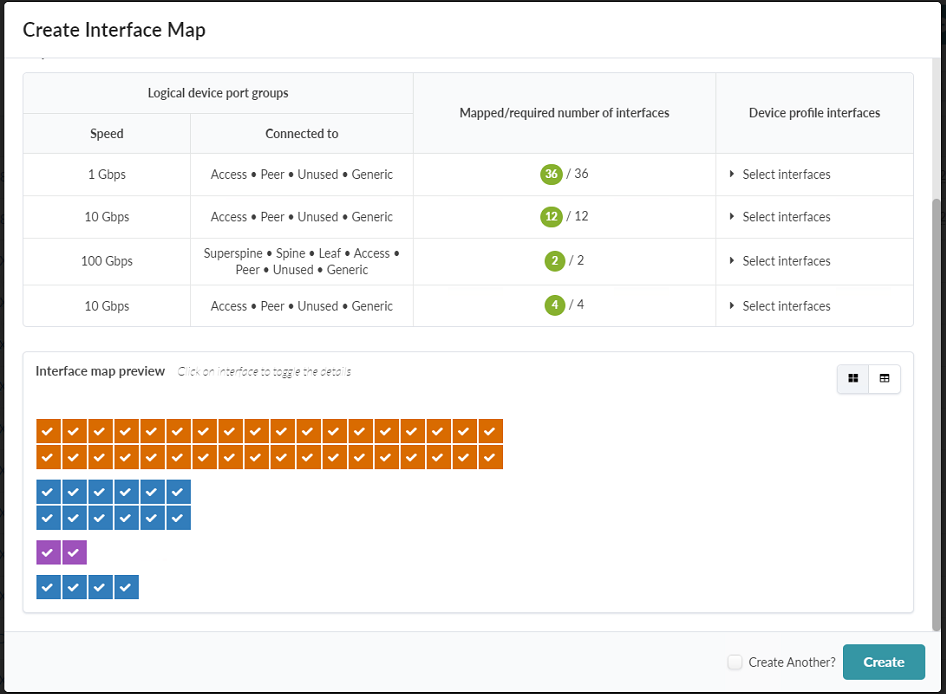

- Create a new interface map for the EX4400-48MP switch. Name the interface map DC3-EX4400-48MP-EM_36x1_12x10_4x10_2x100.

- Assign interfaces to this interface map as shown in this figure:

Figure 34: Create Interface Map Pop-up Showing the Interface Map Preview for the EX4400-MP

- Click Create.
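Conceptually, an interface map pairs each logical device port with a physical interface name from the device profile. The sketch below illustrates that pairing for the QFX5120-48Y. It is not how Apstra stores interface maps; the function name is hypothetical, and the `xe-0/0/N` and `et-0/0/N` interface names are assumptions based on common Junos naming for 10 Gbps and 100 Gbps ports, so verify them against the interface map preview in the UI.

```python
def build_interface_map(groups: list[tuple[int, str]]) -> dict[int, str]:
    """Map 1-based logical port numbers to assumed Junos interface names.

    groups is a list of (port_count, interface_prefix) in physical port order.
    """
    mapping = {}
    port_index = 0  # zero-based physical port index
    for count, prefix in groups:
        for _ in range(count):
            mapping[port_index + 1] = f"{prefix}-0/0/{port_index}"
            port_index += 1
    return mapping


# 48 ports at 10 Gbps followed by 8 ports at 100 Gbps, as in the logical device.
qfx_map = build_interface_map([(48, "xe"), (8, "et")])
print(qfx_map[1], qfx_map[48], qfx_map[49], qfx_map[56])
```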

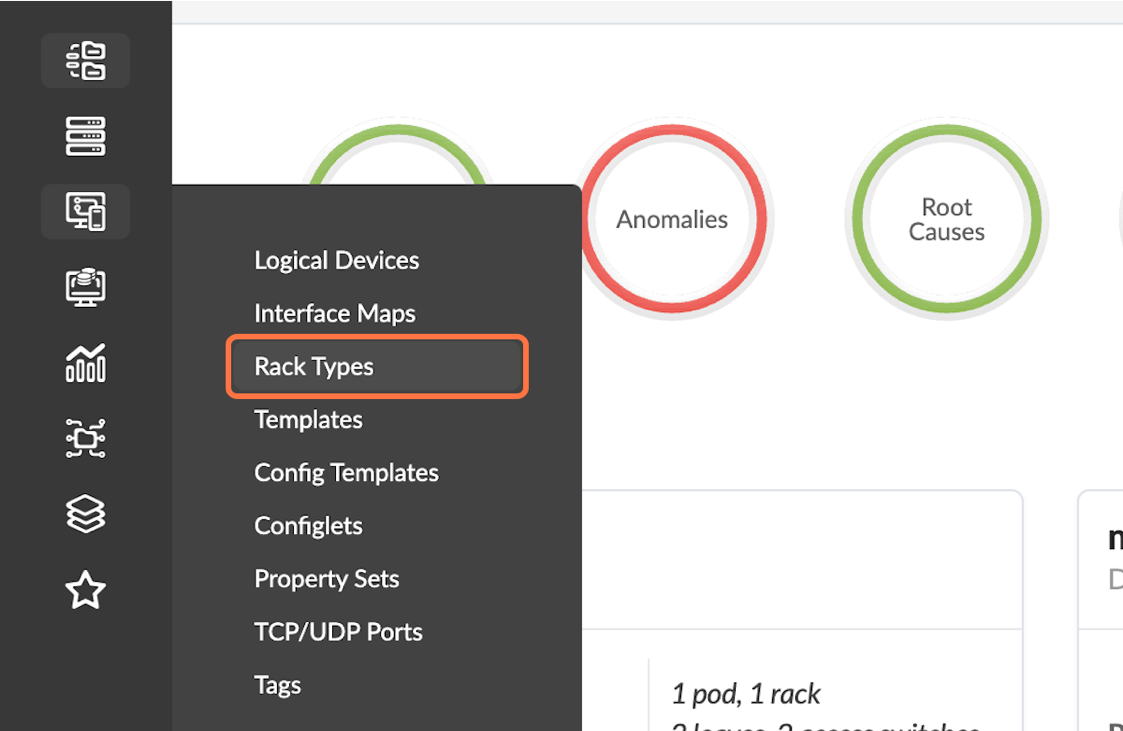

Create Rack Type

Rack types define logical racks in Juniper Apstra, which are an abstracted representation of physical racks. Rack types define the links between logical devices.

- Navigate to Design > Rack Types.

Figure 35: Design Menu with the Rack Types Button Highlighted

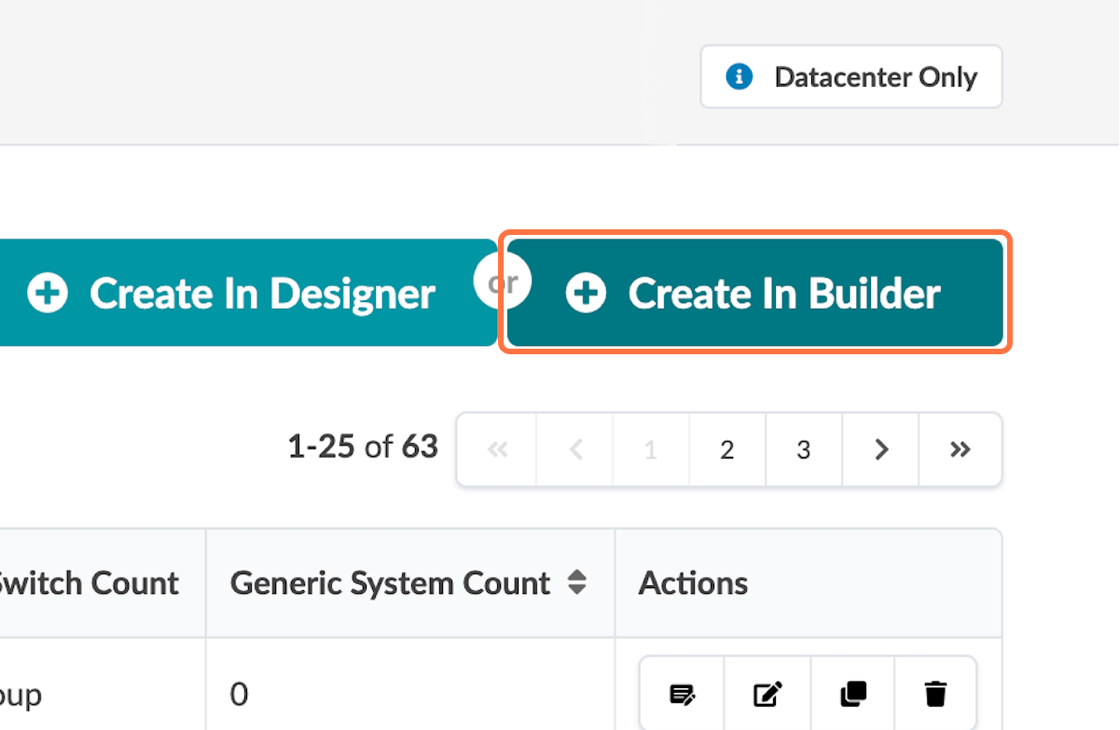

- Select Create In Builder in the upper-right corner.

Figure 36: The Rack Types Page with the Create in Builder Button Highlighted

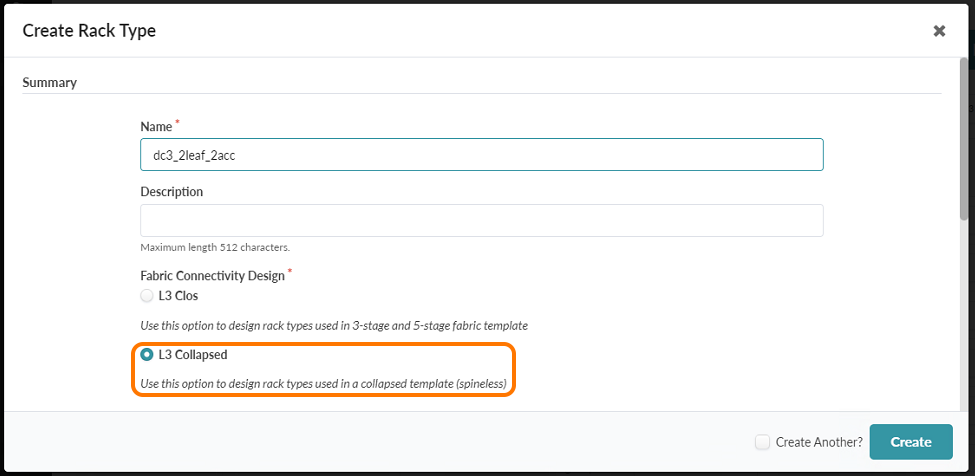

- Create a rack with the name dc3_2leaf_2acc and select L3 collapsed.

Figure 37: Rack Type Creation in Builder with L3 Collapsed Highlighted

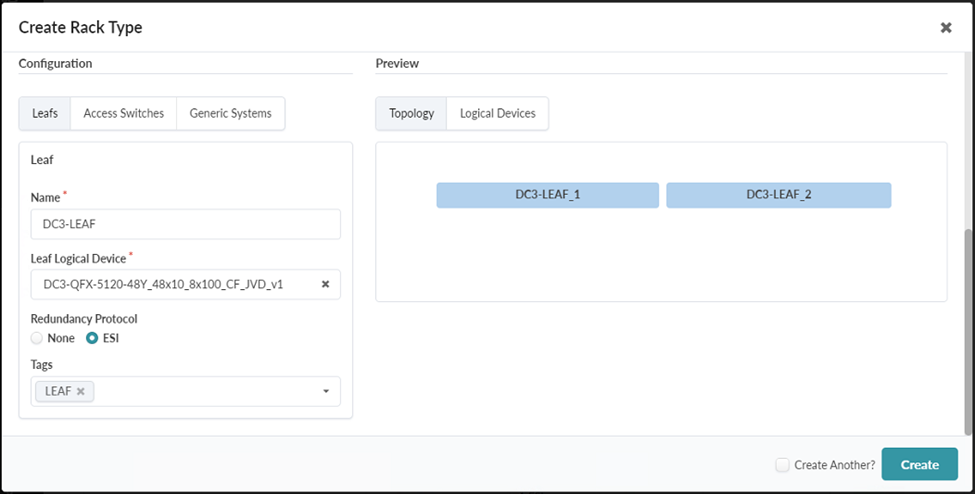

- Scroll down in the pop-up box. Set the following attributes under Leaf:

  - Name: DC3-Leaf
  - Leaf Logical Device: select the logical device created earlier for the QFX5120-48Y
  - Redundancy Protocol: ESI

Note: Juniper Apstra refers to the collapsed spine layer as “leaf” switches. This underlies our nomenclature choices in this guide.

Figure 38: Rack Type Creation in Builder with ESI Under Leafs Highlighted

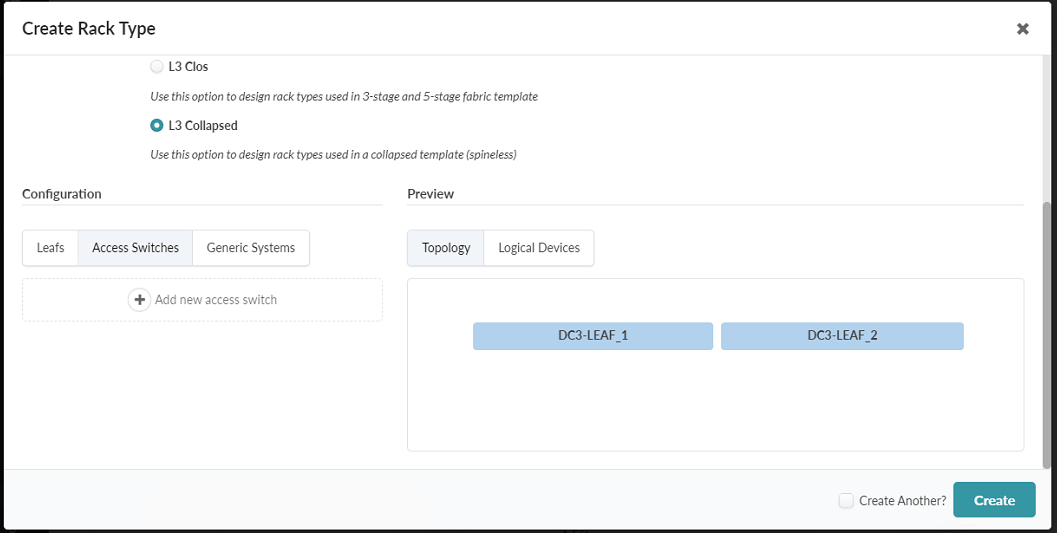

- Click Access Switches.

Figure 39: Create Rack Type Pop-up Showing the Access Switches tab

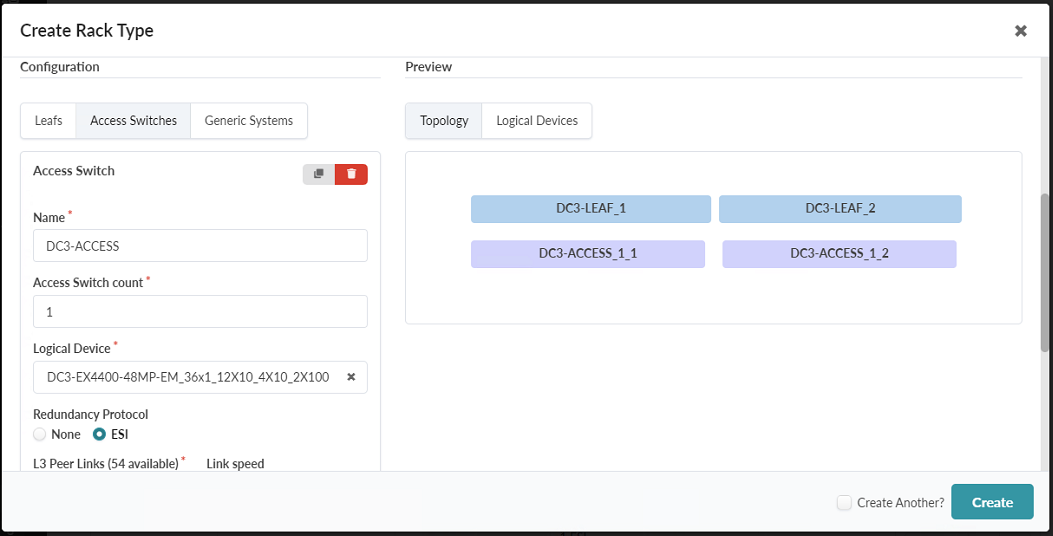

- Enter the following information:

  - Name: DC3-Access
  - Access Switch Count: 1
  - Logical Device: select the logical device created earlier for the EX4400-48MP
  - Redundancy Protocol: ESI

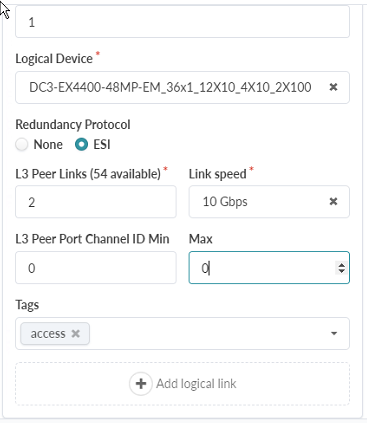

Figure 40: Create Rack Type Pop-up showing the Access Switches tab with the first part filled out

- Scroll down and enter the following information:

  - L3 Peer Links: 2
  - Link speed: 10 Gbps
  - L3 Peer Port Channel ID Min: 0, Max: 0

Figure 41: Create Rack Type Pop-up showing additional configuration options on the Access Switches tab

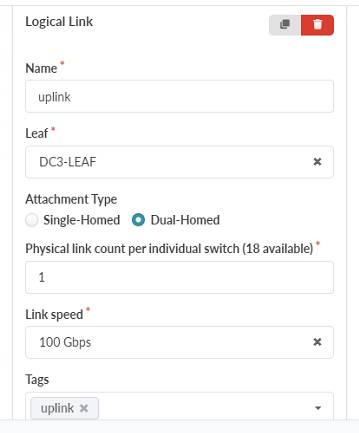

- Click Add a logical link. Add a logical link with the following information:

  - Name: uplink
  - Leaf: DC3-Leaf
  - Attachment Type: Dual-Homed
  - Physical Link count per individual switch: 1
  - Link speed: 100 Gbps

Figure 42: Create Rack Type Pop-up showing the Logical Link configuration options in the Access Switches tab

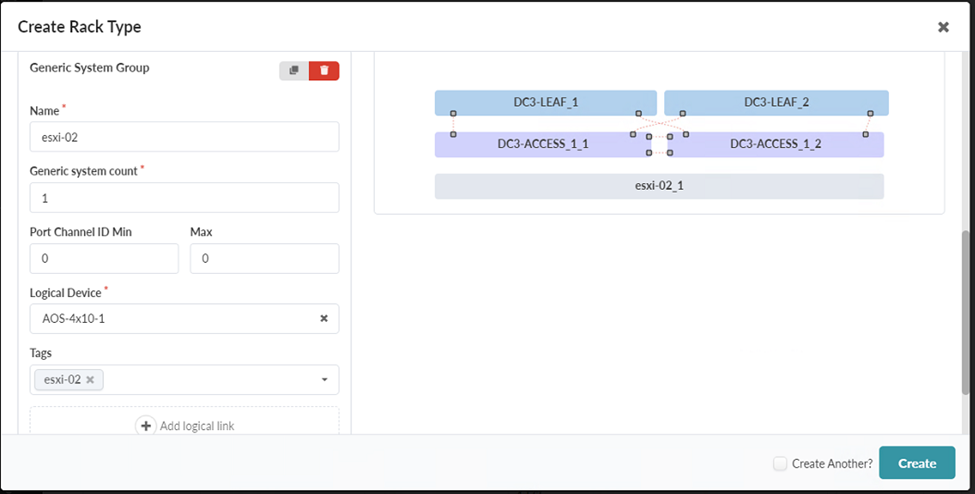

- Under Generic Systems, click Add new generic system group. Name the generic system group esxi-02 and select an appropriate logical device that represents your server.

  In this document, we are choosing the AOS-4x10-1 as the logical device because the ESXi servers in our data center JVD test lab have 4x 10-gigabit NIC ports. Creating generic systems connects the leaf switches to the generic systems, such as servers in high availability mode.

  The Generic System Group should end up with the following configuration:

  - Name: esxi-02
  - Generic system count: 1
  - Port Channel ID Min: 0, Max: 0
  - Logical Device: AOS-4x10-1

Figure 43: Rack Type Creation in Builder with Generic Systems Selected

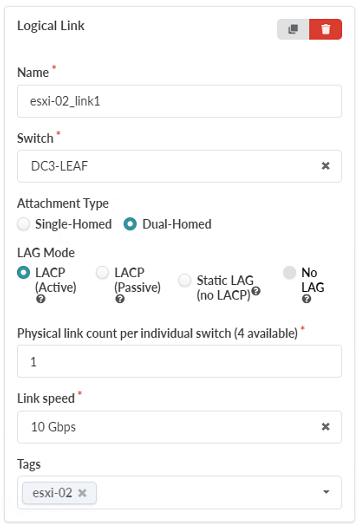

- While still under Generic Systems, click Add logical link to create a logical link.

  This logical link will be dual-homed from esxi-02 to the DC3-LEAF switch layer. The DC3-LEAF switch layer is the collapsed spine layer in our topology, not the access switches. This configuration demonstrates the ability to connect servers directly to switches in the collapsed fabric layer.

  Create the Logical Link with the following parameters:

  - Name: esxi-02_link1
  - Switch: DC3-Leaf
  - Attachment Type: Dual-Homed
  - LAG Mode: LACP (Active)
  - Physical link count per individual switch: 1
  - Link Speed: 10 Gbps

Figure 44: Create Rack Type Pop-up showing the Logical Link options under the Generic Systems tab

- Click Add new generic system group.

Figure 45: The Add new generic system group button under the Generic Systems tab in the Create Rack Type Pop-up

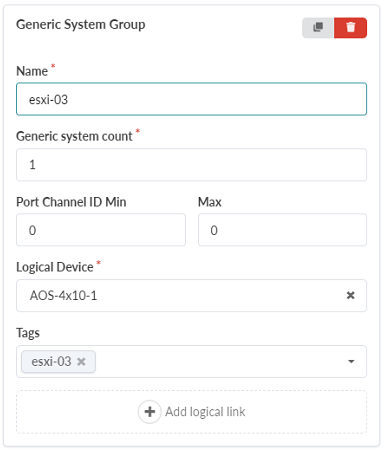

- Create a Generic System Group with the following parameters:

  - Name: esxi-03
  - Generic system count: 1
  - Port Channel ID Min: 0, Max: 0
  - Logical Device: AOS-4x10-1

Figure 46: Create Rack Type Pop-up showing the Logical Link configuration options in the Access Switches tab

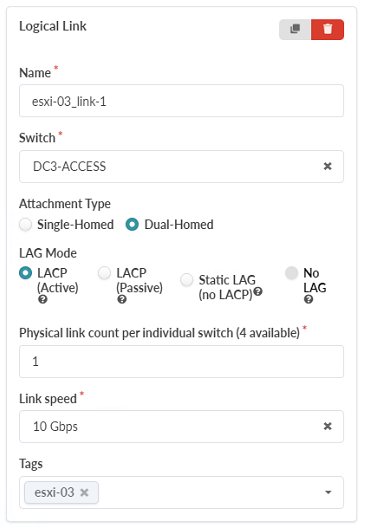

- While still under Generic Systems, click Add logical link to create a logical link. This logical link will be dual-homed from esxi-03 to the DC3-ACCESS switch layer, which is the access switches. This topology demonstrates the ability to connect servers to the access switches. Note that while we are connecting this server to the access switches using a 10 Gbps link, the EX4400 access switches have a limited number of 10 Gbps ports. We recommend using these EX4400 access switches predominantly to attach 1 Gbps devices to the collapsed fabric.

  The Logical Link should end up with the following parameters:

  - Name: esxi-03_link1
  - Switch: DC3-Access
  - Attachment Type: Dual-Homed
  - LAG Mode: LACP (Active)
  - Physical link count per individual switch: 1
  - Link Speed: 10 Gbps

Figure 47: Create Rack Type Pop-up showing the Logical Link options under the Generic Systems tab

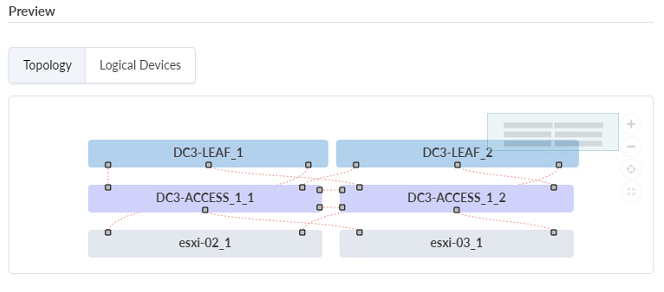

- Click Create. You will know you have successfully created your rack when the topology preview looks like the one in the image below:
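The logical links defined in this rack type can be tabulated to confirm how many physical links and how much bandwidth each one implies. This is a sketch under one stated assumption: a dual-homed link with a physical link count of 1 per individual switch results in one link to each of the two switches in the pair. The class and field names are hypothetical; the link names, endpoints, and speeds come from the steps above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogicalLink:
    name: str
    source: str            # device or group the link starts from
    target: str            # switch layer the link attaches to
    dual_homed: bool
    links_per_switch: int  # "Physical link count per individual switch"
    speed_gbps: int

    def physical_links(self) -> int:
        # Assumption: a dual-homed link lands on both switches of the pair.
        return self.links_per_switch * (2 if self.dual_homed else 1)

    def bandwidth_gbps(self) -> int:
        return self.physical_links() * self.speed_gbps


RACK_LINKS = [
    LogicalLink("uplink", "DC3-Access", "DC3-Leaf", True, 1, 100),
    LogicalLink("esxi-02_link1", "esxi-02", "DC3-Leaf", True, 1, 10),
    LogicalLink("esxi-03_link1", "esxi-03", "DC3-Access", True, 1, 10),
]

for link in RACK_LINKS:
    print(f"{link.name}: {link.physical_links()} x {link.speed_gbps}G "
          f"from {link.source} to {link.target}")
```

Under this assumption, each access switch uses two 100 Gbps uplinks, which matches the two 100 Gbps ports defined in the EX4400-48MP logical device.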

Create Templates

Templates combine one or more Rack Types to create a logical representation of the entire fabric. They will be used to create a blueprint.

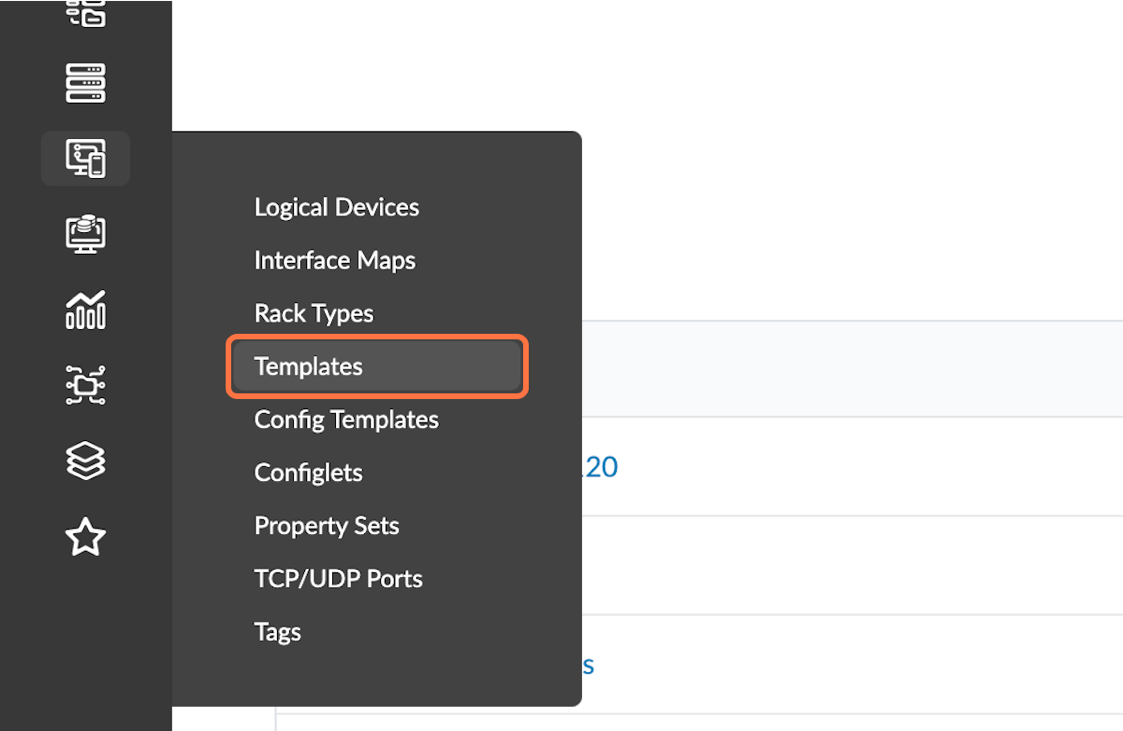

- Navigate to Design > Templates. Select Create Template in the upper-right corner.

Figure 49: The Design Menu with the Templates Button Highlighted

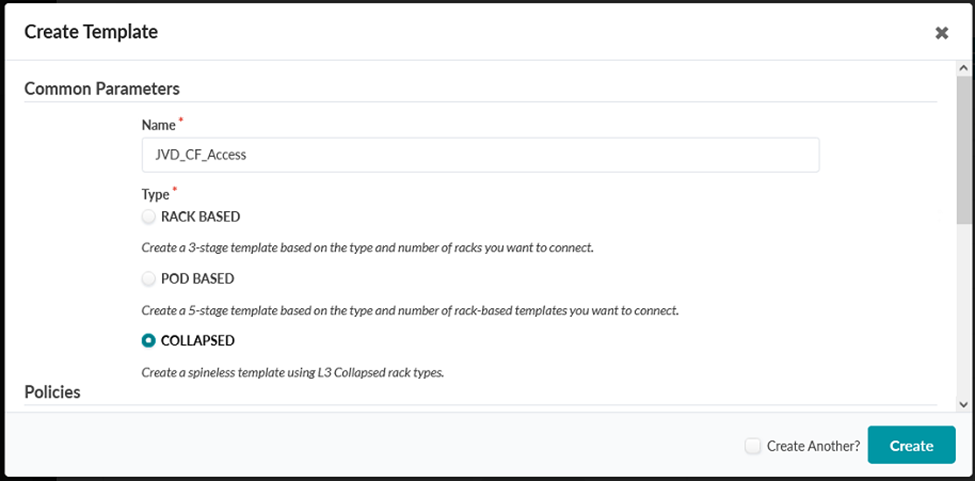

- Name the template JVD_CF_Access. Set Type to Collapsed and select

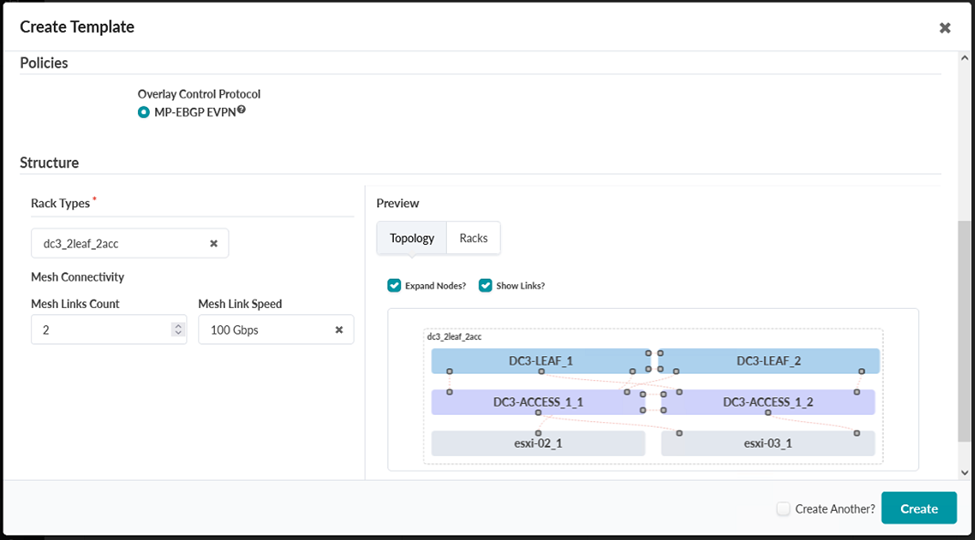

MP-BGP-EVPN as the overlay control protocol. Figure 50: Create Template Pop-up with the COLLAPSED type selected

- Select the Rack Type, dc3_2leaf_2acc, that was created earlier in this procedure. Set Mesh Links Count: 2 and Mesh Link Speed: 100 Gbps. Click Create.

Create ASN Pool

Create a pool of ASNs for automatic assignment of ASNs later in the walkthrough.

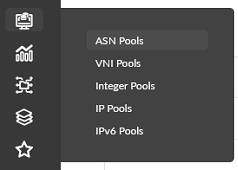

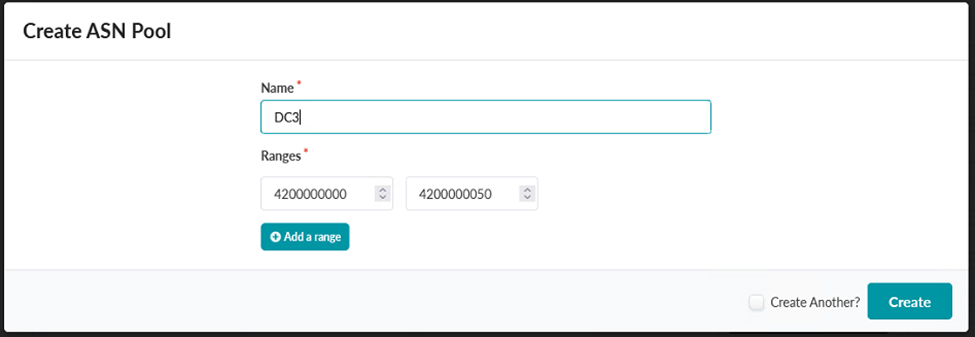

- Navigate to Resources > ASN Pools. Select Create ASN Pool in the upper-right corner.

Figure 52: Resources Menu with the ASN Pools Button Highlighted

- Create an ASN pool with Name: JVD_CF_ASN1 for internal ASNs. This guide uses the Range 4200000000-4200000050 for this ASN Pool. These ASNs are from the block of 32-bit ASNs reserved by IANA for private use.

Figure 53: Create ASN Pool Pop-up Showing the Creation of the ASN Pool DC3

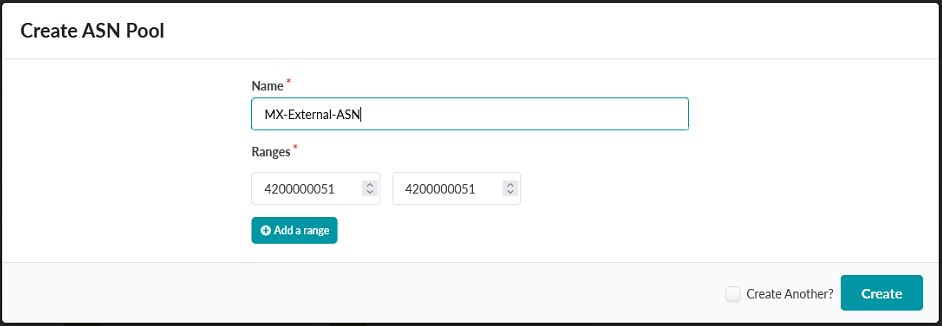

- Create a second ASN pool for the external ASN. Set Name: MX-External-ASN and a Range of 4200000051-4200000051 to define the single external ASN.
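The two ASN ranges above can be checked against the private-use 32-bit ASN block (4200000000 through 4294967294, per RFC 6996) and against each other. The sketch below is illustrative; the helper names are hypothetical and the pool names and ranges are the ones used in this guide.

```python
# Private-use 32-bit ASNs: 4200000000 through 4294967294 inclusive (RFC 6996).
PRIVATE_32BIT_ASNS = range(4200000000, 4294967295)

ASN_POOLS = {
    "JVD_CF_ASN1": (4200000000, 4200000050),
    "MX-External-ASN": (4200000051, 4200000051),
}


def pool_size(first: int, last: int) -> int:
    """Number of ASNs in an inclusive range."""
    return last - first + 1


def is_private_32bit(first: int, last: int) -> bool:
    """True when the whole inclusive range lies in the private-use block."""
    return first <= last and first in PRIVATE_32BIT_ASNS and last in PRIVATE_32BIT_ASNS


def ranges_overlap(a: tuple[int, int], b: tuple[int, int]) -> bool:
    """True when two inclusive ranges share at least one ASN."""
    return a[0] <= b[1] and b[0] <= a[1]


for name, (first, last) in ASN_POOLS.items():
    print(name, pool_size(first, last), "ASNs, private:", is_private_32bit(first, last))
```

The internal pool provides 51 ASNs, and the external pool provides exactly one, with no overlap between them.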

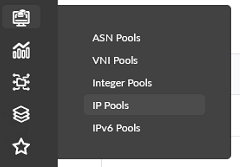

Create IP and Loopback Pool

Create IP pools for automatic assignment of IP addresses later in the walkthrough.

- Navigate to Resources > IP Pools and then select the Create IP Pool button in the upper-right corner.

Figure 55: Resources Menu with the ASN Pools Button Highlighted

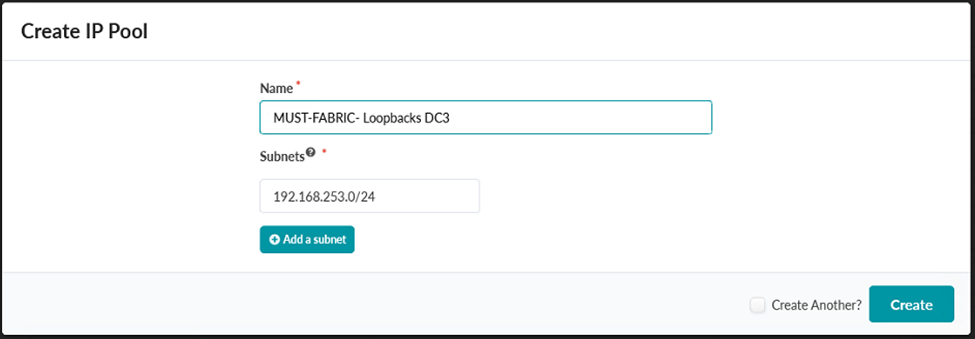

- Create an IP Pool named MUST-FABRIC-Loopbacks DC3 with a subnet of 192.168.253.0/24. Click Create.

Figure 56: Create IP Pool Pop-up Showing the Creation of the MUST-FABRIC-Loopbacks DC3 IP Pool

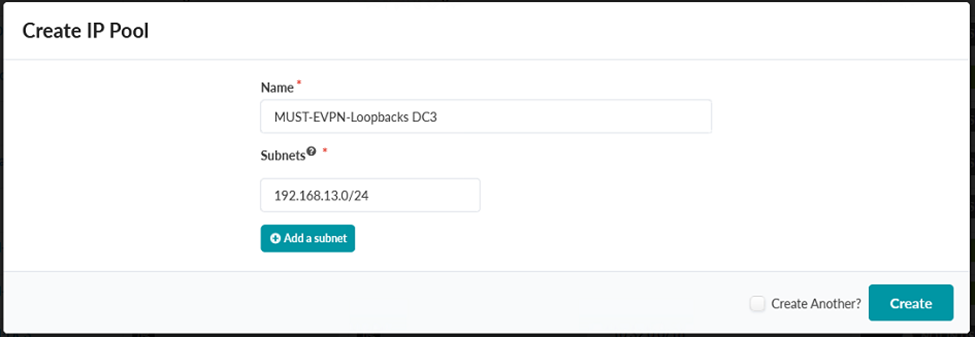

- Create a second IP Pool named MUST-EVPN-Loopbacks DC3 with a subnet of 192.168.13.0/24. Click Create.

Figure 57: Create IP Pool Pop-up Showing the Creation of the MUST-EVPN-Loopbacks DC3 IP Pool

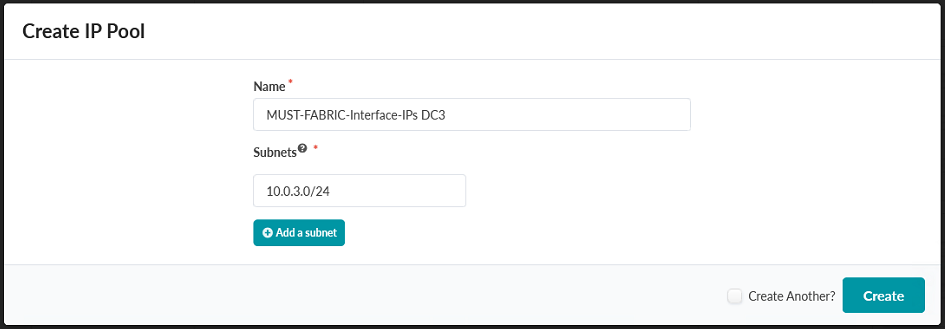

- Create a third IP Pool named MUST-FABRIC-Interface-IPs DC3 with a subnet of 10.0.3.0/24. Click Create.

Figure 58: Create IP Pool Pop-up Showing the Creation of the MUST-FABRIC-Interface-IPs DC3 IP Pool

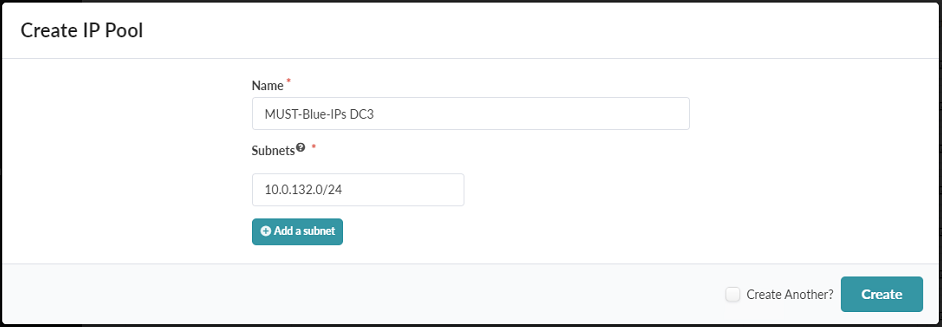

- Create a fourth IP Pool named MUST-Blue-IPs DC3 with a subnet of 10.0.132.0/24. Click Create.

Figure 59: Create IP Pool Pop-up Showing the Creation of the MUST-Blue-IPs DC3 IP Pool

- Create a fifth IP Pool named MUST-Red-IPs DC3 with a subnet of 10.0.135.0/24. Click Create.

Figure 60: Create IP Pool Pop-up Showing the Creation of the MUST-Red-IPs DC3 IP Pool
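Before creating the pools, it can be useful to confirm that the five subnets are valid and do not overlap. The following sketch uses Python's standard `ipaddress` module; the function name is hypothetical and the pool names and subnets are the ones used in this guide.

```python
import ipaddress
from itertools import combinations

IP_POOLS = {
    "MUST-FABRIC-Loopbacks DC3": "192.168.253.0/24",
    "MUST-EVPN-Loopbacks DC3": "192.168.13.0/24",
    "MUST-FABRIC-Interface-IPs DC3": "10.0.3.0/24",
    "MUST-Blue-IPs DC3": "10.0.132.0/24",
    "MUST-Red-IPs DC3": "10.0.135.0/24",
}


def overlapping_pools(pools: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of pool names whose subnets overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in pools.items()}
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2)
        if net_a.overlaps(net_b)
    ]


conflicts = overlapping_pools(IP_POOLS)
print("overlapping pools:", conflicts if conflicts else "none")
```

`ip_network` raises `ValueError` if a subnet has host bits set, so a typo such as 10.0.3.1/24 is caught as well.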

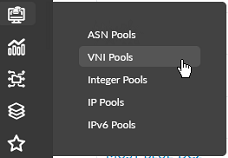

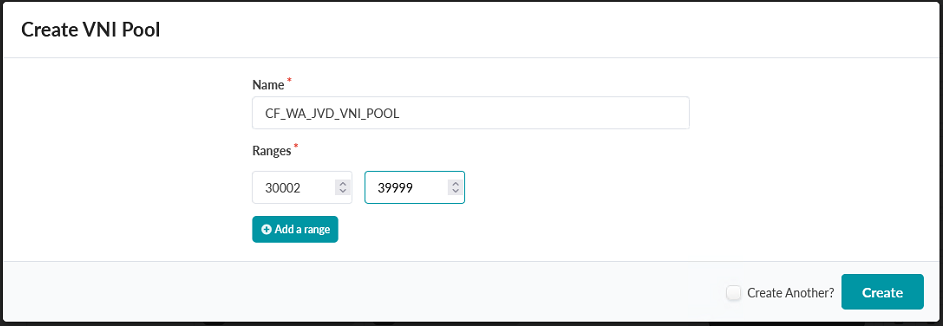

Create VNI Pool

Create a pool of VNIs for automatic assignment of VNIs later in the walkthrough.

- Navigate to Resources > VNI Pools. Select the Create VNI Pool button in the upper-right corner.

Figure 61: VNI Pools button under the Resources menu

- Create a VNI Pool named CF_WA_JVD_VNI_POOL with a range of 30002-39999.

Click Create.
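As a quick check, the VNI range can be validated against the 24-bit VXLAN VNI space and its size computed. This is a minimal sketch with a hypothetical function name; Apstra may enforce a narrower permitted range than the protocol limit used here.

```python
VNI_MAX = 2**24 - 1  # VXLAN network identifiers are 24 bits wide


def vni_pool_size(first: int, last: int) -> int:
    """Validate an inclusive VNI range and return how many VNIs it holds."""
    if not 1 <= first <= last <= VNI_MAX:
        raise ValueError(f"invalid VNI range {first}-{last}")
    return last - first + 1


size = vni_pool_size(30002, 39999)
print("CF_WA_JVD_VNI_POOL holds", size, "VNIs")
```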

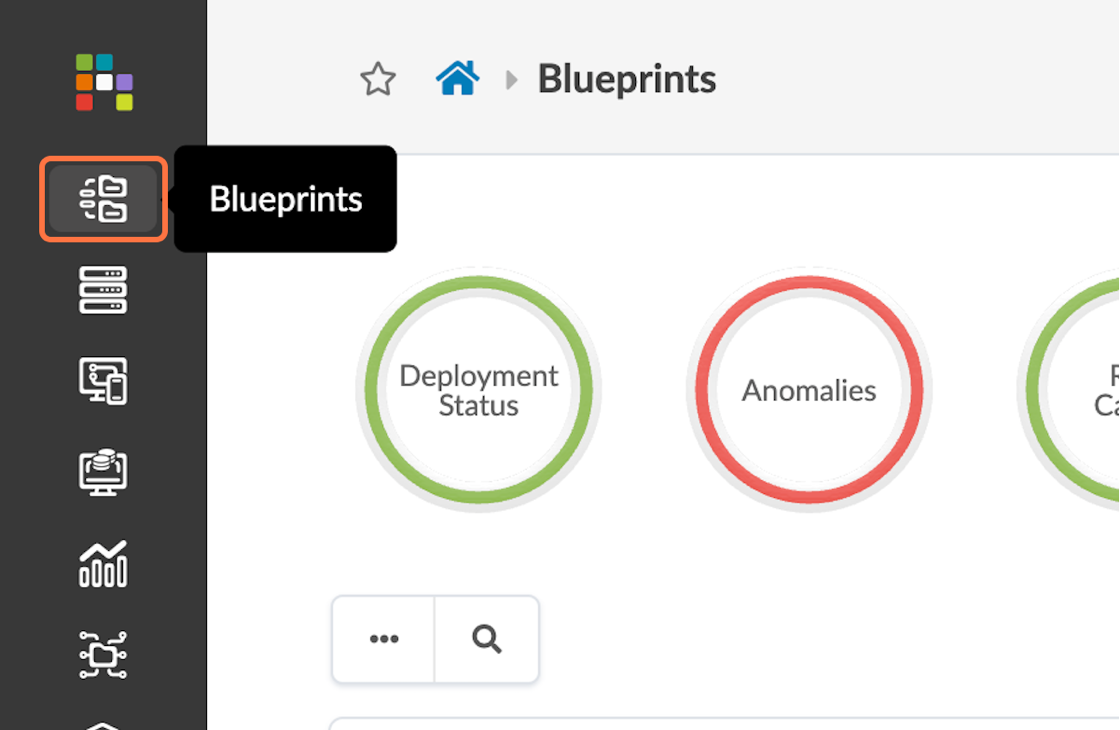

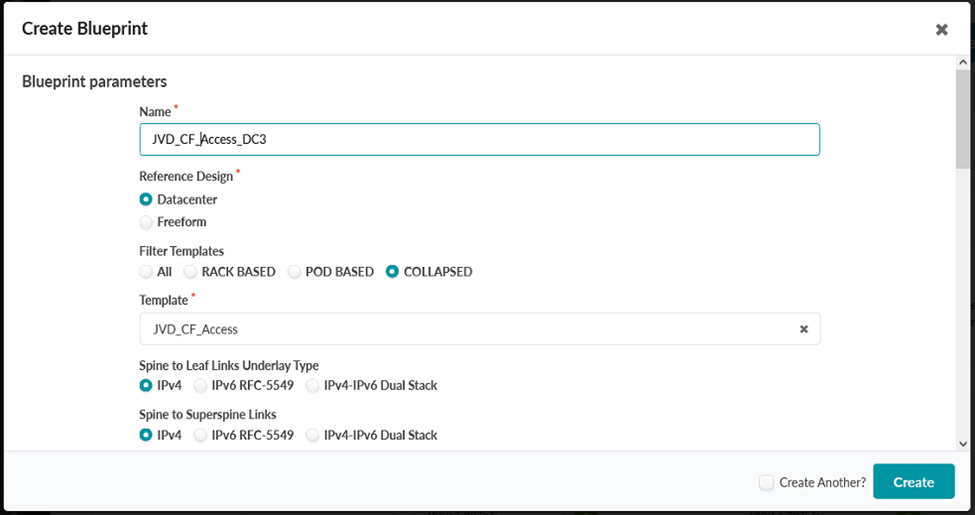

Create Blueprint

Once configured and deployed, this blueprint will be the primary means of interacting with the fabric for administrative purposes.

- Navigate to Blueprints. Select the Create Blueprint button in the upper-right corner.

Figure 63: Blueprints Button on the Main Menu Highlighted

- Name the Blueprint JVD_CF_Access_DC3.

- Select Datacenter for the Reference Design.

- Filter Templates, select COLLAPSED.

- Select the JVD_CF_Access template that was created earlier in this JVDE and choose IPv4 for the links.

Figure 64: Create Blueprint Pop-up with Inputs Populated for this JVD

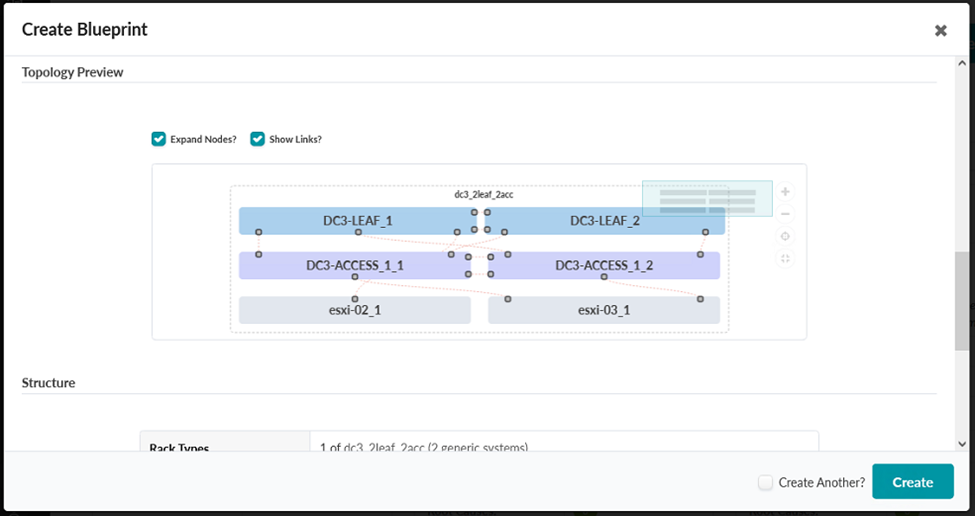

- Scroll down in the Create Blueprint pop-up and verify that the topology preview matches the one seen during the Create Rack steps earlier in this document.

Configure Blueprint

Now that all the logical abstractions necessary to define the basic structure of your fabric have been created, it is time to configure the blueprint with the details of your network environment.

- Navigate to Blueprints. Select the blueprint that was just created.

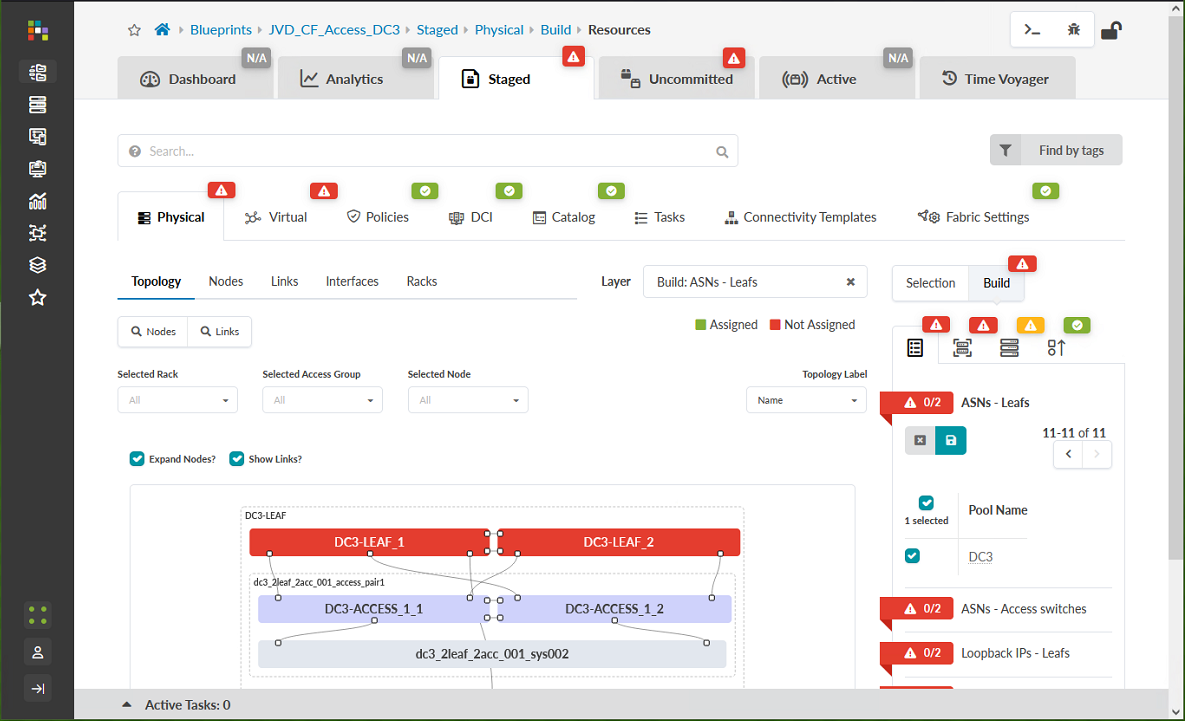

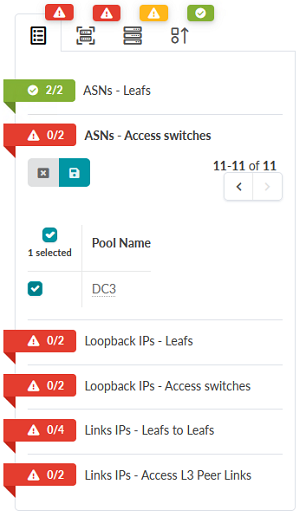

- Go to Staged > Topology. Click on the icon beside the words ASNs – Leafs in the panel on the right side of the screen.

- Select the ASN, DC3, that was previously created for internal use.

Figure 66: Staged Tab in the JVD_CF_Access_DC3 Blueprint Showing ASN - Leafs assignment options

- Click the Save icon:

Figure 67: Close up of the Save icon in the Staged Tab in the JVD_CF_Access_DC3 Blueprint

- Click the icon beside ASNs – Access switches. Assign the DC3 ASN to the access switches.

Figure 68: Staged Tab in the JVD_CF_Access_DC3 Blueprint Showing ASN – Access Switches assignment options

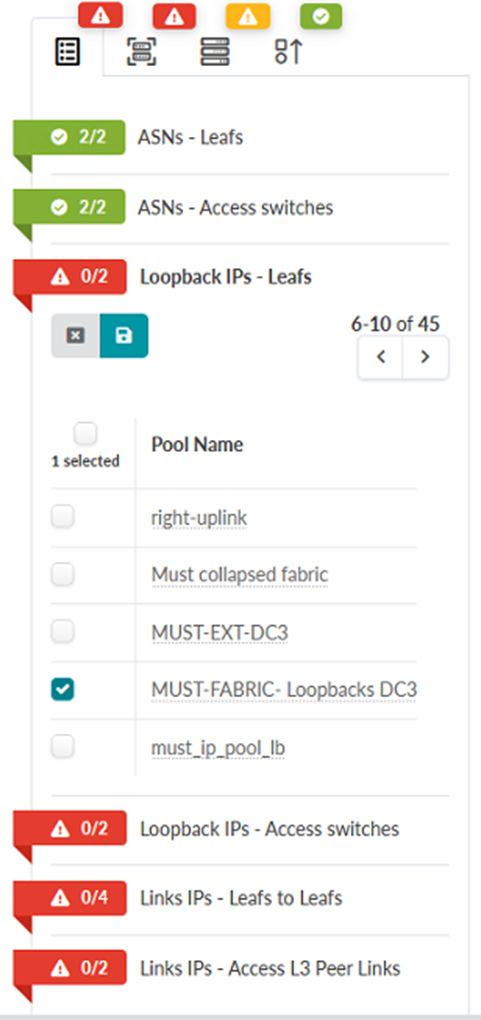

- Click the icon beside Loopback IPs – Leafs. Assign the MUST-FABRIC-Loopbacks DC3 IP Pool.

Figure 69: Staged Tab in the JVD_CF_Access_DC3 Blueprint Showing Loopback IPs – Leafs assignment options

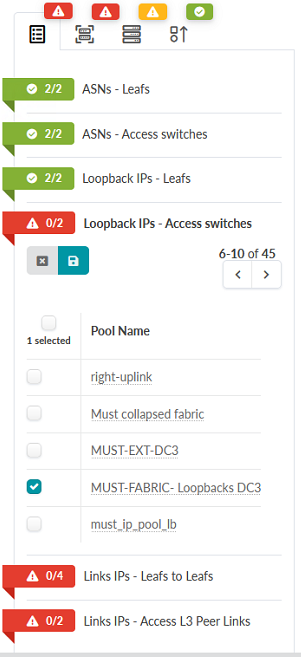

- Click the icon beside Loopback IPs – Access switches. Assign the MUST-FABRIC-Loopbacks DC3 IP Pool.

Figure 70: Staged Tab in the JVD_CF_Access_DC3 Blueprint Showing Loopback IPs – Access switches assignment options

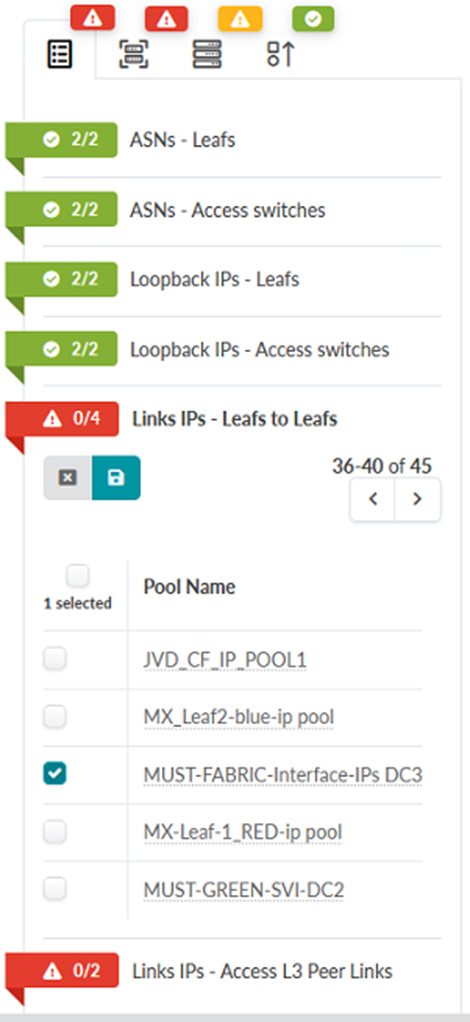

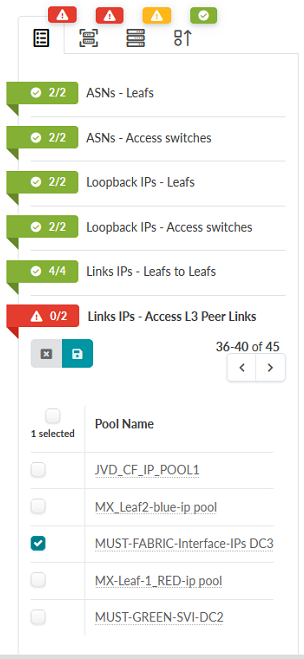

- Click the icon next to Links IPs – Leafs.

Figure 71: Staged Tab in the JVD_CF_Access_DC3 Blueprint Showing Links IPs – Leafs to Leafs assignment options

- Click the icon next to Links IPs – Access L3 Peer Links. Assign the MUST-FABRIC-Interface-IPs-DC3 IP Pool.

Figure 72: Staged Tab in the JVD_CF_Access_DC3 Blueprint Showing Links IPs – Access L3 Peer Links assignment options

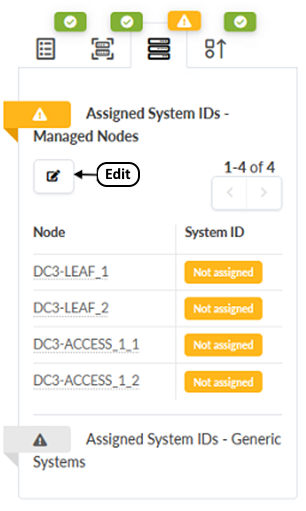

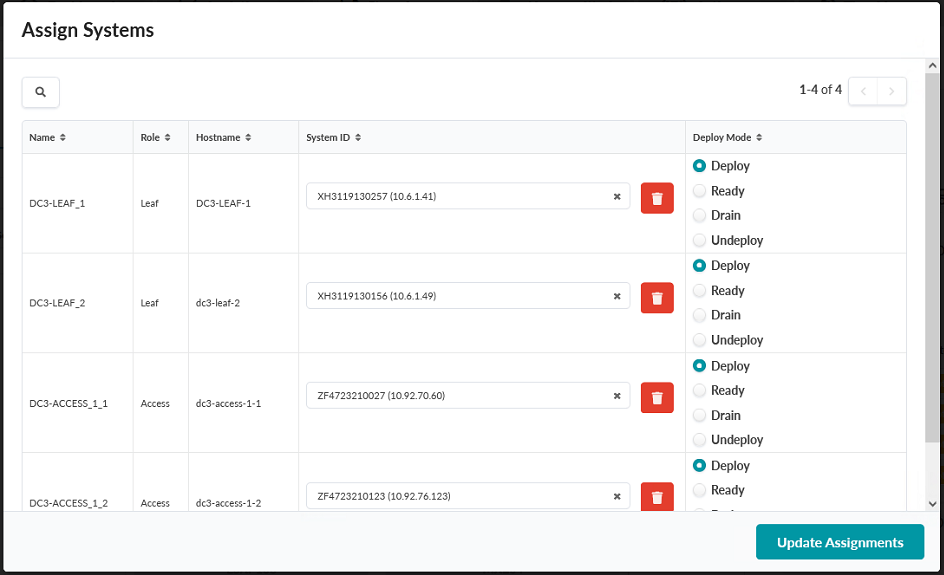

- Deploy the systems by assigning system IDs to the switches. Click the Device icon, which looks like three stacked switches. Click the Edit icon under Assigned System IDs – Managed Nodes.

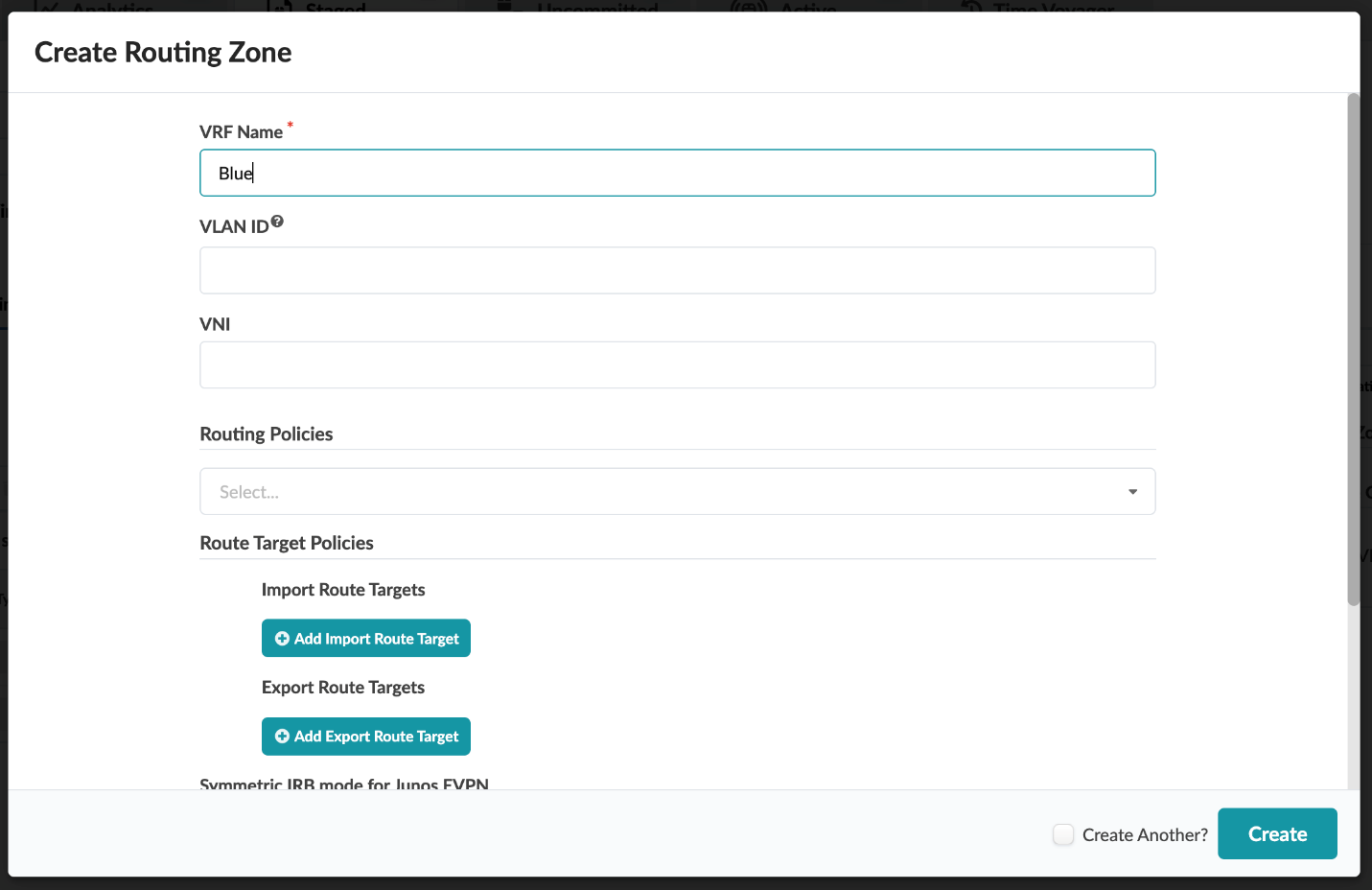

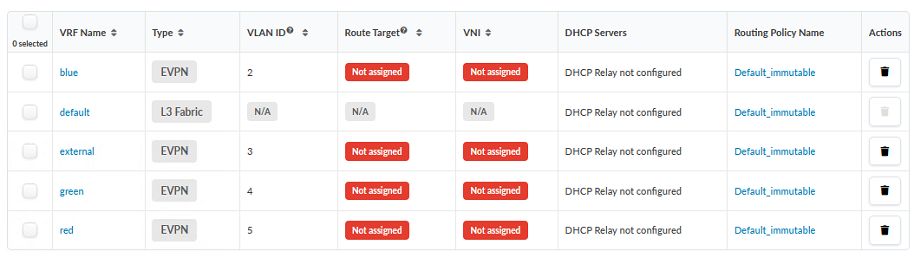

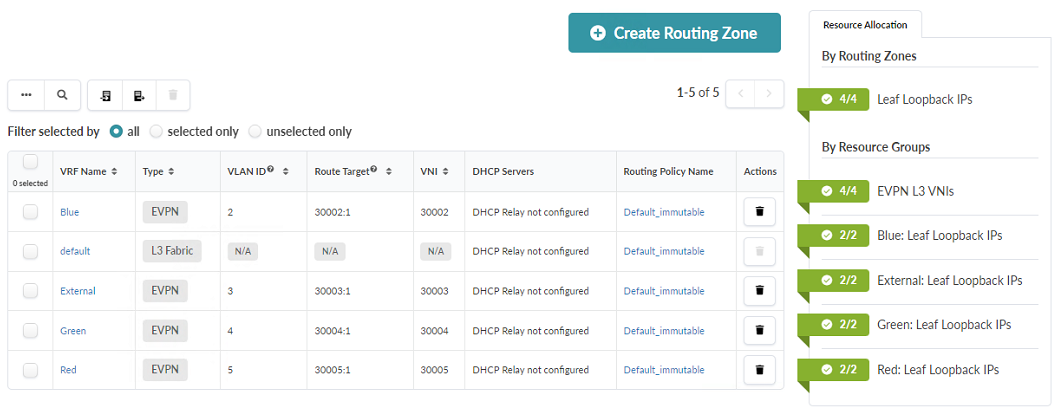

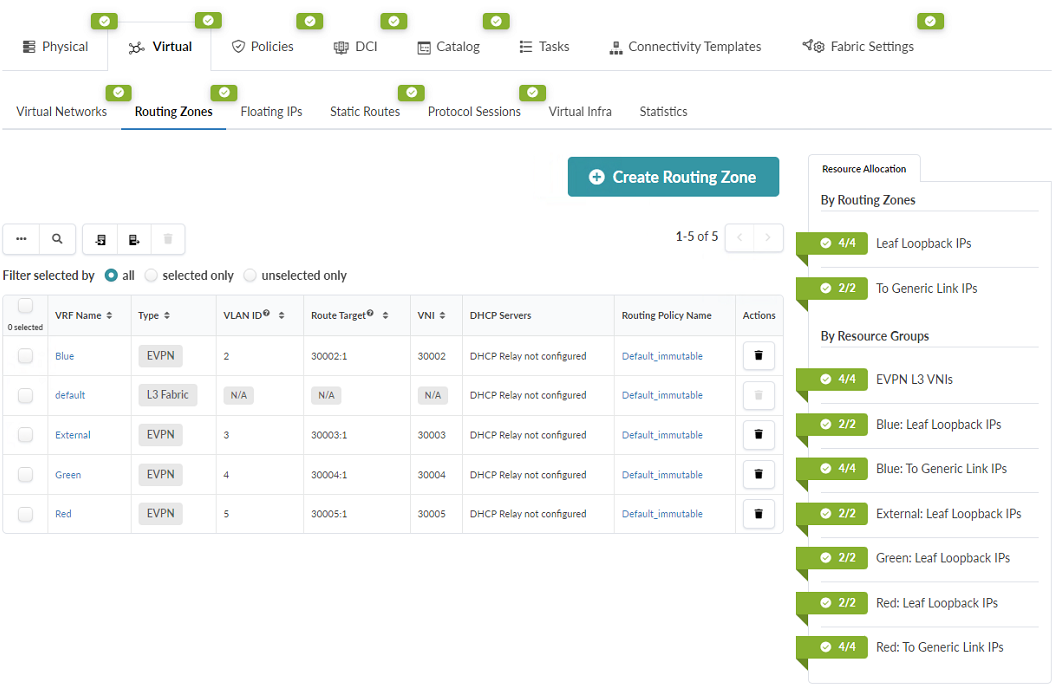

Create Routing Zones

Define the routing zones where the virtual networks will operate.

- From within the JVD_CF_Access_DC3 blueprint, navigate to Staged > Virtual > Routing Zones. Select Create Routing Zone in the upper-right corner of the main content frame.

- Create four VRFs: Blue, External, Green, and Red. To create a routing zone, enter the VRF Name and click Create. Repeat the process until all four routing zones are created.

Figure 75: Create Routing Zone Pop-up in the JVD_CF_Access_DC3 Blueprint

- The Routing Zone table should include the four new routing zones and the default routing zone:

Create Virtual Networks

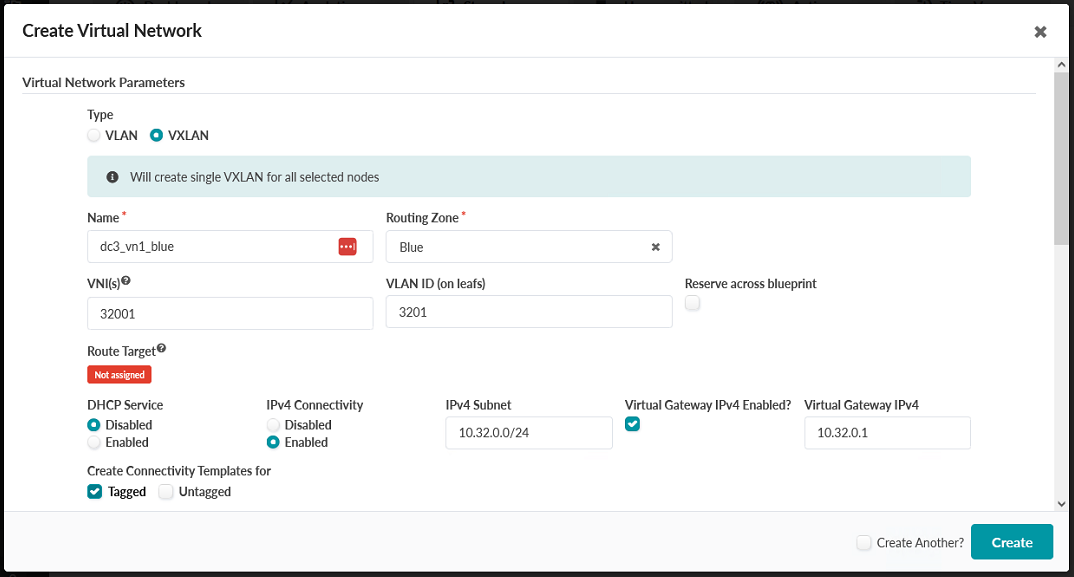

Define the virtual networks that will be a part of this fabric.

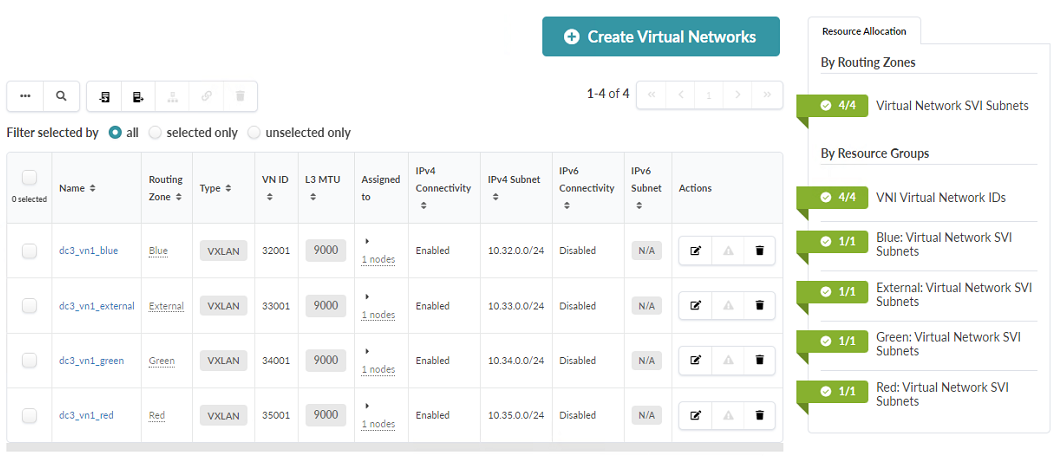

- From within the JVD_CF_Access_DC3 blueprint, navigate to Staged > Virtual > Virtual Networks. Select Create Virtual Networks in the upper-right corner of the main content frame.

- Create Virtual Networks according to the parameters in the following table and figures. Do not click Create until you have reached and read step 3 of this section.

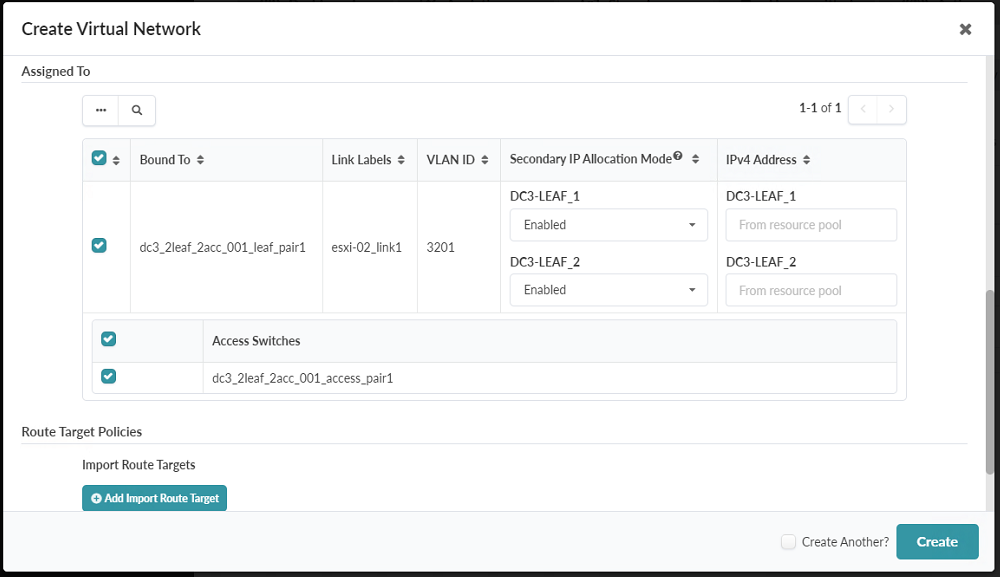

| VXLAN Options | Blue routing zone | External routing zone | Green routing zone | Red routing zone |
| --- | --- | --- | --- | --- |
| VRF Name | dc3_vn1_blue | dc3_vn1_external | dc3_vn1_green | dc3_vn1_red |
| VNI | 32001 | 33001 | 34001 | 35001 |
| VLAN ID | 3201 | 3301 | 3401 | 3501 |
| DHCP Service | Disabled | Disabled | Disabled | Disabled |
| IPv4 Connectivity | Enabled | Enabled | Enabled | Enabled |
| IPv4 Subnet | 10.32.0.0/24 | 10.33.0.0/24 | 10.34.0.0/24 | 10.35.0.0/24 |
| Virtual Gateway IPv4 Enabled | Yes | Yes | Yes | Yes |
| Virtual Gateway IPv4 | 10.32.0.1 | 10.33.0.1 | 10.34.0.1 | 10.35.0.1 |
| Create Connectivity Templates For | Tagged | Tagged | Tagged | Tagged |

Figure 77: Upper Part of the Create Virtual Network Pop-up

- Before you click Create to create the virtual networks, you must assign virtual networks to the relevant switches. Scroll down in the open pop-up, assign to all switches, and then click Create.

Figure 78: Lower Part of the Create Virtual Network Pop-up

- Repeat this process for each routing zone in the table. Verify the results in the Virtual Networks table.
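Before entering the values in the UI, it can help to check the planned parameters for simple mistakes. The Python sketch below uses the standard `ipaddress` module to confirm that each virtual gateway is a usable host address inside its subnet, that each VLAN ID is in the valid 1-4094 range, and that no two subnets overlap. The dictionary layout and the helper names `check_virtual_network` and `overlapping_subnets` are assumptions made for illustration; they do not correspond to an Apstra API.

```python
import ipaddress
from itertools import combinations

# Values copied from the virtual network table in this section.
VIRTUAL_NETWORKS = [
    {"name": "dc3_vn1_blue", "vni": 32001, "vlan_id": 3201,
     "subnet": "10.32.0.0/24", "gateway": "10.32.0.1"},
    {"name": "dc3_vn1_external", "vni": 33001, "vlan_id": 3301,
     "subnet": "10.33.0.0/24", "gateway": "10.33.0.1"},
    {"name": "dc3_vn1_green", "vni": 34001, "vlan_id": 3401,
     "subnet": "10.34.0.0/24", "gateway": "10.34.0.1"},
    {"name": "dc3_vn1_red", "vni": 35001, "vlan_id": 3501,
     "subnet": "10.35.0.0/24", "gateway": "10.35.0.1"},
]


def check_virtual_network(vn):
    """Return a list of problems found in one virtual network definition."""
    problems = []
    subnet = ipaddress.ip_network(vn["subnet"])
    gateway = ipaddress.ip_address(vn["gateway"])
    reserved = (subnet.network_address, subnet.broadcast_address)
    if gateway not in subnet or gateway in reserved:
        problems.append(f"gateway {gateway} is not a host address in {subnet}")
    if not 1 <= vn["vlan_id"] <= 4094:
        problems.append(f"VLAN ID {vn['vlan_id']} is out of range")
    return problems


def overlapping_subnets(vns):
    """Return pairs of virtual network names whose subnets overlap."""
    pairs = []
    for a, b in combinations(vns, 2):
        net_a = ipaddress.ip_network(a["subnet"])
        net_b = ipaddress.ip_network(b["subnet"])
        if net_a.overlaps(net_b):
            pairs.append((a["name"], b["name"]))
    return pairs


for vn in VIRTUAL_NETWORKS:
    print(vn["name"], check_virtual_network(vn))
print("overlaps:", overlapping_subnets(VIRTUAL_NETWORKS))
```

An empty list for every virtual network, and an empty overlap list, indicates that the planned values are internally consistent.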

Assign Routing Zone Resources

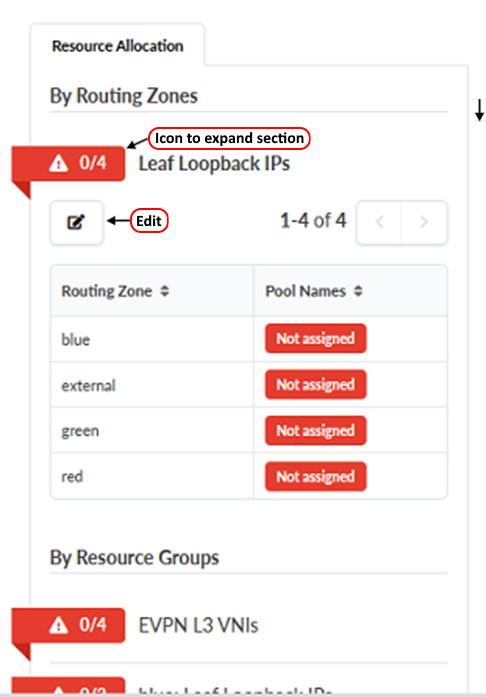

Assign resources such as IPs and VNIs to the routing zones created above. Because we defined these resources in the resource pools earlier in this procedure, we can simply add the relevant resource pools and Apstra will automatically assign resources as needed.

- From within the JVD_CF_Access_DC3 blueprint, navigate to Staged > Virtual > Routing Zones. Select the Create Routing Zone button in the upper-right corner of the main content frame.

- In the Resource Allocation configuration box on the right of the Routing Zones tab, click the icon next to Leaf loopback IPs.

Figure 80: Resource Allocation configuration box in the Routing Zones tab showing the Leaf Loopback IPs section open and unconfigured

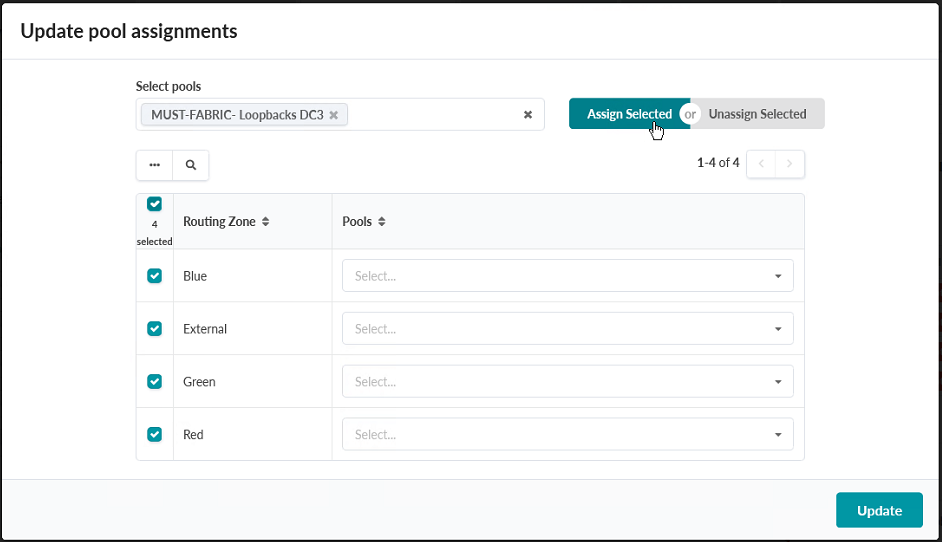

- Click the Edit icon next to Leaf Loopback IPs to open the Update Pool Assignments pop-up box.

- Select all Routing Zones by clicking the empty box in the upper left corner of

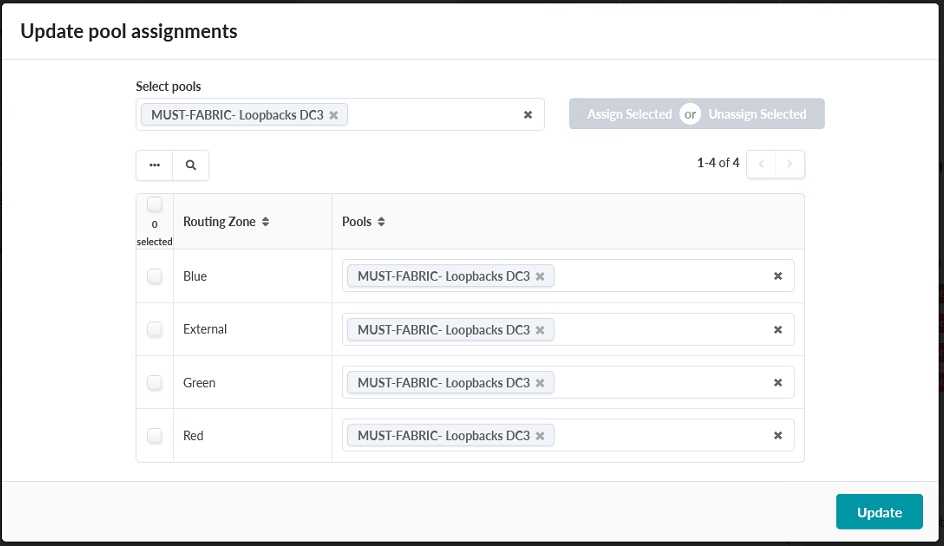

the table. Click the Assign Selected button. Figure 81: The Update Pool Assignments pop-up box showing all routing zones having been selected with the Assign Selected button moused over

- Verify that all Routing Zones have been assigned a Loopback IP Pool. Click Update.

Figure 82: The Update Pool Assignments pop-up box showing all routing zones having been assigned a Loopback IP Pool

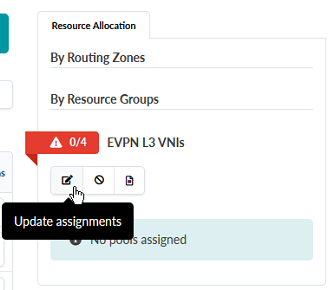

- From within the Resource Allocation box, click the red box next to EVPN L3 VNIs. Select the Update assignments button.

Figure 83: The Update assignments button for EVPN L3 VNIs under Resource Groups highlighted

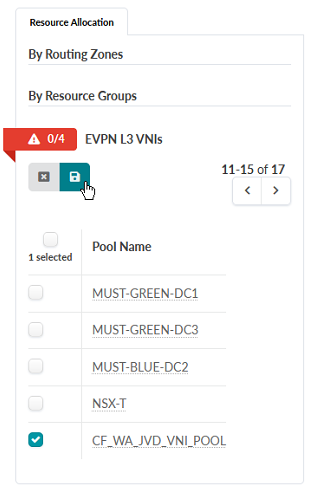

- Select the previously created CF_WA_JVD_VNI_POOL. Click the Save icon.

Figure 84: The Save icon highlighted for EVPN L3 VNIs under Resource Groups showing the CF_WA_JVD_VNI_POOL having been selected

- Verify that L3 VNIs have been assigned by examining the Routing Zones table. You should see the automatically assigned Route Targets and VNIs.

Add External Router

You can add an external router to the fabric to configure network connectivity beyond the fabric itself. For the purposes of this document, an MX204 router is used as the external router. All routers are treated as generic systems by Apstra, making the specific router interchangeable. The router itself (and its configuration) is not considered part of this JVDE.

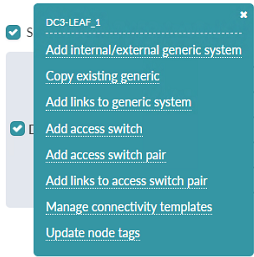

- From within the JVD_CF_Access_DC3 blueprint, navigate to Staged > Physical. Click on DC3-Leaf-1 in the topology.

- Click the checkbox on DC3-Leaf-1 and select Add internal/external generic system.

Figure 86: DC3-Leaf-1 Pop-up Showing the ability to add an External Generic System

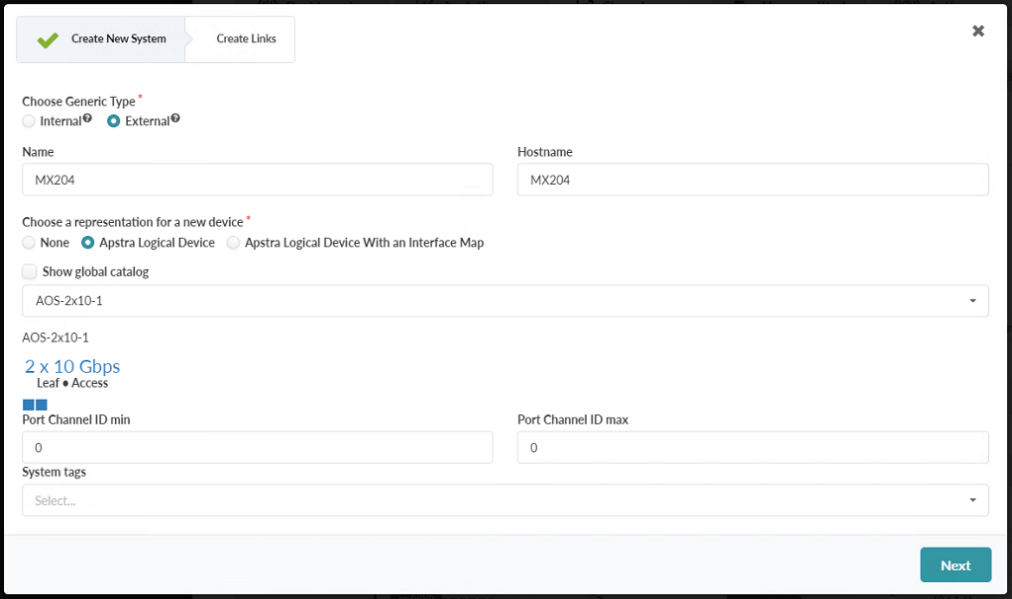

- Create an external system. Name it MX204 and select a logical device with 2x10 Gbps ports.

Note: The 2x10 Gbps logical device does not accurately reflect the MX204. However, as an External Generic System, the MX204 is not directly managed by Juniper Apstra. Apstra only needs to know how many interfaces of each type the fabric will connect to.

Figure 87: First Part of the Assign Internal External Generic System pop-up

Click Next.

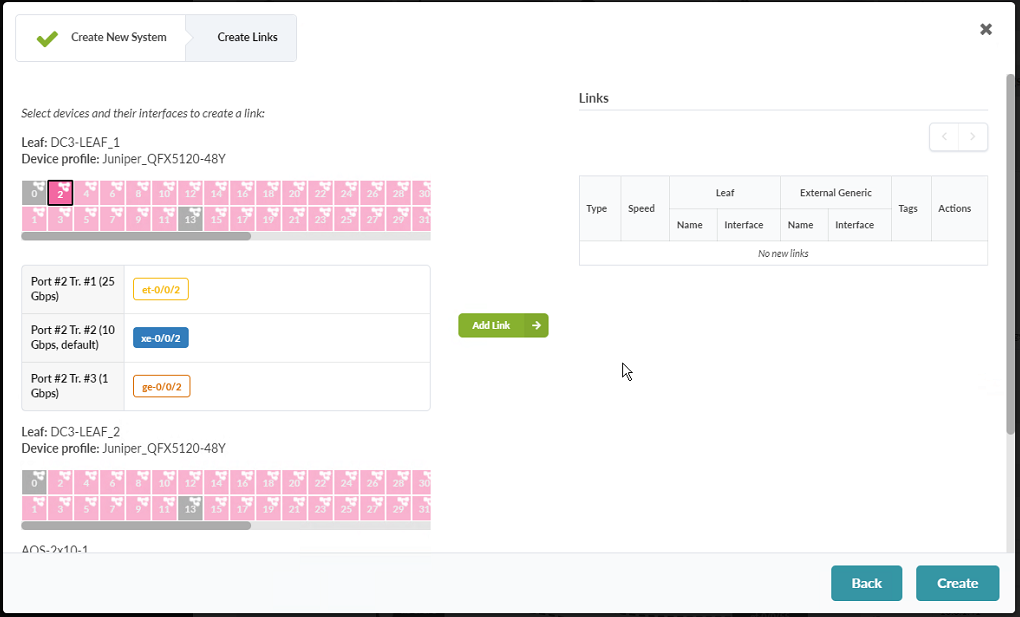

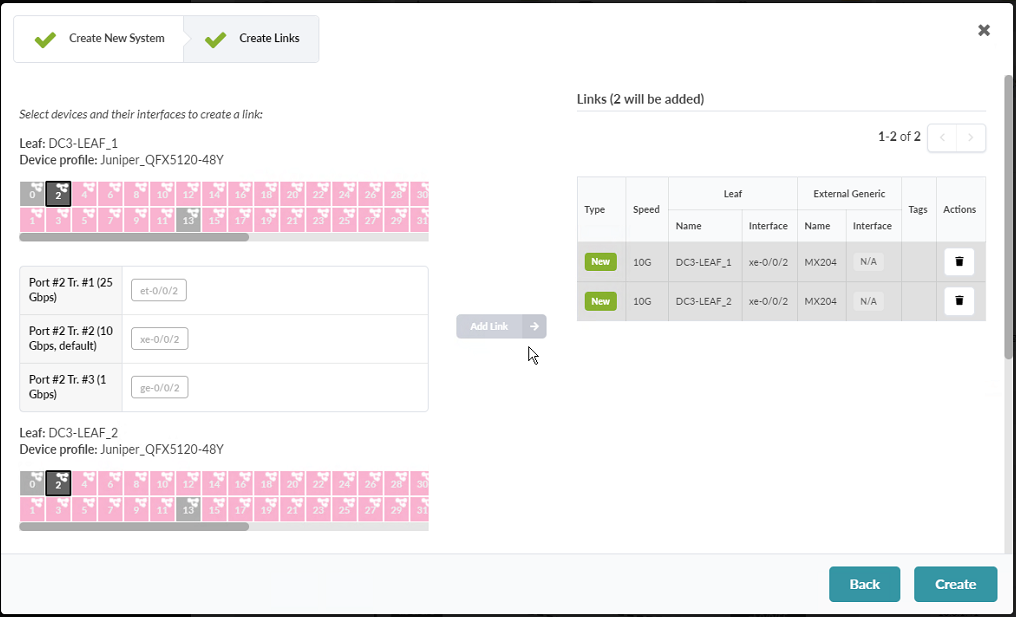

- Create links for the new system to both DC3-Leaf-1 and DC3-Leaf-2. You create these links by first selecting an interface and then selecting a port speed from the list that becomes available when you click the port.

Figure 88: Second Part of the Assign Internal/External Generic System pop-up

- Click Add Link. Repeat this step for both switches.

- Click Create in the bottom right corner once you’re done.

Figure 89: Second Part of the Assign Internal/External Generic System

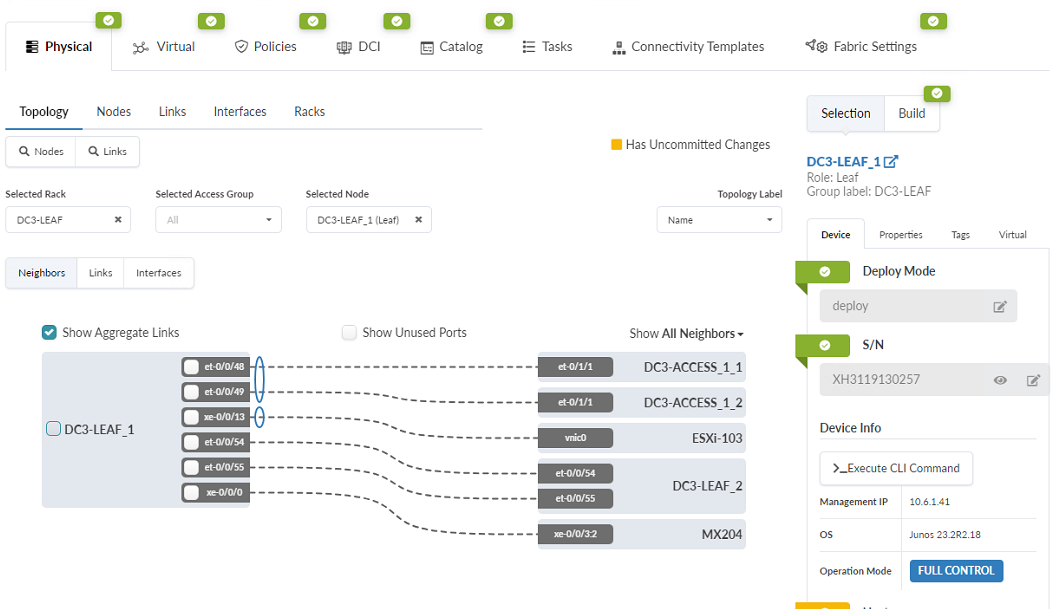

- When complete, you should see a new link on the graphic.

The MX204 referenced above is a stand-in for a generic router and is not considered a key component of this JVD. Similar steps can be taken to connect any router. The MX interface configuration is provided below as an example of how a router is set up to interface with the network described in this JVD.

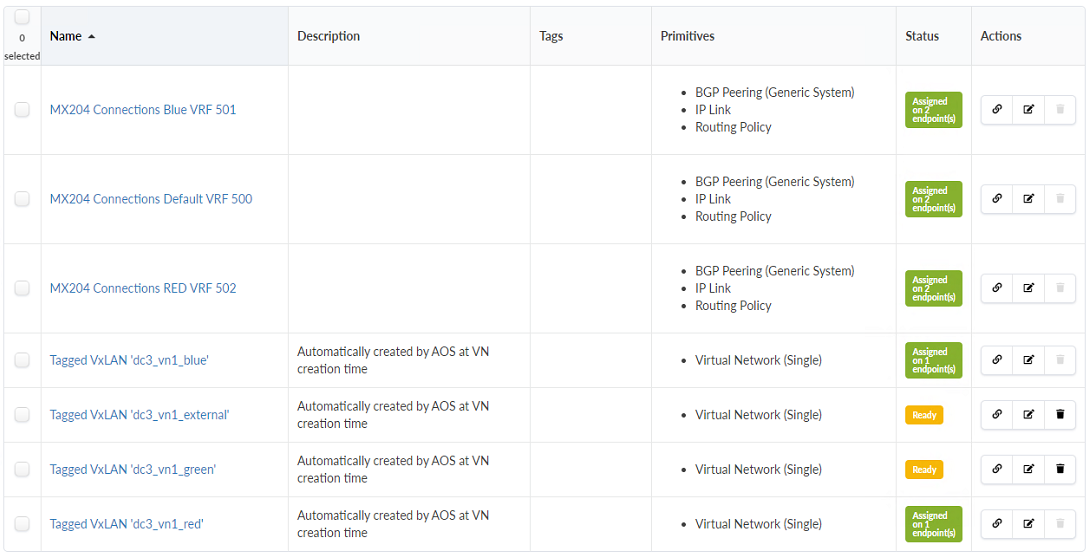

Assign External Router Connectivity Templates

Connectivity Templates must be created to connect Virtual Networks to the newly-created router. We created four routing zones earlier in the document, but we will only be assigning two Connectivity Templates—one each for the Blue and Red Routing Zones—in this procedure. The Connectivity Templates created for the external router will be used along with the Connectivity Templates created for the corresponding Virtual Networks in the next section to connect the two Generic Systems, the ESXi servers, to the topology.

- Navigate to Staged > Connectivity Templates.

- Click the Add Template button in the upper-right corner.

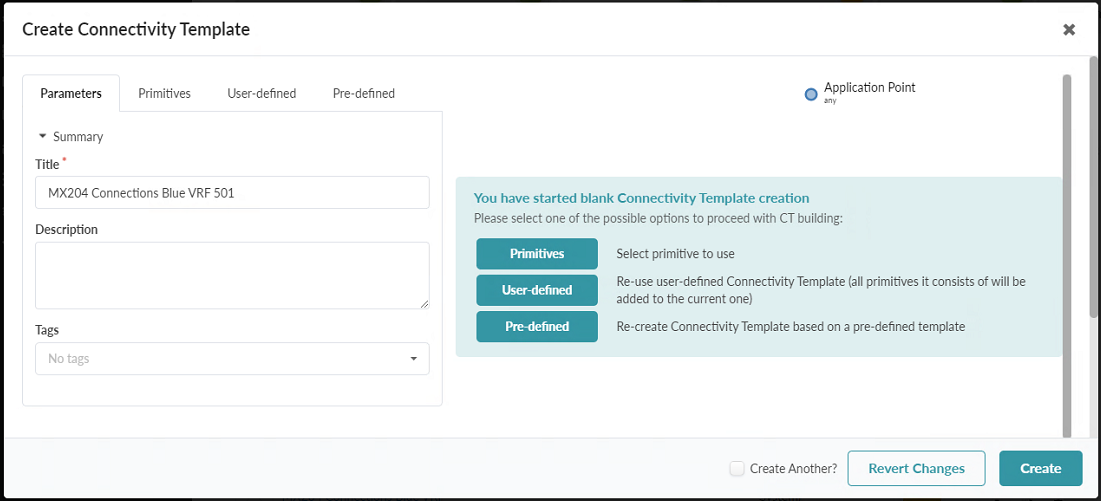

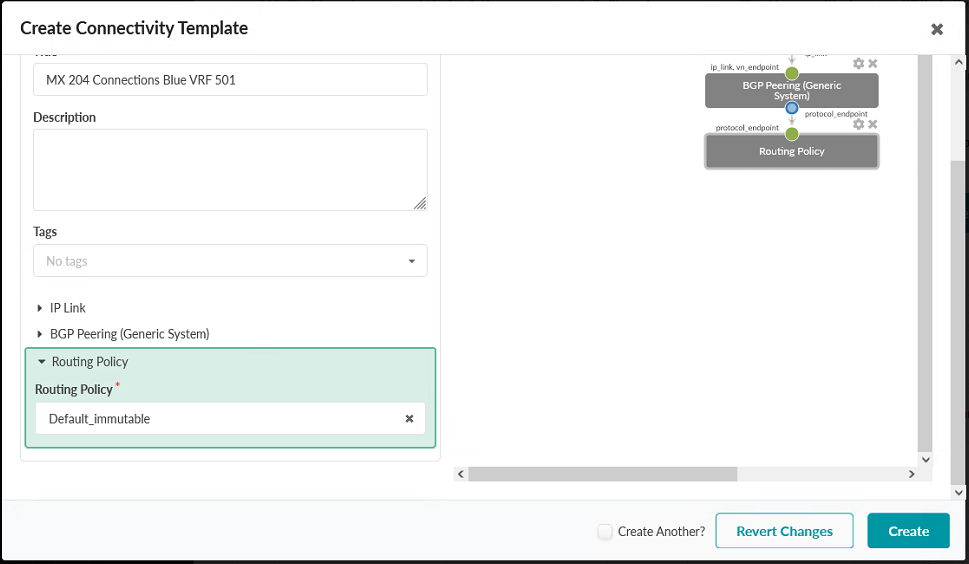

- Name the template MX204 Connections Blue VRF 501.

Figure 91: Create Connectivity Template Pop-up in the JVD_CF_Access_DC3 Blueprint

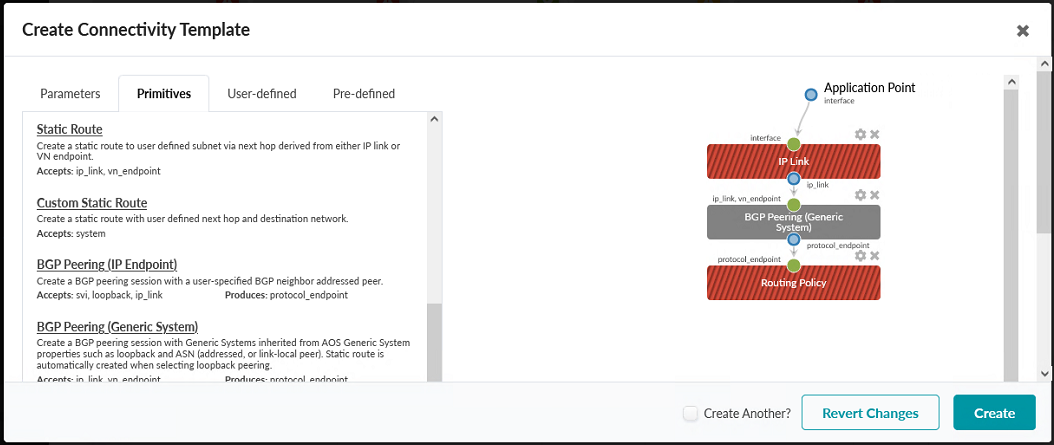

- Click on the Primitives tab. Select the primitives IP Link, BGP Peering (Generic System), and Routing Policy. When you click the Primitives button, the primitives you need to select will appear as text links in the box on the left of the popup, under the Primitives tab. You will have to scroll through that section of the popup to find all three primitives to select. When you are done selecting all three primitives, the result should look like the image below.

Figure 92: Primitives Tab in the Create Connectivity Template Pop-up in the JVD_CF_Access_DC3 Blueprint

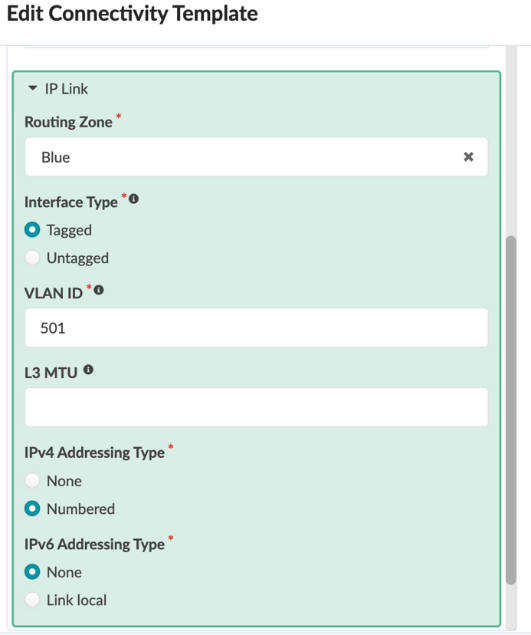

- Click the Parameters tab on the left of the popup box and expand the IP Link section by clicking on the text labelled IP Link.

- Choose the routing zone Blue.

- Set the interface type to Tagged and enter a VLAN ID of 501.

- Set the IPv4 Addressing Type to Numbered and the IPv6 Addressing Type to None.

Figure 93: Expanded IP Link Section of the Parameters Tab

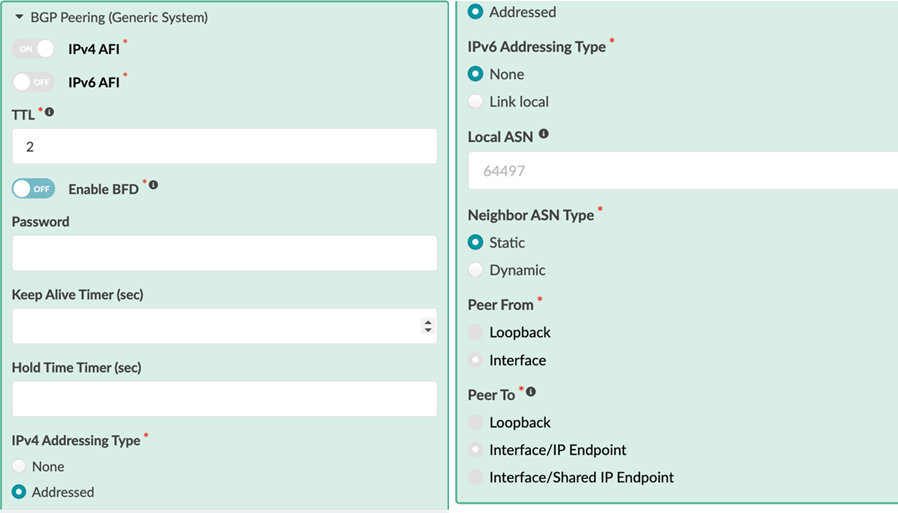

- Expand BGP Peering (Generic System) by clicking on the text labelled BGP Peering (Generic System).

- Set the IPv4 AFI to ON and the IPv6 AFI to OFF.

- Configure a TTL of 2. Do not enable BFD.

- Set the IPv4 Addressing Type to Addressed and leave the IPv6 Addressing Type as None.

- Leave the Local ASN Type set to unconfigured.

- Set the Neighbor ASN Type to Static.

- Set the Peer From option to Interface.

- Set the Peer To option to Interface/IP Endpoint.

Figure 94: Expanded IP Link Section of the Parameters Tab

- Expand and configure the Routing Policy section. Set the Routing Policy to Default_immutable.

- Click Create. Figure 95: Expanded Routing Policy Section in the Parameters Tab

- Repeat the above steps to create a connectivity template for MX204 Connections Red VRF 502. This connectivity template will connect to the Red routing zone and use 502 as its VLAN ID.

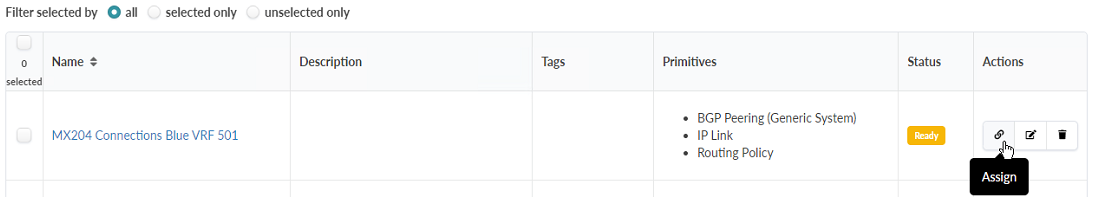

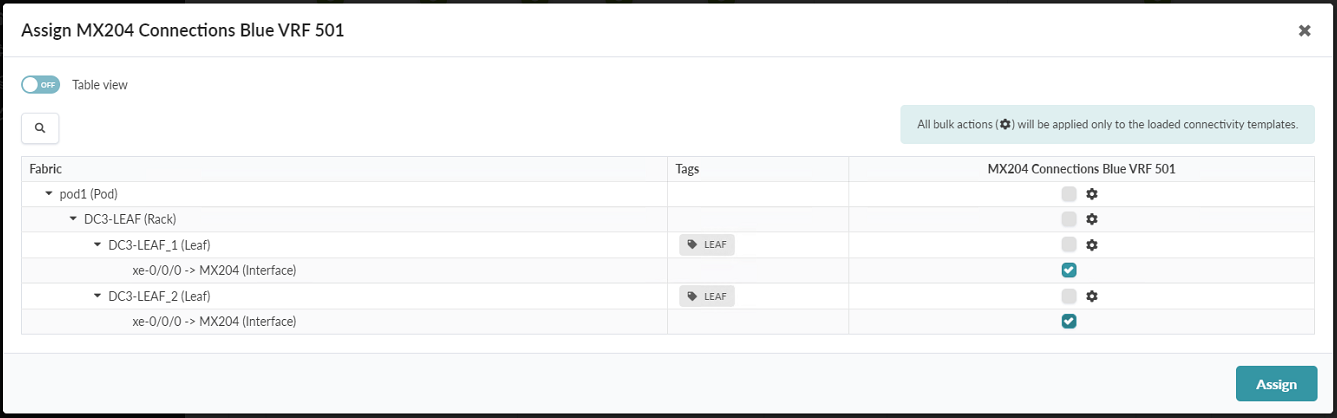

- The connectivity templates that were created need to be assigned to the DC3-Leaf-1 and DC3-Leaf-2 interfaces, which are connected to the external router (MX204). Select the Connectivity Templates in the table and click the Assign icon under the Actions column of the Connectivity Templates table.

Figure 96: CF-to-MX_Blue Connectivity Template Listing, with the Assign Button Highlighted

- Click the checkboxes to assign the connectivity template to the interfaces connected to the external router. Click Assign.

Figure 97: Assign-CF-to-MX_Blue Pop-up

- Repeat the process for the MX204 Connections Red VRF 502 Connectivity Template.
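The two external router connectivity templates share every setting except their name, routing zone, and VLAN ID. The Python sketch below records the values chosen in the steps above in a small data class and derives the Red template from the Blue one, which makes that relationship explicit. The class name `ExternalRouterTemplate` and its field names are hypothetical and chosen for readability; they are not Apstra object or API names.

```python
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class ExternalRouterTemplate:
    """Hypothetical record of the connectivity template settings used above."""
    name: str
    routing_zone: str
    vlan_id: int
    interface_type: str = "Tagged"
    ipv4_addressing: str = "Numbered"
    ipv6_addressing: str = "None"
    bgp_ipv4_afi: bool = True
    bgp_ipv6_afi: bool = False
    bgp_ttl: int = 2
    bfd: bool = False
    neighbor_asn_type: str = "Static"
    peer_from: str = "Interface"
    peer_to: str = "Interface/IP Endpoint"
    routing_policy: str = "Default_immutable"


blue = ExternalRouterTemplate("MX204 Connections Blue VRF 501", "Blue", 501)

# The Red template repeats the Blue settings with three values changed.
red = replace(blue, name="MX204 Connections Red VRF 502",
              routing_zone="Red", vlan_id=502)


def differing_fields(a, b):
    """Return the sorted names of fields whose values differ between a and b."""
    a_values, b_values = asdict(a), asdict(b)
    return sorted(key for key in a_values if a_values[key] != b_values[key])


print(differing_fields(blue, red))  # ['name', 'routing_zone', 'vlan_id']
```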

Assign Virtual Network Connectivity Templates

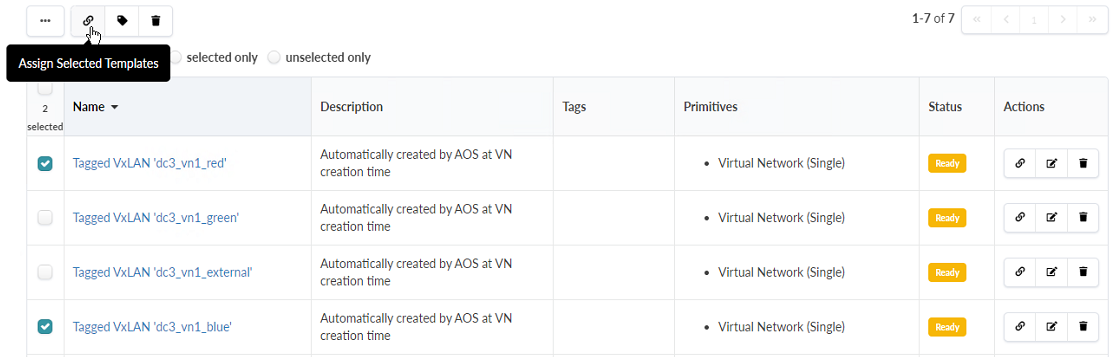

When the virtual networks were created earlier in this document, basic Connectivity Templates would have been created for each virtual network. These Connectivity Templates need to be assigned to appropriate interfaces. We will be assigning the two Connectivity Templates created for the dc3_vn1_blue and dc3_vn1_red Virtual Networks, which are connected respectively to the Blue and Red Routing Zones. These Virtual Networks will be connected to the two Generic Systems (the ESXi servers) created earlier in the document, providing the systems access to the router.

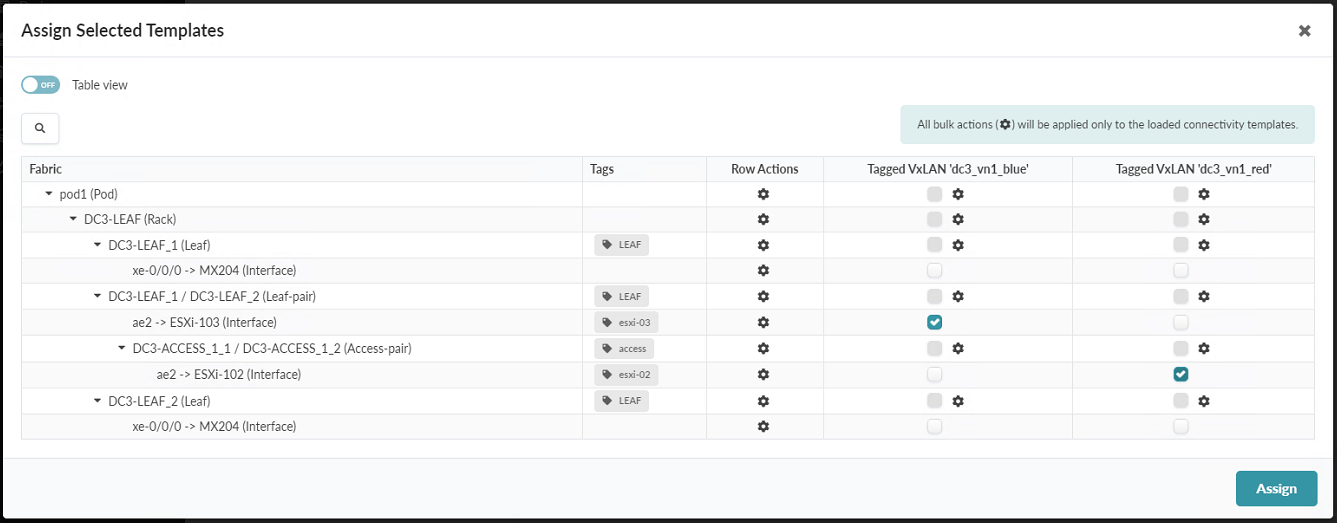

- While still within the JVD_CF_Access_DC3 blueprint, navigate to Staged > Connectivity Templates. Click the check box next to Tagged VxLAN ‘dc3_vn1_blue’ and Tagged VxLAN ‘dc3_vn1_red’. Click the Assign icon (it looks like two links in a chain), which appears when you make that selection.

Figure 98: The Assign icon

Figure 99: Tagged VXLAN Connectivity Templates and the Control Panel to Assign Them

- Assign the Tagged VxLAN ‘dc3_vn1_blue’ to the Esxi-03 server and the Tagged VxLAN ‘dc3_vn1_red’ to the Esxi-02 server. When you are finished, click Assign.

Figure 100: Assign Connectivity Template Pop-up Showing the Tagged VxLAN assignment options

- The result is a Connectivity Templates table that looks like the Figure below.

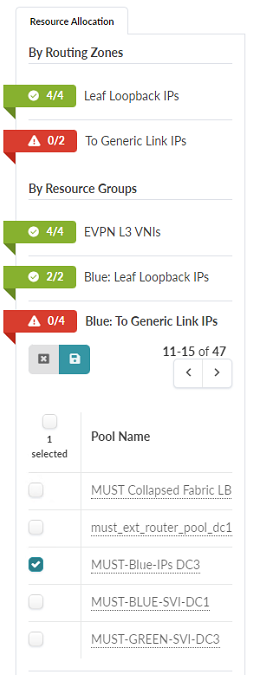

Assign IP Address Pools to Connected Routing Zones

The final step is to assign IP addresses to the interfaces on DC3-Leaf-1 and DC3-Leaf-2 that connect to the external router for the Blue and Red VRFs.

To assign the IP address pools:

- Navigate to Staged > Routing Zones inside the blueprint. You will see that there are new options available under Resource Allocation.

- Click on the icon next to Blue: To Generic Link IPs in the Resource Allocation panel and assign the MUST-Blue-IPs-DC3 IP Pool that was created earlier in the document. To complete this step, select the checkmark next to the appropriate IP Pool and click the Save button.

Figure 102: Blue: To Generic Link IPs Section of the Routing Zones Panel is Shown Expanded

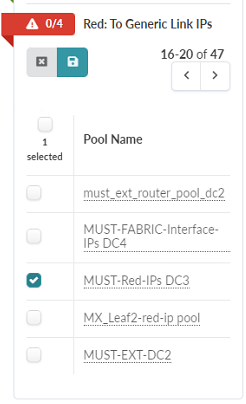

- Repeat the above, assigning the MUST-Red-IPs DC3 IP Pool to the Red: To Generic Link IPs section.

Figure 103: Red: To Generic Link IPs Section of the Routing Zones Panel is Shown Expanded

- When you are finished assigning the IP address pool, all of the red icons should turn green. You are now ready to deploy.

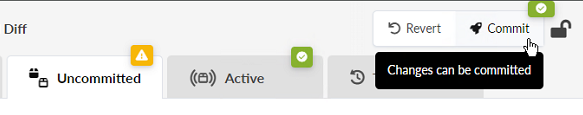

Commit Changes

If you have followed this document to this point, you should now be ready to commit the changes you have made to the switches and bring your network online.

- Navigate to the Uncommitted tab inside the blueprint. Click the Commit button in the upper right.

Figure 105: The Commit button in the Uncommitted tab

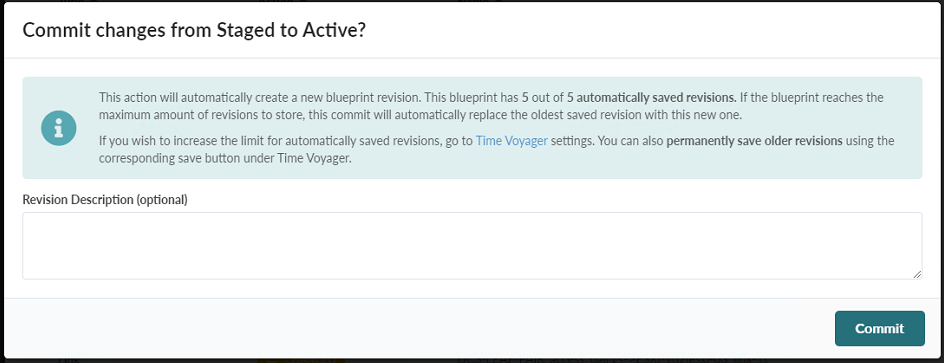

- When you click Commit, a verification pop-up appears. Enter a description of your changes that is meaningful to you. Click Commit.

Verify Connectivity from the Switch Command Line

Now that you have committed your changes to the switches, you should verify connectivity from the Junos operating system command line on your switches.
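If you capture the `show lacp interfaces` output from each switch as text, a short script can flag any LAG member that has not reached the Collecting distributing mux state. The Python sketch below is a minimal example that assumes output shaped like the Leaf-1 sample in this section; the exact column layout can differ between Junos releases, and the function name `lacp_members_not_bundled` is hypothetical.

```python
# Sample copied from the DC3-LEAF-1 ae2 output in this section.
SAMPLE = """\
Aggregated interface: ae2
LACP state: Role Exp Def Dist Col Syn Aggr Timeout Activity
xe-0/0/13 Actor No No Yes Yes Yes Yes Fast Active
xe-0/0/13 Partner No No Yes Yes Yes Yes Fast Active
LACP protocol: Receive State Transmit State Mux State
xe-0/0/13 Current Fast periodic Collecting distributing
"""


def lacp_members_not_bundled(output):
    """Return member interfaces whose mux state is not 'Collecting distributing'."""
    not_bundled = []
    in_protocol_section = False
    for raw_line in output.splitlines():
        line = " ".join(raw_line.split())  # collapse runs of whitespace
        if line.startswith("LACP protocol:"):
            in_protocol_section = True
            continue
        if line.startswith(("Aggregated interface:", "LACP state:")):
            in_protocol_section = False
            continue
        if in_protocol_section and line:
            member = line.split()[0]
            if not line.endswith("Collecting distributing"):
                not_bundled.append(member)
    return not_bundled


print(lacp_members_not_bundled(SAMPLE))  # []
```

An empty list means every member listed under LACP protocol is collecting and distributing, which matches the outputs shown for the validated design.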

- Log in to each switch and run the following commands:

Output from Leaf-1:

root@DC3-LEAF-1> show lacp interfaces

Aggregated interface: ae1

LACP state: Role Exp Def Dist Col Syn Aggr Timeout Activity

et-0/0/48 Actor No No Yes Yes Yes Yes Fast Active

et-0/0/48 Partner No No Yes Yes Yes Yes Fast Active

et-0/0/49 Actor No No Yes Yes Yes Yes Fast Active

et-0/0/49 Partner No No Yes Yes Yes Yes Fast Active

LACP protocol: Receive State Transmit State Mux State

et-0/0/48 Current Fast periodic Collecting distributing

et-0/0/49 Current Fast periodic Collecting distributing

Aggregated interface: ae2

LACP state: Role Exp Def Dist Col Syn Aggr Timeout Activity

xe-0/0/13 Actor No No Yes Yes Yes Yes Fast Active

xe-0/0/13 Partner No No Yes Yes Yes Yes Fast Active

LACP protocol: Receive State Transmit State Mux State

xe-0/0/13 Current Fast periodic Collecting distributing

root@DC3-LEAF-1> show vlans

Routing instance VLAN name Tag Interfaces

default-switch default 1

evpn-1 vn3201 3201

ae1.0*

ae2.0*

esi.1936*

esi.1937*

vtep-14.32770*

evpn-1 vn3301 3301

ae1.0*

esi.1936*

vtep-14.32770*

evpn-1 vn3401 3401

ae1.0*

esi.1936*

vtep-14.32770*

evpn-1 vn3501 3501

ae1.0*

esi.1936*

vtep-14.32770*

{master:0}

root@DC3-LEAF-1> show arp vpn Blue

MAC Address Address Name Interface Flags

cc:e1:94:6b:a8:71 10.0.132.1 10.0.132.1 xe-0/0/0.501 none

{master:0}

root@DC3-LEAF-1> show arp vpn Red

MAC Address Address Name Interface Flags

cc:e1:94:6b:a8:71 10.0.135.1 10.0.135.1 xe-0/0/0.502 none

{master:0}

root@DC3-LEAF-1> show ethernet-switching table

MAC flags (S - static MAC, D - dynamic MAC, L - locally learned, P - Persistent static

SE - statistics enabled, NM - non configured MAC, R - remote PE MAC, O - ovsdb MAC,

B - Blocked MAC)

Ethernet switching table : 0 entries, 0 learned

Routing instance : evpn-1

{master:0}

root@DC3-LEAF-1> show evpn database

Instance: evpn-1

VLAN DomainId MAC address Active source Timestamp IP address

32001 00:1c:73:00:00:01 irb.3201 May 14 15:28:42 10.32.0.1

33001 00:1c:73:00:00:01 irb.3301 May 14 15:28:42 10.33.0.1

34001 00:1c:73:00:00:01 irb.3401 May 14 15:28:42 10.34.0.1

35001 00:1c:73:00:00:01 irb.3501 May 14 15:28:42 10.35.0.1

{master:0}

root@DC3-LEAF-1> show interfaces terse | match in

Interface Admin Link Proto Local Remote

pfe-0/0/0.16383 up up inet

inet6

pfh-0/0/0.16383 up up inet

pfh-0/0/0.16384 up up inet

xe-0/0/0.500 up up inet 10.202.1.1/31

xe-0/0/0.501 up up inet 10.0.132.0/31

xe-0/0/0.502 up up inet 10.0.135.0/31

xe-0/0/3.0 up down inet

xe-0/0/4.0 up up inet

xe-0/0/5.0 up up inet

xe-0/0/6.0 up up inet

xe-0/0/7.0 up up inet

xe-0/0/8.0 up up inet

xe-0/0/9.0 up up inet

xe-0/0/11.0 up up inet

xe-0/0/12.0 up up inet

xe-0/0/22.0 up down inet

xe-0/0/23.0 up down inet

et-0/0/50.0 up up inet

et-0/0/54.0 up up inet 192.168.13.0/31

et-0/0/55.0 up up inet 192.168.13.2/31

bme0.0 up up inet 128.0.0.1/2

em0.0 up up inet 10.6.1.41/26

em2.32768 up up inet 192.168.1.2/24

irb.0 up down inet

irb.3201 up up inet 10.32.0.1/24

irb.3301 up up inet 10.33.0.1/24

irb.3401 up up inet 10.34.0.1/24

irb.3501 up up inet 10.35.0.1/24

jsrv.1 up up inet 128.0.0.127/2

lo0.0 up up inet 192.168.253.0 --> 0/0

lo0.2 up up inet 192.168.253.13 --> 0/0

lo0.3 up up inet 192.168.253.17 --> 0/0

lo0.4 up up inet 192.168.253.19 --> 0/0

lo0.5 up up inet 192.168.253.15 --> 0/0

lo0.16384 up up inet 127.0.0.1 --> 0/0

lo0.16385 up up inet

{master:0}

Output from Leaf-2:

root@dc3-leaf-2> show lacp interfaces

Aggregated interface: ae1

LACP state: Role Exp Def Dist Col Syn Aggr Timeout Activity

et-0/0/48 Actor No No Yes Yes Yes Yes Fast Active

et-0/0/48 Partner No No Yes Yes Yes Yes Fast Active

et-0/0/49 Actor No No Yes Yes Yes Yes Fast Active

et-0/0/49 Partner No No Yes Yes Yes Yes Fast Active

LACP protocol: Receive State Transmit State Mux State

et-0/0/48 Current Fast periodic Collecting distributing

et-0/0/49 Current Fast periodic Collecting distributing

Aggregated interface: ae2

LACP state: Role Exp Def Dist Col Syn Aggr Timeout Activity

xe-0/0/13 Actor No No Yes Yes Yes Yes Fast Active

xe-0/0/13 Partner No No Yes Yes Yes Yes Fast Active

LACP protocol: Receive State Transmit State Mux State

xe-0/0/13 Current Fast periodic Collecting distributing

{master:0}

root@dc3-leaf-2> show vlans

Routing instance VLAN name Tag Interfaces

default-switch default 1

evpn-1 vn3201 3201

ae1.0*

ae2.0*

esi.1936*

esi.1937*

vtep-14.32770*

evpn-1 vn3301 3301

ae1.0*

esi.1936*

vtep-14.32770*

evpn-1 vn3401 3401

ae1.0*

esi.1936*

vtep-14.32770*

evpn-1 vn3501 3501

ae1.0*

esi.1936*

vtep-14.32770*

root@dc3-leaf-2> show arp vpn Blue

MAC Address Address Name Interface Flags

cc:e1:94:6b:a8:72 10.0.132.3 10.0.132.3 xe-0/0/0.501 none

{master:0}

root@dc3-leaf-2> show arp vpn Red

MAC Address Address Name Interface Flags

cc:e1:94:6b:a8:72 10.0.135.3 10.0.135.3 xe-0/0/0.502 none

{master:0}

root@dc3-leaf-2> show ethernet-switching table

MAC flags (S - static MAC, D - dynamic MAC, L - locally learned, P - Persistent static

SE - statistics enabled, NM - non configured MAC, R - remote PE MAC, O - ovsdb MAC,

B - Blocked MAC)

Ethernet switching table : 0 entries, 0 learned

Routing instance : evpn-1

{master:0}

root@dc3-leaf-2> show evpn database

Instance: evpn-1

VLAN DomainId MAC address Active source Timestamp IP address

32001 00:1c:73:00:00:01 irb.3201 May 14 15:29:08 10.32.0.1

33001 00:1c:73:00:00:01 irb.3301 May 14 15:29:08 10.33.0.1

34001 00:1c:73:00:00:01 irb.3401 May 14 15:29:08 10.34.0.1

35001 00:1c:73:00:00:01 irb.3501 May 14 15:29:08 10.35.0.1

{master:0}

root@dc3-leaf-2>

root@dc3-leaf-2> show interfaces terse | match in

Interface Admin Link Proto Local Remote

pfe-0/0/0.16383 up up inet

inet6

pfh-0/0/0.16383 up up inet

pfh-0/0/0.16384 up up inet

xe-0/0/0.500 up up inet 10.202.1.3/31

xe-0/0/0.501 up up inet 10.0.132.2/31

xe-0/0/0.502 up up inet 10.0.135.2/31

xe-0/0/1.0 up down inet

xe-0/0/2.0 up down inet

xe-0/0/3.0 up down inet

xe-0/0/4.0 up up inet

xe-0/0/5.0 up up inet

xe-0/0/6.0 up down inet

xe-0/0/7.0 up up inet

xe-0/0/9.0 up up inet

xe-0/0/11.0 up up inet

xe-0/0/12.0 up up inet

et-0/0/50.0 up up inet

et-0/0/54.0 up up inet 192.168.13.1/31

et-0/0/55.0 up up inet 192.168.13.3/31

bme0.0 up up inet 128.0.0.1/2

em0.0 up up inet 10.6.1.49/26

em2.32768 up up inet 192.168.1.2/24

irb.0 up down inet

irb.3201 up up inet 10.32.0.1/24

irb.3301 up up inet 10.33.0.1/24

irb.3401 up up inet 10.34.0.1/24

irb.3501 up up inet 10.35.0.1/24

jsrv.1 up up inet 128.0.0.127/2

lo0.0 up up inet 192.168.253.1 --> 0/0

lo0.2 up up inet 192.168.253.14 --> 0/0

lo0.3 up up inet 192.168.253.18 --> 0/0

lo0.4 up up inet 192.168.253.20 --> 0/0

lo0.5 up up inet 192.168.253.16 --> 0/0

lo0.16384 up up inet 127.0.0.1 --> 0/0

lo0.16385 up up inet

{master:0}

Output from Access-1:

root@dc3-access-1-1> show lacp interfaces

Aggregated interface: ae1

LACP state: Role Exp Def Dist Col Syn Aggr Timeout Activity

et-0/1/0 Actor No No Yes Yes Yes Yes Fast Active

et-0/1/0 Partner No No Yes Yes Yes Yes Fast Active

et-0/1/1 Actor No No Yes Yes Yes Yes Fast Active

et-0/1/1 Partner No No Yes Yes Yes Yes Fast Active

LACP protocol: Receive State Transmit State Mux State

et-0/1/0 Current Fast periodic Collecting distributing

et-0/1/1 Current Fast periodic Collecting distributing

Aggregated interface: ae3

LACP state: Role Exp Def Dist Col Syn Aggr Timeout Activity

xe-0/2/2 Actor No No Yes Yes Yes Yes Fast Active

xe-0/2/2 Partner No No Yes Yes Yes Yes Fast Active

xe-0/2/3 Actor No No Yes Yes Yes Yes Fast Active

xe-0/2/3 Partner No No Yes Yes Yes Yes Fast Active

LACP protocol: Receive State Transmit State Mux State

xe-0/2/2 Current Fast periodic Collecting distributing

xe-0/2/3 Current Fast periodic Collecting distributing

Aggregated interface: ae2

LACP state: Role Exp Def Dist Col Syn Aggr Timeout Activity

xe-0/2/0 Actor No No Yes Yes Yes Yes Fast Active

xe-0/2/0 Partner No No Yes Yes Yes Yes Fast Active

LACP protocol: Receive State Transmit State Mux State

xe-0/2/0 Current Fast periodic Collecting distributing

{master:0}

root@dc3-access-1-1> show vlans

Routing instance VLAN name Tag Interfaces

default-switch default 1

evpn-1 vn3201 3201

ae1.0*

esi.1764*

vtep-8.32770*

evpn-1 vn3301 3301

ae1.0*

esi.1764*

vtep-8.32770*

evpn-1 vn3401 3401

ae1.0*

esi.1764*

vtep-8.32770*

evpn-1 vn3501 3501

ae1.0*

ae2.0*

esi.1764*

esi.1765*

vtep-8.32770*

{master:0}

Because the access switches operate as L2 VxLAN devices while the collapsed spine switches operate as L3 VxLAN devices, the access switches do not see nodes within the virtual networks themselves. As the ping test later in this section shows, however, the two hosts can reach one another despite being on different virtual networks, with one host connected to the access switches and the other to the collapsed spines.

root@dc3-access-1-1> show arp vpn Blue

error: Named Route table not found.

{master:0}

root@dc3-access-1-1> show arp vpn Red

error: Named Route table not found.

{master:0}

root@dc3-access-1-1> show ethernet-switching table

MAC flags (S - static MAC, D - dynamic MAC, L - locally learned, P - Persistent static

SE - statistics enabled, NM - non configured MAC, R - remote PE MAC, O - ovsdb MAC,

B - Blocked MAC)

Ethernet switching table : 0 entries, 0 learned

Routing instance : evpn-1

{master:0}

root@dc3-access-1-1> show evpn database

{master:0}

root@dc3-access-1-1> show interfaces terse | match in

Interface Admin Link Proto Local Remote

mge-0/0/0.0 up down inet

pfe-0/0/0.16383 up up inet

inet6

pfh-0/0/0.16383 up up inet

pfh-0/0/0.16384 up up inet

mge-0/0/1.0 up down inet

mge-0/0/2.0 up down inet

mge-0/0/3.0 up down inet

mge-0/0/4.0 up down inet

mge-0/0/5.0 up down inet

mge-0/0/6.0 up down inet

mge-0/0/7.0 up down inet

mge-0/0/8.0 up down inet

mge-0/0/9.0 up down inet

mge-0/0/10.0 up down inet

mge-0/0/11.0 up down inet

mge-0/0/12.0 up down inet

mge-0/0/13.0 up down inet

mge-0/0/14.0 up down inet

mge-0/0/15.0 up down inet

mge-0/0/16.0 up down inet

mge-0/0/17.0 up down inet

mge-0/0/18.0 up down inet

mge-0/0/19.0 up down inet

mge-0/0/20.0 up down inet

mge-0/0/21.0 up down inet

mge-0/0/22.0 up down inet

mge-0/0/23.0 up down inet

mge-0/0/24.0 up down inet

mge-0/0/25.0 up down inet

mge-0/0/26.0 up down inet

mge-0/0/27.0 up down inet

mge-0/0/28.0 up down inet

mge-0/0/29.0 up down inet

mge-0/0/30.0 up down inet

mge-0/0/31.0 up down inet

mge-0/0/32.0 up down inet

mge-0/0/33.0 up down inet

mge-0/0/34.0 up down inet

mge-0/0/35.0 up down inet

mge-0/0/36.0 up down inet

mge-0/0/37.0 up up inet

mge-0/0/38.0 up up inet

mge-0/0/39.0 up down inet

mge-0/0/40.0 up down inet

mge-0/0/41.0 up down inet

mge-0/0/42.0 up down inet

mge-0/0/43.0 up down inet

mge-0/0/44.0 up down inet

mge-0/0/45.0 up down inet

mge-0/0/46.0 up up inet

mge-0/0/47.0 up down inet

xe-0/2/1.0 up up inet

ae3.0 up up inet 10.0.3.1/31

bme0.0 up up inet 128.0.0.1/2

jsrv.1 up up inet 128.0.0.127/2

lo0.0 up up inet 192.168.253.2 --> 0/0

lo0.16384 up up inet 127.0.0.1 --> 0/0

lo0.16385 up up inet

me0.0 up up inet 10.92.70.60/23

{master:0}

Output from Access-2:

root@dc3-access-1-2> show lacp interfaces

Aggregated interface: ae1

LACP state: Role Exp Def Dist Col Syn Aggr Timeout Activity

et-0/1/0 Actor No No Yes Yes Yes Yes Fast Active

et-0/1/0 Partner No No Yes Yes Yes Yes Fast Active

et-0/1/1 Actor No No Yes Yes Yes Yes Fast Active

et-0/1/1 Partner No No Yes Yes Yes Yes Fast Active

LACP protocol: Receive State Transmit State Mux State

et-0/1/0 Current Fast periodic Collecting distributing

et-0/1/1 Current Fast periodic Collecting distributing

Aggregated interface: ae3

LACP state: Role Exp Def Dist Col Syn Aggr Timeout Activity

xe-0/2/2 Actor No No Yes Yes Yes Yes Fast Active

xe-0/2/2 Partner No No Yes Yes Yes Yes Fast Active

xe-0/2/3 Actor No No Yes Yes Yes Yes Fast Active

xe-0/2/3 Partner No No Yes Yes Yes Yes Fast Active

LACP protocol: Receive State Transmit State Mux State

xe-0/2/2 Current Fast periodic Collecting distributing

xe-0/2/3 Current Fast periodic Collecting distributing

Aggregated interface: ae2

LACP state: Role Exp Def Dist Col Syn Aggr Timeout Activity

xe-0/2/0 Actor No No Yes Yes Yes Yes Fast Active

xe-0/2/0 Partner No No Yes Yes Yes Yes Fast Active

LACP protocol: Receive State Transmit State Mux State

xe-0/2/0 Current Fast periodic Collecting distributing

{master:0}

root@dc3-access-1-2> show vlans

Routing instance VLAN name Tag Interfaces

default-switch default 1

evpn-1 vn3201 3201

ae1.0*

esi.1764*

vtep-8.32770*

evpn-1 vn3301 3301

ae1.0*

esi.1764*

vtep-8.32770*

evpn-1 vn3401 3401

ae1.0*

esi.1764*

vtep-8.32770*

evpn-1 vn3501 3501

ae1.0*

ae2.0*

esi.1764*

esi.1765*

vtep-8.32770*

{master:0}

root@dc3-access-1-2> show arp vpn Blue

error: Named Route table not found.

{master:0}

root@dc3-access-1-2> show arp vpn Red

error: Named Route table not found.

{master:0}

root@dc3-access-1-2> show ethernet-switching table

MAC flags (S - static MAC, D - dynamic MAC, L - locally learned, P - Persistent static

SE - statistics enabled, NM - non configured MAC, R - remote PE MAC, O - ovsdb MAC,

B - Blocked MAC)

Ethernet switching table : 0 entries, 0 learned

Routing instance : evpn-1

{master:0}

root@dc3-access-1-2> show evpn database

{master:0}

root@dc3-access-1-2> show interfaces terse | match in

Interface Admin Link Proto Local Remote

mge-0/0/0.0 up down inet

pfe-0/0/0.16383 up up inet

inet6

pfh-0/0/0.16383 up up inet

pfh-0/0/0.16384 up up inet

mge-0/0/1.0 up down inet

mge-0/0/2.0 up down inet

mge-0/0/3.0 up down inet

mge-0/0/4.0 up down inet

mge-0/0/5.0 up down inet

mge-0/0/6.0 up down inet

mge-0/0/7.0 up down inet

mge-0/0/8.0 up down inet

mge-0/0/9.0 up down inet

mge-0/0/10.0 up down inet

mge-0/0/11.0 up down inet

mge-0/0/12.0 up down inet

mge-0/0/13.0 up down inet

mge-0/0/14.0 up down inet

mge-0/0/15.0 up down inet

mge-0/0/16.0 up down inet

mge-0/0/17.0 up down inet

mge-0/0/18.0 up down inet

mge-0/0/19.0 up down inet

mge-0/0/20.0 up down inet

mge-0/0/21.0 up down inet

mge-0/0/22.0 up down inet

mge-0/0/23.0 up down inet

mge-0/0/24.0 up down inet

mge-0/0/25.0 up down inet

mge-0/0/26.0 up down inet

mge-0/0/27.0 up down inet

mge-0/0/28.0 up down inet

mge-0/0/29.0 up down inet

mge-0/0/30.0 up down inet

mge-0/0/31.0 up down inet

mge-0/0/32.0 up down inet

mge-0/0/33.0 up down inet

mge-0/0/34.0 up down inet

mge-0/0/35.0 up down inet

mge-0/0/36.0 up down inet

mge-0/0/37.0 up up inet

mge-0/0/38.0 up down inet

mge-0/0/39.0 up down inet

mge-0/0/40.0 up down inet

mge-0/0/41.0 up down inet

mge-0/0/42.0 up down inet

mge-0/0/43.0 up down inet

mge-0/0/44.0 up down inet

mge-0/0/45.0 up down inet

mge-0/0/46.0 up up inet

mge-0/0/47.0 up up inet

xe-0/2/1.0 up up inet

ae3.0 up up inet 10.0.3.0/31

bme0.0 up up inet 128.0.0.1/2

jsrv.1 up up inet 128.0.0.127/2

lo0.0 up up inet 192.168.253.3 --> 0/0

lo0.16384 up up inet 127.0.0.1 --> 0/0

lo0.16385 up up inet

me0.0 up up inet 10.92.76.123/23

{master:0}

root@dc3-access-1-2>

Ping from Host-2 (Red VRF) 10.35.0.102 to Host-1 (Blue VRF) 10.32.0.103:

must@redubuntutest:~$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host noprefixroute

valid_lft forever preferred_lft forever

2: ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:8e:f8:ed brd ff:ff:ff:ff:ff:ff

altname enp11s0

inet 10.35.0.102/24 brd 10.35.0.255 scope global ens192

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:fe8e:f8ed/64 scope link

valid_lft forever preferred_lft forever

must@redubuntutest:~$ ping 10.32.0.103

PING 10.32.0.103 (10.32.0.103) 56(84) bytes of data.

64 bytes from 10.32.0.103: icmp_seq=1 ttl=61 time=1.16 ms

64 bytes from 10.32.0.103: icmp_seq=2 ttl=61 time=1.14 ms

64 bytes from 10.32.0.103: icmp_seq=3 ttl=61 time=1.11 ms

64 bytes from 10.32.0.103: icmp_seq=4 ttl=61 time=1.33 ms

^C

--- 10.32.0.103 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3004ms

rtt min/avg/max/mdev = 1.106/1.181/1.325/0.084 ms

must@redubuntutest:~$

The MX204 interface configuration, from the Junos OS CLI, for the links towards the Leaf-1 and Leaf-2 switches:

set interfaces xe-0/0/3:2 description to.DC3-Leaf-1

set interfaces xe-0/0/3:2 vlan-tagging

set interfaces xe-0/0/3:2 unit 500 vlan-id 500

set interfaces xe-0/0/3:2 unit 500 family inet address 10.202.1.0/31

set interfaces xe-0/0/3:2 unit 501 description to.blue-DC3-Leaf-1-Cf

set interfaces xe-0/0/3:2 unit 501 vlan-id 501

set interfaces xe-0/0/3:2 unit 501 family inet address 10.0.132.1/31

set interfaces xe-0/0/3:2 unit 502 description to.red-DC3-Leaf-1-Cf

set interfaces xe-0/0/3:2 unit 502 vlan-id 502

set interfaces xe-0/0/3:2 unit 502 family inet address 10.0.135.1/31

set interfaces xe-0/0/3:3 description to.DC3-Leaf-2

set interfaces xe-0/0/3:3 vlan-tagging

set interfaces xe-0/0/3:3 unit 500 description to.DC3-Leaf-2-CF

set interfaces xe-0/0/3:3 unit 500 vlan-id 500

set interfaces xe-0/0/3:3 unit 500 family inet address 10.202.1.2/31

set interfaces xe-0/0/3:3 unit 501 description to.blue-DC3-Leaf-2-Cf

set interfaces xe-0/0/3:3 unit 501 vlan-id 501

set interfaces xe-0/0/3:3 unit 501 family inet address 10.0.132.3/31

set interfaces xe-0/0/3:3 unit 502 description to.red-DC3-Leaf-2-Cf

set interfaces xe-0/0/3:3 unit 502 vlan-id 502

set interfaces xe-0/0/3:3 unit 502 family inet address 10.0.135.3/31
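Each router subinterface above must share a /31 with the matching leaf subinterface shown in the `show interfaces terse` output earlier in this section. The Python sketch below pairs the addresses from both sides and uses the standard `ipaddress` module to confirm that each pair sits in the same /31 and uses different host addresses. The helper name `same_p2p_link` and the tuple layout are illustrative assumptions.

```python
import ipaddress

# (link, leaf address from "show interfaces terse", MX204 address from the
# set commands above)
LINKS = [
    ("DC3-Leaf-1 unit 500", "10.202.1.1/31", "10.202.1.0/31"),
    ("DC3-Leaf-1 unit 501 (Blue)", "10.0.132.0/31", "10.0.132.1/31"),
    ("DC3-Leaf-1 unit 502 (Red)", "10.0.135.0/31", "10.0.135.1/31"),
    ("DC3-Leaf-2 unit 500", "10.202.1.3/31", "10.202.1.2/31"),
    ("DC3-Leaf-2 unit 501 (Blue)", "10.0.132.2/31", "10.0.132.3/31"),
    ("DC3-Leaf-2 unit 502 (Red)", "10.0.135.2/31", "10.0.135.3/31"),
]


def same_p2p_link(addr_a, addr_b):
    """True if both addresses are in the same subnet and are not identical."""
    a = ipaddress.ip_interface(addr_a)
    b = ipaddress.ip_interface(addr_b)
    return a.network == b.network and a.ip != b.ip


for link, leaf_addr, mx_addr in LINKS:
    print(f"{link}: {same_p2p_link(leaf_addr, mx_addr)}")
```

Every line should report True for the addresses used in this walkthrough. A False result would point to a mismatch between the router configuration and the addresses that Apstra assigned from the IP pools.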