Enhanced Optimized Intersubnet Multicast (OISM) Implementation

Configure enhanced optimized intersubnet multicast (OISM) in a scaled EVPN-VXLAN edge-routed bridging (ERB) overlay fabric when the OISM leaf devices host a large number of diverse revenue VLANs.

In EVPN-VXLAN edge-routed bridging (ERB) overlay fabric designs, the leaf devices forward traffic within tenant VLANs and route traffic between tenant VLANs. To support efficient multicast traffic flows in scaled ERB overlay fabrics, we support optimized intersubnet multicast (OISM) with both internal and external multicast sources and receivers.

Regular OISM and Enhanced OISM Differences

We refer to our original OISM implementation as regular OISM. Regular OISM uses a symmetric bridge domains OISM model that requires you to configure all revenue VLANs (the tenant VLANs) in the network on all OISM leaf devices. See Optimized Intersubnet Multicast (OISM) with Assisted Replication (AR) for Edge-Routed Bridging Overlays in this guide for an example of a regular OISM configuration. Due to the symmetric VLANs configuration requirement, we also call regular OISM the "bridge domains everywhere" (BDE) version of OISM.

Enhanced OISM uses an asymmetric bridge domains OISM model in which you don't need to configure all revenue VLANs in the network on all OISM devices. On each leaf device that is not a multihoming peer with another leaf device, you can configure only the revenue VLANs that device hosts. However, the design still requires you to configure matching revenue VLANs on leaf devices that are multihoming peers. Enhanced OISM has some operational differences from regular OISM that support the asymmetric bridge domains model, but most of the configuration elements you need to set up are the same. Because you can configure the VLANs asymmetrically, we also call enhanced OISM the "bridge domains not everywhere" (BDNE) version of OISM.

Multihoming peer leaf devices are leaf devices that share an Ethernet segment (ES) for an attached multihomed client host, customer edge (CE) device, or top-of-rack (TOR) device.
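The rule that multihoming peers need matching revenue VLANs, while other leaf devices can host any subset, can be checked mechanically. The following Python sketch is only an illustration: the function name, the data layout, and the sample VLAN IDs are assumptions and are not part of Junos OS.

```python
from itertools import combinations


def find_peer_vlan_mismatches(vlans_by_leaf, peer_groups):
    """Return (leaf_a, leaf_b, differing_vlans) for multihoming peers whose
    configured revenue VLAN sets differ.

    vlans_by_leaf: dict mapping leaf name -> set of configured revenue VLAN IDs
    peer_groups:   iterable of leaf-name groups that share an Ethernet segment
    """
    mismatches = []
    for group in peer_groups:
        for leaf_a, leaf_b in combinations(sorted(group), 2):
            # Symmetric difference: VLANs configured on only one of the peers.
            diff = vlans_by_leaf[leaf_a] ^ vlans_by_leaf[leaf_b]
            if diff:
                mismatches.append((leaf_a, leaf_b, sorted(diff)))
    return mismatches


# Hypothetical inventory: SL-1 and SL-2 share an Ethernet segment, SL-3 does not.
vlans_by_leaf = {
    "SL-1": {1, 2, 3, 4},
    "SL-2": {1, 2, 3},  # VLAN 4 is missing on the peer, which violates the rule
    "SL-3": {5, 6},     # not a multihoming peer, so any subset is acceptable
}
peer_groups = [("SL-1", "SL-2")]

print(find_peer_vlan_mismatches(vlans_by_leaf, peer_groups))
```

Leaf devices that are not listed in any peer group are never compared, which mirrors the asymmetric model described above.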

The enhanced OISM asymmetric bridge domain model enables OISM to scale well when your network has leaf devices that host a large number of diverse revenue VLANs.

This example shows enhanced OISM configuration elements and verification steps tested in our scaled reference environment. We describe a few use cases here that highlight the main differences between regular OISM mode and enhanced OISM mode.

Starting in Junos OS Release 24.4R1, we have qualified enhanced OISM at scale in environments that also include configurations for the following features:

- BGP unnumbered peering with an IPv6 underlay (also called BGP autodiscovery or BGP auto-peering).

  See BGP Unnumbered IPv6 Underlay in an EVPN-VXLAN Data Center.

- Non-revertive preference-based designated forwarder (DF) election.

We don't include configuration instructions for those features in the enhanced OISM use cases described here.

Enhanced OISM Use Cases Overview

The enhanced OISM use cases included in this example don't include full device configurations. Instead, we focus on the parts of the configuration that differ from the regular OISM example in Optimized Intersubnet Multicast (OISM) with Assisted Replication (AR) for Edge-Routed Bridging Overlays, either because the test environment differs or because enhanced OISM operates differently, such as:

- Using EBGP for the overlay peering in the EVPN core network (instead of IBGP, which the regular OISM example configuration environment uses for the overlay peering).

- Configuring the same set of revenue VLANs only on multihoming peer leaf devices, and configuring different sets of revenue VLANs on the other OISM leaf devices.

- Configuring the VRF instances and IRB interfaces on leaf devices that host different sets of revenue VLANs.

- Enabling IPv6 multicast with MLDv1 any-source multicast (ASM) or MLDv2 source-specific multicast (SSM), which is supported with regular OISM but wasn't included in the regular OISM configuration example.

- Verifying behaviors specific to enhanced OISM operation (see Operational Differences in Enhanced OISM Mode).

Refer to the regular OISM example in Optimized Intersubnet Multicast (OISM) with Assisted Replication (AR) for Edge-Routed Bridging Overlays for complete details on configuring all of the required elements that are common for both regular OISM and enhanced OISM, such as:

- EVPN fabric interfaces

- Underlay and overlay peering (except here we cover EBGP for the overlay peering)

- EVPN MAC-VRF instances

- Multicast protocols: IGMP, IGMP snooping, MLD, MLD snooping, Protocol Independent Multicast (PIM)

- The SBD, the revenue VLANs, and their corresponding IRB interfaces

- The tenant VRF instances

- OISM device roles: server leaf, or border leaf as a PIM EVPN gateway (PEG) for exchanging multicast traffic from and to external sources and external receivers

- Interfaces to and from an external PIM domain

Configuration Differences in Enhanced OISM Mode

You configure enhanced OISM in almost the same way that you configure regular OISM, using the same statements to set up the OISM environment. The only differences are:

- To enable enhanced mode instead of regular mode, configure the enhanced-oism option at the [edit forwarding-options multicast-replication evpn irb] hierarchy level instead of configuring the oism option that enables regular OISM.

- Configure the virtual routing and forwarding (VRF) instances on the OISM leaf devices with the revenue VLANs each device hosts. On each set of multihoming peer leaf devices, however, be sure to configure the same set of revenue VLANs, which should be a combination of all revenue VLANs used by the receivers behind those peer leaf devices.

- Configure an OSPF area for server leaf device connectivity on the SBD. With enhanced OISM, the server leaf devices need Layer 3 connectivity to route source traffic onto the SBD for east-west traffic. As a result, in each tenant VRF, you configure an OSPF area on the server leaf devices with the SBD IRB interface in active mode to form adjacencies on the SBD. You configure the other interfaces in OSPF passive mode.

  Note that on the OISM border leaf devices, for both regular and enhanced OISM, we require you to configure an OSPF area in each tenant VRF with the following interfaces:

  - The SBD IRB interface, in OSPF active mode

  - The PEG interface that provides access to external multicast sources and receivers, in OSPF active mode

  - The remaining interfaces in the VRF, including the revenue VLAN IRB interfaces, in OSPF passive mode
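The OSPF interface modes described in the list above can be summarized as a small lookup. This Python sketch is illustrative only: the function and role names are invented here, irb.2001 is the VRF-1 SBD IRB interface used later in this example, and peg-if.0 is a hypothetical placeholder for the PEG interface name.

```python
def ospf_interface_modes(role, sbd_irb, revenue_irbs, peg_interface=None):
    """Map each interface in a tenant VRF to its OSPF mode with enhanced OISM.

    role is "server-leaf" or "border-leaf" (labels chosen for this sketch).
    """
    if role not in ("server-leaf", "border-leaf"):
        raise ValueError(f"unknown OISM role: {role!r}")

    # The SBD IRB interface is active so adjacencies form on the SBD.
    modes = {sbd_irb: "active"}

    if role == "border-leaf":
        if peg_interface is None:
            raise ValueError("a border leaf needs a PEG interface")
        # The PEG interface faces the external PIM domain and is also active.
        modes[peg_interface] = "active"

    # Everything else in the VRF, including revenue VLAN IRBs, is passive.
    for irb in revenue_irbs:
        modes[irb] = "passive"
    return modes


server = ospf_interface_modes("server-leaf", "irb.2001", ["irb.1", "irb.2"])
border = ospf_interface_modes(
    "border-leaf", "irb.2001", ["irb.7", "irb.8"], peg_interface="peg-if.0"
)
print(server)
print(border)
```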


Operational Differences in Enhanced OISM Mode

The main operational differences in enhanced OISM mode operation compared to regular OISM mode operation are:

- East-west traffic from internal sources: The ingress leaf devices forward east-west multicast source traffic on the source VLAN only to the multihoming peer leaf devices with which they share at least one Ethernet segment. For all other OISM leaf devices, the ingress leaf devices route the source traffic only on the supplemental bridge domain (SBD), even if those other devices host the source VLAN. Then each leaf device locally routes the traffic from the SBD to the destination VLAN.

  Conversely, regular OISM sends multicast traffic from internal sources only on the source VLAN. Then each leaf device locally forwards the traffic on the source VLAN or routes the traffic to the destination VLAN. Only the border leaf devices route traffic on the SBD; the border leaf devices use the SBD to support multicast flows from external sources to receivers inside the EVPN fabric.

  Note: Enhanced OISM, like regular OISM, requires you to enable IGMP snooping or MLD snooping, so the ingress leaf device for a multicast flow sends the traffic only toward other OISM leaf devices with receivers that subscribed to (sent an IGMP or MLD join for) that flow.

- North-south traffic from internal sources toward external receivers: The ingress leaf devices generate EVPN Type 10 Selective P-router Multicast Service Interface (S-PMSI) Auto-Discovery (A-D) routes for internal multicast sources and groups (S,G).

  The OISM border leaf devices act as PIM EVPN gateway (PEG) devices to connect to external multicast sources and receivers. The PEG devices need to perform PIM source registration only for multicast sources inside the EVPN network, so they look for the sources in advertised S-PMSI A-D routes and perform PIM registration only for those sources.

- Enhanced OISM limitation for data packets with a time to live (TTL) of 1: Enhanced OISM routes most multicast traffic on the SBD rather than on the source VLAN (even if the destination device hosts the source VLAN). Multicast data packets that are routed to the SBD and then to the destination VLAN have their TTL decremented more than once. As a result, packets with TTL=1 won't reach the receivers. This limitation applies to traffic for any multicast groups other than 224.0.0.0/24 (for IPv4 multicast) and ff02::/16 (for IPv6 multicast).
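The east-west forwarding choice and the TTL limitation can be modeled in a few lines. The Python sketch below is a simplified illustration, not device behavior: the function names and the peer map are invented for this example, and the count of two routed hops on the SBD path (one at the ingress leaf, one at the egress leaf) is an assumption based on the statement that the TTL is decremented more than once.

```python
def east_west_domain(mode, ingress, remote, es_peers):
    """Return the bridge domain the ingress leaf uses toward a remote leaf.

    es_peers maps a leaf name to the set of leaves it shares an Ethernet
    segment with.
    """
    if mode == "regular":
        # Regular OISM sends internal source traffic only on the source VLAN.
        return "source-vlan"
    if mode != "enhanced":
        raise ValueError(f"unknown OISM mode: {mode!r}")
    shares_es = remote in es_peers.get(ingress, set())
    return "source-vlan" if shares_es else "sbd"


def reaches_receiver(ttl, routed_hops):
    """Each routed hop decrements the TTL; a packet is dropped at TTL 0."""
    return ttl - routed_hops > 0


es_peers = {"SL-1": {"SL-2"}, "SL-2": {"SL-1"}}
for remote in ("SL-2", "SL-3"):
    print(remote, east_west_domain("enhanced", "SL-1", remote, es_peers))

# Assumed hop counts: 0 when bridged on the source VLAN, 2 on the SBD path.
print(reaches_receiver(ttl=1, routed_hops=0))
print(reaches_receiver(ttl=1, routed_hops=2))
```

The model ignores the exempt group ranges (224.0.0.0/24 and ff02::/16) and IGMP or MLD snooping state, both of which also affect what the ingress leaf actually sends.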

For more details on the operational differences between regular OISM and enhanced OISM mode, see the following pages in the EVPN User Guide:

For full details on all OISM concepts, components, configuration and operation, see Optimized Inter-Subnet Multicast in EVPN Networks.

Configure and Verify the EBGP Underlay and EBGP Overlay Peering

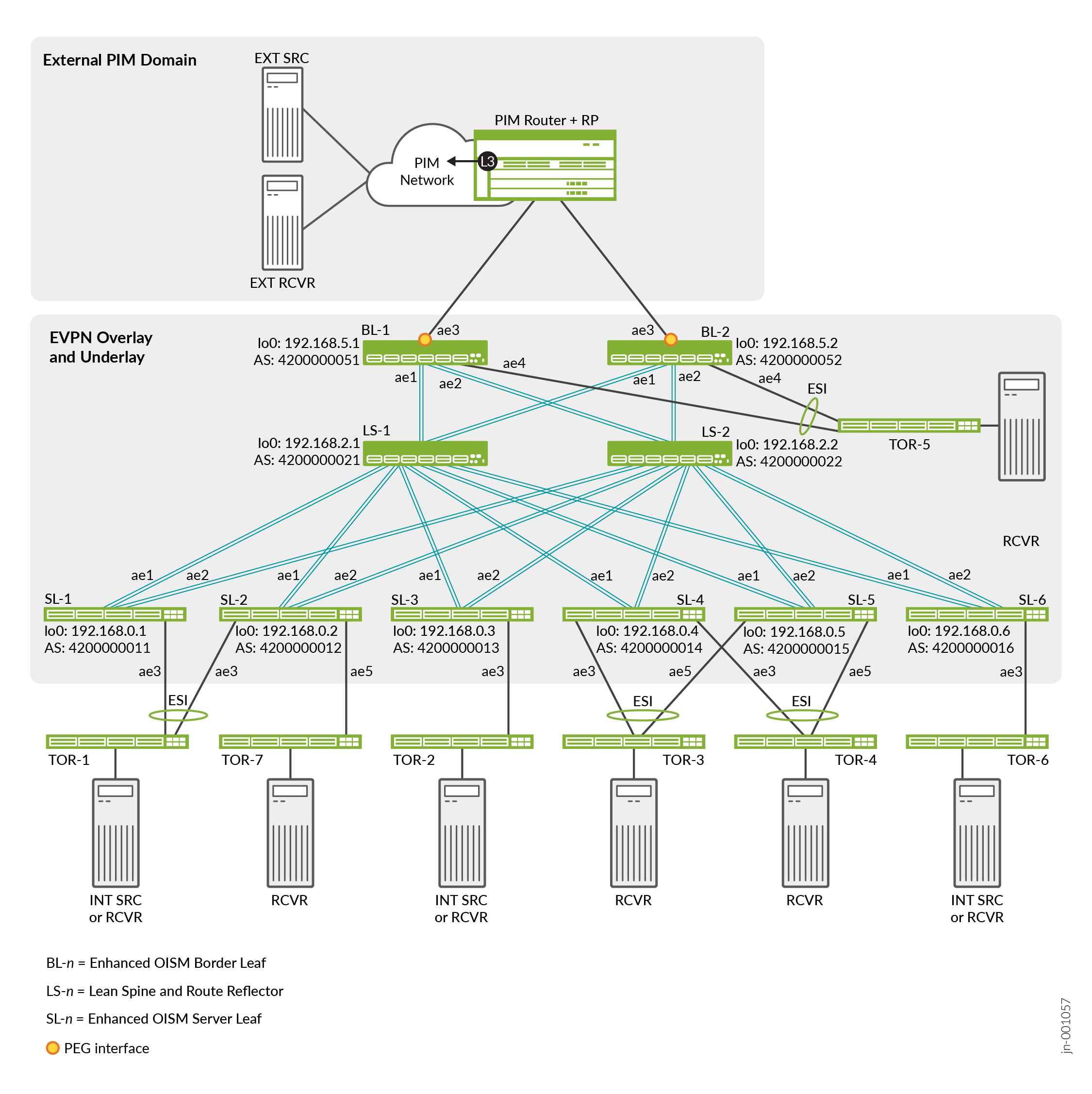

In the EVPN-VXLAN fabric configuration for regular OISM in Optimized Intersubnet Multicast (OISM) with Assisted Replication (AR) for Edge-Routed Bridging Overlays, we configure the underlay peering with EBGP and the overlay peering with IBGP. However, in this example for enhanced OISM, the EVPN-VXLAN reference architecture test environment uses EBGP for both the underlay peering and the overlay peering. See Figure 1.

We support both IPv4 and IPv6 multicast data traffic over the IPv4 underlay and overlay in this configuration.

All of the OISM server leaf devices (SL-n) and border leaf devices (BL-n) peer with the lean spine devices LS-1 and LS-2 in a full mesh spine and leaf configuration. See:

- Figure 1 for the EVPN core interface names, device loopback addresses, and AS numbers.

- Table 1 for the corresponding subnet addresses for the underlay peering interfaces. All interface addresses are .0 on the spine device side and .1 on the leaf device side.

| Leaf Device (lo0 Address) | Spine Device | Interface | Underlay Subnet |
|---|---|---|---|
| SL-1 192.168.0.1 | LS-1 | ae1 | 172.16.1.0/31 |
| SL-1 192.168.0.1 | LS-2 | ae2 | 172.16.3.0/31 |
| SL-2 192.168.0.2 | LS-1 | ae1 | 172.16.2.0/31 |
| SL-2 192.168.0.2 | LS-2 | ae2 | 172.16.4.0/31 |
| SL-3 192.168.0.3 | LS-1 | ae1 | 172.16.5.0/31 |
| SL-3 192.168.0.3 | LS-2 | ae2 | 172.16.6.0/31 |
| SL-4 192.168.0.4 | LS-1 | ae1 | 172.16.7.0/31 |
| SL-4 192.168.0.4 | LS-2 | ae2 | 172.16.9.0/31 |
| SL-5 192.168.0.5 | LS-1 | ae1 | 172.16.8.0/31 |
| SL-5 192.168.0.5 | LS-2 | ae2 | 172.16.10.0/31 |
| SL-6 192.168.0.6 | LS-1 | ae1 | 172.16.15.0/31 |
| SL-6 192.168.0.6 | LS-2 | ae2 | 172.16.16.0/31 |
| BL-1 192.168.5.1 | LS-1 | ae1 | 172.16.11.0/31 |
| BL-1 192.168.5.1 | LS-2 | ae2 | 172.16.13.0/31 |
| BL-2 192.168.5.2 | LS-1 | ae1 | 172.16.12.0/31 |
| BL-2 192.168.5.2 | LS-2 | ae2 | 172.16.14.0/31 |

Configure EBGP Underlay Peering

The underlay configuration is similar to the regular OISM EBGP underlay configuration example, but the enhanced OISM test environment has a few interface subnet differences. On each leaf device, configure EBGP for the underlay peering with neighbor devices LS-1 and LS-2. For example:

SL-1:

    set policy-options policy-statement underlay-clos-export term loopback from interface lo0.0
    set policy-options policy-statement underlay-clos-export term loopback then accept
    set protocols bgp group underlay-bgp type external
    set protocols bgp group underlay-bgp export underlay-clos-export
    set protocols bgp group underlay-bgp local-as 4200000011
    set protocols bgp group underlay-bgp multipath multiple-as
    set protocols bgp group underlay-bgp bfd-liveness-detection minimum-interval 1000
    set protocols bgp group underlay-bgp bfd-liveness-detection multiplier 3
    set protocols bgp group underlay-bgp bfd-liveness-detection session-mode automatic
    set protocols bgp group underlay-bgp neighbor 172.16.1.0 peer-as 4200000021
    set protocols bgp group underlay-bgp neighbor 172.16.3.0 peer-as 4200000022
    set protocols bgp log-updown
    set protocols bgp graceful-restart

Substitute the corresponding interface IP addresses and AS numbers when you configure the underlay on the other OISM leaf devices.
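As one way to do that substitution, the following Python sketch renders the per-device underlay statements from a leaf device's Table 1 subnets. It is a helper invented for illustration, not a Juniper tool. The spine AS numbers come from the SL-1 example; the local AS value 4200000012 is a placeholder, because the real AS numbers for the other leaf devices appear only in Figure 1.

```python
import ipaddress

# Spine AS numbers as they appear in the SL-1 example.
SPINE_AS = {"LS-1": 4200000021, "LS-2": 4200000022}


def underlay_neighbor_commands(local_as, subnet_by_spine):
    """Render the device-specific underlay BGP statements for one leaf."""
    commands = [f"set protocols bgp group underlay-bgp local-as {local_as}"]
    for spine, subnet in subnet_by_spine.items():
        network = ipaddress.ip_network(subnet)
        if network.prefixlen != 31:
            raise ValueError(f"expected a /31 subnet, got {subnet}")
        # The first address of the /31 (.0) is the spine side of the link.
        spine_address = network[0]
        commands.append(
            "set protocols bgp group underlay-bgp "
            f"neighbor {spine_address} peer-as {SPINE_AS[spine]}"
        )
    return commands


# SL-2 subnets from Table 1; 4200000012 is a placeholder local AS.
sl2_commands = underlay_neighbor_commands(
    4200000012, {"LS-1": "172.16.2.0/31", "LS-2": "172.16.4.0/31"}
)
print("\n".join(sl2_commands))
```

The remaining statements in the group (policy, BFD, multipath) are the same on every leaf device, so the sketch leaves them out.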

Configure EBGP Overlay Peering

On each leaf device, configure the EBGP overlay peering with neighbor devices LS-1 and LS-2. For example:

SL-1:

    set protocols bgp group overlay-ebgp type external
    set protocols bgp group overlay-ebgp multihop no-nexthop-change
    set protocols bgp group overlay-ebgp local-address 192.168.0.1
    set protocols bgp group overlay-ebgp family evpn signaling
    set protocols bgp group overlay-ebgp local-as 4200000011
    set protocols bgp group overlay-ebgp multipath multiple-as
    set protocols bgp group overlay-ebgp bfd-liveness-detection minimum-interval 4000
    set protocols bgp group overlay-ebgp bfd-liveness-detection multiplier 3
    set protocols bgp group overlay-ebgp bfd-liveness-detection session-mode automatic
    set protocols bgp group overlay-ebgp neighbor 192.168.2.1 peer-as 4200000021
    set protocols bgp group overlay-ebgp neighbor 192.168.2.2 peer-as 4200000022
    set protocols bgp group overlay-ebgp vpn-apply-export

Substitute the corresponding device loopback addresses and AS numbers when you configure the overlay on the other OISM leaf devices.

Verify Underlay and Overlay Peering

To verify the EBGP underlay and overlay peering on SL-1 (lo0: 192.168.0.1, AS 4200000011), for example, look for the following in the output from the show bgp neighbor command:

- Underlay peering on:

  - LS-1: Subnet 172.16.1.0/31

  - LS-2: Subnet 172.16.3.0/31

- Overlay peering with:

  - LS-1: lo0 192.168.2.1, AS 4200000021

  - LS-2: lo0 192.168.2.2, AS 4200000022

    user@SL-1> show bgp neighbor | grep Peer
    Peer: 172.16.1.0+179 AS 4200000021 Local: 172.16.1.1+58263 AS 4200000011
    Options: <AuthKey GracefulRestart LogUpDown PeerAS Multipath LocalAS Refresh>
    Peer ID: 192.168.2.1 Local ID: 192.168.0.1 Active Holdtime: 90
    Keepalive Interval: 30 Group index: 0 Peer index: 0 SNMP index: 0
    NLRI for restart configured on peer: inet-unicast
    NLRI advertised by peer: inet-unicast
    Peer supports Refresh capability (2)
    Restart time configured on the peer: 120
    Stale routes from peer are kept for: 300
    Peer does not support Restarter functionality
    Restart flag received from the peer: Notification
    Peer does not support LLGR Restarter functionality
    Peer supports 4 byte AS extension (peer-as 4200000021)
    Peer does not support Addpath
    Peer: 172.16.3.0+179 AS 4200000022 Local: 172.16.3.1+57766 AS 4200000011
    Options: <AuthKey GracefulRestart LogUpDown PeerAS Multipath LocalAS Refresh>
    Peer ID: 192.168.2.2 Local ID: 192.168.0.1 Active Holdtime: 90
    Keepalive Interval: 30 Group index: 0 Peer index: 1 SNMP index: 1
    NLRI for restart configured on peer: inet-unicast
    NLRI advertised by peer: inet-unicast
    Peer supports Refresh capability (2)
    Restart time configured on the peer: 120
    Stale routes from peer are kept for: 300
    Peer does not support Restarter functionality
    Restart flag received from the peer: Notification
    Peer does not support LLGR Restarter functionality
    Peer supports 4 byte AS extension (peer-as 4200000022)
    Peer does not support Addpath
    Peer: 192.168.2.1+51349 AS 4200000021 Local: 192.168.0.1+179 AS 4200000011
    Options: <Multihop NoNextHopChange LocalAddress AuthKey GracefulRestart LogUpDown AddressFamily PeerAS Multipath LocalAS Rib-group Refresh>
    Peer ID: 192.168.2.1 Local ID: 192.168.0.1 Active Holdtime: 90
    Keepalive Interval: 30 Group index: 1 Peer index: 0 SNMP index: 2
    NLRI for restart configured on peer: evpn
    NLRI advertised by peer: evpn
    Peer supports Refresh capability (2)
    Restart time configured on the peer: 120
    Stale routes from peer are kept for: 300
    Peer does not support Restarter functionality
    Restart flag received from the peer: Notification
    Peer does not support LLGR Restarter functionality
    Peer supports 4 byte AS extension (peer-as 4200000021)
    Peer does not support Addpath
    Peer: 192.168.2.2+60628 AS 4200000022 Local: 192.168.0.1+179 AS 4200000011
    Options: <Multihop NoNextHopChange LocalAddress AuthKey GracefulRestart LogUpDown AddressFamily PeerAS Multipath LocalAS Rib-group Refresh>
    Peer ID: 192.168.2.2 Local ID: 192.168.0.1 Active Holdtime: 90
    Keepalive Interval: 30 Group index: 1 Peer index: 1 SNMP index: 3
    NLRI for restart configured on peer: evpn
    NLRI advertised by peer: evpn
    Peer supports Refresh capability (2)
    Restart time configured on the peer: 120
    Stale routes from peer are kept for: 300
    Peer does not support Restarter functionality
    Restart flag received from the peer: Notification
    Peer does not support LLGR Restarter functionality
    Peer supports 4 byte AS extension (peer-as 4200000022)
    Peer does not support Addpath

Run the command on each OISM leaf device and look for the corresponding interconnecting subnets and overlay device loopback addresses.
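If you collect this output from several devices, a short script can pull out the peer and local endpoints for comparison against Table 1 and the loopback addresses. The Python sketch below is a hypothetical helper: the regular expression is written against the "Peer: ... Local: ..." lines shown for SL-1 and has not been validated against other output formats.

```python
import re

# Matches lines such as:
#   Peer: 172.16.1.0+179 AS 4200000021 Local: 172.16.1.1+58263 AS 4200000011
PEER_LINE = re.compile(
    r"Peer:\s+(?P<peer>[0-9.]+)\+\d+\s+AS\s+(?P<peer_as>\d+)\s+"
    r"Local:\s+(?P<local>[0-9.]+)\+\d+\s+AS\s+(?P<local_as>\d+)"
)


def parse_bgp_peers(output):
    """Return (peer_ip, peer_as, local_ip, local_as) for each BGP session."""
    return [
        (
            match.group("peer"),
            int(match.group("peer_as")),
            match.group("local"),
            int(match.group("local_as")),
        )
        for match in PEER_LINE.finditer(output)
    ]


sample = """\
Peer: 172.16.1.0+179 AS 4200000021 Local: 172.16.1.1+58263 AS 4200000011
Peer ID: 192.168.2.1 Local ID: 192.168.0.1 Active Holdtime: 90
Peer: 192.168.2.1+51349 AS 4200000021 Local: 192.168.0.1+179 AS 4200000011
"""

for session in parse_bgp_peers(sample):
    print(session)
```

Lines such as "Peer ID:" are skipped because the pattern requires the literal "Peer:" followed by an address and port.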

Configure the EVPN MAC-VRF Instance and Enable Enhanced OISM

You configure the same EVPN MAC-VRF instance named MACVRF-1 on all OISM server leaf and OISM border leaf devices, with only a few differences for parameters that depend on which device you're configuring. In later sections for different use case configurations, you add to the instance the VLANs, corresponding IRB interfaces, and VXLAN VNI mappings that are specific to that use case.

Configure Platform-specific Parameters

Configure the following platform-specific settings required in scaled EVPN-VXLAN environments on all OISM server leaf or OISM border leaf devices of the indicated device types. For details, see the similar configuration step 2 for regular OISM in Configure an OISM-Enabled EVPN MAC-VRF Instance.

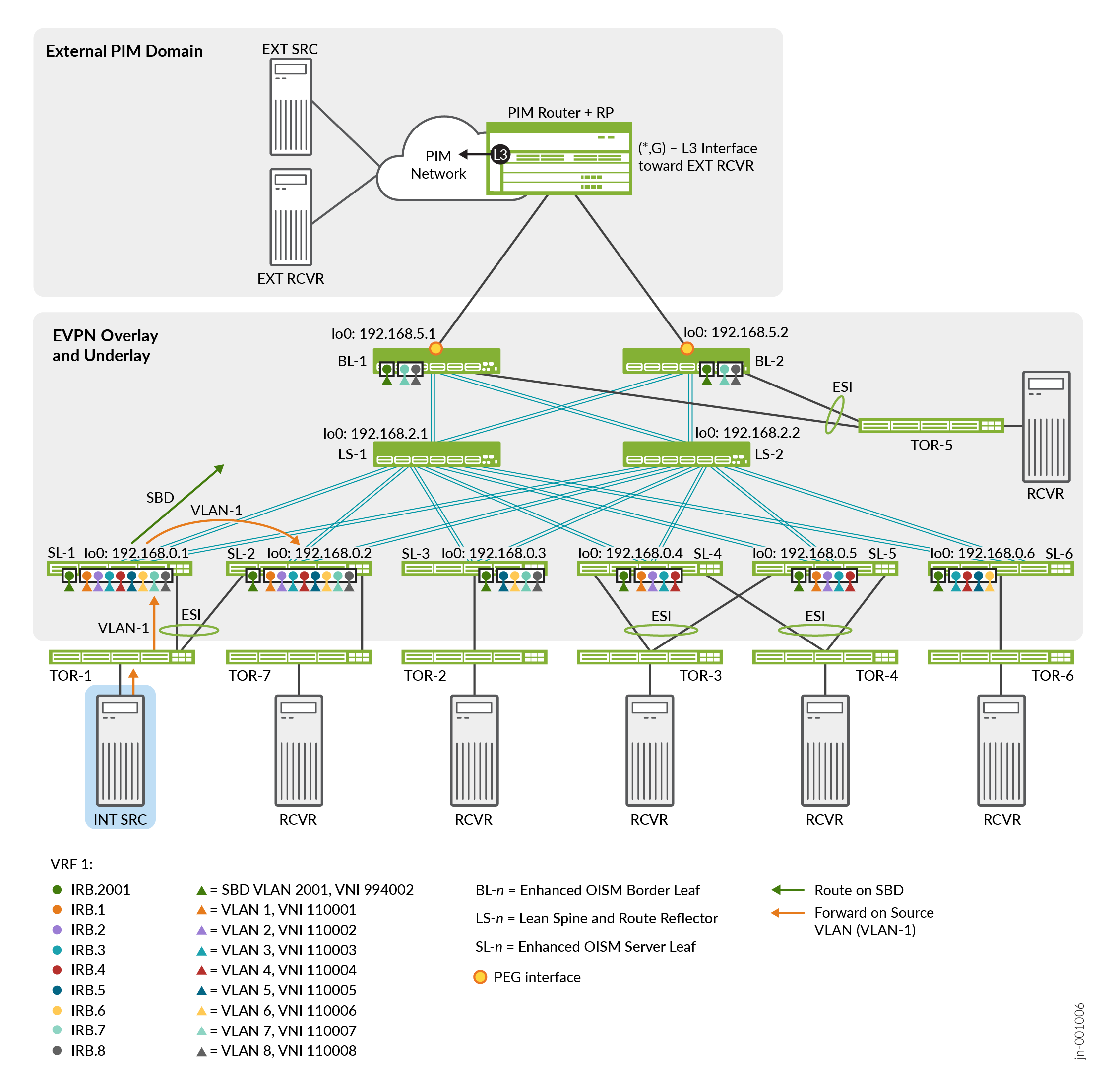

Use Case #1: Internal Source to Internal Receivers (Including Multihoming Peer) with IGMPv3—SSM

In use case #1, we configure the topology in Figure 2 with a tenant VRF called VRF-1. This use case includes:

- An internal multicast source and internal multicast receivers using IGMPv3 with intra-VLAN and inter-VLAN multicast flows.

- A single-homed receiver behind a server leaf device that is a multihoming peer of the ingress server leaf device, so:

  - The multihoming peer devices must have the same set of revenue VLANs configured (even if both devices don't host all of the VLANs).

  - The ingress server leaf device forwards multicast traffic on the source VLAN (not the SBD) to the multihoming peer device toward the receiver behind that peer device.

  - The ingress server leaf device routes the multicast traffic on the SBD toward the receivers on all of the other OISM leaf devices.

Table 2 describes the multicast groups, device roles, configured VLANs, VXLAN VNI mappings for the VLANs, and the corresponding IRB interfaces for each VLAN.

SBD for VRF-1 on all enhanced OISM leaf devices: VLAN-2001, irb.2001, VNI 994002

Multicast source VLAN: VLAN-1, Source Host IP address: 10.0.1.12

IGMPv3 (SSM) multicast groups: 233.252.0.1 – 233.252.0.3 for intra-VLAN (L2), and 233.252.0.101 – 233.252.0.103 for inter-VLAN (L3)

| Role | Device | Configured Revenue VLANs | Configured IRB Interfaces | VXLAN VNI Mappings |
|---|---|---|---|---|
| Source | TOR-1, multihomed to SL-1¹ and SL-2¹ | VLAN-1 - VLAN-8 | irb.1 - irb.8 | VNI 110001 - VNI 110008 |
| Receivers | TOR-7, single-homed to SL-2¹ | VLAN-1 - VLAN-8 | irb.1 - irb.8 | VNI 110001 - VNI 110008 |
| Receivers | TOR-2, single-homed to SL-3 | VLAN-5 - VLAN-8 | irb.5 - irb.8 | VNI 110005 - VNI 110008 |
| Receivers | TOR-3, multihomed to SL-4² and SL-5² | VLAN-1 - VLAN-4 | irb.1 - irb.4 | VNI 110001 - VNI 110004 |
| Receivers | TOR-4, multihomed to SL-4² and SL-5² | VLAN-1 - VLAN-4 | irb.1 - irb.4 | VNI 110001 - VNI 110004 |
| Receivers | TOR-5, multihomed to BL-1³ and BL-2³ | VLAN-7 - VLAN-8 | irb.7 - irb.8 | VNI 110007 - VNI 110008 |
| Receivers | TOR-6, single-homed to SL-6 | VLAN-3 - VLAN-6 | irb.3 - irb.6 | VNI 110003 - VNI 110006 |

¹ SL-1 and SL-2 are multihoming peers, so we configure the same revenue VLANs on SL-1 and SL-2.

² SL-4 and SL-5 are multihoming peers, so we configure the same revenue VLANs on SL-4 and SL-5.

³ BL-1 and BL-2 are multihoming peers, so we configure the same revenue VLANs on BL-1 and BL-2.

Configure Use Case #1: Internal Source and Receivers with Multihoming Peer Receiver and IGMPv3—SSM

Configure the revenue VLANs, SBD, tenant VRF, and multicast protocols specific to the use case in Use Case #1: Internal Source to Internal Receivers (Including Multihoming Peer) with IGMPv3—SSM.

Verify Use Case #1: Internal Source and Receivers with Multihoming Peer Receiver and IGMPv3—SSM

Verify enhanced OISM operation for use case #1, in which the internal source is behind SL-1 and SL-2. SL-2 is a multihoming peer of SL-1, and also has an internal receiver. SL-3 through SL-6, BL-1, and BL-2 also have internal receivers. See the topology in Figure 2, and the configuration in Configure Use Case #1: Internal Source and Receivers with Multihoming Peer Receiver and IGMPv3—SSM.

Use Case #2: Internal Source to Internal and External Receivers with IGMPv2—ASM

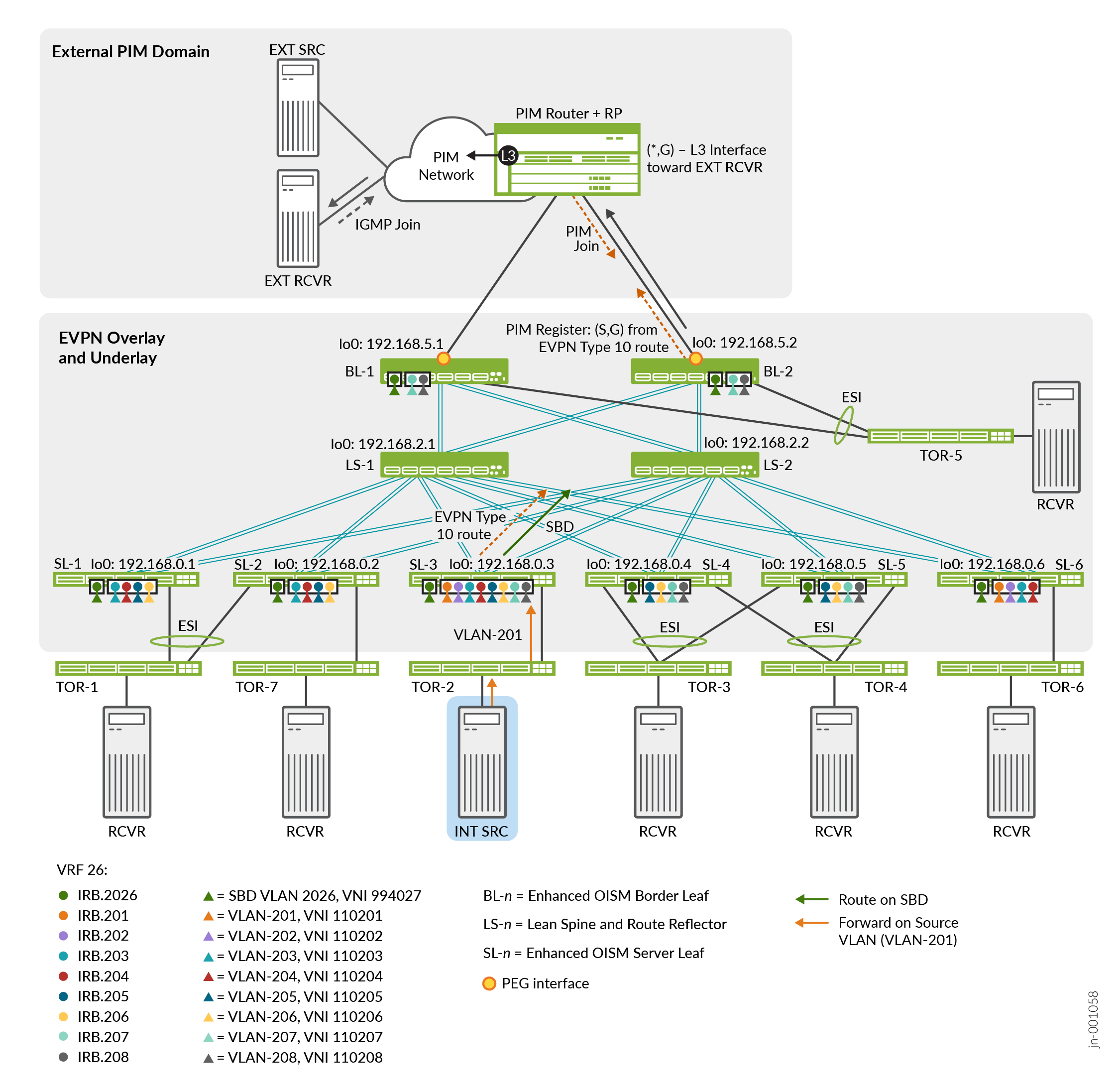

In use case #2, we configure the topology in Figure 3 with a tenant VRF called VRF-26. This use case includes:

- Intra-VLAN and inter-VLAN multicast flows using IGMPv2.

- A single-homed internal multicast source and internal multicast receivers.

- An external multicast receiver in an external PIM domain. As a result:

  - The ingress leaf device advertises EVPN Type 10 S-PMSI A-D routes for each internal multicast source and group (S,G).

  - The OISM border leaf PEG devices, BL-1 and BL-2, receive the EVPN Type 10 route. The BL device that is the PIM designated router (DR) for the SBD, BL-2 in this case, sends a PIM register message for the (S,G) on its PEG interface to the PIM router in the external PIM domain.

  - In response to the PIM register message, the PIM router sends a PIM Join message to either of the multihomed attached OISM PEG devices, BL-1 or BL-2. In this case, BL-2 receives the PIM Join message.

  - The OISM PEG device that receives the PIM join, BL-2, sends the multicast traffic on its PEG interface toward the external receiver behind the PIM router.

  Note: The test environment uses an MX Series router as the PIM router and PIM RP in an external PIM domain. See Configure External Multicast PIM Router and PIM RP Router for an example of how to configure an MX Series router as a PIM router and RP with regular OISM; the steps are similar with enhanced OISM.
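The registration step in the sequence above comes down to two conditions: only the PEG device that is the PIM DR on the SBD registers, and it registers only the (S,G) pairs learned from S-PMSI A-D routes. The Python sketch below models just that decision. The function name and data shapes are assumptions for illustration; the source address and groups are the use case #2 values.

```python
def pim_registers(peg_devices, sbd_pim_dr, spmsi_ad_routes):
    """Return {peg_device: [(source, group), ...]} of PIM registers to send.

    spmsi_ad_routes holds the (S,G) pairs advertised in EVPN Type 10 routes.
    """
    registers = {device: [] for device in peg_devices}
    if sbd_pim_dr in registers:
        # Only the PIM DR for the SBD registers, and only advertised sources.
        registers[sbd_pim_dr] = sorted(set(spmsi_ad_routes))
    return registers


routes = [
    ("10.0.201.12", "233.252.0.171"),
    ("10.0.201.12", "233.252.0.172"),
]
print(pim_registers(["BL-1", "BL-2"], "BL-2", routes))
```

The model stops at the register message; which PEG device receives the resulting PIM Join depends on the external PIM router and is not represented here.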


Table 4 describes the multicast groups, device roles, configured VLANs, VXLAN VNI mappings for the VLANs, and the corresponding IRB interfaces for each VLAN.

SBD for VRF-26 on all enhanced OISM leaf devices: VLAN-2026, irb.2026, VNI 994027

Multicast source VLAN: VLAN-201, Source Host IP address: 10.0.201.12

IGMPv2 (ASM) multicast groups: 233.252.0.71 – 233.252.0.73 for intra-VLAN (L2), and 233.252.0.171 – 233.252.0.173 for inter-VLAN (L3)

| Role | Device | Configured Revenue VLANs | Configured IRB Interfaces | VXLAN VNI Mappings |
|---|---|---|---|---|
| Source | TOR-2, single-homed to SL-3 | VLAN-201 - VLAN-208 | irb.201 - irb.208 | VNI 110201 - VNI 110208 |
| Receivers | TOR-1, multihomed to SL-1¹ and SL-2¹ | VLAN-203 - VLAN-206 | irb.203 - irb.206 | VNI 110203 - VNI 110206 |
| Receivers | TOR-3, multihomed to SL-4² and SL-5² | VLAN-205 - VLAN-208 | irb.205 - irb.208 | VNI 110205 - VNI 110208 |
| Receivers | TOR-4, multihomed to SL-4² and SL-5² | VLAN-205 - VLAN-208 | irb.205 - irb.208 | VNI 110205 - VNI 110208 |
| Receivers | TOR-5, multihomed to BL-1³ and BL-2³ | VLAN-207 - VLAN-208 | irb.207 - irb.208 | VNI 110207 - VNI 110208 |
| Receivers | TOR-6, single-homed to SL-6 | VLAN-201 - VLAN-204 | irb.201 - irb.204 | VNI 110201 - VNI 110204 |
| Receivers | EXT RCVR in External PIM domain | VLAN-3126 | n/a | n/a |

¹ SL-1 and SL-2 are multihoming peers, so configure the same revenue VLANs on SL-1 and SL-2.

² SL-4 and SL-5 are multihoming peers, so configure the same revenue VLANs on SL-4 and SL-5.

³ BL-1 and BL-2 are multihoming peers, so configure the same revenue VLANs on BL-1 and BL-2.

Configure Use Case #2: Internal Source to Internal and External Receivers with IGMPv2—ASM

Configure the revenue VLANs, SBD, tenant VRF, and multicast protocols specific to the use case in Use Case #2: Internal Source to Internal and External Receivers with IGMPv2—ASM.

The main differences in this use case from use case #1 are that here:

- We use enhanced OISM for multicast flows with IGMPv2 (ASM) from a single-homed source behind SL-3.

- SL-3 hosts revenue VLANs VLAN-201 through VLAN-208, and the other leaf devices host different subsets of those revenue VLANs.

- We use VRF-26, and configure the SBD for this VRF as VLAN-2026.

Verify Use Case #2: Internal Source to Internal and External Receivers with IGMPv2—ASM

Verify enhanced OISM operation for use case #2 where we have a single-homed internal source behind SL-3 sending IGMPv2 multicast traffic. SL-2 is a multihoming peer of SL-1, and also connects to an internal receiver on TOR-7. SL-3 through SL-6, BL-1, and BL-2 connect to internal receivers. An external receiver also subscribes to the source traffic through an external PIM router and RP by way of OISM PEG devices BL-1 and BL-2. See the topology in Figure 3, and the configuration in Configure Use Case #2: Internal Source to Internal and External Receivers with IGMPv2—ASM.

In this case, we focus on how to verify enhanced OISM operation with an external receiver. SL-3, as the ingress OISM leaf device, advertises EVPN Type 10 S-PMSI A-D routes for the multicast source and groups (S,G). BL-1 and BL-2 receive the EVPN Type 10 route on the SBD. In this example, BL-2 is the PIM DR for the SBD, so BL-2 sends a PIM register message for the (S,G) on its PEG interface to the PIM router and RP. The PIM router sends a PIM Join back to either of the multihomed attached OISM PEG devices, in this case BL-2. BL-2 sends multicast traffic from that (S,G) on its PEG interface toward the external receiver.

See Use Case #2: Internal Source to Internal and External Receivers with IGMPv2—ASM for all of the parameters in this use case.

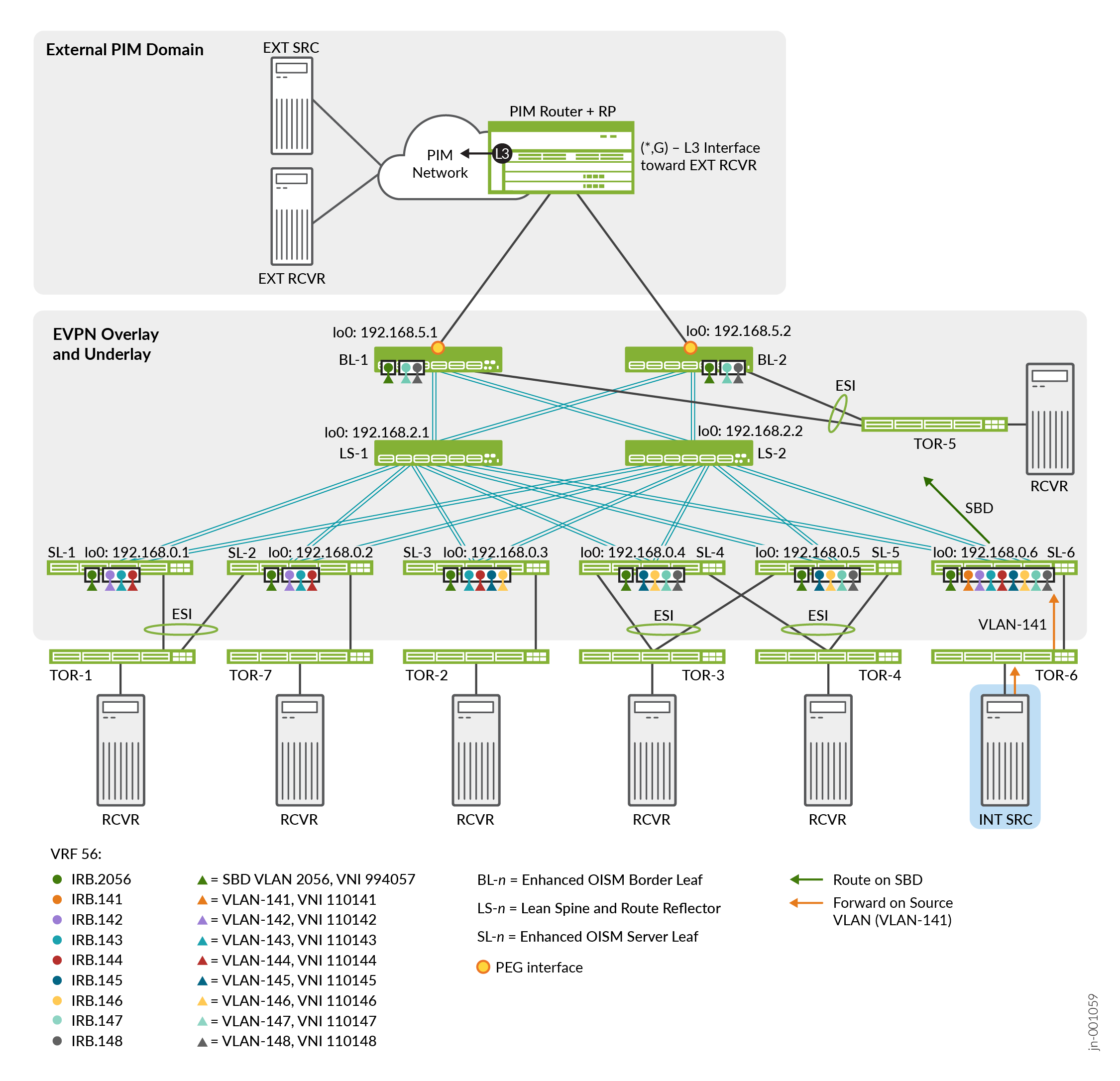

Use Case #3: Internal Source to Internal Receivers with MLDv2—SSM

In use case #3, we configure the topology in Figure 4 with a tenant VRF called VRF-56. This use case includes:

- An internal multicast source and internal multicast receivers.

- Inter-VLAN IPv6 multicast flows using MLDv2 (none of the receiving OISM leaf devices host the source VLAN, so all OISM traffic will be inter-VLAN traffic).

Note that we support both IPv4 and IPv6 multicast data traffic with an IPv4 EVPN core. We use the same underlay and overlay peering for MLD multicast traffic as we use for IGMP in use case #1 and use case #2.

Table 6 describes the multicast groups, device roles, configured VLANs, VXLAN VNI mappings for the VLANs, and the corresponding IRB interfaces for each VLAN.

SBD for VRF-56 on all enhanced OISM leaf devices: VLAN-2056, irb.2056, VNI 994057

Multicast source VLAN: VLAN-141, Source Host IPv6 address: 2001:db8::10:0:8d:0c

MLDv2 (SSM) multicast groups for inter-VLAN traffic only: ff0e::db8:0:1 – ff0e::db8:0:3 for inter-VLAN (L3)

| Role | Device | Configured Revenue VLANs | Configured IRB Interfaces | VXLAN VNI Mappings |
|---|---|---|---|---|
| Source | TOR-6, single-homed to SL-6 | VLAN-141 - VLAN-148 | irb.141 - irb.148 | VNI 110141 - VNI 110148 |
| Receivers | TOR-1, multihomed to SL-1¹ and SL-2¹ | VLAN-142 - VLAN-144 | irb.142 - irb.144 | VNI 110142 - VNI 110144 |
| Receivers | TOR-7, single-homed to SL-2¹ | VLAN-142 - VLAN-144 | irb.142 - irb.144 | VNI 110142 - VNI 110144 |
| Receivers | TOR-2, single-homed to SL-3 | VLAN-143 - VLAN-146 | irb.143 - irb.146 | VNI 110143 - VNI 110146 |
| Receivers | TOR-3, multihomed to SL-4² and SL-5² | VLAN-145 - VLAN-148 | irb.145 - irb.148 | VNI 110145 - VNI 110148 |
| Receivers | TOR-4, multihomed to SL-4² and SL-5² | VLAN-145 - VLAN-148 | irb.145 - irb.148 | VNI 110145 - VNI 110148 |
| Receivers | TOR-5, multihomed to BL-1³ and BL-2³ | VLAN-147 - VLAN-148 | irb.147 - irb.148 | VNI 110147 - VNI 110148 |

¹ SL-1 and SL-2 are multihoming peers, so configure the same revenue VLANs on SL-1 and SL-2.

² SL-4 and SL-5 are multihoming peers, so configure the same revenue VLANs on SL-4 and SL-5.

³ BL-1 and BL-2 are multihoming peers, so configure the same revenue VLANs on BL-1 and BL-2.

Configure Use Case #3: Internal Source and Receivers with MLDv2—SSM

Configure the revenue VLANs, SBD, tenant VRF, and multicast protocols specific to the use case in Use Case #3: Internal Source to Internal Receivers with MLDv2—SSM.

The main differences in this use case from use case #1 are that here:

- We use enhanced OISM for IPv6 multicast flows with MLDv2 from a single-homed source behind SL-6.

- SL-6 hosts revenue VLANs VLAN-141 through VLAN-148, and the other leaf devices host different subsets of revenue VLANs VLAN-142 through VLAN-148.

- We configure a VRF named VRF-56, and configure the SBD for this VRF using VLAN-2056.

Verify Use Case #3: Internal Source and Receivers with MLDv2—SSM

Verify enhanced OISM operation for use case #3, in which the internal source is behind SL-6 sending IPv6 multicast flows for inter-VLAN multicast traffic with MLDv2—SSM. SL-1 through SL-5, BL-1, and BL-2 are internal receivers. See the topology in Figure 4, and the configuration in Configure Use Case #3: Internal Source and Receivers with MLDv2—SSM.

Verification for this use case is similar to the verification in use case #1 but with an IPv6 source address and IPv6 multicast traffic. We include verification commands here on SL-6 as the OISM leaf device sending the source traffic, and on SL-1 and SL-2 as the OISM leaf devices receiving the traffic. On the other receiving devices, based on the revenue VLANs they host, you'll see output similar to what we include here for SL-1 and SL-2.