Optimized Intersubnet Multicast (OISM) in EVPN Networks

Enable optimized intersubnet multicast (OISM) to optimize multicast traffic routing and forwarding in an EVPN edge-routed bridging (ERB) overlay fabric. OISM avoids multicast data flooding to efficiently support scaled multicast environments. Also, with OISM your network can support multicast traffic flow among devices inside and outside of the EVPN fabric.

Overview of OISM

Traditional methods to support multicast traffic use ingress replication and flood multicast packets into the network to reach any interested listeners. Those methods don't scale well and have latency issues when your network has large multicast flows. Also, configuring the network to properly and efficiently handle multicast traffic from sources and to receivers outside of your network is complex.

Optimized intersubnet multicast (OISM) is a multicast traffic optimization feature that operates at Layer 2 (L2) and Layer 3 (L3) in EVPN-VXLAN edge-routed bridging (ERB) overlay fabrics. OISM solves many of the issues inherent in other multicast methods. The OISM design is based on the IETF draft specification https://datatracker.ietf.org/doc/html/draft-ietf-bess-evpn-irb-mcast.

We refer to our original OISM implementation as regular OISM. Regular OISM uses a symmetric bridge domains OISM model that requires you to configure all revenue VLANs (also called tenant VLANs) in the network on all OISM leaf devices.

Starting in Junos OS Release 23.4R1, we also support an enhanced version of OISM. Enhanced OISM uses an asymmetric bridge domains OISM model with which you don't need to configure all revenue VLANs in the network on all OISM devices. On each device, you can configure only the revenue VLANs that the device hosts. To support the asymmetric bridge domains model, enhanced OISM has some operational differences from the symmetric bridge domains model and small configuration differences. The differences are called out throughout this document.

You can apply OISM configuration and operation to multicast traffic but not to broadcast or unknown unicast traffic.

In EVPN ERB overlay fabric designs, the leaf devices in the fabric route traffic between tenant bridge domains (that is, between VLANs). When you enable OISM, the leaf devices route intersubnet multicast traffic locally through IRB interfaces using the control plane multicast states. With local routing between VLANs, the receiver IRB interface doesn't send the routed multicast traffic out into the EVPN core. The local routing model helps minimize the traffic load within the EVPN core. It also avoids traffic hairpinning.

OISM leaf devices also selectively forward traffic into the EVPN core only toward other EVPN devices with interested receivers. Selective forwarding further improves multicast traffic performance in the EVPN fabric.

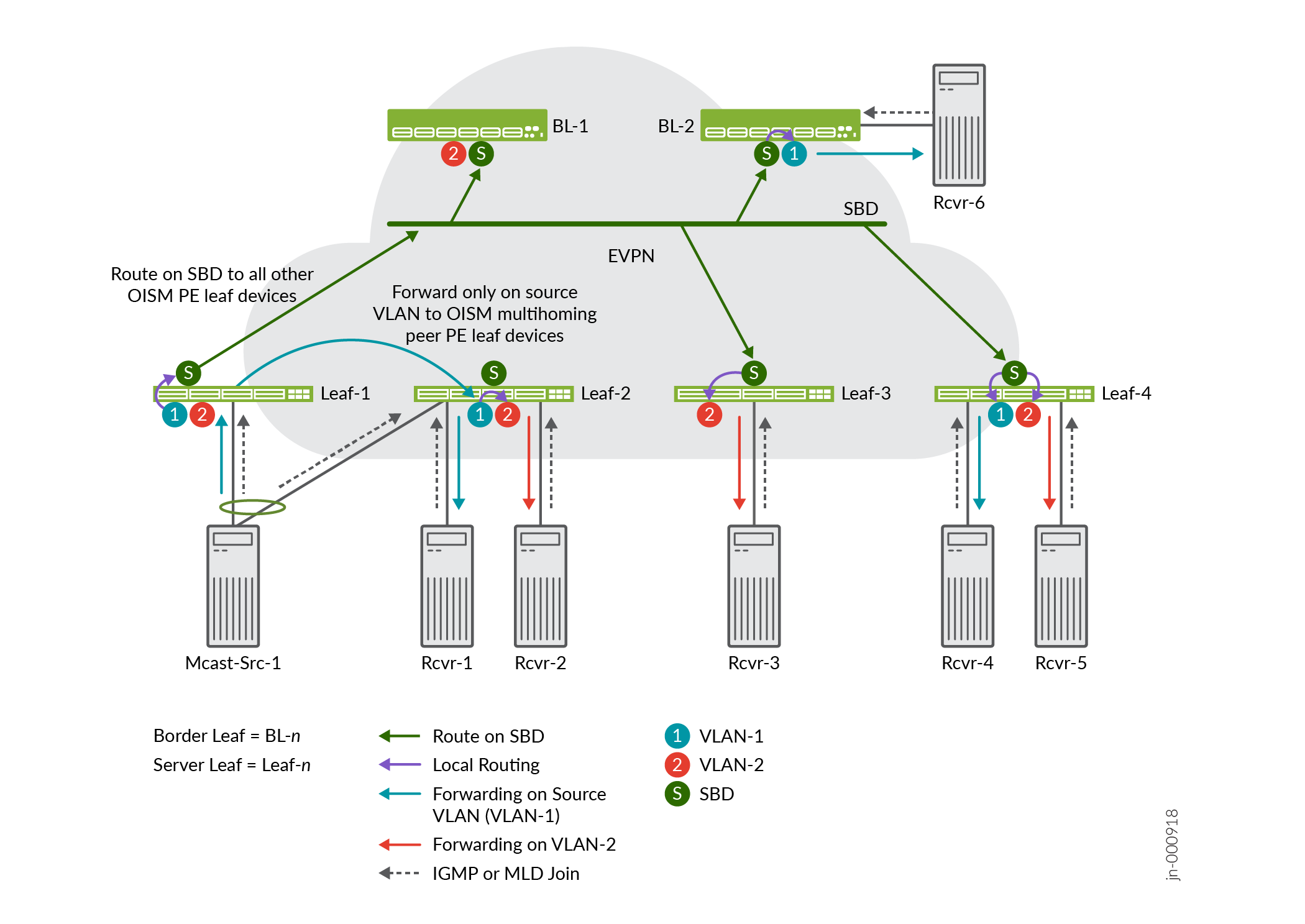

With OISM enabled, ERB overlay fabrics can efficiently and effectively support multicast traffic flow between devices inside and outside the EVPN fabric. Without OISM, fabric designers must use the centrally routed bridging (CRB) overlay model to support multicast with external sources or receivers. OISM border leaf devices support different methods to route traffic to and from an external PIM domain. These methods use either integrated routing and bridging (IRB) interfaces or Layer 3 (L3) interfaces. OISM also employs a supplemental bridge domain (SBD) inside the fabric as follows:

- The SBD has a different VLAN ID from any of the revenue VLANs.

- Border leaf devices use the SBD to carry the traffic from external sources toward receivers within the EVPN fabric.

- In enhanced OISM mode, server leaf devices use the SBD to carry traffic from internal sources to other server leaf devices in the EVPN fabric that are not multihoming peers. Enhanced mode leaf devices use the source VLAN only to send multicast traffic to their multihoming peer leaf devices.

- Benefits of OISM

- OISM Support in EVPN Instances

- OISM Support with Tenant L3 VRF Instances or the Default L3 Routing Instance

- OISM with Multicast Protocols and Other Multicast Optimizations in EVPN Fabrics

- Platform-Specific OISM Behavior with Regular OISM

Benefits of OISM

- Enables EVPN-VXLAN fabrics with the ERB overlay model to support multicast traffic with sources and receivers outside of the fabric.

- Minimizes multicast control packets and replicated data packets in the EVPN fabric core to optimize fabric multicast performance in scaled designs.

- With enhanced OISM mode, you can further support scaled network designs with leaf devices that host a large number of diverse VLANs (on each leaf device, you need to configure only the VLANs that the device hosts).

OISM Support in EVPN Instances

We support OISM in EVPN-VXLAN fabrics in the following types of EVPN instances:

- EVPN in the default switch instance (on platforms that support a default switch instance)

- MAC-VRF EVPN routing instances with vlan-aware and vlan-based service types only (see MAC-VRF Routing Instance Type Overview)

On Junos OS Evolved devices, we support EVPN-VXLAN using EVPN configurations with MAC-VRF instances only, and not in the default switch instance. As a result, on these devices we support OISM only in MAC-VRF EVPN instances.
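As a rough illustration of the MAC-VRF case, the following sketch shows what a vlan-aware MAC-VRF EVPN instance hosting one revenue VLAN might look like. The instance name MACVRF-1, the VLAN name and ID, the VNI, the IRB unit, the route distinguisher, and the route target are all hypothetical values chosen for this example; they don't come from this document.

```
# Hypothetical MAC-VRF EVPN instance with the vlan-aware service type
set routing-instances MACVRF-1 instance-type mac-vrf
set routing-instances MACVRF-1 service-type vlan-aware
set routing-instances MACVRF-1 protocols evpn encapsulation vxlan
set routing-instances MACVRF-1 protocols evpn extended-vni-list all
set routing-instances MACVRF-1 vtep-source-interface lo0.0
set routing-instances MACVRF-1 route-distinguisher 192.0.2.11:100
set routing-instances MACVRF-1 vrf-target target:65000:100
# One revenue VLAN with its IRB interface and VXLAN VNI (example values)
set routing-instances MACVRF-1 vlans VLAN-100 vlan-id 100
set routing-instances MACVRF-1 vlans VLAN-100 l3-interface irb.100
set routing-instances MACVRF-1 vlans VLAN-100 vxlan vni 10100
```

This fragment covers only the EVPN instance itself; the OISM-specific statements are configured separately.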

OISM Support with Tenant L3 VRF Instances or the Default L3 Routing Instance

You can configure OISM with the following types of tenant L3 routing instances:

- instance-type vrf, which are named virtual routing and forwarding (VRF) instances supported by all platforms that support OISM. OISM configurations usually include multiple tenant VRF instances.

- The default L3 routing instance on the device, supported only on some platforms. See Feature Explorer for currently supported platforms.
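For orientation, a tenant L3 VRF instance of the first type might be defined along the following lines. This is a minimal sketch: the instance name L3VRF-1 matches the example name used elsewhere in this document, but the IRB units, route distinguisher, and route target are assumed values for illustration only.

```
# Hypothetical tenant L3 VRF instance (instance-type vrf)
set routing-instances L3VRF-1 instance-type vrf
# IRB interfaces for the VLANs this tenant uses (example units)
set routing-instances L3VRF-1 interface irb.100
set routing-instances L3VRF-1 interface irb.300
set routing-instances L3VRF-1 route-distinguisher 192.0.2.11:500
set routing-instances L3VRF-1 vrf-target target:65000:500
```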

We support using the default L3 routing instance with:

- Regular OISM mode on server leaf and border leaf devices, including border leaf devices acting in the PIM EVPN gateway (PEG) role (see Table 3).

- IPv4 underlay peering in the EVPN network.

- IPv4 multicast data traffic with IGMPv1, IGMPv2, and IGMP snooping.

- PEG designated forwarder (DF) election options (see PEG DF Election).

Any use cases or behaviors that are not supported with OISM in a configured VRF instance on a particular platform are likewise not supported in the default L3 routing instance on that platform. For example, if we don't support assisted replication (AR) with OISM in a configured VRF on a platform, then we also don't support AR with OISM in the default L3 routing instance on that platform.

Throughout this OISM documentation, we describe configuring particular elements in an L3 VRF instance on the EVPN and OISM devices. If your configuration uses the default L3 routing instance instead of VRF instances, then you configure those elements at the global level instead of at the [edit routing-instances name ...] hierarchy level. For example, substitute the configuration statements at this hierarchy level:

[edit routing-instances L3VRF-1 protocols evpn ...]

with the same statements at the global hierarchy level:

[edit protocols evpn ...]
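To make the substitution concrete, here is a sketch using one statement as an example. It assumes that the SBD IRB interface is assigned with the supplemental-bridge-domain-irb statement under protocols evpn oism, and that irb.300 is the SBD IRB interface; neither detail is confirmed by this document, so verify the statement against the CLI reference for your platform and release.

```
# With a tenant VRF instance (assumed statement and IRB unit):
set routing-instances L3VRF-1 protocols evpn oism supplemental-bridge-domain-irb irb.300

# With the default L3 routing instance, the same statement at the global level:
set protocols evpn oism supplemental-bridge-domain-irb irb.300
```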

In OISM configurations that use the default L3 routing instance, we also require that you add a global routing policy configuration to match overlay VXLAN interface next hops and avoid installing those routes for underlay reachability. With EVPN-VXLAN configurations, the VXLAN tunnels are resolved only in the underlay. The device overlay peering uses the underlay to establish reachability among the EVPN devices, which is a valid use of underlay routes. However, the device's underlay peering might use overlay IRB routes for reachability in default L3 routing instance configurations, which can cause routing loops in the network.

In this case, to prevent the underlay peering from using overlay routes for underlay reachability on OISM devices, you must define and apply a routing policy with the install-nexthop except overlay-vxlan-interfaces policy statement action, as follows:

set policy-options policy-statement policy-name term 1 then install-nexthop strict
set policy-options policy-statement policy-name term 1 then install-nexthop except overlay-vxlan-interfaces
set routing-options forwarding-table export policy-name

OISM with Multicast Protocols and Other Multicast Optimizations in EVPN Fabrics

OISM works with the following multicast protocols and other EVPN multicast optimization features.

Multicast Protocols Supported with OISM

- IGMPv2, IGMPv3, and IGMP snooping for IPv4 multicast traffic

- MLDv1, MLDv2, and MLD snooping for IPv6 multicast traffic

- PIM, which facilitates both local routing and external multicast traffic routing

See the various OISM entries in Feature Explorer for specific platform and release support with the different multicast protocols.

OISM supports IGMP snooping with both IGMPv2 and IGMPv3 on the same device at the same time only under certain configuration constraints. Similarly, OISM supports MLD snooping with both MLDv1 and MLDv2 at the same time under the same configuration constraints. See IGMPv2 and IGMPv3 (or MLDv1 and MLDv2) in the Same EVPN-VXLAN Fabric for details.

Also see Supported IGMP or MLD Versions and Group Membership Report Modes for information on IGMP or MLD any-source multicast (ASM) and source-specific multicast (SSM) mode support in EVPN-VXLAN fabrics.

Other Multicast Optimization Features That Work with OISM

OISM works with these other multicast optimization features:

- IGMP snooping or MLD snooping (some platforms) on the access side on the leaf devices.

  With IGMP snooping or MLD snooping enabled, a leaf device that receives multicast traffic forwards it only toward other devices with interested receivers.

- Multihoming support in an Ethernet segment (ES) using EVPN Type 7 (Join Sync) and Type 8 (Leave Sync) routes.

  EVPN fabric devices advertise these route types to synchronize the multicast state among EVPN devices that are multihoming peers.

  Note: ACX Series OISM leaf devices can be multihoming peer PE devices only with ACX Series devices.

- Selective multicast Ethernet tag (SMET) forwarding in the EVPN fabric core using EVPN Type 6 routes.

  EVPN devices use Type 6 routes to limit forwarding within the EVPN core only to receivers interested in receiving traffic for a multicast group. You can use OISM to make this optimization work in EVPN ERB overlay fabrics. When you configure IGMP or MLD snooping, the fabric enables SMET forwarding with OISM automatically.

- Assisted replication (AR) (some platforms and releases).

  Table 1 lists how you can integrate AR into a fabric running OISM using the platforms and releases that support the different AR and OISM device roles. On all platforms, we support AR only with regular OISM.

Table 1: AR Integrated with Regular OISM (Platform-Specific Supported Roles and Behavior)

| Platforms | Starting Release | Supported Roles and Behavior |
|---|---|---|
| EX4650, QFX5110, QFX5120, QFX10002 (except QFX10002-60C), QFX10008, QFX10016 | Junos OS Release 22.2R1 | You can configure the AR leaf role on any of these devices that are also acting as OISM border leaf or server leaf devices. |
| QFX10002 (except QFX10002-60C), QFX10008, QFX10016 | Junos OS Release 22.2R1 | You can configure any of these switches as AR replicators, in either of the following AR replicator modes: Collocated mode: The device acts as both an AR replicator device and an OISM border leaf device. Standalone mode: The device is an AR replicator but isn't also an OISM border leaf or server leaf device. |
| QFX5130-32CD, QFX5700 | Junos OS Evolved Release 22.2R1 | You can configure the AR leaf role on any of these devices that are also acting as OISM border leaf or server leaf devices. You can configure these devices as AR replicators with OISM in standalone mode only. In standalone mode, the AR replicator device doesn't also operate as an OISM border leaf or server leaf. |
| EX4400 | Junos OS Release 24.4R1 | You can configure the AR leaf role on any of these devices that are also acting as OISM border leaf or server leaf devices. These devices don't support the AR replicator role, and support the AR leaf role with other devices configured as standalone AR replicators only. Note: We support AR with OISM on these devices only with VLAN-based and VLAN-aware MAC-VRF EVPN instances. |

Note: ACX Series and PTX Series routers don't support AR with OISM as AR replicator or AR leaf devices.

See these references for more on using AR and OISM together:

- How AR works and how to configure AR: Assisted Replication Multicast Optimization in EVPN Networks.

- How AR integrates with OISM: AR with Optimized Intersubnet Multicast (OISM).

- Use case illustrations of AR and OISM working together:

Platform-Specific OISM Behavior with Regular OISM

Use the following table to review platform-specific behaviors when running regular OISM for your platforms.

| Platform | Difference |
|---|---|
| PTX10008 with the PTX10K-LC1301-36DD (Express 5) and PTX10K-LC1201-36CD (Express 4) line cards | The device has a limitation in reporting multicast packet statistics when the device operates in interop chassis mode (both Express 5 and Express 4 line cards interoperating on the device), because Express 5 line cards don't support multicast snooping route counters. The multicast snooping route counters record the number of packets that use a snooping route toward the destination. Express 4 line cards update the snooping route counters, but Express 5 line cards don't update those counters. As a result, on devices running in interop mode, the packet statistics reported in the |

Overview of Enhanced OISM

Enhanced OISM doesn't require you to configure all revenue bridge domains (VLANs) in the network on all OISM devices. On each device, you can configure only the revenue VLANs the device hosts. As a result, we describe this mode as having an asymmetric bridge domains (VLANs) model compared to the regular OISM mode where you must configure the revenue VLANs symmetrically on all leaf devices.

However, in enhanced OISM mode, you must still configure revenue VLANs symmetrically on the OISM leaf devices that share any Ethernet segments. In other words, you must configure the same revenue VLANs on OISM leaf devices that are multihoming peers for an attached multihomed host or multihomed customer edge (CE) device.

Enhanced OISM mode enables OISM to scale well when your network has leaf devices that host larger numbers of different VLANs per device.

- Enhanced OISM Support

- How to Enable Enhanced OISM

- When to Use Enhanced OISM

- Summary of Enhanced OISM Differences

Enhanced OISM Support

We support enhanced OISM with:

- IGMPv2, IGMPv3, and IGMP snooping.

- MLDv1, MLDv2, and MLD snooping (some platforms).

  Note: ACX Series and PTX Series routers don't support enhanced OISM with MLD and MLD snooping for IPv6 multicast traffic.

- MAC-VRF EVPN instance type only. See MAC-VRF Routing Instance Type Overview.

- EVPN-VXLAN configurations with an IPv6 underlay for IPv4 or IPv6 multicast traffic (some platforms). See Enhanced OISM with an EVPN-VXLAN IPv6 Underlay Configuration.

- Seamless data center interconnect (DCI) stitching between EVPN-VXLAN data centers configured with IPv4 or IPv6 underlay peering (some platforms). See EVPN-VXLAN DCI Multicast with Enhanced OISM.

- Group based policy (GBP). We support GBP unicast flows and enhanced OISM flows seamlessly together across the VXLAN tunnels. You must configure GBP with the vxlan-gbp-mc-profile GBP unified forwarding table (UFT) profile setting at the [edit chassis forwarding-options] hierarchy level. See GBP Profiles for more on GBP profiles and configurations.

  Note: We don't assign GBP tags to multicast traffic. Only unicast traffic carries GBP tags in the VXLAN headers.

We don't support enhanced OISM with AR.

Search in Feature Explorer for "enhanced OISM" to see various entries there for platforms and releases in which enhanced OISM is supported with different multicast protocols and other features.

How to Enable Enhanced OISM

You enable enhanced OISM using the enhanced-oism option at the [edit forwarding-options multicast-replication evpn irb] hierarchy level. You use this option instead of the regular OISM mode oism option at the same hierarchy level. The enhanced-oism and oism options are mutually exclusive.
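Written as set commands, the hierarchy level and options described above would look like the following sketch. Only one of the two statements can be active on a device; the regular OISM line is shown commented out for comparison.

```
# Enhanced OISM mode (asymmetric bridge domains model)
set forwarding-options multicast-replication evpn irb enhanced-oism

# Regular OISM mode (symmetric bridge domains model); mutually exclusive with the line above
# set forwarding-options multicast-replication evpn irb oism
```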

Besides the difference in configuring VLANs on the leaf devices and setting the OISM mode to use, the OISM components and configuration elements are the same for enhanced OISM as for regular OISM mode. However, this mode has some operational differences and small configuration differences to support the asymmetric bridge domains model. As a result, you must use the same OISM mode on all OISM devices in the network.

See:

- Overview of OISM for a brief introduction to OISM support.

- OISM Components for descriptions of all of the components and configuration elements involved in OISM operation.

When to Use Enhanced OISM

You can use enhanced OISM if all OISM devices in the network support this OISM mode. In that case, you might want to use enhanced OISM when:

- Your network has a large number of revenue bridge domains (VLANs), and resources might be strained on some devices to configure all the VLANs there.

- Your network has a large number of disjoint bridge domains (VLANs); that is, different devices host different sets of VLANs.

- On OISM devices in your network, you don't have policies configured that are based on the source MAC address of the packets. If you do have source MAC address policies, use regular OISM in your network instead.

- Your network uses seamless DCI stitching to span more than one EVPN-VXLAN data center, and you want to optimize multicast traffic flow between the data centers instead of always flooding multicast traffic across the interconnection. The DCI gateway devices and OISM leaf devices in both networks must support seamless DCI multicast with enhanced OISM. (See EVPN-VXLAN DCI Multicast with Enhanced OISM.)

You might want to use regular OISM instead of enhanced OISM if your network needs to pass multicast packets with stringent requirements for decrementing the time-to-live (TTL) field. The enhanced OISM model inherently has a limitation where packets with TTL=1 will not reach receivers on devices that are not multihoming peers of the source device. Regular OISM forwards source traffic on the source VLAN and doesn't decrement the TTL value for destinations on the same VLAN. Alternatively, if you want to use enhanced OISM but avoid this limitation, we have a solution that enables enhanced OISM devices to forward multicast traffic on the source VLAN like regular OISM devices do. You can enable this solution for particular multicast groups (or groups and sources) using routing policies and a configuration option. See Enhanced OISM Exception Policy to Forward on Source VLAN Instead of SBD for Packets with TTL=1 for details.

Summary of Enhanced OISM Differences

Where applicable, the sections throughout this document describe any operational or configuration differences when you use enhanced OISM.

We summarize the main differences with enhanced OISM operation and configuration here.

- East-West Traffic from Internal Sources

- North-South Traffic from Internal Sources Toward External Receivers

- OSPF Area for Server Leaf Device Connectivity on the SBD

East-West Traffic from Internal Sources

The ingress leaf devices forward east-west multicast source traffic on the source VLAN to their multihoming peer leaf devices with which they share at least one Ethernet segment. To all other OISM leaf devices, the ingress leaf devices route the source traffic only on the SBD (even if those other devices host the source VLAN). Then each receiving leaf device locally routes the traffic from the SBD to the destination VLAN.

This operation differs from the regular OISM mode, which sends multicast traffic from internal sources only on the source VLAN. Then each leaf device locally forwards the traffic on the source VLAN or routes the traffic from the source VLAN to the destination VLAN.

North-South Traffic from Internal Sources Toward External Receivers

The ingress leaf devices generate EVPN Type 10 Selective P-router Multicast Service Interface (S-PMSI) Auto-Discovery (A-D) routes for internal multicast (S,G) sources and groups.

The OISM border leaf devices act as PIM EVPN gateway (PEG) devices to connect to external multicast sources and receivers. The PEG devices need to perform PIM source registration only for multicast sources inside the EVPN network, so they perform PIM registration only for the sources advertised in the S-PMSI A-D routes.

OSPF Area for Server Leaf Device Connectivity on the SBD

On each of the server leaf devices, enhanced OISM requires that you include an OSPF area configuration for the SBD IRB interface in each tenant virtual routing and forwarding (VRF) instance. You configure the SBD IRB interface in OSPF active mode to establish OSPF adjacencies and support routing among the OISM leaf devices on the SBD. However, you set the OSPF interface priority to 0 so the server leaf devices never assume the OSPF designated router (DR) or backup DR (BDR) role. You configure any other interfaces in the VRF instance in the OSPF area using OSPF passive mode, so they can share routing information but don't form OSPF adjacencies or participate in OSPF protocol processing.
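The following sketch shows one way the OSPF requirements above could be expressed for a single tenant VRF on a server leaf device. The VRF name L3VRF-1, the area ID, and the IRB units (irb.300 as the SBD IRB interface, irb.100 and irb.200 as revenue VLAN IRB interfaces) are assumptions made for this example.

```
# SBD IRB interface: OSPF active mode, priority 0 so this device never becomes DR or BDR
set routing-instances L3VRF-1 protocols ospf area 0.0.0.0 interface irb.300 priority 0

# Other interfaces in the VRF instance: OSPF passive mode (no adjacencies formed)
set routing-instances L3VRF-1 protocols ospf area 0.0.0.0 interface irb.100 passive
set routing-instances L3VRF-1 protocols ospf area 0.0.0.0 interface irb.200 passive
```

You would repeat a similar stanza in each tenant VRF instance, using that instance's own SBD IRB interface.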

OISM Components

The OISM environment includes:

- Leaf devices in the EVPN fabric that function in border roles and server access roles.

- External multicast sources and receivers in an external L3 PIM domain.

- Bridge domain (VLAN) configurations that enable the fabric to route multicast traffic between internal and external devices.

The EVPN-VXLAN ERB overlay design includes lean spine devices that support L3 transit functions for the leaf devices. The lean spine devices don't usually perform any OISM functions.

The following sections describe these OISM components.

- OISM Device Roles

- PIM Domain with External Multicast Sources and Receivers

- Supported Methods for Multicast Data Transfer to or from an External PIM Domain

- OISM Bridge Domains (VLANs)

- Regular OISM Mode—Symmetric Bridge Domains Model

- Enhanced OISM Mode—Asymmetric Bridge Domains Model

- Configuration Elements for OISM Devices

OISM Device Roles

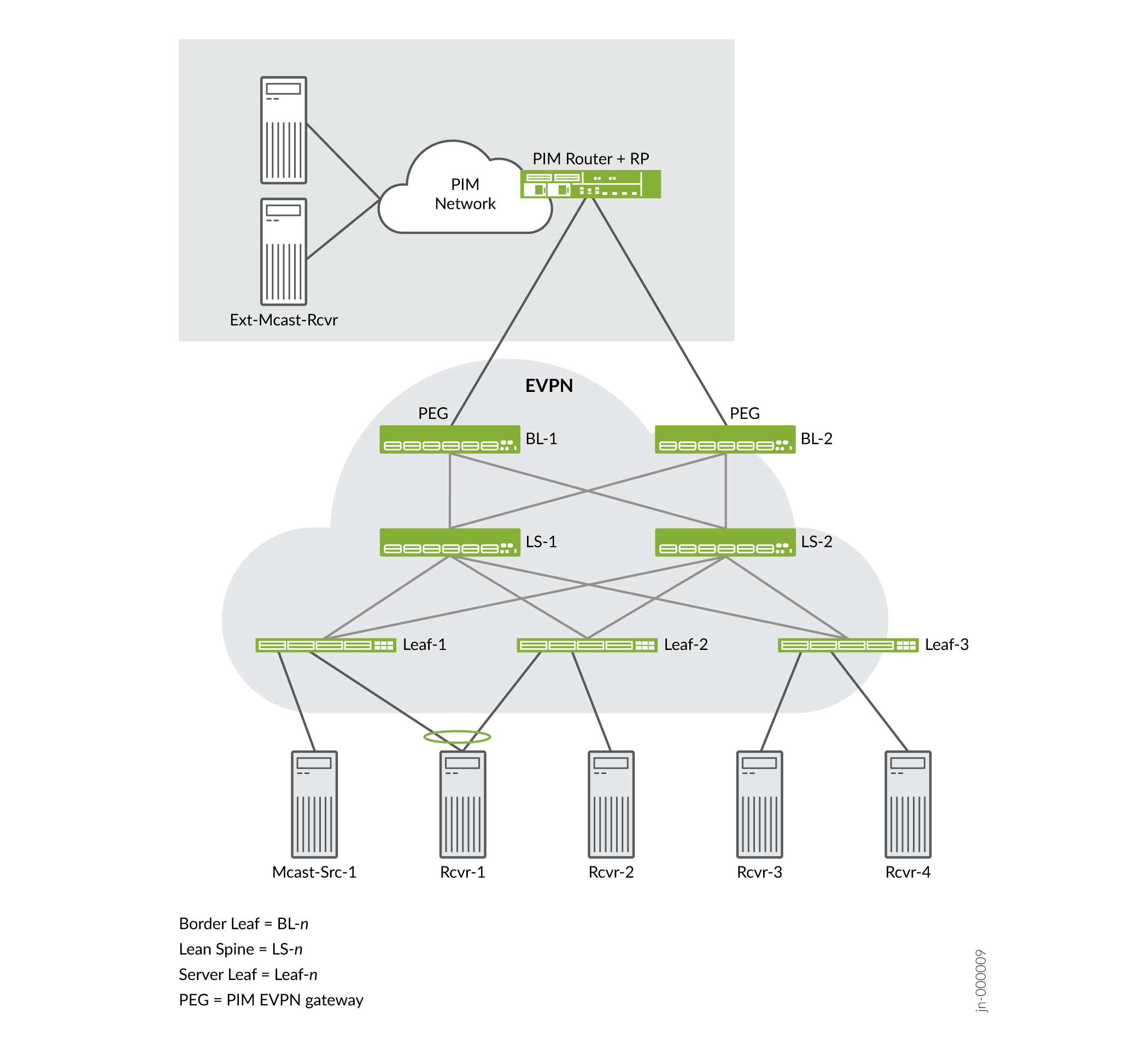

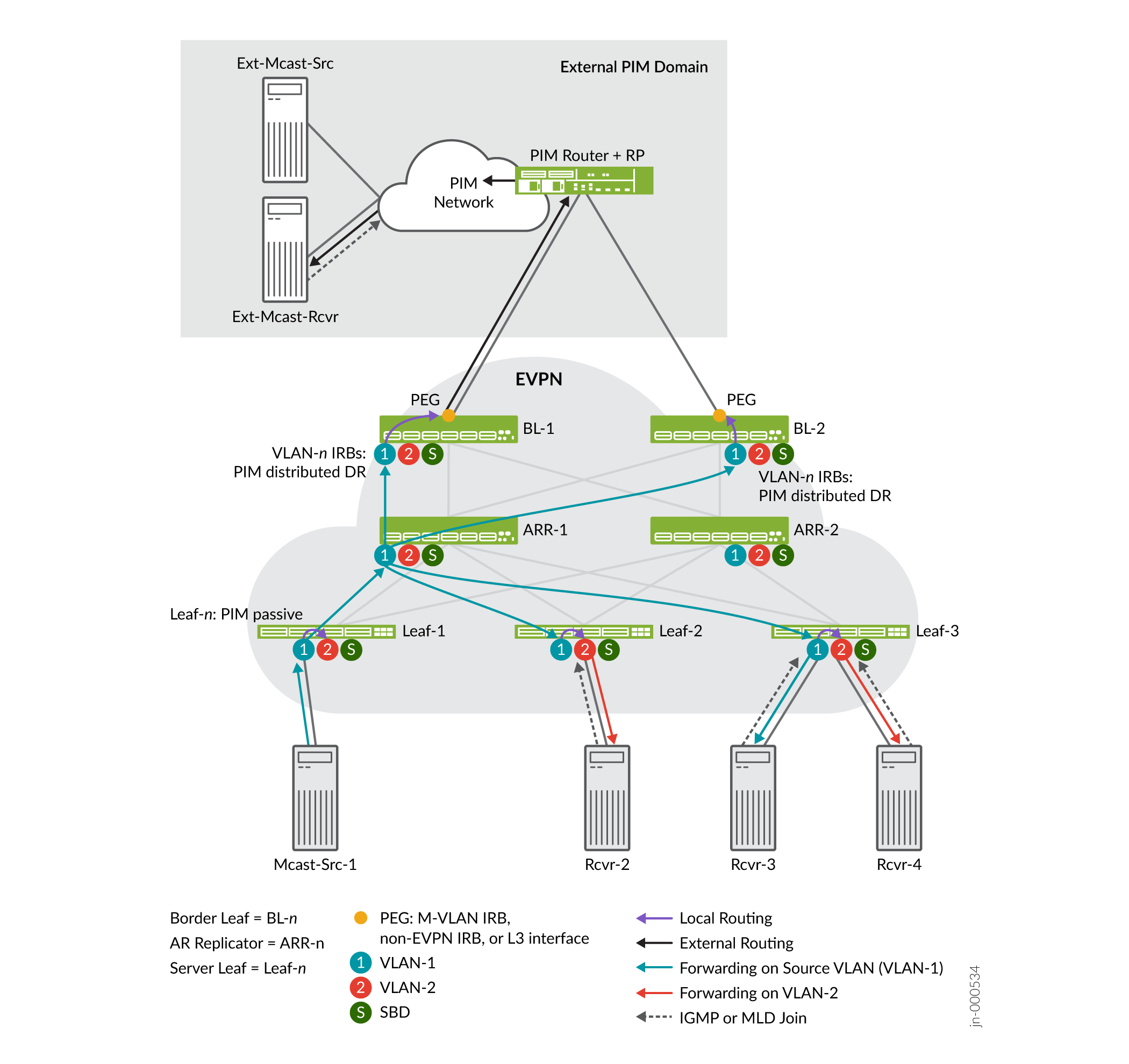

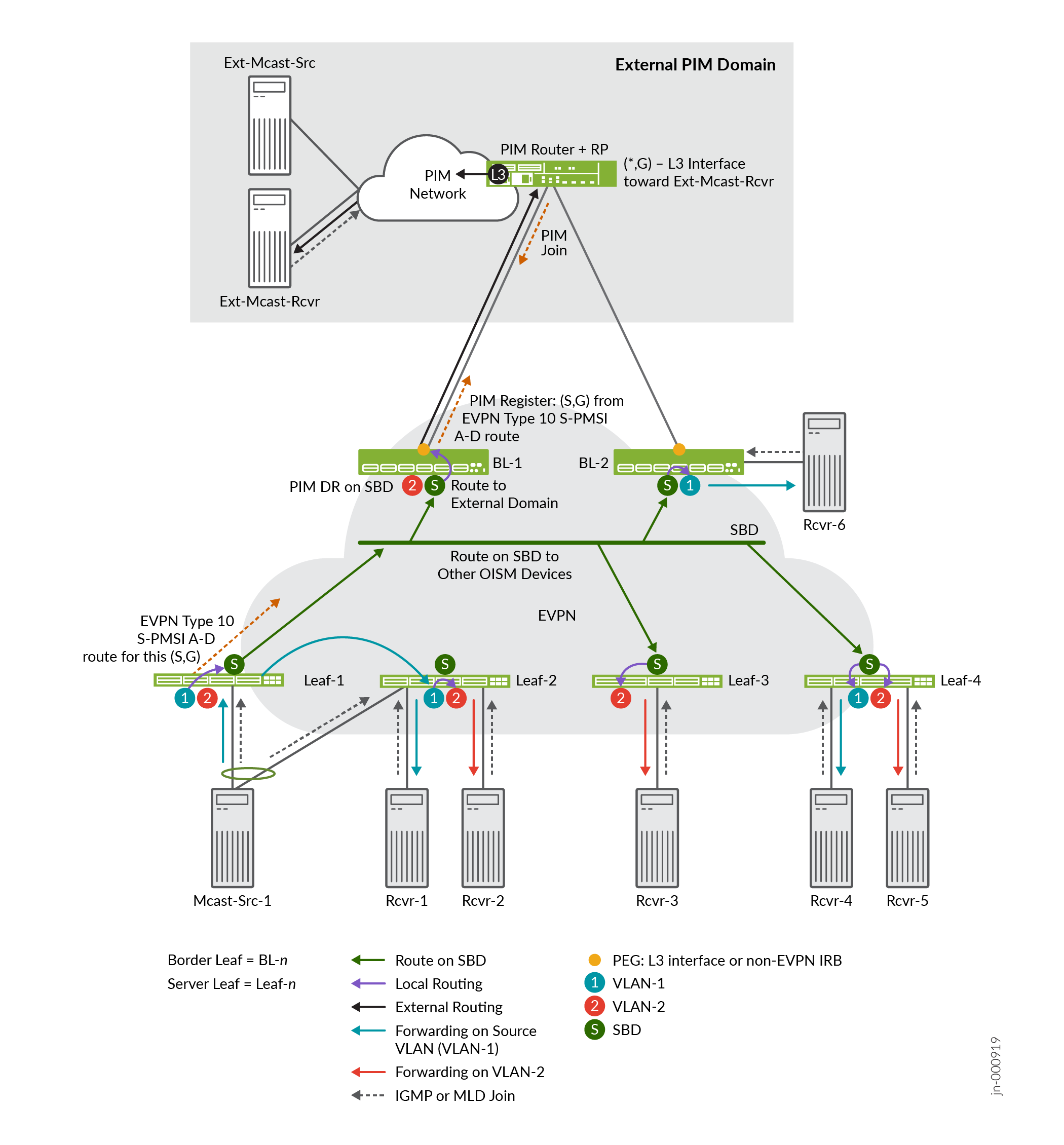

Figure 1 shows a simple EVPN-VXLAN ERB overlay fabric and the OISM device roles in the fabric.

Table 3 summarizes the device roles.

| Device Role | Description |
|---|---|
| Border leaf (BL) | OISM leaf devices in the EVPN fabric underlay and overlay. Border leaf devices function as gateways interconnecting the EVPN fabric to multicast devices (sources and receivers) outside the fabric in an external PIM domain. These devices serve in the PIM EVPN gateway (PEG) role. |
| Lean spine (LS) | Spine devices in the underlay of the EVPN fabric. These devices usually operate as lean spines that support the EVPN underlay as IP transit devices. The lean spines might also act as route reflectors in the fabric. You configure OISM elements on the lean spine devices only in the following use cases: |
| Server leaf (Leaf) | OISM leaf devices on the access side in the EVPN fabric underlay and overlay. Server leaf devices are often top-of-rack (ToR) switches. These devices connect the EVPN fabric to multicast sources and multicast receiver hosts on bridge domains or VLANs within the fabric. |

See Configuration Elements for OISM Devices for details on the configuration elements that are common and those that are different for each device role.

PIM Domain with External Multicast Sources and Receivers

In Figure 1, the OISM border leaf devices connect to multicast sources and receivers outside the EVPN fabric in a representative external PIM domain. The multicast devices in the external PIM domain follow standard PIM protocol procedures; their operation is not specific to OISM. External multicast traffic flows at L3 through the PIM domain.

You can use OISM to route and to forward multicast traffic in an EVPN-VXLAN ERB overlay fabric between devices in the following use cases:

- Internal multicast sources and receivers

- Internal multicast sources and external multicast receivers

- External multicast sources and internal multicast receivers

For simplicity, in this documentation we represent the external PIM domain as:

- A PIM router (a device such as an MX Series router) that doubles as the PIM rendezvous point (RP).

- An external source.

- An external receiver.

Supported Methods for Multicast Data Transfer to or from an External PIM Domain

OISM border leaf devices support one or more methods to route multicast traffic to and from devices outside of the fabric. Supported methods are platform-dependent.

Some platforms don't support the border leaf role. If you don't see a platform listed in Table 4 in the Supported Platforms column for any of the external multicast methods, that means the platform doesn't support the border leaf role.

| Name | Connection Method | Supported Platforms |
|---|---|---|
| M-VLAN IRB method | IRB interfaces on a multicast VLAN (M-VLAN) that you extend in the EVPN instance. The fabric uses the M-VLAN and corresponding IRB interfaces only for external multicast traffic flow to and from the external PIM domain. This method supports EVPN Ethernet segment identifier (ESI) multihoming to connect the external PIM router to more than one OISM border leaf device in the fabric. Note: We don't support this method with enhanced OISM. | PTX10001-36MR, PTX10002-36QDD, PTX10004, PTX10008, PTX10016, QFX10002 (except QFX10002-60C), QFX10008, QFX10016 |
| Classic L3 interface method | Classic physical L3 interfaces on OISM border leaf devices that connect individually to the external PIM domain on different subnets. These interfaces aren't associated with a VLAN. You don't configure these interfaces in the EVPN instances. Instead, you assign IP addresses to these interfaces and include them in the tenant L3 VRF instances. Note: The L3 interface connection can be an aggregated Ethernet (AE) interface bundle. | ACX7024, ACX7100, ACX7332, ACX7348, ACX7509, EX4400, EX4650, PTX10001-36MR, PTX10002-36QDD, PTX10004, PTX10008, PTX10016, QFX5110, QFX5120, QFX5130-32CD, QFX5700, QFX10002 (except QFX10002-60C), QFX10008, QFX10016 |
| Non-EVPN IRB method | IRB interfaces on an extra VLAN that you don't extend in the EVPN instance. You include these logical interfaces in the tenant L3 VRF instances. On each border leaf device, you assign a unique extra VLAN ID and subnet for the associated IRB interface. We call this type of interface a non-EVPN IRB interface for external multicast. | PTX10001-36MR, PTX10002-36QDD, PTX10004, PTX10008, PTX10016, QFX5130-32CD, QFX5700 |

See Overview of Multicast Forwarding with IGMP Snooping or MLD Snooping in an EVPN-VXLAN Environment for a general overview of connecting EVPN-VXLAN fabrics to an external PIM domain using an L2 M-VLAN or L3 links.

OISM Bridge Domains (VLANs)

Table 5 summarizes the OISM bridge domains or VLANs and describes how OISM uses them.

References in this document to all OISM devices correspond to the border leaf and server leaf devices on which you enable OISM.

| Bridge Domain/VLAN | Description | Configure On: |
|---|---|---|
| Multicast VLAN (M-VLAN) | (M-VLAN IRB method for external multicast) A VLAN in the EVPN fabric with associated IRB interfaces that connect the fabric to an external multicast router. This VLAN and IRB interface enable traffic flow between devices inside and outside the fabric. To support IGMP snooping with both IGMPv2 and IGMPv3 traffic, you assign separate M-VLANs to carry traffic for each IGMP version. You extend this VLAN in the EVPN instance. You can also multihome the external multicast router to multiple border leaf device M-VLAN IRB interfaces in the same EVPN ES. The usual EVPN multihoming DF rules apply to send only one copy of the traffic in the ES on the M-VLAN. Configure the M-VLAN as a VLAN that is not the SBD or any of the revenue bridge domains in the EVPN fabric. Note: We don't support the M-VLAN IRB method with enhanced OISM. See Supported Methods for Multicast Data Transfer to or from an External PIM Domain for other supported methods to connect to external sources and receivers. | Border leaf devices |
| Extra non-EVPN VLAN | (Non-EVPN IRB method for external multicast) An extra VLAN that isn't in the EVPN instances in the fabric. You configure associated IRB interfaces in the tenant L3 VRF instances. This VLAN and IRB interface enable multicast traffic flow between devices inside the fabric and devices outside the fabric. You must assign distinct extra VLANs and corresponding IRB interface subnets on each border leaf device that are unique across the fabric. Also, to support IGMP snooping with both IGMPv2 and IGMPv3 traffic, use separate distinct extra non-EVPN VLANs to carry traffic for each IGMP version. The same constraints apply if you want to support MLD snooping with both MLDv1 and MLDv2 traffic. Note: See Supported Methods for Multicast Data Transfer to or from an External PIM Domain for other supported methods to connect to external sources and receivers. | Border leaf devices |
| Revenue bridge domains (VLANs) | Bridge domains for subscribers to the services that the fabric provides. You configure the revenue bridge domains as VLANs in the fabric. The revenue bridge domains correspond to the customer VLANs in the fabric. These VLANs are not specific to OISM, but the multicast sources and receivers in the fabric are in these bridge domains. For details on how to allocate VLANs for these bridge domains if you want to support IGMP snooping with both IGMPv2 and IGMPv3 receivers in the fabric, see IGMPv2 and IGMPv3 (or MLDv1 and MLDv2) in the Same EVPN-VXLAN Fabric. Note that the same constraints apply if you want to support MLD snooping with both MLDv1 and MLDv2 receivers. | All OISM devices |
| Supplemental bridge domain (SBD) | Bridge domain that enables support for external multicast traffic, implements SMET optimization in the EVPN core, and supports the enhanced OISM mode implementation where not every device needs to know every VLAN in the network. You configure the SBD as a regular VLAN that is different from the revenue bridge domain VLANs, the M-VLAN, or the extra non-EVPN VLANs. The SBD usually serves all OISM leaf devices in the fabric. To support IGMP snooping with both IGMPv2 and IGMPv3 traffic, or to support MLD snooping with both MLDv1 and MLDv2 traffic, you assign separate SBDs to carry traffic for each IGMP or MLD version. The SBD carries: Note: The SBD is central to OISM operation for: The SBD IRB interface must always be up for OISM to work. You configure the VLAN that you want to use as the SBD for each L3 VRF instance, and configure a corresponding IRB interface. Use the | All OISM devices |

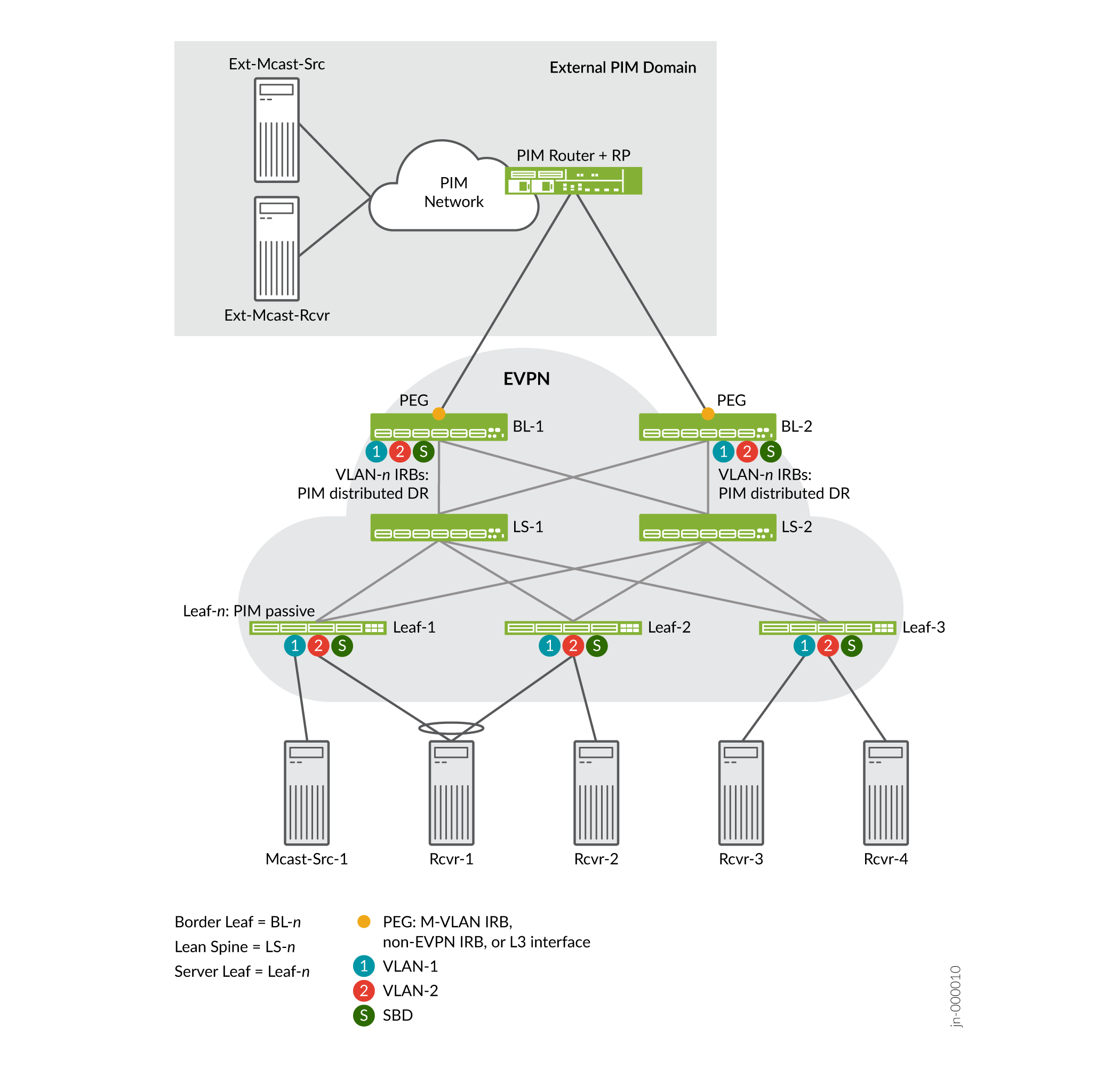

Regular OISM Mode—Symmetric Bridge Domains Model

The regular OISM implementation uses a symmetric bridge domains model. We also refer to this OISM mode as the bridge domains everywhere (BDE) model. You configure all of the revenue bridge domains (VLANs) on all of the OISM devices in the network with this model.

In the symmetric bridge domains model, you must configure the SBD and all revenue bridge domains on all OISM border leaf and server leaf devices.

You also configure the following VLANs uniformly on the border leaf devices that connect to the external PIM domain:

- The M-VLAN, if you use the M-VLAN IRB method for external multicast.

- A unique extra non-EVPN VLAN on each border leaf device, if you use the non-EVPN IRB method for external multicast.

The lean spine devices in the fabric usually serve only as IP transit devices and possibly as route reflectors. As a result, you don't usually need to configure these elements on the lean spine devices. (See the Lean Spine row in Table 3 for some exceptions.)

Enhanced OISM Mode—Asymmetric Bridge Domains Model

The enhanced OISM implementation supports an asymmetric bridge domains model in which on each leaf device, you can configure only the revenue VLANs that the device hosts. As a result, we sometimes refer to enhanced OISM as the bridge domains NOT everywhere (BDNE) model.

In general, enhanced OISM uses the same high-level OISM structure and network components you see in Figure 2, but with some operational differences to enable the asymmetric bridge domains model.

See Overview of Enhanced OISM for an introduction to the main differences from the regular OISM implementation. Throughout this document, we describe the operational or configuration differences when you use enhanced OISM instead of regular OISM, as applicable.
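The difference between the two bridge domain models can be sketched as a small planning check. This is an illustrative Python model only, not a Junos tool: the `LeafDevice` class, the `check_bridge_domains` function, and the VLAN and device names are all hypothetical. It assumes that regular OISM requires every revenue VLAN on every leaf device, that enhanced OISM requires only the VLANs a device hosts, and that multihoming peers must carry the same revenue VLANs in either mode.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LeafDevice:
    name: str
    configured_vlans: set[str]   # revenue VLANs configured on the device
    hosted_vlans: set[str]       # revenue VLANs with locally attached hosts
    multihoming_peers: set[str] = field(default_factory=set)


def check_bridge_domains(devices, fabric_vlans, mode):
    """Return a list of problems; an empty list means the layout fits the model."""
    if mode not in ("regular", "enhanced"):
        raise ValueError(f"unknown OISM mode: {mode}")
    by_name = {d.name: d for d in devices}
    problems = []
    for d in devices:
        # Regular OISM: every revenue VLAN in the fabric. Enhanced OISM: hosted VLANs only.
        required = fabric_vlans if mode == "regular" else d.hosted_vlans
        missing = required - d.configured_vlans
        if missing:
            problems.append(f"{d.name}: missing revenue VLANs {sorted(missing)}")
        # Multihoming peers must still carry the same revenue VLANs in either mode.
        for peer in sorted(d.multihoming_peers):
            if by_name[peer].configured_vlans != d.configured_vlans:
                problems.append(f"{d.name}: revenue VLANs differ from peer {peer}")
    return problems


# Hypothetical fabric: Leaf-1 and Leaf-2 are multihoming peers, Leaf-3 hosts only VLAN-3.
fabric_vlans = {"VLAN-1", "VLAN-2", "VLAN-3"}
devices = [
    LeafDevice("Leaf-1", {"VLAN-1", "VLAN-2"}, {"VLAN-1", "VLAN-2"}, {"Leaf-2"}),
    LeafDevice("Leaf-2", {"VLAN-1", "VLAN-2"}, {"VLAN-2"}, {"Leaf-1"}),
    LeafDevice("Leaf-3", {"VLAN-3"}, {"VLAN-3"}),
]

print(check_bridge_domains(devices, fabric_vlans, "enhanced"))
for problem in check_bridge_domains(devices, fabric_vlans, "regular"):
    print(problem)
```

In this sketch the same layout passes the enhanced (asymmetric) check but fails the regular (symmetric) check on every leaf device, which is the practical difference between the two models.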

Configuration Elements for OISM Devices

This section summarizes the elements you need to configure on:

All OISM devices—Devices in the border leaf role and the server leaf role, and spine devices that also serve as AR replicators in standalone mode when you integrate AR with OISM.

See Table 6.

Server leaf devices only.

See Table 7.

Border leaf devices only, based on the method you use for external multicast:

M-VLAN IRB interface

Classic L3 interface

Non-EVPN IRB interface

See Table 8.

Some elements are optional, as noted in their descriptions.

EX4650, QFX5110, and QFX5120 switches support enterprise style interface configurations for OISM elements, but not service provider style interface configurations. For more information on these interface configuration styles, see Flexible Ethernet Services Encapsulation and Understanding Flexible Ethernet Services Support With EVPN-VXLAN.

Table 6 lists the elements you configure on all OISM devices.

| Configuration Element | Description |

|---|---|

OISM mode |

Enable OISM globally, and enable OISM routing functions in L3 VRF instances. You enable OISM in the regular mode or the enhanced mode, and all devices must run the same OISM mode. A device with OISM enabled advertises EVPN Type 3 Inclusive Multicast Ethernet Tag (IMET) routes as follows:

|

Revenue bridge domains (customer VLANs) and corresponding IRB interfaces |

Configure revenue bridge domains (customer VLANs) according to your data center services requirements. With regular OISM, you must configure all revenue bridge domain VLANs and corresponding IRB interfaces symmetrically on all OISM devices. With enhanced OISM, on each OISM device you need to configure only the revenue VLANs that the device hosts, along with the corresponding IRB interfaces. However, you must still configure the revenue VLANs symmetrically on any sets of multihoming peer leaf devices. See OISM Bridge Domains (VLANs) for more information. See IGMPv2 and IGMPv3 (or MLDv1 and MLDv2) in the Same EVPN-VXLAN Fabric for special considerations to configure the revenue bridge domains if you want to support:

|

SBD (VLAN) and corresponding IRB interface |

On all OISM devices, in each L3 VRF that supports OISM routing, configure a VLAN as the SBD and a corresponding IRB interface. The SBD can be any VLAN that is distinct from the M-VLAN, any of the non-EVPN VLANs or any revenue bridge domain VLANs in the EVPN fabric. See OISM Bridge Domains (VLANs) for more information. To identify this VLAN as the SBD in the L3 VRF instance, configure the Starting in Junos OS and Junos OS Evolved 24.1R1, for interoperability with other vendors and compliance with the OISM draft standard, the EVPN Type 3 IMET routes for the SBD IRB interface include the OISM SBD flag in the multicast flags extended community. |

L3 multicast protocol—IGMPv2 or IGMPv3 |

Enable IGMPv2 or IGMPv3 L3 multicast protocols. Receivers send IGMP reports to express interest in receiving traffic for a multicast group. You can use IGMPv2 or IGMPv3 in any-source multicast (ASM) mode, or IGMPv3 in source-specific multicast (SSM) mode. Note that you can't enable IGMP snooping for both IGMP versions together for the same VLAN or in the same VRF instance with OISM enabled. However, to support IGMP snooping with IGMPv2 and IGMPv3 receivers in the same fabric, you can enable IGMP snooping with IGMPv2 for specific VLANs in one VRF instance, and enable IGMP snooping with IGMPv3 for other VLANs in another VRF instance. See IGMPv2 and IGMPv3 (or MLDv1 and MLDv2) in the Same EVPN-VXLAN Fabric for details. IGMPv2 is the default IGMP version. To configure IGMPv3, you must specify the |

L3 multicast protocol—MLDv1 or MLDv2 |

Enable MLDv1 or MLDv2 L3 multicast protocols if you have IPv6 multicast traffic in your fabric. Receivers send MLD reports to express interest in receiving traffic for a multicast group. You can use MLDv1 or MLDv2 in any-source multicast (ASM) mode, or MLDv2 in source-specific multicast (SSM) mode. Note that with OISM enabled, you can't enable MLD snooping for both MLD versions together for the same VLAN or in the same VRF instance. However, you can support MLD snooping with MLDv1 and MLDv2 receivers in the same fabric if you:

See IGMPv2 and IGMPv3 (or MLDv1 and MLDv2) in the Same EVPN-VXLAN Fabric for details. MLDv1 is the default MLD version. To configure MLDv2, you must specify the |

L2 multicast optimizations—IGMP snooping with SMET |

Enable IGMP snooping at L2 with IGMPv2 or IGMPv3 protocols as part of the optimizations OISM provides. With IGMP snooping, the device routes or forwards multicast traffic only toward interested access-side receivers. Receivers send IGMP reports to express interest in receiving traffic for a multicast group. When you enable IGMP snooping, the device also automatically advertises SMET Type 6 routes. With SMET, the device sends copies of the traffic into the EVPN core only toward other devices that have interested receivers. Configure IGMP snooping as follows:

|

L2 multicast optimizations—MLD snooping with SMET for IPv6 multicast traffic |

Enable MLD snooping at L2 with MLDv1 or MLDv2 protocols if you have IPv6 multicast traffic in the fabric. With MLD snooping, the device routes or forwards multicast traffic only toward interested access-side receivers. Receivers send MLD reports to express interest in receiving traffic for a multicast group. When you enable MLD snooping, the device also automatically advertises SMET Type 6 routes. With SMET, the device sends copies of the traffic into the EVPN core only toward other devices that have interested receivers. Configure MLD snooping as follows:

|

L3 VRF instance (or the default IRB routing instance) |

Configure one or more routing instances ( If your configuration has user-specified VRF instances, then you configure the relevant L3 OISM routing functions at the

|

originate-smet-on-revenue-vlan-too |

(Optional) Enable the device to originate SMET Type 6 routes for revenue bridge domains (as well as on the SBD) upon receiving local IGMP or MLD reports. By default, OISM devices originate Type 6 routes only on the SBD. Use this option for compatibility with other vendor devices that don't support OISM. Those devices can't create the right states for the revenue bridge domain VLANs upon receiving Type 6 routes on the SBD. |

install-star-g-routes |

Enable the Routing Engine (RE) on the device to install (*,G) multicast routes on the Packet Forwarding Engine (PFE) for all of the revenue bridge domain VLANs in the routing instance immediately upon receiving an EVPN Type 6 route. Setting this option helps minimize traffic loss when multicast traffic first arrives. This option is mutually exclusive with the We require this option on:

Setting this option is generally not recommended in any use cases other than those listed above where it is required. See Latency and Scaling Trade-Offs for Installing Multicast Routes with OISM (install-star-g-routes Option) for details on how and when to set this option. |

conserve-mcast-routes-in-pfe |

(Required on ACX Series routers, QFX5130-32CD switches, and QFX5700 switches when you configure those devices as OISM server leaf or OISM border leaf devices) Configure this option with OISM to conserve PFE table space. The device installs only the L3 multicast routes and avoids installing L2 multicast snooping routes. Don't set this option on QFX5130-32CD and QFX5700 switches when you configure those devices as standalone AR replicator devices with OISM. This option is mutually exclusive with the See ACX Series Routers, QFX5130-32CD Switches, and QFX5700 Switches as Server Leaf and Border Leaf Devices with OISM for details. |
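The IGMP and MLD rows in Table 6 state that, with OISM enabled, one VRF instance can't mix snooping versions. A planning check for that rule might look like the following Python sketch. The function name and the plan layout (VRF name mapped to VLAN name mapped to version number) are assumptions made for illustration and don't correspond to any Junos data structure.

```python
def find_mixed_version_vrfs(snooping_plan):
    """Return the VRF names whose VLANs use more than one IGMP (or MLD) version.

    snooping_plan maps VRF name -> {VLAN name: protocol version}.
    """
    return sorted(
        vrf for vrf, vlans in snooping_plan.items()
        if len(set(vlans.values())) > 1
    )


# Hypothetical plans: one VRF per IGMP version is fine, mixing versions in a VRF is not.
good_plan = {"VRF-A": {"VLAN-1": 2, "VLAN-2": 2}, "VRF-B": {"VLAN-3": 3}}
bad_plan = {"VRF-A": {"VLAN-1": 2, "VLAN-2": 3}}

print(find_mixed_version_vrfs(good_plan))
print(find_mixed_version_vrfs(bad_plan))
```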

Table 7 lists the elements you configure on the server leaf devices.

| Configuration Element | Description |
|---|---|
| PIM in passive mode on all revenue bridge domains and the SBD | Configure this mode to facilitate local routing without all of the traditional PIM protocol functions. The server leaf device: |
| PIM with the | This option enables an SBD IRB interface to accept multicast traffic from a source that isn't on the same subnet. The server leaf devices require this setting because: |
| OSPF in tenant VRFs for peer connectivity to support: | Configure OSPF in each tenant VRF so the server leaf devices learn routes: The device creates the PIM (S,G) entries it needs to forward the traffic from the SBD to the revenue bridge domains. With regular OISM, on server leaf devices, you configure all interfaces in the L3 VRF instance in OSPF passive mode so these devices can share internal routes without forming OSPF adjacencies. With enhanced OISM only, on server leaf devices, you configure the SBD IRB interface in the L3 VRF instance in OSPF active mode. The SBD IRB interfaces need to establish OSPF adjacencies in this case because the server leaf devices exchange multicast traffic among themselves mostly on the SBD. You configure all other interfaces in the L3 VRF instance in OSPF passive mode. |

Table 8 lists the elements you configure on the border leaf devices based on the external multicast method you use.

| Configuration Element | External Multicast Method | Description |

|---|---|---|

M-VLAN and corresponding IRB interface (in EVPN instance) |

M-VLAN IRB method |

Configure a VLAN to serve as the M-VLAN, and extend this VLAN in the EVPN instance. This VLAN must be distinct from the SBD or any revenue bridge domain VLANs in the EVPN fabric. Also configure an M-VLAN IRB interface in the EVPN instance. See OISM Bridge Domains (VLANs) for more information about the M-VLAN. You can link multiple border leaf device M-VLAN IRB interfaces to the external multicast router in the same EVPN ES. The usual EVPN multihoming DF rules apply to prevent sending duplicate traffic on the M-VLAN. |

L2 multicast router interface on external multicast ports |

M-VLAN IRB method or non-EVPN IRB method |

Configure the With the M-VLAN IRB method, these interfaces support multicast traffic when the external domain router is multihomed to the border leaf devices. As a result, multihomed M-VLAN use cases require this configuration. This setting is also required with the non-EVPN IRB method. |

PIM on M-VLAN IRB interface |

M-VLAN IRB method |

Configure PIM in distributed designated router (DR) mode (distributed-dr) or standard PIM mode on an M-VLAN IRB interface. We recommend using distributed DR mode in most cases, especially on border leaf devices where the external PIM router is multihomed to multiple border leaf devices. The device uses PIM to:

You configure PIM on the M-VLAN IRB interface in the tenant VRF instances, similar to how you configure PIM on the revenue bridge domains. |

L3 physical interface with IP address |

Classic L3 interface |

Configure a physical L3 interface with an IP address for external multicast that connects the border leaf device to the external PIM domain at L3. Define the external multicast L3 interface in a different subnet on each border leaf device. Note:

The L3 interface connection can be an AE interface bundle. |

PIM on the logical interface for the external multicast physical L3 interface |

Classic L3 interface |

Configure the logical interface (unit 0) for the external multicast L3 interface in the tenant VRF instances. Configure standard PIM mode on the logical interface. With this setting, the border leaf device forms a PIM neighbor relationship with the external PIM router to send join messages and transmit or receive external multicast traffic. |

Extra VLAN and corresponding IRB interface (not in EVPN instance) |

Non-EVPN IRB method |

Configure an extra VLAN and IRB interface globally for external multicast without EVPN signaling. This VLAN and the IRB interface subnet must be distinct from the SBD, any revenue bridge domain VLANs, or the extra VLAN on any other border leaf device in the EVPN fabric. See OISM Bridge Domains (VLANs) for more about this extra VLAN and external multicast method. |

PIM on non-EVPN IRB interface |

Non-EVPN IRB method |

Configure PIM on the non-EVPN IRB interface in the tenant VRF instances. With this setting, the border leaf device forms a PIM neighbor relationship with the external PIM router to send join messages and transmit or receive external multicast traffic. |

PIM on SBD IRB interface |

All |

Configure standard PIM mode on the SBD IRB interface in the tenant VRF instances for SBD routing and forwarding. With this setting, the border leaf device:

|

PIM with the |

Methods (platform-specific) supported with enhanced OISM:

|

(Enhanced OISM only) With this option, the border leaf device accepts multicast traffic from a source that isn't on the same subnet. We need this option because with enhanced OISM, you might not have configured all of the revenue VLANs on all of the OISM devices. Including this option enables border devices to have routes to a multicast source located behind other OISM leaf devices when the source is on a VLAN that isn't also configured on the border leaf device. |

PIM EVPN gateway (PEG) role |

All (include the external IRB option for EVPN, |

Configure the pim-evpn-gateway role on the border leaf device to connect to the external PIM router. In this role, the border leaf device uses traditional PIM routing behavior and does local routing, as follows: For externally-sourced traffic:

For internally-sourced traffic:

EVPN IMET routes for PEG interfaces include the OISM PEG flag in the multicast flags extended community field of the route. |

OSPF for:

|

All |

Configure an OSPF area in the tenant L3 VRF instance so the border leaf device learns routes to the multicast sources. The device requires these routes to support forwarding multicast traffic:

The device needs this route information to create the PIM (S,G) entries to forward the traffic on the external multicast interfaces, the SBD, and the revenue bridge domains. (Enhanced OISM) The border leaf devices also need to learn the routes for east-west traffic on the SBD among the leaf devices that aren't multihoming peers. As a result, with either regular OISM or enhanced OISM, on border leaf devices, you configure OSPF in active mode on:

You configure any other interfaces in the L3 VRF instance in OSPF passive mode. |

PIM distributed DR mode on revenue bridge domain IRB interfaces |

All |

Configure PIM in distributed DR mode (distributed-dr) on the revenue bridge domain IRB interfaces in the tenant VRF instances. In this mode, the border leaf device:

|

PIM accept-join-always-from option and policy on M-VLAN IRB interface |

M-VLAN IRB method | Set this option on the M-VLAN IRB interface in the tenant VRF instances when the external PIM router is multihomed to more than one EVPN border leaf device. With this option, the device can accept and install the same PIM (S,G) join states on multihoming peer border leaf devices. This option supports sending multicast traffic from sources inside the fabric to receivers in the external PIM domain. With multihoming on the M-VLAN, the usual EVPN multihoming DF rules apply in an ES to prevent sending duplicate traffic. If peer border leaf devices have the same valid join states in place, any device that is the EVPN DF can forward the multicast traffic. Configure this statement with policies that specify the interface should always install PIM joins from upstream neighbor addresses that correspond to the external PIM router. Note:

You don't use this option with the classic L3 interface and non-EVPN IRB methods. Those methods don't extend the external multicast interfaces in the EVPN instance. |

See the following sections for more details on configuring OISM devices:

Configure Common OISM Elements on Border Leaf Devices and Server Leaf Devices

Configure Border Leaf Device OISM Elements with Classic L3 Interface Method

Configure Border Leaf Device OISM Elements with Non-EVPN IRB Method

For a full OISM configuration example of a data center fabric use case that includes classic L3 interface connections to the external PIM domain, see Optimized Intersubnet Multicast (OISM) with Assisted Replication (AR) for Edge-Routed Bridging Overlays.

How OISM Works

The following sections describe how OISM works and show how the multicast traffic flows in several common use cases with the symmetric bridge domains OISM model.

The use cases we support with enhanced OISM (the asymmetric bridge domains model) are similar to those in this section, but with a few operational differences. Also, as mentioned previously, with enhanced OISM you don't need to configure all VLANs on all leaf devices as the figures in this section show.

For an overview of the differences with enhanced OISM, see Overview of Enhanced OISM. For more details on the operational differences, see How Enhanced OISM Works.

- Local Routing on OISM Devices

- Multicast Traffic Forwarding and Routing with Source and Receivers Inside the EVPN Data Center

- Multicast Traffic From an Internal Source to Receivers Outside the EVPN Data Center—M-VLAN IRB Method

- Multicast Traffic from an Internal Source to Receivers Outside the EVPN Data Center—L3 Interface Method or Non-EVPN IRB Method

- Multicast Traffic from an External Source to Receivers Inside the EVPN Data Center—M-VLAN IRB Method

- Multicast Traffic from an External Source to Receivers Inside the EVPN Data Center—L3 Interface Method or Non-EVPN IRB Method

- AR and OISM with an Internal Multicast Source

- AR and OISM with an Internal Multicast Source and Multihomed Receiver

- AR and OISM with an External Multicast Source

Local Routing on OISM Devices

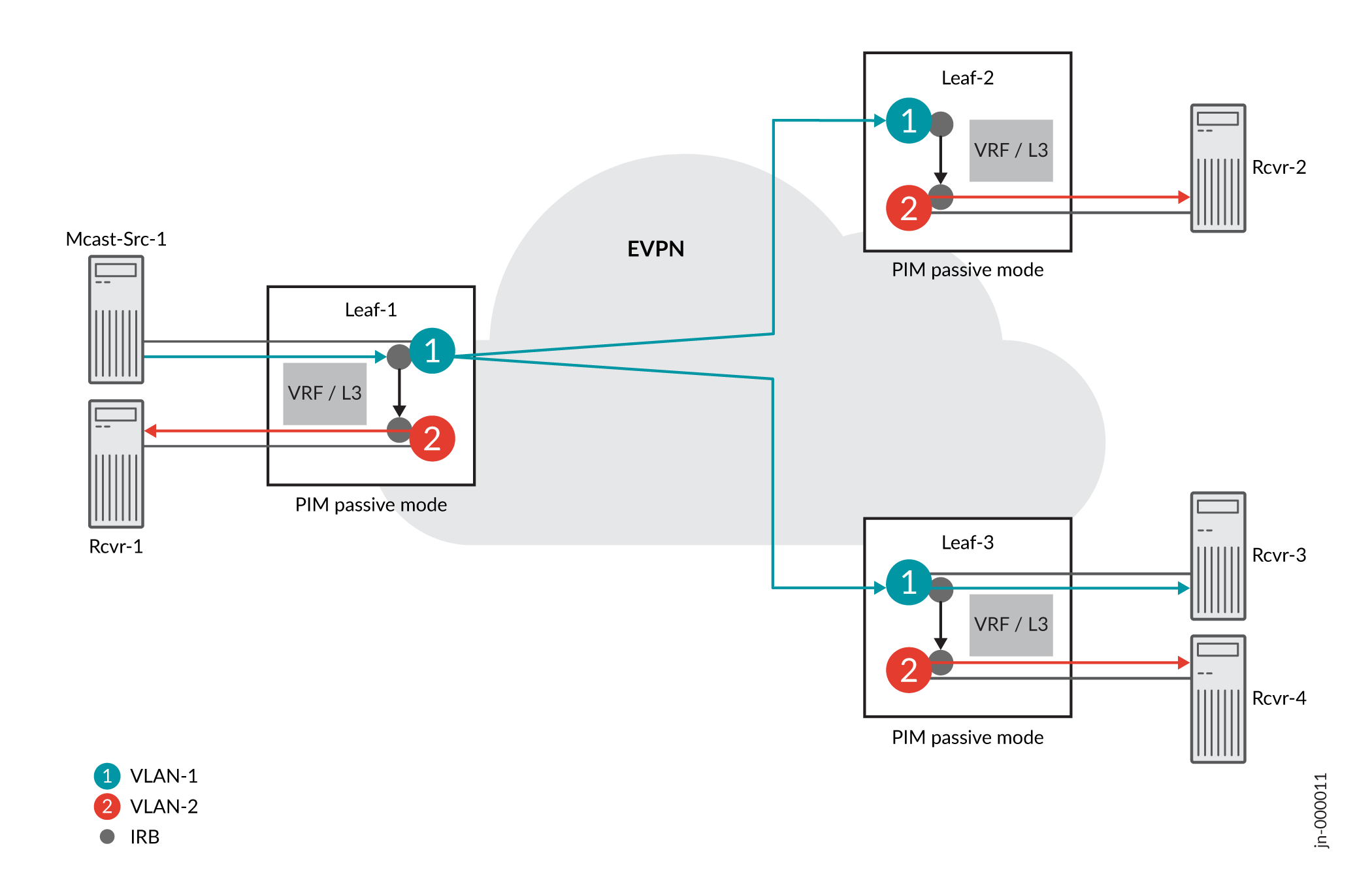

In Figure 3, we illustrate how local routing and forwarding works in general on OISM devices. As the figure shows, OISM local routing forwards the traffic on the source VLAN. Each leaf device routes the traffic locally to its receivers on other VLANs, which avoids hairpinning for intersubnet routing on the same device.

In this case, the source traffic comes from Mcast-Src-1 on VLAN-1, the blue VLAN. Server leaf devices use IRB interfaces and PIM in passive mode to route traffic between VLANs. With PIM in passive mode, server leaf devices:

Don’t become PIM neighbors with the other leaf devices.

Act as a local PIM RP, create local PIM state upon receiving IGMP or MLD reports, and avoid doing source registration.

As a result, the server leaf devices forward and route multicast traffic within the fabric as follows to receivers interested in the multicast group:

The ingress leaf device (Leaf-1) forwards the traffic on the source VLAN into the EVPN fabric toward the other leaf devices with interested receivers.

The server leaf devices don't need to forward the traffic back into the EVPN core to another device that is a designated router. Server leaf devices can locally:

Forward the traffic on the source VLAN toward local interested receivers on the source VLAN.

Route the traffic from the source VLAN through the IRB interfaces toward local interested receivers in other VLANs.
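The local forward-or-route decision described above can be modeled in a few lines of Python. This is a conceptual sketch, not device behavior taken from a Junos API: the function name, the receiver names, and the VLAN labels are hypothetical.

```python
def deliver_locally(source_vlan, local_receivers):
    """Decide how a leaf device delivers traffic to each interested local receiver.

    local_receivers maps receiver name -> VLAN the receiver sits on.
    """
    actions = {}
    for receiver, vlan in local_receivers.items():
        if vlan == source_vlan:
            # Same VLAN as the source: plain L2 forwarding.
            actions[receiver] = f"forward on {source_vlan}"
        else:
            # Different VLAN: route between the IRB interfaces on this device.
            actions[receiver] = f"route {source_vlan} -> {vlan} through IRB"
    return actions


# Hypothetical leaf device with one receiver on the source VLAN and one on another VLAN.
actions = deliver_locally("VLAN-1", {"Rcvr-A": "VLAN-1", "Rcvr-B": "VLAN-2"})
print(actions)
```

Both decisions happen on the same leaf device, so no copy of the traffic has to return to the EVPN core to reach a receiver on another VLAN.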

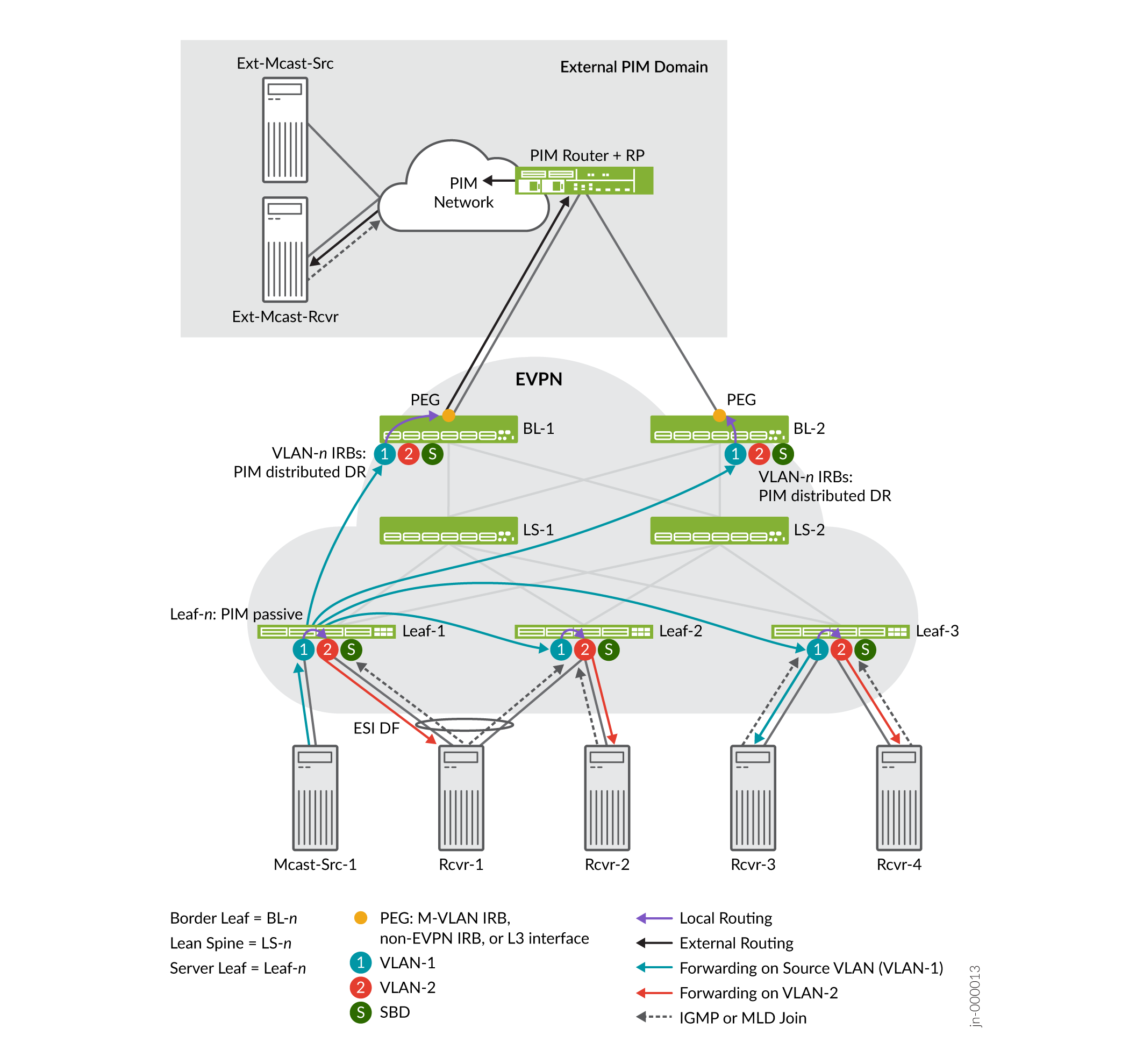

Multicast Traffic Forwarding and Routing with Source and Receivers Inside the EVPN Data Center

When the multicast source is inside the EVPN fabric, the server leaf devices receive the multicast traffic on the source VLAN. Then they locally route or forward the traffic as described in Local Routing on OISM Devices.

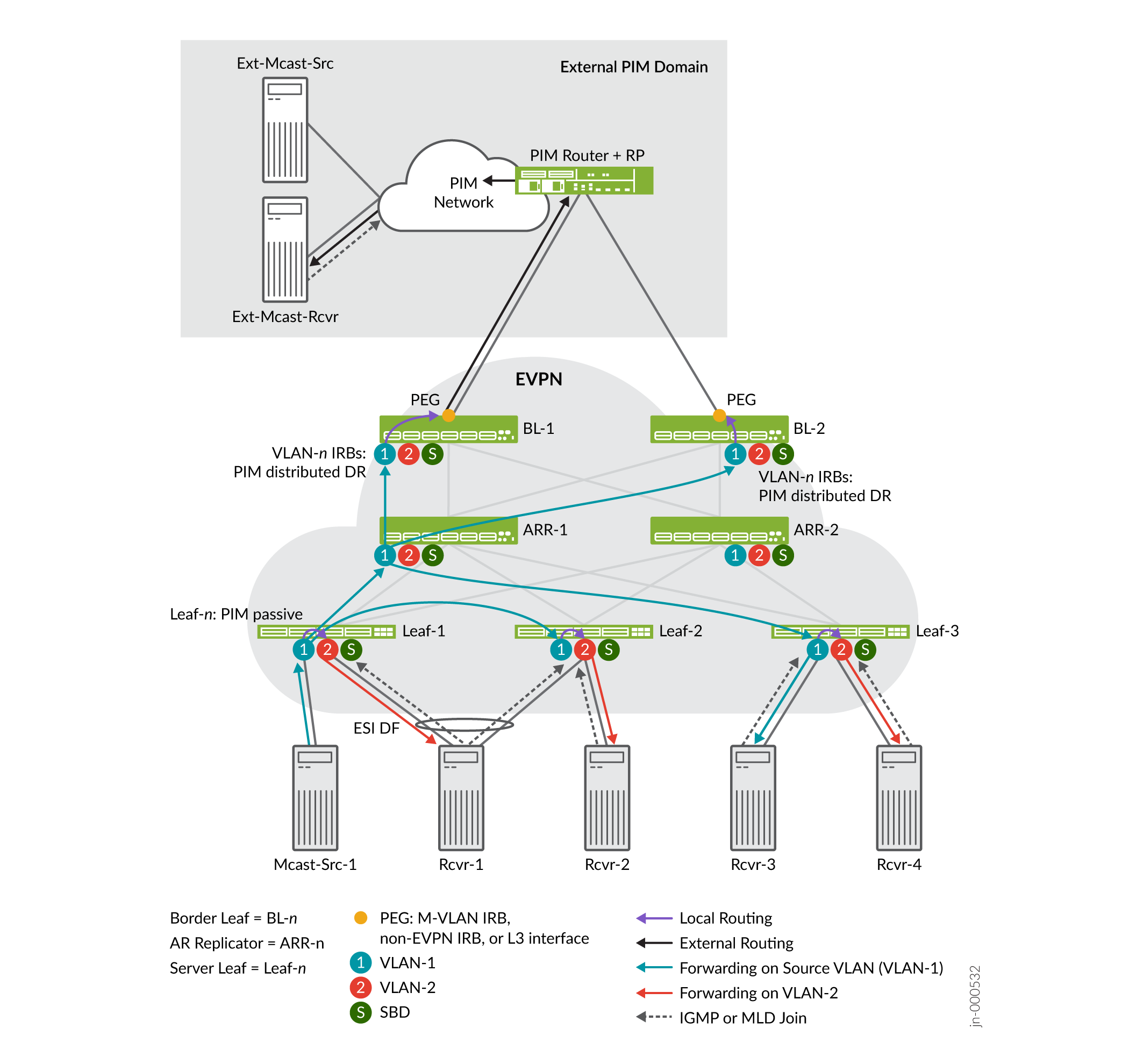

The following figure illustrates OISM local routing and forwarding within an EVPN fabric in detail. The figure also shows how local routing works with EVPN multihoming for a multicast receiver.

In Figure 4, the multicast source, Mcast-Src-1, is single-homed to Leaf-1. The source VLAN is VLAN-1 (the blue VLAN). Multicast control and data traffic flow proceeds as follows:

Receivers on all three server leaf devices sent IGMP or MLD reports (join messages) expressing interest in receiving the traffic for a multicast group.

Leaf-1 forwards the traffic on the source VLAN to both Leaf-2 and Leaf-3 because both leaf devices have interested receivers. In this case, the receivers on Leaf-2 and Leaf-3 use single-homing.

Leaf-2 and Leaf-3 forward or locally route the traffic to their interested receivers (Rcvr-2, Rcvr-3, and Rcvr-4) as described in Local Routing on OISM Devices.

Rcvr-1 on VLAN-2 is multihomed to Leaf-1 and Leaf-2 in an EVPN ES. Rcvr-1 has expressed interest in receiving the multicast traffic, so:

- Both server leaf devices, Leaf-1 and Leaf-2, receive the IGMP or MLD report.

- Both Leaf-1 and Leaf-2 locally route the traffic from the source VLAN (VLAN-1) because each device has the PIM passive mode configuration.

- However, because Leaf-1 is the DF for the EVPN ES, only Leaf-1 forwards the traffic to Rcvr-1.

The border leaf devices receive the multicast traffic through the EVPN fabric on the source VLAN. Note that the border leaf devices could have local receivers, although we don't show that case. With local receivers, the device also locally routes or forwards the traffic to those receivers the same way the server leaf devices do.

Figure 4 also shows that the border leaf devices locally route the traffic from the source VLAN toward any external multicast receivers in the external PIM domain. See Supported Methods for Multicast Data Transfer to or from an External PIM Domain for the available external multicast methods by platform. Later sections describe the multicast control and data traffic flow for external source and external receiver use cases.
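The multihomed receiver case (Rcvr-1 behind Leaf-1 and Leaf-2) can be summarized in a short Python sketch. It assumes only what the steps above state: every ES peer routes the traffic locally, and only the DF for the ES forwards it to the receiver. The function name and the result layout are hypothetical.

```python
def es_delivery(es_peers, designated_forwarder):
    """Model which ES peer leaf devices route locally and which one forwards to the receiver."""
    if designated_forwarder not in es_peers:
        raise ValueError("the DF must be one of the ES peer devices")
    return {
        peer: {
            "routes_locally": True,  # each peer has the PIM passive mode configuration
            "forwards_to_receiver": peer == designated_forwarder,
        }
        for peer in es_peers
    }


result = es_delivery(["Leaf-1", "Leaf-2"], designated_forwarder="Leaf-1")
print(result)
```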

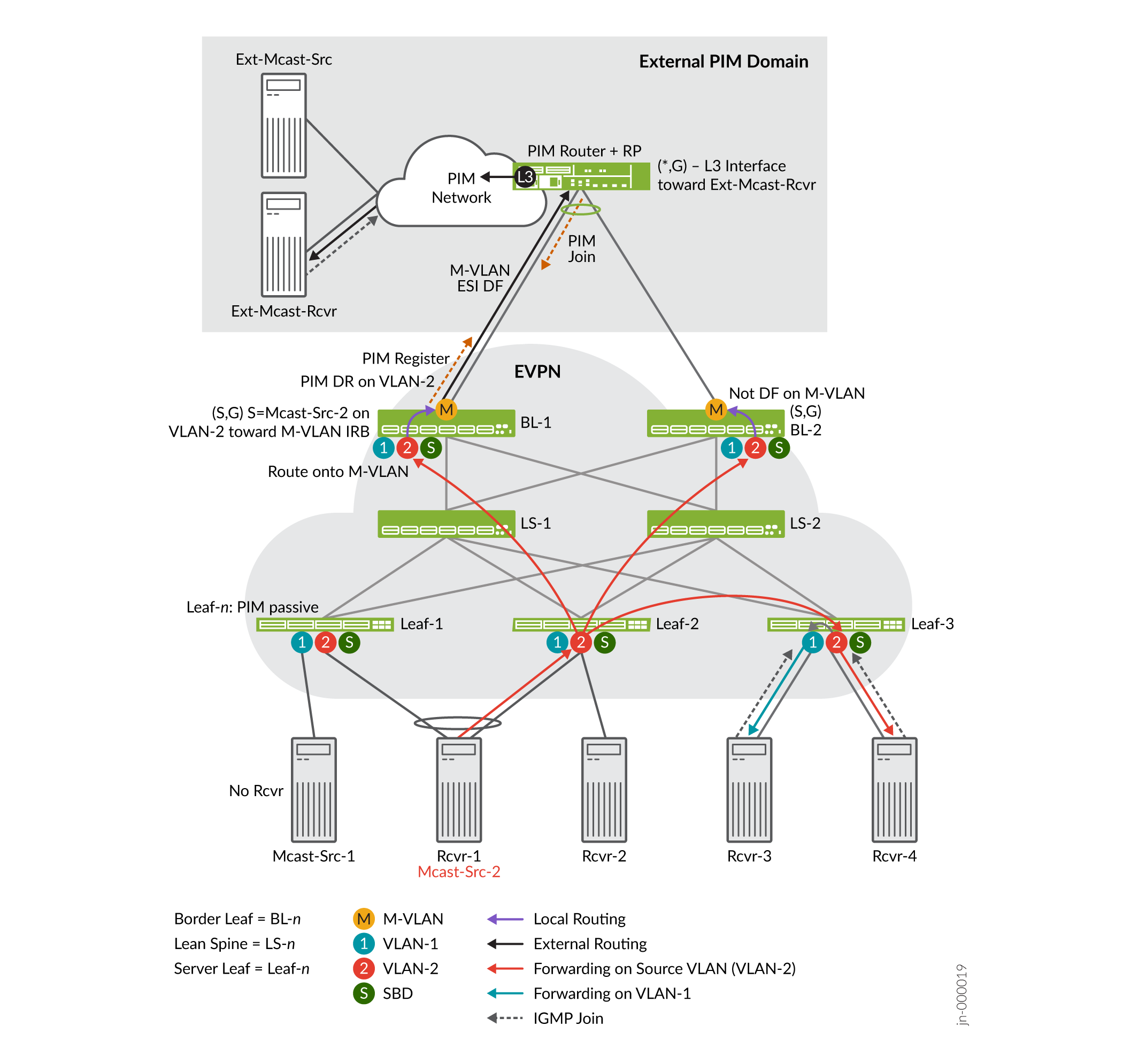

Multicast Traffic From an Internal Source to Receivers Outside the EVPN Data Center—M-VLAN IRB Method

In Figure 5, we illustrate the OISM use case where a multicast source inside the EVPN fabric sends multicast traffic to an interested receiver outside the fabric using the M-VLAN IRB method for external multicast. (Supported Methods for Multicast Data Transfer to or from an External PIM Domain lists external multicast method support by platform.)

The border leaf devices you configure in the OISM PEG role receive the multicast traffic on the source VLAN through the EVPN core. Then the border leaf devices replicate the traffic and route it onto the M-VLAN toward the external PIM domain to reach the external receiver.

PEG border leaf devices only send multicast source traffic received on the revenue bridge domains to the M-VLAN. These devices don't forward the traffic back into the EVPN core toward the other border leaf devices.

In Figure 5, the internal source for a multicast group is Mcast-Src-2, the same device as Rcvr-1, which is multihomed to Leaf-1 and Leaf-2. The source sends the multicast traffic on VLAN-2. The external receiver, Ext-Mcast-Rcvr, expresses interest in receiving the multicast traffic for that multicast group (sends a join message). Internal receivers Rcvr-3 (on VLAN-1) and Rcvr-4 (on VLAN-2) also request to join the multicast group and receive the traffic.

Note that the PIM router is multihomed to both BL-1 and BL-2, the PEG devices, in the EVPN fabric. Those connections are in the same ES; the DF election process chooses one of these devices as the DF for the ES. Only the DF will forward traffic (on the M-VLAN) toward external receivers.

The source traffic reaches the interested internal and external receivers as follows:

- Traffic Flow from Multihomed Source to Internal Receivers

- Traffic Flow to External Receiver—M-VLAN IRB Method

Traffic Flow from Multihomed Source to Internal Receivers

These steps summarize the multicast control and data traffic flow from the multihomed source to the internal receivers:

Mcast-Src-2 (also labeled Rcvr-1) originates the traffic on VLAN-2, the red VLAN. Because the device is multihomed to Leaf-1 and Leaf-2, the device hashes the traffic on VLAN-2 to one of those server leaf devices. In this case, Leaf-2 receives the traffic.

The red arrows show that Leaf-2 forwards the traffic on the source VLAN, VLAN-2, only to:

The other server leaf devices with interested receivers—In this case, only Leaf-3.

The border leaf devices, which both act in the OISM PEG role.

Note that no receivers behind Leaf-1 or Leaf-2 sent an IGMP report to join the multicast group. With IGMP snooping and SMET forwarding enabled, Leaf-2 doesn't forward the traffic to Leaf-1 because Leaf-1 has no interested receivers. Leaf-2 also doesn't locally route the traffic to Rcvr-2 for the same reason.

Leaf-3 receives the source traffic on VLAN-2. Then Leaf-3 routes the traffic locally to VLAN-1 to Rcvr-3. Leaf-3 also forwards the traffic to Rcvr-4 on VLAN-2.

Both border leaf devices BL-1 and BL-2 also receive the source traffic from the EVPN core. We describe the external multicast flow next.
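The selective forwarding in these steps can be sketched as a set computation. The following Python model is illustrative only. It assumes the ingress leaf device sends copies to the devices that advertised SMET Type 6 routes for the group, plus the PEG border leaf devices, as the steps above describe. The function name and the group address (taken from a documentation address range) are hypothetical.

```python
def remote_targets(ingress, smet_interest, peg_border_leaves, group):
    """Return the remote devices that receive a copy of the traffic from the ingress leaf.

    smet_interest maps device -> set of groups for which it advertised SMET Type 6 routes.
    """
    interested = {dev for dev, groups in smet_interest.items() if group in groups}
    return sorted((interested | set(peg_border_leaves)) - {ingress})


group = "233.252.0.1"  # hypothetical multicast group address
smet_interest = {"Leaf-1": set(), "Leaf-2": set(), "Leaf-3": {group}}

targets = remote_targets("Leaf-2", smet_interest, {"BL-1", "BL-2"}, group)
print(targets)  # ['BL-1', 'BL-2', 'Leaf-3']
```

Leaf-1 is absent from the result because it advertised no interest in the group, which matches the flow in Figure 5.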

Traffic Flow to External Receiver—M-VLAN IRB Method

These steps summarize the multicast control and data traffic flow in Figure 5 from the border leaf devices toward the external receiver using the M-VLAN IRB method:

In the external PIM domain, the PIM RP enters a PIM (*,G) multicast routing table entry. The entry includes the L3 interface toward Ext-Mcast-Rcvr as the downstream interface.

Both border leaf devices BL-1 and BL-2 receive the source traffic from the EVPN core. The IRB interface on VLAN-2 on one of these border leaf devices is the PIM DR for VLAN-2. In this case, the PIM DR is on BL-1, so BL-1 sends a PIM Register message toward the PIM RP on the M-VLAN IRB interface.

The PIM RP sends a PIM Join message back toward BL-1. BL-1 creates an (S,G) multicast routing table entry as follows:

- The source address is the IP address of Mcast-Src-2 in VLAN-2.

- The downstream interface is the M-VLAN IRB interface.

Both BL-1 and BL-2 are PEG devices and configured in PIM distributed DR mode for the revenue bridge domain (VLAN-1 and VLAN-2) IRB interfaces. As a result, both BL-1 and BL-2 receive the PIM Join and create a similar (S,G) state. Both devices route the traffic locally from VLAN-2 to the M-VLAN.

However, only the DF for the M-VLAN ES actually forwards the data on the M-VLAN to the external PIM domain. In this case, BL-1 is the DF and sends the traffic toward the external receiver. (See the label "M-VLAN ESI DF" and the black arrow between BL-1 and the PIM router in Figure 5.)

The PIM RP receives the traffic from the OISM M-VLAN IRB interface connection. The PIM router sends the traffic to an L3 interface toward the external receiver.
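As a rough model of the steps above, the following Python sketch records what each border leaf device does with the M-VLAN IRB method: the PIM DR sends the Register message, both devices hold the (S,G) entry, and only the ES DF transmits on the M-VLAN. The class, the function, and the source and group addresses are hypothetical and serve only to illustrate the sequence.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SGEntry:
    source: str
    group: str
    downstream: str


def mvlan_external_flow(border_leaves, pim_dr, es_df, source, group):
    """Summarize the role of each border leaf device for one (S,G) flow."""
    return {
        bl: {
            "sends_pim_register": bl == pim_dr,
            # Distributed DR mode: every PEG border leaf creates the same state.
            "sg_entry": SGEntry(source, group, downstream="M-VLAN IRB"),
            # Only the DF for the M-VLAN ES puts the traffic on the wire.
            "transmits_on_mvlan": bl == es_df,
        }
        for bl in border_leaves
    }


flow = mvlan_external_flow(
    ["BL-1", "BL-2"], pim_dr="BL-1", es_df="BL-1",
    source="10.0.2.10", group="233.252.0.1",  # hypothetical addresses
)
print(flow["BL-2"])
```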

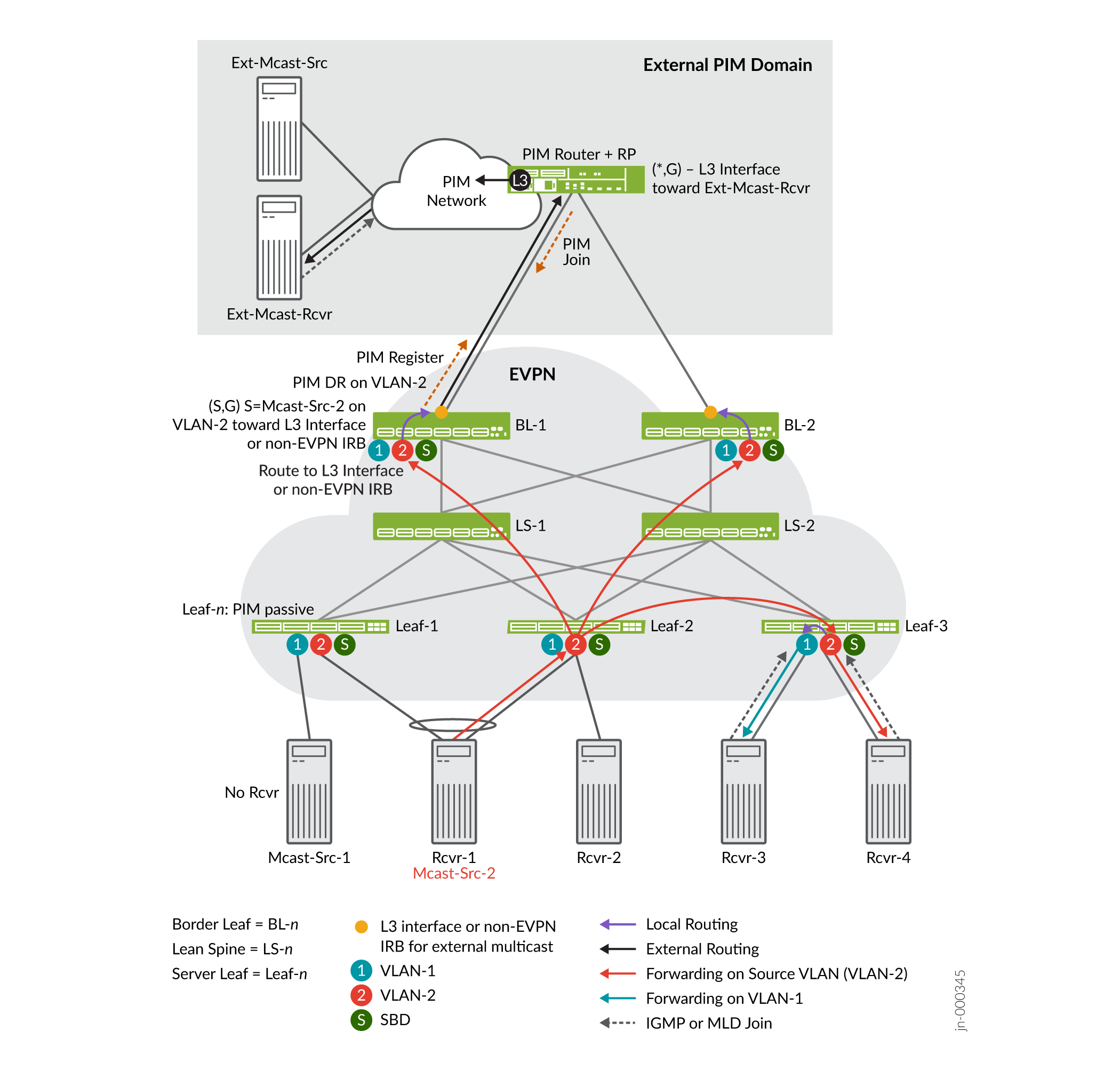

Multicast Traffic from an Internal Source to Receivers Outside the EVPN Data Center—L3 Interface Method or Non-EVPN IRB Method

In Figure 6, we illustrate the OISM use case where a multicast source inside the EVPN fabric sends multicast traffic to a receiver outside the fabric using either of the following methods for external multicast:

Classic L3 interface external multicast method:

On each border leaf device, you configure a classic L3 interface with family inet that connects to the external PIM router. You assign an IP address to that interface on a different subnet than the L3 interface subnets on other border leaf devices in the fabric.

You enable PIM on the interface and include the interface in the tenant VRF instances that have multicast data receivers. This method differs from the M-VLAN IRB method because you don't extend this interface in the EVPN instance.

Note: The L3 interface connection here can be an individual physical interface or an AE interface bundle that includes multiple physical L3 interfaces.

Non-EVPN IRB external multicast method:

On each border leaf device, you configure a unique extra VLAN that is only for external multicast. You also configure a corresponding L3 IRB interface with an IP address that connects to the external PIM router. The extra VLAN ID can't be the same as the VLAN ID of the revenue bridge domains, SBD, or extra VLAN on any other border leaf device in the fabric. In addition, similar to the L3 interface method, the non-EVPN IRB interfaces on different border leaf devices should connect to the PIM router on different subnets in the fabric.

You enable PIM on the IRB interface and include the interface in the tenant VRF instances that have multicast data receivers. This method differs from the M-VLAN IRB method because you don't extend this VLAN or IRB interface in the EVPN instance.
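The uniqueness rules for the non-EVPN IRB method lend themselves to a quick planning check. The Python sketch below is an illustration under stated assumptions, not a Junos feature: the function name, VLAN IDs, and subnets are hypothetical. It flags an extra VLAN ID that collides with a revenue VLAN, the SBD, or another border leaf device's extra VLAN, and flags overlapping external subnets. The subnet check applies equally to the classic L3 interface method.

```python
import ipaddress


def check_external_links(links, reserved_vlan_ids):
    """Return a list of problems in the external multicast link plan.

    links maps border leaf -> (extra VLAN ID, external interface subnet in CIDR form).
    reserved_vlan_ids holds the revenue VLAN IDs and the SBD VLAN ID.
    """
    problems = []
    seen = []  # (border leaf, VLAN ID, subnet) for links already checked
    for bl, (vlan_id, cidr) in links.items():
        subnet = ipaddress.ip_network(cidr)
        if vlan_id in reserved_vlan_ids:
            problems.append(f"{bl}: VLAN {vlan_id} is already a revenue VLAN or the SBD")
        for other_bl, other_vlan, other_subnet in seen:
            if vlan_id == other_vlan:
                problems.append(f"{bl}: VLAN {vlan_id} is also the extra VLAN on {other_bl}")
            if subnet.overlaps(other_subnet):
                problems.append(f"{bl}: subnet {subnet} overlaps the one on {other_bl}")
        seen.append((bl, vlan_id, subnet))
    return problems


reserved = {100, 200, 999}  # hypothetical revenue VLAN IDs and SBD VLAN ID
good = {"BL-1": (4001, "192.0.2.0/30"), "BL-2": (4002, "192.0.2.4/30")}
bad = {"BL-1": (4001, "192.0.2.0/30"), "BL-2": (4001, "192.0.2.0/30")}

print(check_external_links(good, reserved))
for problem in check_external_links(bad, reserved):
    print(problem)
```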

Supported Methods for Multicast Data Transfer to or from an External PIM Domain lists the platforms that support these external multicast methods.

Figure 6 includes the same internal multihomed source, internal receivers, and an external receiver as in Multicast Traffic From an Internal Source to Receivers Outside the EVPN Data Center—M-VLAN IRB Method. See the steps in Traffic Flow from Multihomed Source to Internal Receivers for details on the internal multicast traffic flow. In this section we describe only what's different in this case, which is the multicast traffic flow from the border leaf devices to the external receiver.

In Figure 6, the internal source for a multicast group is Mcast-Src-2, which is multihomed to Leaf-1 and Leaf-2. The source sends the multicast traffic on VLAN-2, the red VLAN. The external receiver, Ext-Mcast-Rcvr, expresses interest in receiving the multicast traffic for that multicast group (sends a join message).

The external multicast flow in this use case is very similar to the M-VLAN IRB use case. The border leaf devices in the OISM PEG role receive the multicast traffic on the source VLAN through the EVPN core. However, the main difference in this case is that the external multicast interfaces don't use EVPN signaling and don't share an ESI across the border leaf devices. The external multicast interfaces on each border leaf device are distinct, and each has L3 reachability to the external PIM gateway router. The border leaf device that establishes the PIM join state replicates and sends the traffic on the L3 interface or non-EVPN IRB interface to the external PIM domain with the external receiver.

PEG border leaf devices only send multicast source traffic received on the revenue bridge domains to the external PIM domain. These devices don't forward the traffic back into the EVPN core toward the other border leaf devices.

The following section explains how the source traffic reaches the interested external receiver.

Traffic Flow to External Receiver—L3 Interface or Non-EVPN IRB Method

These steps summarize the multicast control and data traffic flow in Figure 6 from the border leaf devices toward the external receiver using the classic L3 interface method or the non-EVPN IRB method:

In the external PIM domain, the PIM RP enters a PIM (*,G) multicast routing table entry. The entry includes the L3 interface toward Ext-Mcast-Rcvr as the downstream interface.

Both border leaf devices BL-1 and BL-2 receive the source traffic from the EVPN core on VLAN-2. The IRB interface on VLAN-2 on one of these border leaf devices is the PIM DR for VLAN-2. In this case, the PIM DR is on BL-1, so BL-1 sends a PIM Register message toward the PIM RP on its external multicast L3 interface or non-EVPN IRB interface.

The PIM RP sends a PIM Join message back toward BL-1. BL-1 receives the PIM join and creates an (S,G) multicast routing table entry as follows:

- The source address is the IP address of Mcast-Src-2 in VLAN-2.

- The downstream interface is the external multicast L3 interface or the non-EVPN IRB interface.

BL-1 routes the traffic from VLAN-2 to its external multicast L3 interface or non-EVPN IRB interface.

The PIM RP receives the traffic from BL-1 on the external multicast interface. The PIM router sends the traffic to an L3 interface toward the external receiver. The external receiver receives the multicast traffic.
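The register, join, and (S,G) steps above can be sketched as a small state model. This is only an illustrative Python sketch under stated assumptions, not device behavior or configuration: the `BorderLeaf` class, the `ext-mcast-if` interface label, and the addresses (taken from documentation ranges) are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class BorderLeaf:
    name: str
    is_pim_dr_on_source_vlan: bool
    external_if: str  # hypothetical label for the L3 or non-EVPN IRB interface
    # (source, group) -> set of downstream interfaces
    mroutes: dict = field(default_factory=dict)

    def on_source_traffic(self, source, group):
        """Only the PIM DR on the source VLAN registers the source with the RP."""
        if self.is_pim_dr_on_source_vlan:
            return ("register", self.name, source, group)
        return None

    def on_pim_join(self, source, group):
        """A join from the RP installs (S,G) state toward the external interface."""
        self.mroutes.setdefault((source, group), set()).add(self.external_if)

    def egress_interfaces(self, source, group):
        """Interfaces on which this device routes traffic for (S,G)."""
        return self.mroutes.get((source, group), set())


# Example values are assumptions (documentation-range addresses).
SRC, GRP = "192.0.2.12", "233.252.0.1"
bl1 = BorderLeaf("BL-1", True, "ext-mcast-if")
bl2 = BorderLeaf("BL-2", False, "ext-mcast-if")

# Both border leaf devices see the source traffic; only the PIM DR registers.
registers = [msg for msg in (bl.on_source_traffic(SRC, GRP) for bl in (bl1, bl2)) if msg]
bl1.on_pim_join(SRC, GRP)  # the RP answers the register from BL-1 with a join
```

In this model only BL-1 ends up with an egress interface for the flow, which mirrors the point that the border leaf device holding the PIM join state is the one that sends the traffic to the external PIM domain.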

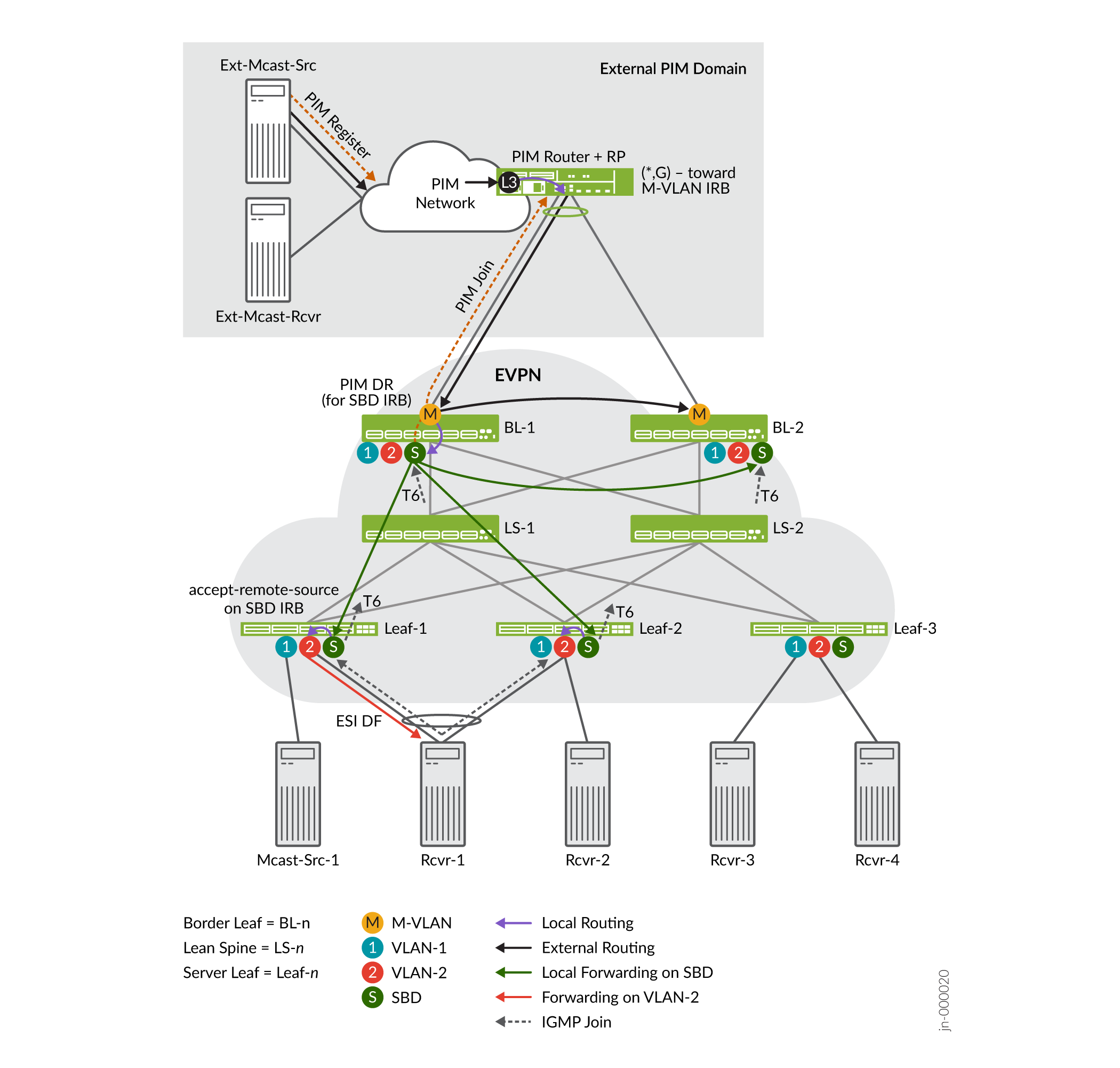

Multicast Traffic from an External Source to Receivers Inside the EVPN Data Center—M-VLAN IRB Method

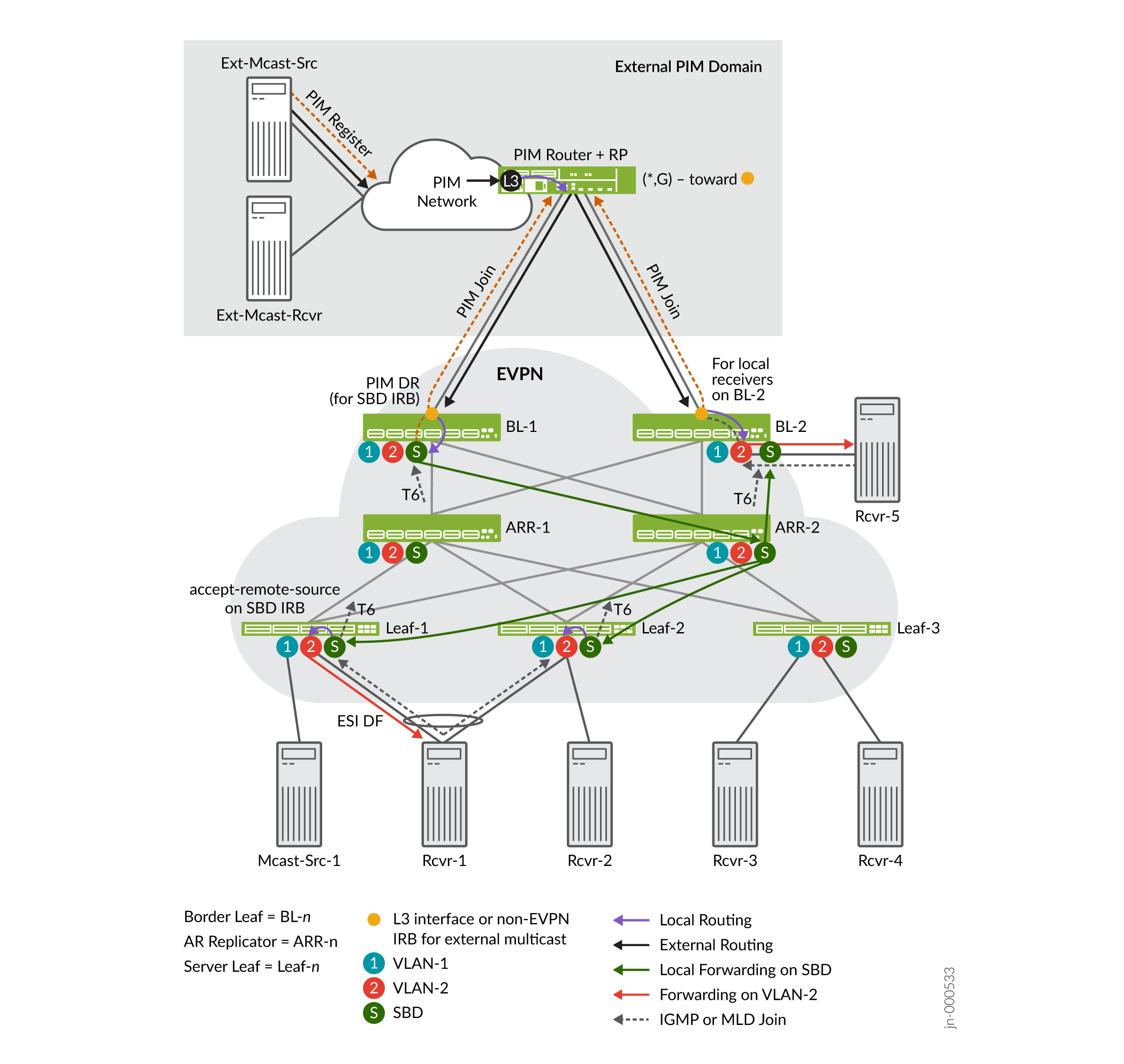

Figure 7 illustrates the OISM use case where a multicast source outside the EVPN fabric sends multicast traffic to receivers inside the fabric. This use case exhibits the two main ways OISM uses the SBD in the EVPN core:

- To carry external multicast source traffic.

- To advertise SMET Type 6 routes.

The Type 6 routes ensure that the border leaf devices only forward the traffic toward the EVPN devices with interested receivers.

OISM border leaf devices receive external multicast source traffic through the M-VLAN IRB interfaces. OISM devices use the SBD to forward traffic toward EVPN server leaf devices with interested receivers on the revenue bridge domains. Each leaf device then locally forwards or routes the traffic on the revenue bridge domains to its local receivers.

This use case has an internal receiver that is multihomed to two server leaf devices.

In Figure 7, Rcvr-1 within the EVPN fabric is multihomed to server leaf devices Leaf-1 and Leaf-2. Rcvr-1 expresses interest in receiving traffic from a multicast group. The source for the multicast traffic for the group is Ext-Mcast-Src in the external PIM domain.

The external source traffic reaches the interested multihomed receiver, Rcvr-1, as follows:

- Multicast Control Flow between the Internal Multihomed Receiver and the External Source—M-VLAN IRB Method

- Traffic Flow from the Border Leaf Devices to Internal Receivers—M-VLAN IRB Method

- What Happens When a Multihomed External PIM Router Load-Balances the Traffic—M-VLAN IRB Method

- What Happens with Local Receivers on Border Leaf Devices—M-VLAN IRB Method

Multicast Control Flow between the Internal Multihomed Receiver and the External Source—M-VLAN IRB Method

These steps summarize the multicast control flow in this use case:

Rcvr-1 sends an IGMP join message to both multihoming peers Leaf-1 and Leaf-2.

Both Leaf-1 and Leaf-2 generate an EVPN Type 6 route toward the EVPN core on the SBD. The Type 6 (SMET) route advertises that Rcvr-1 is interested in the multicast data.

Both border leaf devices BL-1 and BL-2 receive the Type 6 route on the SBD.

The Type 6 route (on the SBD) signals the border leaf devices to create a PIM join toward the PIM RP (reachable through the M-VLAN). However, to avoid duplicate join messages, only the border leaf device that is the PIM DR for the SBD generates the PIM join message. In this case, the figure shows the PIM DR for the SBD is BL-1. BL-1 sends the PIM join message toward the PIM RP by way of its neighbor, the M-VLAN IRB interface.

The PIM RP receives the join message. Then the PIM RP creates a PIM (*,G) entry in the multicast routing table with the M-VLAN IRB interface as the downstream interface.

The external source Ext-Mcast-Src registers with the PIM RP. The PIM RP has a multicast route for the group with the M-VLAN IRB interface as the downstream interface. As a result, the PIM RP routes the multicast traffic coming in at L3 onto its connection to the M-VLAN IRB toward BL-1 or BL-2. In this case, BL-1 sent the PIM join, so BL-1 receives the traffic on its M-VLAN IRB interface.
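The control flow above comes down to one rule: every border leaf device receives the Type 6 routes on the SBD, but only the PIM DR for the SBD turns them into a PIM join. The following Python sketch illustrates that rule. The function names, the dictionary layout, and the group address are hypothetical and are not taken from any device API.

```python
def advertise_smet(receiver_leafs, group):
    """Each leaf with an interested receiver advertises a Type 6 (SMET) route on the SBD."""
    return [{"type": 6, "origin": leaf, "bd": "SBD", "group": group}
            for leaf in receiver_leafs]


def pim_joins(border_leafs, sbd_pim_dr, smet_routes):
    """All border leafs see the routes; only the SBD PIM DR sends a (*,G) join."""
    groups = sorted({route["group"] for route in smet_routes})
    return [(bl, "*", g) for bl in border_leafs if bl == sbd_pim_dr for g in groups]


routes = advertise_smet(["Leaf-1", "Leaf-2"], "233.252.0.1")
joins = pim_joins(["BL-1", "BL-2"], sbd_pim_dr="BL-1", smet_routes=routes)
```

With BL-1 as the PIM DR for the SBD, the two Type 6 routes from Leaf-1 and Leaf-2 produce a single join, sent by BL-1.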

Traffic Flow from the Border Leaf Devices to Internal Receivers—M-VLAN IRB Method

In Figure 7, BL-1 is the PIM DR for the SBD and sent the PIM join toward the external PIM domain. BL-1 receives and routes (or forwards) the external source traffic as follows:

BL-1 routes the traffic locally from the M-VLAN to the SBD on its SBD IRB interface because BL-1 is the PIM DR for the SBD. See the small gray arrow from the M-VLAN to the SBD on BL-1.

BL-1 forwards a copy of the traffic on the M-VLAN toward BL-2 because both border leaf devices are in the PEG role. See the black arrow from BL-1 toward BL-2.

As a PEG device using the M-VLAN IRB method, BL-2 expects to receive external multicast traffic only on the M-VLAN IRB interface. If BL-2 has any local receivers, BL-2 can receive the traffic and route it locally to those receivers.

BL-1 also forwards a copy of the traffic on the SBD into the EVPN core to BL-2. See the green arrow from BL-1 toward BL-2.

BL-2 drops the traffic because, again, as a PEG device using the M-VLAN IRB method, BL-2 expects to receive external source traffic only on the M-VLAN IRB interface. BL-2 doesn't expect external source traffic on the SBD IRB interface from BL-1. In other words, BL-2 sees this case as a source interface mismatch (a reverse path forwarding [RPF] failure).

Note: One reason why the ingress border leaf device also forwards a copy on the SBD to other border leaf devices is to ensure that another border leaf device can receive external source traffic if its M-VLAN interface is down. Then any interested local receivers on the other border leaf device can still get the traffic.

BL-1 selectively forwards copies of the traffic on the SBD to the server leaf devices with interested receivers, based on the advertised Type 6 routes.

In this case, Leaf-1 and Leaf-2 have a multihomed interested receiver, Rcvr-1, on VLAN-2. As a result, BL-1 sends the traffic toward both leaf devices. See the green arrows from BL-1 toward Leaf-1 and Leaf-2.

Note: In a similar use case with the PIM router multihomed to BL-1 and BL-2, BL-1 might receive the external multicast source traffic, but BL-2 is the PIM DR on the SBD. One reason why BL-1 forwards the incoming external multicast traffic toward BL-2 on the M-VLAN is so that BL-2 can handle this use case. See the black arrow from BL-1 toward BL-2 on the M-VLAN in Figure 7. If BL-2 is the PIM DR on the SBD, upon receiving the traffic on the M-VLAN from BL-1, BL-2 forwards the traffic on the SBD toward Leaf-1 and Leaf-2. In this case, the green arrows in the figure would flow from BL-2 toward the other EVPN devices instead of flowing from BL-1.

Leaf-1 and Leaf-2 locally route the traffic from the SBD IRB interface to the revenue bridge domain IRB interface for VLAN-2 toward the interested (multihomed) receiver. However, with EVPN multihoming, only the EVPN DF in the ES forwards the traffic toward Rcvr-1 so Rcvr-1 doesn't get duplicate traffic.

In this case, Leaf-1 is the EVPN DF, so only Leaf-1 forwards the traffic to Rcvr-1.
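Three forwarding decisions appear in the flow above: the source interface (RPF) check on BL-2, selective forwarding on the SBD based on Type 6 routes, and DF filtering toward the multihomed receiver. The Python sketch below models each as a one-line predicate. It is a simplified illustration, not an implementation; the function names are hypothetical, and Leaf-3 is an assumed extra leaf device with no interested receiver.

```python
def rpf_accept(ingress_if, expected_if="M-VLAN IRB"):
    """A PEG device using the M-VLAN IRB method accepts external traffic only there."""
    return ingress_if == expected_if


def sbd_targets(all_leafs, smet_origins):
    """Forward on the SBD only toward leafs that advertised a Type 6 route."""
    return [leaf for leaf in all_leafs if leaf in smet_origins]


def delivers_to_receiver(leaf, evpn_df):
    """On a multihomed ES, only the EVPN DF forwards toward the receiver."""
    return leaf == evpn_df


# BL-2 accepts the copy from the M-VLAN and drops the copy from the SBD.
bl2_accepts = [rpf_accept("M-VLAN IRB"), rpf_accept("SBD IRB")]

# Leaf-3 is hypothetical: it advertised no Type 6 route, so it gets no copy.
targets = sbd_targets(["Leaf-1", "Leaf-2", "Leaf-3"], {"Leaf-1", "Leaf-2"})
delivering = [leaf for leaf in targets if delivers_to_receiver(leaf, evpn_df="Leaf-1")]
```

Both Leaf-1 and Leaf-2 receive the traffic on the SBD, but only Leaf-1, the EVPN DF, sends it on to Rcvr-1.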

What Happens When a Multihomed External PIM Router Load-Balances the Traffic—M-VLAN IRB Method

In Figure 7, the external PIM gateway router is multihomed to BL-1 and BL-2 on an ES in the EVPN fabric. If the pair of connections on the PIM router side is an AE interface bundle, the PIM router load-balances traffic among the interfaces in the bundle. In that case, BL-1 and BL-2 each receive some of the multicast traffic flow from the external source. However, all receivers should receive all of that traffic. For simplicity, the figure doesn't show traffic arrows for this load balancing, but we'll describe the flow here.

BL-1 and BL-2 each receive part of the external multicast source traffic on their M-VLAN IRB interfaces. However, because BL-1 is the PIM DR on the SBD, only BL-1 will route the traffic onto the SBD into the EVPN fabric, as follows:

BL-1 routes the traffic it receives onto the SBD toward the server leaf devices.

BL-1 also forwards that traffic on the M-VLAN and routes it on the SBD toward BL-2, in case BL-2 has any local receivers (as described in Traffic Flow from the Border Leaf Devices to Internal Receivers—M-VLAN IRB Method).

BL-2 forwards the traffic it receives from the external PIM domain on the M-VLAN toward BL-1, because BL-1 only expects to receive external source traffic on the M-VLAN.

Due to DF and split horizon rules, BL-2 won't forward any traffic it receives on the M-VLAN from BL-1 into the EVPN core or back toward the source, BL-1.

BL-1 routes the traffic it receives on the M-VLAN from BL-2 onto the SBD toward the server leaf devices.
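The load-balancing steps above can be checked with a short simulation: whichever border leaf device a packet arrives on, the peer also gets it on the M-VLAN, and only the PIM DR on the SBD routes it into the fabric. The Python sketch below is a simplified model under assumptions. The `deliver` function and the even/odd hashing are hypothetical stand-ins for the AE bundle's load balancing.

```python
BORDER_LEAFS = ("BL-1", "BL-2")


def deliver(packets, ingress_of, sbd_pim_dr="BL-1"):
    """Return (packet, routing device, how that device got the packet) per packet."""
    on_sbd = []
    for pkt in packets:
        ingress = ingress_of(pkt)
        peer = next(bl for bl in BORDER_LEAFS if bl != ingress)
        # The ingress device forwards a copy on the M-VLAN to its peer, so both
        # devices hold the packet on the M-VLAN. Split horizon keeps the peer
        # from sending it back or into the EVPN core.
        seen_on_mvlan = {ingress: "external", peer: "from-peer"}
        # Only the PIM DR on the SBD routes from the M-VLAN onto the SBD.
        on_sbd.append((pkt, sbd_pim_dr, seen_on_mvlan[sbd_pim_dr]))
    return on_sbd


# Hypothetical hash: even packets land on BL-1, odd packets on BL-2.
result = deliver(range(6), lambda pkt: BORDER_LEAFS[pkt % 2])
```

Every packet reaches the SBD exactly once and always through BL-1, whether BL-1 received it directly from the external PIM domain or from BL-2 over the M-VLAN.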

What Happens with Local Receivers on Border Leaf Devices—M-VLAN IRB Method

Figure 7 doesn't show local receivers attached to the border leaf devices. However, let's look briefly at PIM join message flow and how external source traffic reaches local receivers on border leaf devices.

Suppose that BL-1 or BL-2 has an interested receiver on a revenue bridge domain in the fabric. In that case:

Both devices generate a PIM join on the IRB interfaces for the revenue bridge domain toward the PIM RP.

You configure the border leaf devices with PIM in distributed DR mode on the revenue bridge domain IRB interfaces. That way, neither BL-1 nor BL-2 acts as the PIM DR alone. Both devices locally route external multicast source traffic coming in on the M-VLAN IRB interface to the appropriate revenue bridge domain IRB interface.
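To illustrate why distributed DR mode matters here, the following Python sketch contrasts it with a single elected DR. It is a conceptual model only; the function name and its parameters are hypothetical and do not correspond to configuration statements.

```python
def devices_routing_locally(border_leafs_with_receivers, elected_dr, distributed_dr):
    """Return the border leaf devices that route external traffic to local receivers."""
    candidates = sorted(border_leafs_with_receivers)
    if distributed_dr:
        # Every device acts as a DR for its own local receivers.
        return candidates
    # With a single elected DR, only that device would route onto the revenue VLAN.
    return [bl for bl in candidates if bl == elected_dr]


with_distributed_dr = devices_routing_locally({"BL-1", "BL-2"}, "BL-1", True)
with_single_dr = devices_routing_locally({"BL-1", "BL-2"}, "BL-1", False)
```

In the distributed case both BL-1 and BL-2 route the traffic from the M-VLAN IRB interface to their own revenue bridge domain IRB interfaces, which matches the behavior described above.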

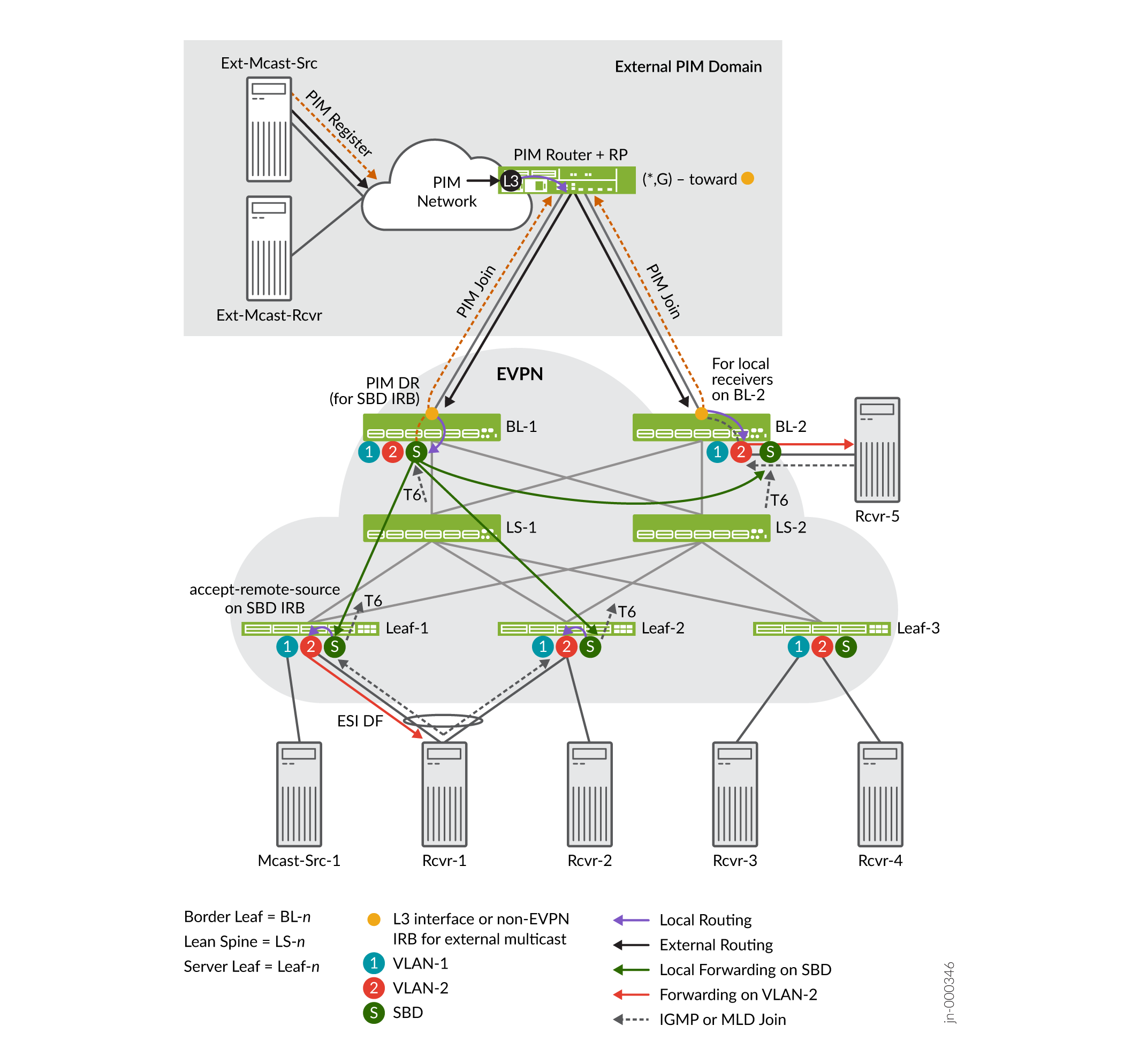

Multicast Traffic from an External Source to Receivers Inside the EVPN Data Center—L3 Interface Method or Non-EVPN IRB Method

Figure 8 illustrates the OISM use case where a multicast source outside the EVPN fabric sends multicast traffic to receivers inside the fabric. In this case, the fabric uses the classic L3 interface or non-EVPN IRB external multicast method to connect to the external PIM domain. Also, this case includes the following internal receivers:

- A receiver that is multihomed to two server leaf devices.

- A local receiver on one of the border leaf devices.

This use case, like the M-VLAN IRB external source use case in Figure 7, shows the two main ways OISM uses the SBD in the EVPN core: to carry external multicast source traffic and to advertise SMET Type 6 routes. The Type 6 routes ensure that the border leaf devices only forward the traffic toward the EVPN devices with interested receivers.

In Figure 8:

- Rcvr-1 is multihomed to server leaf devices Leaf-1 and Leaf-2 in the EVPN fabric, and expresses interest in receiving traffic from a multicast group.

- Rcvr-5 on BL-2 in the EVPN fabric is also interested in receiving the multicast traffic.

- Ext-Mcast-Src in the external PIM domain is the source for the traffic for the multicast group.

The multicast control flow and data traffic flow for external multicast are similar for the classic L3 interface and the non-EVPN IRB interface methods. As a result, in this section we commonly say external multicast interface when we refer to the border leaf device external connection points.

The external source traffic reaches the interested receivers (Rcvr-1 and Rcvr-5) as follows:

- Multicast Control Flow between the Internal Receivers and the External Source—L3 Interface or Non-EVPN IRB Method

- Traffic Flow from the Border Leaf Devices to Internal Receivers—L3 Interface or Non-EVPN IRB Method

Multicast Control Flow between the Internal Receivers and the External Source—L3 Interface or Non-EVPN IRB Method

These steps summarize the multicast control flow in this use case:

Rcvr-1 sends an IGMP or MLD join message on VLAN-2 to both multihoming peers Leaf-1 and Leaf-2.

Both Leaf-1 and Leaf-2 generate an EVPN Type 6 route toward the EVPN core on the SBD. The Type 6 (SMET) route advertises that Rcvr-1 is interested in the multicast data.

Both border leaf devices BL-1 and BL-2 receive the Type 6 route on the SBD.

The Type 6 route (on the SBD) signals the border leaf devices to create a PIM join toward the PIM RP (reachable through the external multicast interface). However, to avoid duplicate join messages for server leaf devices on the SBD, only the border leaf device that is the PIM DR for the SBD generates the PIM join message. In this case, the figure shows the PIM DR for the SBD is BL-1. BL-1 sends the PIM join message toward the PIM RP by way of its PIM neighbor, the external multicast interface.

The local receiver on BL-2, Rcvr-5, also sends an IGMP or MLD join message on VLAN-2 to BL-2. Note that in this case, BL-1 and BL-2 are not multihoming peers in an EVPN ES. As a result, BL-2 sends a separate PIM join message on its external multicast interface because it has a local interested receiver (Rcvr-5).

The PIM RP receives the join messages. The PIM RP creates PIM (*,G) entries in the multicast routing table with the BL-1 and BL-2 external multicast interfaces as downstream interfaces.

The external source Ext-Mcast-Src registers with the PIM RP. The PIM RP has multicast routes for the group with the BL-1 and BL-2 external multicast interfaces as downstream interfaces. As a result, the PIM RP routes the multicast traffic coming in at L3 toward BL-1 and BL-2.

Both BL-1 and BL-2 receive the multicast traffic. The next section explains how the border leaf devices forward or route the traffic in the EVPN fabric.
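The control flow above has two independent triggers for a PIM join: being the PIM DR for the SBD when Type 6 routes show remote interest, and having a local interested receiver. The Python sketch below models both triggers and the resulting (*,G) state on the PIM RP. The names `sends_pim_join` and `RendezvousPoint`, the interface labels, and the device BL-3 are hypothetical and used only for illustration.

```python
from collections import defaultdict


def sends_pim_join(is_sbd_pim_dr, has_smet_interest, has_local_receiver):
    """A border leaf joins on behalf of the SBD (DR only) or for its own local receiver."""
    return (is_sbd_pim_dr and has_smet_interest) or has_local_receiver


class RendezvousPoint:
    def __init__(self):
        self.star_g = defaultdict(set)  # group -> downstream interfaces

    def on_join(self, group, downstream_if):
        self.star_g[group].add(downstream_if)

    def downstream(self, group):
        return sorted(self.star_g.get(group, ()))


GRP = "233.252.0.1"  # assumed example group address
border_leafs = {
    "BL-1": sends_pim_join(True, True, False),   # PIM DR for the SBD
    "BL-2": sends_pim_join(False, True, True),   # local receiver Rcvr-5
    "BL-3": sends_pim_join(False, True, False),  # hypothetical third border leaf
}

rp = RendezvousPoint()
for name, joins in border_leafs.items():
    if joins:
        rp.on_join(GRP, f"toward-{name}")
```

Here the RP ends up with two downstream interfaces for the group, one toward BL-1 and one toward BL-2, so both devices receive the external source traffic.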

Traffic Flow from the Border Leaf Devices to Internal Receivers—L3 Interface or Non-EVPN IRB Method