Paragon Automation System Requirements

Before you install the Paragon Automation software, ensure that your system meets the requirements that we describe in these sections.

To determine the resources required to implement Paragon Automation, you must understand the fundamentals of the Paragon Automation underlying infrastructure.

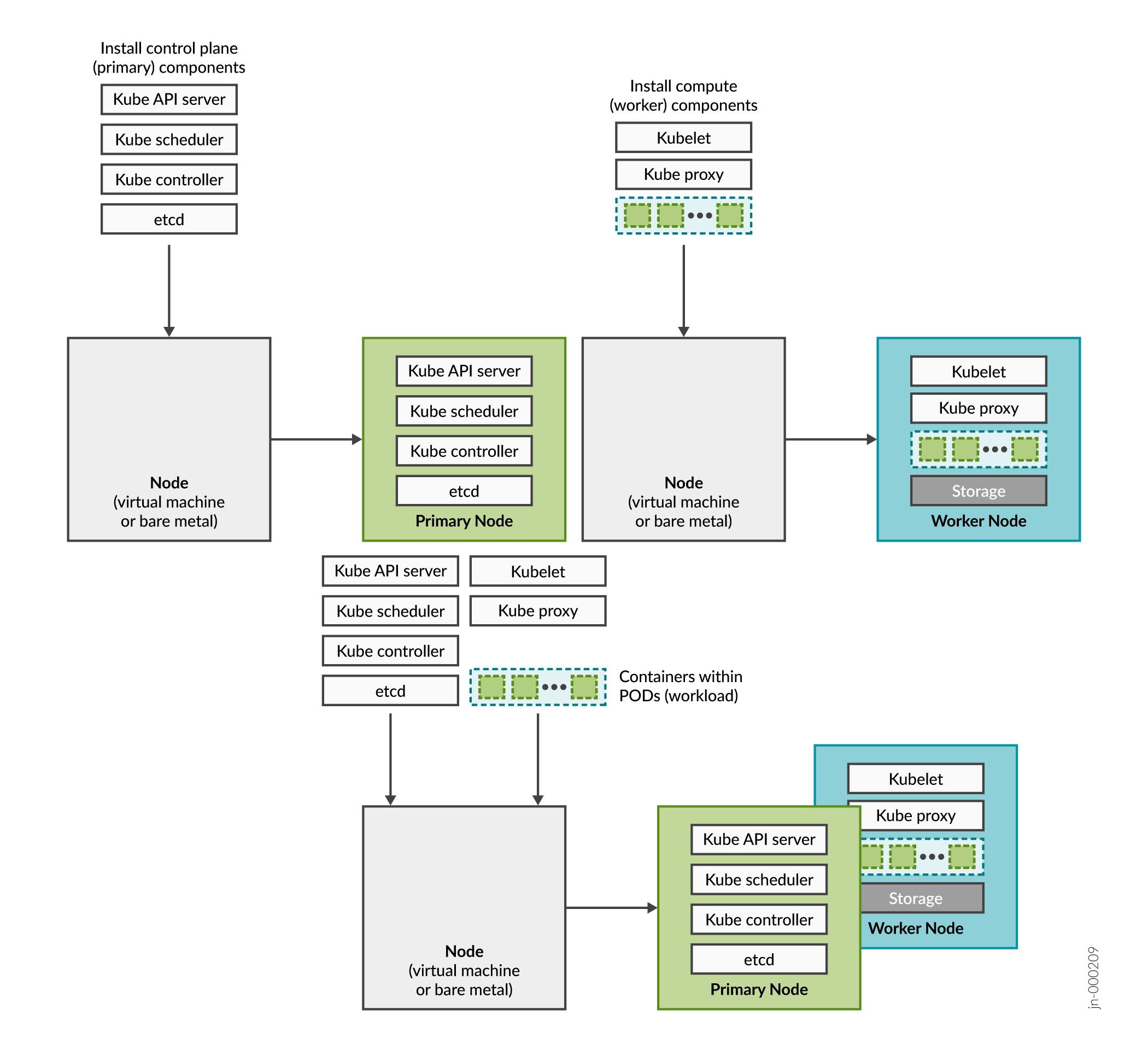

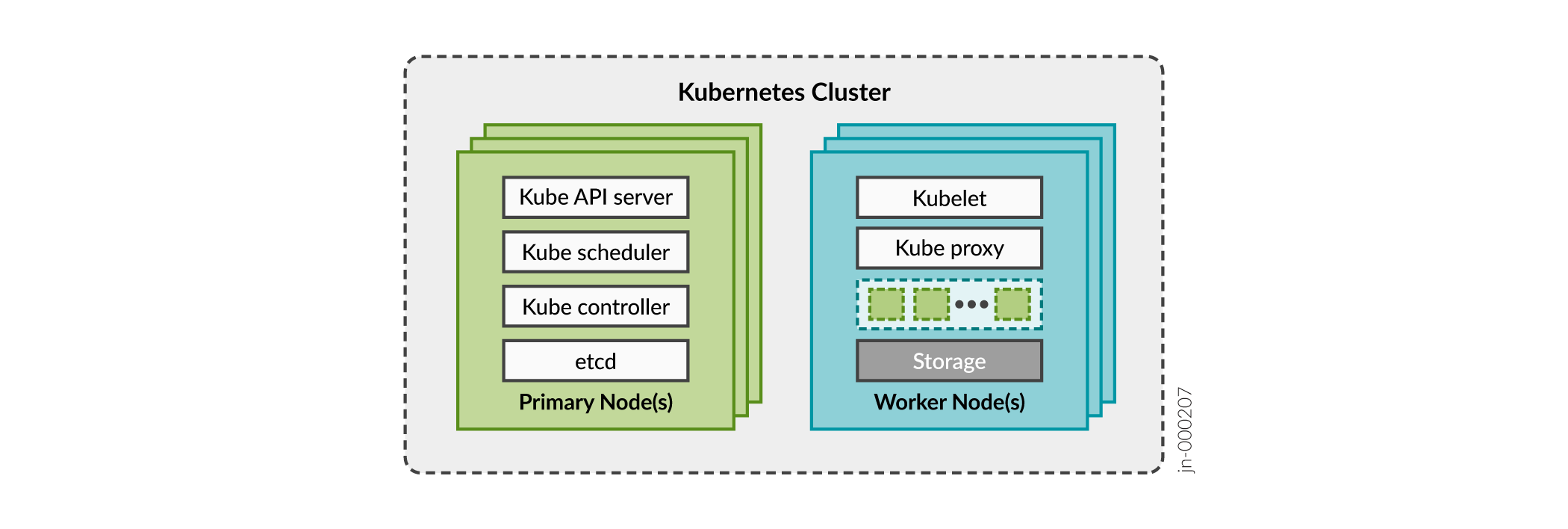

Paragon Automation is a collection of microservices that interact with one another through APIs and run within containers in a Kubernetes cluster. A Kubernetes cluster is a set of nodes or machines running containerized applications. Each node is a single machine, either physical (bare-metal server) or virtual (virtual machine).

The nodes within a cluster implement different roles or functions depending on which Kubernetes components are installed. During installation, you specify the role of each node, and the installation playbooks install the corresponding components on that node.

- Control plane (primary) node: Monitors the state of the cluster, manages the worker nodes, schedules application workloads, and manages the life cycle of the workloads.

- Compute (worker) node: Performs tasks that the control plane node assigns, and hosts the pods and containers that execute the application workloads. Each worker node hosts one or more pods, which are collections of containers.

- Storage node: Provides storage for objects, blocks, and files within the cluster. In Paragon Automation, Ceph provides storage services in the cluster. A storage node must also be a worker node, although not every worker node needs to provide storage.

For detailed information on minimum configuration for primary, worker, and storage nodes, see Paragon Automation Implementation and Hardware Requirements.

A Kubernetes cluster comprises several primary nodes and worker nodes. A single node can function as both primary and worker if the components required for both roles are installed in the same node.

To determine the following cluster parameters, consider the intended capacity of the system (number of devices, LSPs, and so on), the level of availability required, and the expected performance of the system:

- Total number of nodes (virtual or physical) in the cluster

- Amount of resources on each node (CPU, memory, and disk space)

- Number of nodes acting as primary, worker, and storage nodes

Paragon Automation Implementation

Paragon Automation is implemented on top of a Kubernetes cluster, which consists of one or more primary nodes and one or more worker nodes. At minimum, one primary node and one worker node are required for a functional cluster. Paragon Automation is implemented as a multinode cluster.

A multinode implementation comprises multiple nodes, either VMs or bare-metal servers (BMSs), where at least one node acts as primary and at least three nodes act as workers and provide storage. This implementation not only improves performance but also allows for high availability within the cluster:

-

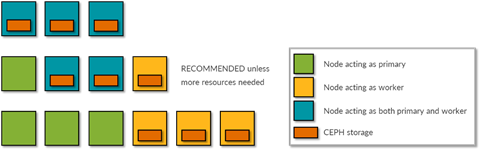

Control plane high availability—For control plane redundancy, you must have a minimum of three primary nodes. The total number of primary nodes must be an odd number and we do not recommend more than three primary nodes.

-

Workload high availability—For workload high availability and workload performance, you must have more than one worker. You can add more workers to the cluster as needed.

-

Storage high availability—For storage high availability, you must have at least three nodes for Ceph storage. You must enable

Master Schedulingduring installation if you want any of the primary nodes to provide Ceph storage. Enabling master scheduling allows the primary to act as a worker as well.You could implement a setup that provides redundancy in different ways, as shown in the examples in Figure 3.

Figure 3: Multinode Redundant Setups Note:

Note: For Paragon Automation production deployments, we recommend that you have a fully redundant setup with a minimum of three primary nodes (multi-primary node setup) with at least one worker node if Master Scheduling is enabled, or a minimum of three primary nodes and three worker nodes providing Ceph storage if Master Scheduling is disabled. You must enable Master Scheduling during the installation process.
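The redundancy rules above can be expressed as a small planning check. The following Python sketch is illustrative only and is not part of the Paragon Automation installer: the `Node` class, the `check_topology` function, and the node names are hypothetical, and the rules encoded are only those stated in this section.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical description of one planned cluster node."""
    name: str
    primary: bool = False
    worker: bool = False
    ceph_storage: bool = False


def check_topology(nodes, master_scheduling=False):
    """Return a list of problems; an empty list means the planned layout
    satisfies the high-availability rules described in this section."""
    problems = []
    primaries = [n for n in nodes if n.primary]
    storage = [n for n in nodes if n.ceph_storage]

    # Control plane HA: at least three primaries, and an odd count.
    if len(primaries) < 3:
        problems.append("control plane HA needs at least three primary nodes")
    if len(primaries) % 2 == 0:
        problems.append("the number of primary nodes must be odd")

    # Workload HA: more than one worker. With Master Scheduling enabled,
    # a primary also acts as a worker, so it is counted here as well.
    workers = {n.name for n in nodes if n.worker}
    if master_scheduling:
        workers |= {n.name for n in primaries}
    if len(workers) < 2:
        problems.append("workload HA needs more than one worker")

    # Storage HA: at least three Ceph nodes, each able to act as a worker.
    if len(storage) < 3:
        problems.append("storage HA needs at least three Ceph storage nodes")
    for n in storage:
        if n.worker:
            continue
        if n.primary and not master_scheduling:
            problems.append(
                f"{n.name}: enable Master Scheduling to use a primary for Ceph"
            )
        elif not n.primary:
            problems.append(f"{n.name}: a storage node must be a worker node")
    return problems


# Example: three primaries plus three workers that provide Ceph storage.
plan = [Node(f"primary{i}", primary=True) for i in range(1, 4)]
plan += [Node(f"worker{i}", worker=True, ceph_storage=True) for i in range(1, 4)]
print(check_topology(plan, master_scheduling=False))
```

A check like this is only a planning aid; the installer performs its own validation, and sizing for production still depends on the intended capacity of the system.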

Hardware Requirements

This section lists the minimum hardware resources required for the Ansible control host node and the primary and worker nodes of a Paragon Automation cluster.

The compute, memory, and disk requirements of the Ansible control host node are not dependent on the intended capacity of the system. The following table shows the requirements for the Ansible control host node:

| Node | Minimum Hardware Requirement | Storage Requirement | Role |
|---|---|---|---|
| Ansible control host | 2–4-core CPU, 12-GB RAM, 100-GB HDD | No disk partitions or extra disk space required | Carry out Ansible operations to install the cluster. |

In contrast, the compute, memory, and disk requirements of the cluster nodes vary widely based on the intended capacity of the system. The intended capacity depends on the number of devices to be monitored, type of sensors, frequency of telemetry messages, and number of playbooks and rules. If you increase the number of device groups, devices, or playbooks, you'll need higher CPU and memory capacities.

The following table summarizes the minimum hardware resources required per node for a successful installation of a multinode cluster.

| Node | Minimum Hardware Requirement | Storage Requirement | Role |
|---|---|---|---|
| Primary or worker node | 32-core CPU, 32-GB RAM, 200-GB SSD storage (including Ceph storage). Minimum 1000 IOPS for the disks. | The cluster must include a minimum of three storage nodes. Each node must have an unformatted disk partition or a separate unformatted disk, with at least 30-GB space, for Ceph storage. See Disk Requirements. | Kubernetes primary or worker node |

SSDs are mandatory on bare-metal servers.

Paragon Automation, by default, generates a Docker registry and stores it internally in the /var/lib/registry directory in each primary node.

Here, we've listed only the minimum requirements for small deployments supporting up to two device groups. In such deployments, each device group may comprise two devices and two to three playbooks across all Paragon Automation components. See the Paragon Automation User Guide for information about devices and device groups.
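As a rough illustration, the per-node minimums in the table above can be compared against an inventory before you start the installation. This Python sketch is an assumption-laden example, not a Juniper tool: the `NodeSpec` class and `hardware_shortfalls` function are hypothetical, and the thresholds are simply the minimum values listed in this section.

```python
from dataclasses import dataclass

# Minimum values taken from the table above (primary or worker node).
MIN_CPU_CORES = 32
MIN_RAM_GB = 32
MIN_DISK_GB = 200
MIN_DISK_IOPS = 1000


@dataclass
class NodeSpec:
    """Hypothetical inventory record for one cluster node."""
    name: str
    cpu_cores: int
    ram_gb: int
    disk_gb: int
    disk_iops: int
    ssd: bool


def hardware_shortfalls(spec):
    """Return a list of minimum requirements that the node does not meet."""
    issues = []
    if spec.cpu_cores < MIN_CPU_CORES:
        issues.append(f"{spec.name}: {spec.cpu_cores} cores < {MIN_CPU_CORES}")
    if spec.ram_gb < MIN_RAM_GB:
        issues.append(f"{spec.name}: {spec.ram_gb} GB RAM < {MIN_RAM_GB} GB")
    if spec.disk_gb < MIN_DISK_GB:
        issues.append(f"{spec.name}: {spec.disk_gb} GB disk < {MIN_DISK_GB} GB")
    if spec.disk_iops < MIN_DISK_IOPS:
        issues.append(f"{spec.name}: {spec.disk_iops} IOPS < {MIN_DISK_IOPS}")
    if not spec.ssd:
        issues.append(f"{spec.name}: disk is not an SSD")
    return issues


# Example: a node that is short on CPU and disk performance.
print(hardware_shortfalls(NodeSpec("worker1", 16, 64, 500, 800, ssd=True)))
```

Passing this check means only that a node meets the minimums for a small deployment; it says nothing about the capacity needed for a production system.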

To get a scale and size estimate of a production deployment and to discuss detailed dimensioning requirements, contact your Juniper Partner or Juniper Sales Representative.

Software Requirements

- You must install a base OS of Ubuntu version 20.04.4 LTS (Focal Fossa), Ubuntu version 22.04.2 LTS (Jammy Jellyfish), RHEL version 8.4, or RHEL version 8.10 on all nodes. Paragon Automation also has experimental support for RHEL version 8.8. All nodes must run the same OS (Ubuntu or RHEL) and the same version.

  Note: If you are using RHEL version 8.10, you must remove the following RPM bundle:

  `rpm -e buildah cockpit-podman podman-catatonit podman`

- You must install Docker on the Ansible control host. The control host is where the installation packages are downloaded and the Ansible installation playbooks are executed. For more information, see Installation Prerequisites on Ubuntu or Installation Prerequisites on Red Hat Enterprise Linux.

  If you are using Docker CE, we recommend version 18.09 or later.

  If you are using Docker EE, we recommend version 18.03.1-ee-1 or later. Also, to use Docker EE, you must install Docker EE on all the cluster nodes acting as primary and worker nodes in addition to the control host.

  Docker enables you to run the Paragon Automation installer file, which is packaged with Ansible (version 2.9.5) as well as the roles and playbooks that are required to install the cluster.

  Installation will fail if you don't have the correct versions. We describe the commands to verify these versions in subsequent sections of this guide.
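The version rules above lend themselves to a simple pre-check. The following Python sketch is a hypothetical helper, not one of the verification commands that the guide describes later: the function names are illustrative, the input is assumed to be a plain dotted version string (such as `20.10.7`), and only the OS versions and the Docker CE recommendation listed in this section are encoded.

```python
# OS versions listed in this section; keys are (distribution, version).
SUPPORTED_OS = {
    ("ubuntu", "20.04.4"),
    ("ubuntu", "22.04.2"),
    ("rhel", "8.4"),
    ("rhel", "8.10"),
}
EXPERIMENTAL_OS = {("rhel", "8.8")}

MIN_DOCKER_CE = (18, 9)  # Docker CE 18.09 or later is recommended


def version_tuple(text):
    """Convert a dotted version such as '18.09' to (18, 9).

    Anything after the first '-' is ignored. This assumes a plain dotted
    version string; other package version formats are not handled.
    """
    core = text.split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))


def os_status(distro, version):
    """Classify an OS as 'supported', 'experimental', or 'unsupported'."""
    key = (distro.lower(), version)
    if key in SUPPORTED_OS:
        return "supported"
    if key in EXPERIMENTAL_OS:
        return "experimental"
    return "unsupported"


def nodes_share_os(nodes):
    """True if every (distro, version) pair in the list is identical."""
    return len({(d.lower(), v) for d, v in nodes}) == 1


def docker_ce_ok(version):
    """True if the Docker CE version meets the recommended minimum."""
    return version_tuple(version) >= MIN_DOCKER_CE


print(os_status("Ubuntu", "22.04.2"), docker_ce_ok("20.10.7"))
```

The version strings would typically come from each node's OS release information and from the Docker version reported on the control host; how you collect them is outside the scope of this sketch.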

Disk Requirements

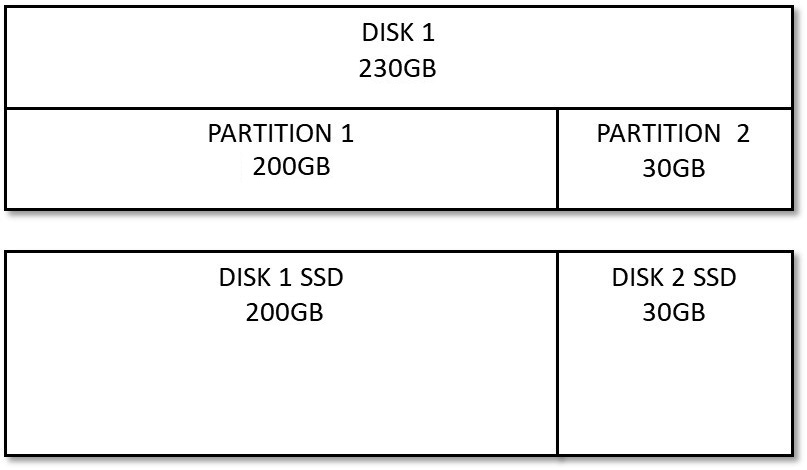

The following disk requirements apply to the primary and worker nodes, in both single-node and multinode deployments:

- Disk must be SSD.

- Required partitions:

  - Root partition:

    You must mount the root partition at /.

    You can create one single root partition with at least 200-GB space.

    Alternatively, you can create a root partition with at least 50-GB space and a data partition with at least 150-GB space. You must also bind-mount the system directories /var/local, /var/lib/rancher, and /var/lib/registry. For example:

        # mkdir -p /export/rancher /var/lib/rancher /export/registry /var/lib/registry /export/local /var/local
        # vi /etc/fstab
        [...]
        /export/rancher /var/lib/rancher none bind 0 0
        /export/registry /var/lib/registry none bind 0 0
        /export/local /var/local none bind 0 0
        [...]
        # mount -a

    You use the data partition mounted at /export for Postgres, ZooKeeper, Kafka, and Elasticsearch. You use the data partition mounted at /var/local for Paragon Insights InfluxDB.

  - Ceph partition:

    The unformatted partition for Ceph storage must have at least 30-GB space.

    Note: Instead of using this partition, you can use a separate unformatted disk with at least 30-GB space for Ceph storage.
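The two partition layouts described above can be summarized in a short validation sketch. The Python below is illustrative only: `check_disk_layout` and `fstab_lines` are hypothetical helper names, sizes are assumed to be given in GB, and the bind-mount pairs are the three shown in the example in this section.

```python
# Bind mounts from the example above: source under /export -> system directory.
BIND_MOUNTS = {
    "/export/rancher": "/var/lib/rancher",
    "/export/registry": "/var/lib/registry",
    "/export/local": "/var/local",
}


def check_disk_layout(root_gb, ceph_gb, data_gb=None):
    """Return a list of problems with a planned partition layout.

    Pass data_gb=None for the single-root-partition layout; pass a size
    for the split layout with a separate data partition.
    """
    problems = []
    if data_gb is None:
        # Single root partition: at least 200 GB.
        if root_gb < 200:
            problems.append("single root partition needs at least 200 GB")
    else:
        # Split layout: root at least 50 GB, data at least 150 GB.
        if root_gb < 50:
            problems.append("root partition needs at least 50 GB")
        if data_gb < 150:
            problems.append("data partition needs at least 150 GB")
    # Ceph: unformatted partition or separate disk, at least 30 GB.
    if ceph_gb < 30:
        problems.append("Ceph partition or disk needs at least 30 GB")
    return problems


def fstab_lines():
    """Build the /etc/fstab bind-mount entries for the split layout."""
    return [f"{src} {dst} none bind 0 0" for src, dst in BIND_MOUNTS.items()]


print(check_disk_layout(root_gb=50, ceph_gb=30, data_gb=150))
print("\n".join(fstab_lines()))
```

The sketch checks sizes only. It does not verify that the disk is an SSD or that the Ceph partition is actually unformatted.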

Network Requirements

- All nodes must run NTP or another time-synchronization service at all times.

- An SSH server must be running on all nodes. You need a common SSH username and password for all nodes.

- You must configure DNS on all nodes, and make sure all the nodes (including the Ansible control host node) are synchronized.

- All nodes need an Internet connection. If the cluster nodes do not have an Internet connection, you can use the air-gap method for installation. The air-gap method is supported on nodes with Ubuntu and RHEL as the base OS.

- You must allow intercluster communication between the nodes. In particular, you must keep the ports listed in Table 3 (Ports That Firewalls Must Allow) open for communication. Ensure that you check for any iptables entry on the servers that might be blocking any of these ports.

  Table 3: Ports That Firewalls Must Allow

  | Port Numbers | Purpose |
  |---|---|
  | Enable these ports on all cluster nodes for administrative user access. | |
  | 80 | HTTP (TCP) |
  | 443 | HTTPS (TCP) |
  | 7000 | Paragon Planner communications (TCP) |
  | Enable these ports on all cluster nodes for communication with network elements. | |
  | 67 | ztpservicedhcp (UDP) |
  | 161 | SNMP, for telemetry collection (UDP) |
  | 162 | ingest-snmp-proxy-udp (UDP) |
  | 11111 | hb-proxy-syslog-udp (UDP) |
  | 4000 | ingest-jti-native-proxy-udp (UDP) |
  | 830 | NETCONF communication (TCP) |
  | 7804 | NETCONF callback (TCP) |
  | 4189 | PCEP Server (TCP) |
  | 30000-32767 | Kubernetes port assignment range (TCP) |
  | Enable communication between cluster nodes on all ports. At the least, open the following ports. | |
  | 6443 | Communicate with worker nodes in the cluster (TCP) |
  | 3300 | ceph (TCP) |
  | 6789 | ceph (TCP) |
  | 6800-7300 | ceph (TCP) |
  | 6666 | calico etcd (TCP) |
  | 2379 | etcd client requests (TCP) |
  | 2380 | etcd peer communication (TCP) |
  | 9080 | cephcsi (TCP) |
  | 9081 | cephcsi (TCP) |
  | 7472 | metallb (TCP) |
  | 7964 | metallb (TCP) |
  | 179 | calico (TCP) |
  | 10250-10256 | Kubernetes API communication (TCP) |
  | Enable this port between the control host and the cluster nodes. | |
  | 22 | TCP |
  | 9345 | Kubernetes RKE2 control plane (TCP) |
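One way to spot-check TCP reachability for the ports listed above is a simple connection probe. The following Python sketch uses only the standard `socket` module. The function names and the example host name are hypothetical, the port list is a subset of the single TCP ports in the table (ranges and UDP ports are not covered), and a failed connection can mean either that a firewall blocks the port or that no service is listening on it yet.

```python
import socket

# Single TCP ports from the "communication between cluster nodes" group
# in the table above; port ranges and UDP ports are not covered here.
INTER_NODE_TCP_PORTS = [
    6443, 3300, 6789, 6666, 2379, 2380, 9080, 9081, 7472, 7964, 179,
]


def tcp_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and resolution failures.
        return False


def unreachable_ports(host, ports, timeout=1.0):
    """Return the subset of ports on host that did not accept a connection."""
    return [p for p in ports if not tcp_port_open(host, p, timeout)]
```

For example, calling `unreachable_ports("node1.example.net", INTER_NODE_TCP_PORTS)` from another cluster node returns the ports that did not accept a connection; the host name here is a placeholder. Because a closed port is indistinguishable from an idle one in this probe, the result is most meaningful after the cluster services are running.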

Web Browser Requirements

Table 4 lists the 64-bit Web browsers that Paragon Automation supports.

| Browser | Supported Versions | Supported OS Versions |
|---|---|---|
| Chrome | 85 and later | Windows 10 |
| Firefox | 79 and later | Windows 10 |
| Safari | 14.0.3 | macOS 10.15 and later |

Installation on VMs

You can install Paragon Automation on virtual machines (VMs). You can create the VMs on any hypervisor, but they must fulfill all the size, software, and networking requirements described in this topic.

The VMs must have the recommended base OS installed. The installation process for VMs and bare-metal servers is the same.