Customer Success Story

SambaNova makes high-performance, compute-bound machine learning easy and scalable

AI promises to transform healthcare, financial services, manufacturing, retail, and other industries, but many organizations seeking to improve the speed and effectiveness of human efforts have yet to reach the full potential of AI.

To overcome the difficulty of building complex, compute-bound machine learning (ML) systems, SambaNova engineered DataScale. Designed using SambaNova Systems’ Reconfigurable Dataflow Architecture (RDA) and built using open standards and user interfaces, DataScale is an integrated software and hardware systems platform optimized from algorithms to silicon. Juniper switching moves massive volumes of data for SambaNova’s DataScale systems and services.

Enable customers to deploy AI in days, not months

Accelerate time to value for AI systems

Augment in-house ML expertise

Accelerate high-performance ML model building across industries

Realizing the full value of computer vision, natural language processing, recommendation systems, and other AI-driven applications has been limited to the organizations with the most resources and talent.

And while most organizations would rather concentrate on developing, training, and running their AI systems, they often take a do-it-yourself (DIY) approach to building ML infrastructure from GPUs and clusters. Integrating the perfect mix of hardware and software can take months of painstaking, expert work, delaying time to value for their AI initiatives.

SambaNova set out to change the game for AI-driven application development and deployment.

SambaNova Puts an End to DIY Compute-Bound AI

SambaNova lifts the heavy burden of building ML infrastructure and delivers greater capabilities and efficiency for ML model training, inference, and high-performance computing.

SambaNova DataScale is an integrated software and hardware systems platform with optimized algorithms and next-generation processors. At its heart is the SambaNova Reconfigurable Dataflow Unit (RDU), a chip designed to let ML models run freely, without the system bottlenecks that can hamper traditional compute models.

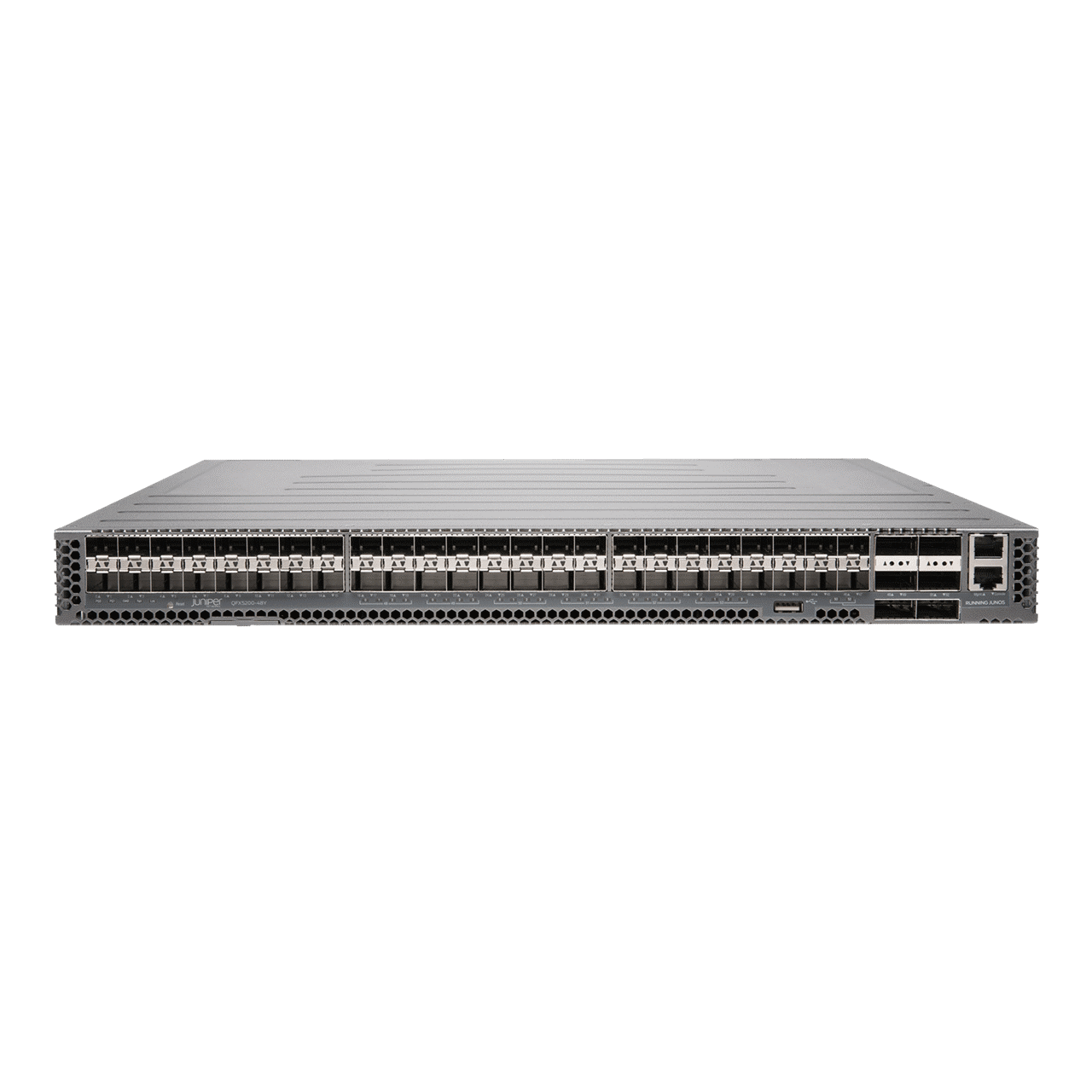

Juniper QFX5200 Switches form DataScale’s network fabric, providing 100Gbps interfaces for server and intrafabric connectivity.

Ultimately, SambaNova unleashes massive amounts of compute for ML workloads. A quarter-rack system can deliver hundreds of petaflops of compute. And for organizations that prefer a cloud service, there is SambaNova Dataflow-as-a-Service available via SambaNova’s cloud service provider partnership.

Ready for next-generation ML workloads and beyond

Training a computer vision model that detects cancer may require the processing of thousands of high-resolution images. Without massive bandwidth readily available, image quality—and thus accuracy of the AI models—is reduced. When AI is helping radiologists read patient scans for breast cancer or emergency room doctors rapidly diagnose a collapsed lung, tiny reductions in quality can have a huge impact on patient care.

Scientists and researchers have been among the first to experience the advantages of SambaNova’s purpose-built AI infrastructure, but adoption is growing across industries, including manufacturing and financial services.

Lawrence Livermore National Laboratory and Los Alamos National Laboratory use the Corona supercomputer with SambaNova attached to accelerate AI and high-performance compute workloads for COVID-19 drug discovery. Incredibly, the team got SambaNova running in one weekend, with internal tests showing performance 5X better than a traditional GPU architecture.

Argonne National Laboratory, another SambaNova customer, had its first AI model up and running on SambaNova in a mere 45 minutes. And it’s seeing orders of magnitude higher performance than individual GPUs, with plenty of headroom for growth.

Published October 2021