
Solution Architecture

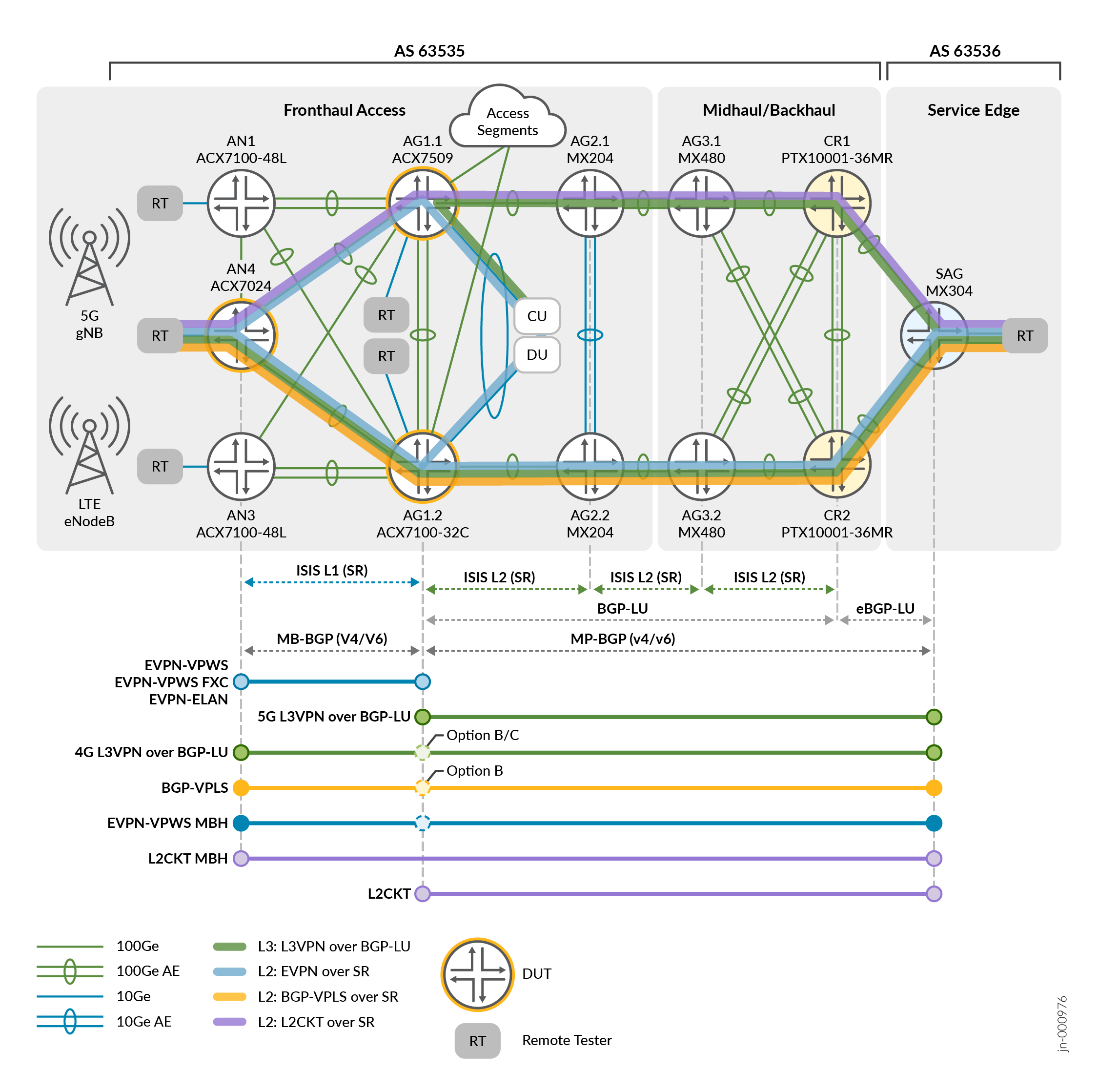

The solution architecture deploys a spine-leaf access fronthaul topology combined with midhaul/backhaul ring topologies, which include the aggregation and core roles, with the services gateway completing the xHaul infrastructure. While the QoS implementation is generally transferable across multiple network designs, the end-to-end JVD solution is built from the following key components:

- 5G xHaul MBH reference architecture

- Seamless MPLS across xHaul IGP domains (Inter-AS and Inter-Domain BGP-LU)

- Segment Routing L-IS-IS

- Fast failover and detection mechanisms: TI-LFA, BFD, microloop avoidance, OAM, and so on

- Redundant route reflectors

- Community-based route optimizations

- Inter-AS Option B/C

- EVPN-VPWS and Flexible Cross Connect (FXC) with A/A Multihoming

- EVPN-ELAN with A/A Multihoming and EVPN Virtual Gateway Address (VGA) IRB

- BGP-VPLS single-homed

- L2Circuit MBH

- L3VPN

Figure 1 describes the end-to-end service architecture for the joint 4G/5G solution, in which the hub site AG1.1 and AG1.2 routers also aggregate the L3 MBH network, collapsing the 4G Pre-Aggregation and 5G HSR roles. VPN PE functions are supported in parallel with Inter-AS Option-B and Option-C procedures. AG1.1/AG1.2 nodes provide O-DU connectivity and additional access segment insertion points to emulate network attachments at increased scale.

The network underlay comprises interdomain Seamless MPLS SR with BGP Labeled Unicast (BGP-LU). Access nodes (AN) are placed in an IS-IS Level 1 domain with adjacencies to the L1/L2 HSR (AG1) nodes, and the Level 2 domain extends from the aggregation (AG2, AG3) segments to the core (CR) segment. Seamless MPLS is achieved by enabling BGP-LU at the border nodes. TI-LFA loose-mode node redundancy is enabled within each domain. The environment is scaled by two sets of route reflectors at CR1 and CR2, with the westward HSR (AG1) nodes as clients. AG1.1 and AG1.2 serve as the redundant route reflectors for the access fronthaul segment. Multiprotocol BGP peering between the SAG and the HSR (AG1) supports the inter-AS Option-B solution.

Services overlay incorporates modern and legacy VPNs, including EVPN, L3VPN, BGP-VPLS, and L2Circuit, with enhancements for Flow Aware Transport Pseudowire Label (FAT-PW) and Ethernet OAM where applicable.

Overall, the types of traffic flows represented in the topology include:

- 5G fronthaul Layer 2 eCPRI between O-RU and O-DU

- 5G midhaul and backhaul Layer 3 IP packet flows between the 5G O-CU and the UPF/5GC

- Layer 3 IP flows supporting the 5G Open Fronthaul Management Plane and the midhaul/backhaul Control and User Planes

- 4G L3 MBH IP packet flows between the 4G CSR and the EPC (SAG)

- 4G L2 wholesale MBH flows between the CSR (AN) and the EPC (SAG)

The 7.2x split includes Layer 2 EVPN 5G fronthaul traffic flows from O-RU to O-DU and L3VPN flows from the O-CU to the UPF at the SAG. The architecture supports additional functional splits by enabling the required connectivity from the AG2 or AG3 segments.

Service Profiles

The following profiles describe the VPN services included in the JVD and how they correlate to the 4G and 5G use cases. The mapping is not absolute; operators might select different VPN technologies to support services across the xHaul. However, EVPN-VPWS is the primary delivery mechanism for critical 5G fronthaul flows, with L3VPN serving C/U-plane and M-plane communications across the fronthaul, midhaul, and backhaul segments.

Additional VPN services are shown to represent potential points of connectivity, such as O-RU emulation or attachment of access regions. This might include inter-region VPN services (not requiring transport back to SAG) or connectivity into additional Telco Cloud complexes, which might be facilitated at the HSR.

| Use Case | Service Overlay Mapping | Endpoints |
|---|---|---|
| 5G Fronthaul | Fronthaul CSR-HSR EVPN-VPWS Single-Homing with E-OAM Performance Monitoring and FAT-PW | AN4 - AG1.1/AG1.2 |
| 5G Fronthaul | Fronthaul CSR-HSR EVPN-VPWS with active-active Multihoming | AN4 - AG1.1/AG1.2 |
| 5G Fronthaul | Fronthaul CSR-HSR EVPN-ELAN with active-active Multihoming | AN4 - AG1.1/AG1.2 |
| 5G Fronthaul | Fronthaul CSR-HSR EVPN-VPWS Flexible Cross Connect (FXC) Single-Homing with E-OAM Performance Monitoring | AN4 - AG1.1/AG1.2 |
| 5G Fronthaul | Fronthaul CSR-HSR EVPN-VPWS Flexible Cross Connect (FXC) with active-active Multihoming | AN4 - AG1.1/AG1.2 |
| 5G Fronthaul | Fronthaul CSR-HSR L3VPN for M-Plane | AN4 - AG1.1/AG1.2 |
| 5G Midhaul | EVPN IRB anycast gateway with L3VPN Multihoming DU/HSR to SAG | AG1.1/AG1.2 - SAG |
| 5G Midhaul | Bridge Domain IRB anycast static MAC/IP with L3VPN Multihoming DU/HSR to SAG | AG1.1/AG1.2 - SAG |
| L2 MBH | End-to-End L2Circuit CSR to SAG with FAT-PW | AN4 - SAG |
| L2 MBH | End-to-End Single-Homing EVPN-VPWS CSR to SAG with E-OAM and FAT-PW | AN4 - SAG |
| L2 MBH | End-to-End Single-Homing BGP-VPLS CSR to SAG with E-OAM and FAT-PW | AN4 - SAG |
| L3 MBH | End-to-End L3VPN CSR to SAG | AN4 - SAG |

Traffic Types

The following table lists example traffic types, their scheduling priority, and the forwarding classes they are mapped to.

| Forwarding Class | Priority | Traffic Examples |

|---|---|---|

| Q7: FC-SIGNALING | strict-high | OAM aggressive timers, O-RAN/3GPP C-plane |

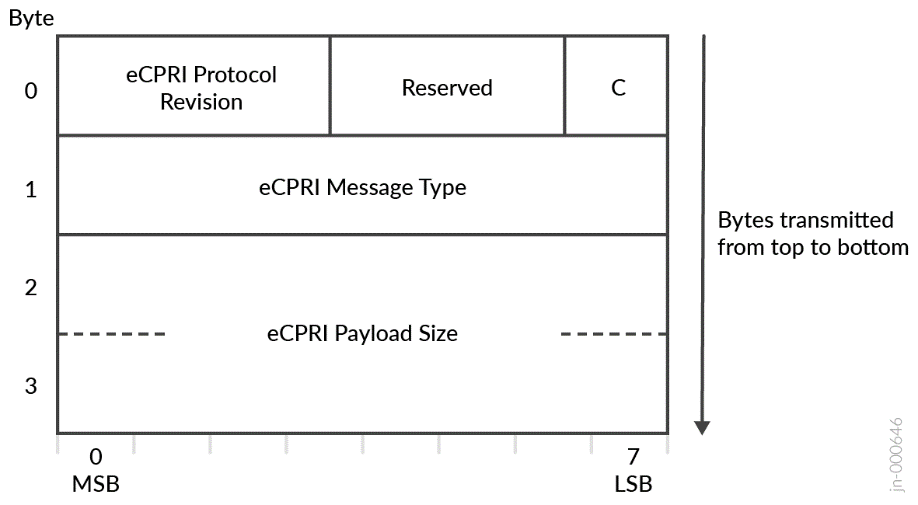

| Q6: FC-LLQ | low-latency | CPRI RoE, eCPRI C/U-Plane ≤2000 bytes |

| Q5: FC-REALTIME | medium-high | 5QI/QCI Group 1 low-latency U-plane, low latency business. Interactive video, low latency voice |

| Q4: FC-HIGH | low | 5QI/QCI Group 2 medium latency U-plane data |

| Q3: FC-CONTROL | high | Network control: OAM relaxed timers, IGP, BGP, PTP aware mode, and so on. |

| Q2: FC-MEDIUM | low | 5QI/QCI Group 3 remainder GBR U-plane guaranteed business data. Video on-demand. O-RAN/3GPP M-plane, e.g., eCPRI M-plane, other management, software upgrades |

| Q1: FC-LOW | low | high latency, guaranteed low priority data |

| Q0: FC-BEST-EFFORT | low-remainder | remainder non-GBR U-plane data |

Service Carve Out

Fronthaul traffic flows are prioritized according to their latency budgets and their low tolerance for delay and jitter. As a best practice, delay-critical eCPRI traffic is assigned the highest priority queue, which handles low-latency workloads. Midhaul and backhaul services generally have less stringent requirements but might include URLLC use cases, such as delay-sensitive real-time video. The JVD takes these aspects into account.

The VPN services required to complete the essential 5G xHaul communications include:

- EVPN-VPWS for fronthaul C/U Plane

- L3VPN for fronthaul management plane

- L3VPN for midhaul/backhaul control plane

- L3VPN for midhaul/backhaul data plane

The environment is enhanced with additional VPN services at scale, which exercise performance and emulate the expansion of additional network segments, for example, a CSR supporting many O-RUs.

| VPN Service | Segment | Classification Type | Forwarding Classes |
|---|---|---|---|
| EVPN-VPWS | Fronthaul | Fixed | FC-LLQ |
| EVPN-VPWS | Fronthaul | Multifield | FC-LLQ, FC-SIGNALING, FC-CONTROL, FC-REALTIME, FC-HIGH, FC-BEST-EFFORT |
| EVPN-FXC | Fronthaul | BA | FC-SIGNALING, FC-CONTROL, FC-REALTIME, FC-HIGH, FC-MEDIUM, FC-LOW, FC-BEST-EFFORT |
| EVPN-ELAN | Fronthaul | BA | FC-SIGNALING, FC-CONTROL, FC-REALTIME, FC-HIGH, FC-MEDIUM, FC-LOW, FC-BEST-EFFORT |
| L3VPN | Fronthaul | BA | FC-SIGNALING, FC-CONTROL, FC-REALTIME, FC-HIGH, FC-MEDIUM, FC-LOW, FC-BEST-EFFORT |
| L3VPN | Midhaul | Fixed | FC-REALTIME |
| L3VPN | Midhaul | BA | FC-LLQ, FC-SIGNALING, FC-CONTROL, FC-REALTIME, FC-HIGH, FC-MEDIUM, FC-LOW, FC-BEST-EFFORT |
| L3VPN | Midhaul | Multifield | FC-REALTIME, FC-HIGH, FC-MEDIUM, FC-LOW |
| L2Circuit | MBH | Fixed | FC-HIGH, FC-MEDIUM, FC-LOW |
| EVPN-VPWS | MBH | BA | FC-SIGNALING, FC-CONTROL, FC-REALTIME, FC-HIGH, FC-MEDIUM, FC-LOW, FC-BEST-EFFORT |
| BGP-VPLS | MBH | BA | FC-HIGH, FC-MEDIUM, FC-LOW |
| L3VPN | MBH | BA | FC-SIGNALING, FC-CONTROL, FC-REALTIME, FC-HIGH, FC-MEDIUM, FC-LOW, FC-BEST-EFFORT |

Class of Service Architecture

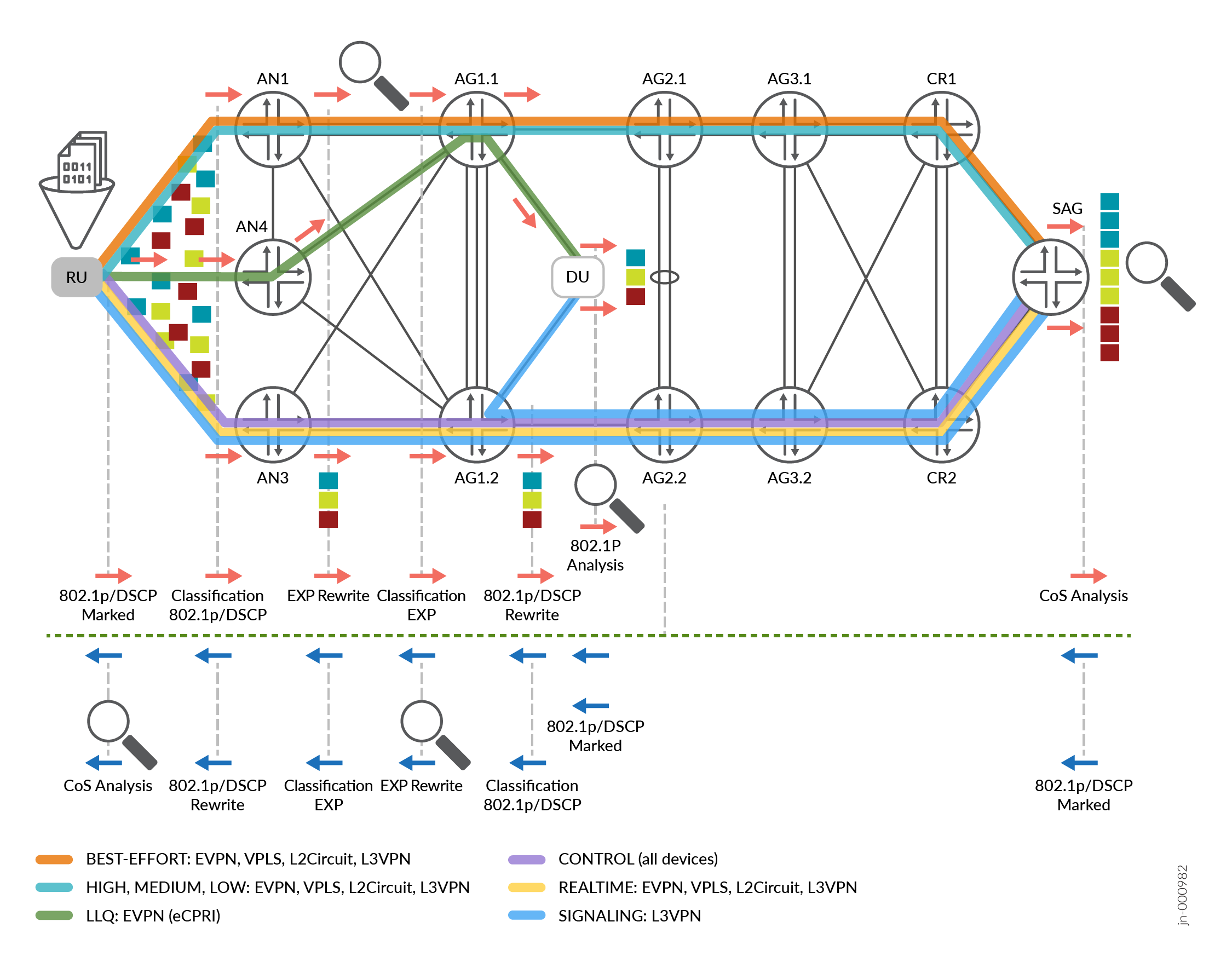

Flows are sent through the access nodes (Figure 2) toward the O-DU or SAG with Layer 2 (802.1p) or Layer 3 (DSCP) markings, are classified at the positions marked as classification points, and are rewritten to EXP at egress across the SR-MPLS topology. Queue statistics are monitored to confirm that the intended classification and scheduling produce the expected results. Rewrite operations occur at the specified positions, and packet captures are taken to verify that DSCP, 802.1p, or EXP bits are correctly rewritten or preserved. In the reverse direction, flows sent through the SAG are marked and then validated as they egress the access nodes.
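To make the marking path concrete, the following Python sketch traces one flow through the CoS points described above: BA classification at the service ingress (DSCP preferred over 802.1p), rewrite to EXP toward the SR-MPLS core, EXP-based classification on transit nodes, and an outer-tag 802.1p rewrite at egress. The code points are taken from the priority-mapping table in the Classification section; the function names, the fixed number of transit hops, and the best-effort default for unmarked traffic are hypothetical and only model the intended behavior, not a device implementation.

```python
# Code points from the JVD priority-mapping table. The table uses the same
# 3-bit values for 802.1p and MPLS EXP, so one map serves both.
FC_TO_BITS = {
    "FC-SIGNALING": "110",
    "FC-LLQ": "100",
    "FC-REALTIME": "101",
    "FC-HIGH": "011",
    "FC-CONTROL": "111",
    "FC-MEDIUM": "010",
    "FC-LOW": "001",
    "FC-BEST-EFFORT": "000",
}
BITS_TO_FC = {bits: fc for fc, bits in FC_TO_BITS.items()}

DSCP_TO_FC = {
    "cs6": "FC-SIGNALING", "cs5": "FC-SIGNALING",
    "cs4": "FC-LLQ", "af41": "FC-LLQ", "af42": "FC-LLQ", "af43": "FC-LLQ",
    "ef": "FC-REALTIME",
    "cs3": "FC-HIGH", "af31": "FC-HIGH", "af32": "FC-HIGH", "af33": "FC-HIGH",
    "cs7": "FC-CONTROL",
    "cs2": "FC-MEDIUM", "af21": "FC-MEDIUM", "af22": "FC-MEDIUM", "af23": "FC-MEDIUM",
    "cs1": "FC-LOW", "af11": "FC-LOW", "af12": "FC-LOW", "af13": "FC-LOW",
    "be": "FC-BEST-EFFORT",
}


def classify_service_ingress(dscp=None, pcp=None):
    """BA classification on a CE-facing interface; DSCP wins when both are present."""
    if dscp is not None:
        return DSCP_TO_FC[dscp]
    if pcp is not None:
        return BITS_TO_FC[pcp]
    return "FC-BEST-EFFORT"  # assumed default for unmarked traffic


def trace(dscp=None, pcp=None, transit_hops=2):
    """Return (CoS point, forwarding class, marking) tuples along one direction."""
    fc = classify_service_ingress(dscp, pcp)
    path = [("ingress-classify", fc, dscp if dscp is not None else pcp)]
    exp = FC_TO_BITS[fc]  # rewrite to EXP toward the SR-MPLS core
    path.append(("ingress-rewrite-exp", fc, exp))
    for hop in range(1, transit_hops + 1):
        fc = BITS_TO_FC[exp]  # transit BA classification on the outer-label EXP
        path.append((f"transit-{hop}", fc, exp))
    path.append(("egress-rewrite-8021p", fc, FC_TO_BITS[fc]))  # outer-tag 802.1p rewrite
    return path


if __name__ == "__main__":
    for step in trace(dscp="af41"):
        print(step)
```

For an AF41-marked flow, every point reports FC-LLQ with EXP and 802.1p value 100, which is the property the packet captures are meant to confirm.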

The 5G CoS LLQ JVD builds on previous validated designs featuring the ACX, MX, and PTX platform families and focuses on delivering differentiated services that require ultra-low latency. This profile uses ACX7000 capabilities in the fronthaul to dedicate queuing resources to low-latency workloads. LLQ enables a more comprehensive O-RAN traffic profile with multi-level priority QoS.

The MX304 (SAG) and PTX10001-36MR (core) nodes implement a corresponding configuration to support the intended use cases and diverse services.

Figure 2 is a generalized view of the end-to-end traffic that distinguishes fronthaul from backhaul and shows the points where critical CoS functions (classification, scheduling, and rewrite) are validated. The diagram does not represent the precise per-flow path selection used in the JVD. The packet capture points for further inspection are also indicated.

The main objective is to verify that critical and noncritical traffic flows are handled predictably across 5G xHaul network services. CoS functionality is validated across EVPN, L3VPN, BGP-VPLS, and L2Circuit VPNs, aligned with 5QI traffic classification. The QoS implementation should behave deterministically. The transport architecture must be able to support existing and emerging mobile applications, preserving delay budget integrity while guaranteeing traffic priorities.

Test Topologies for Latency Measurement

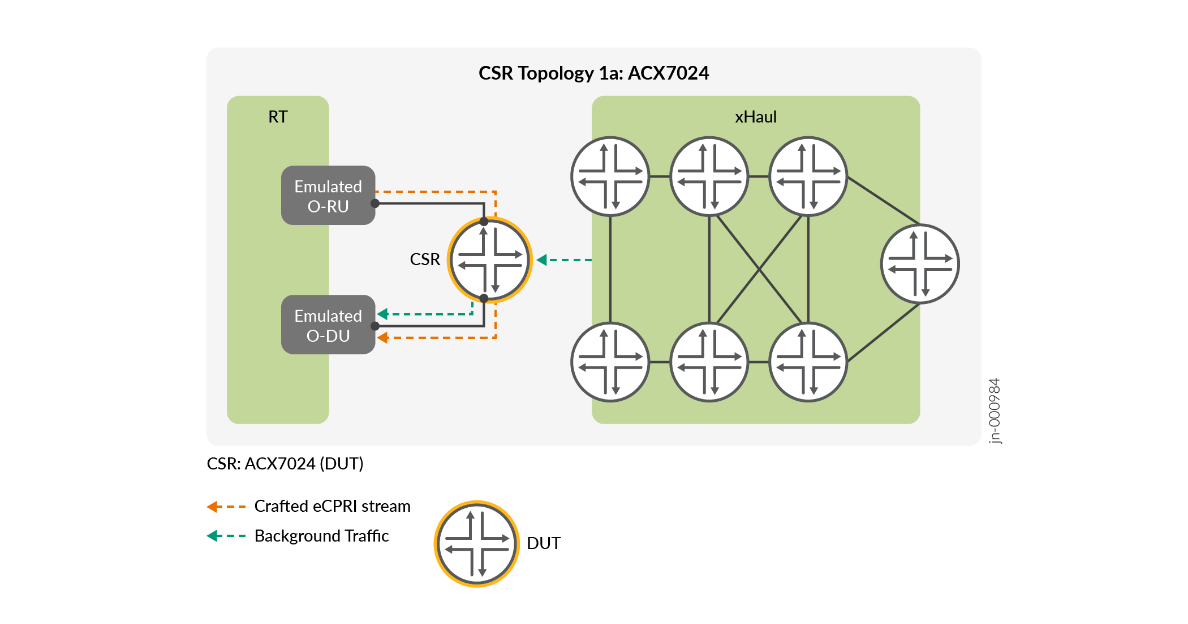

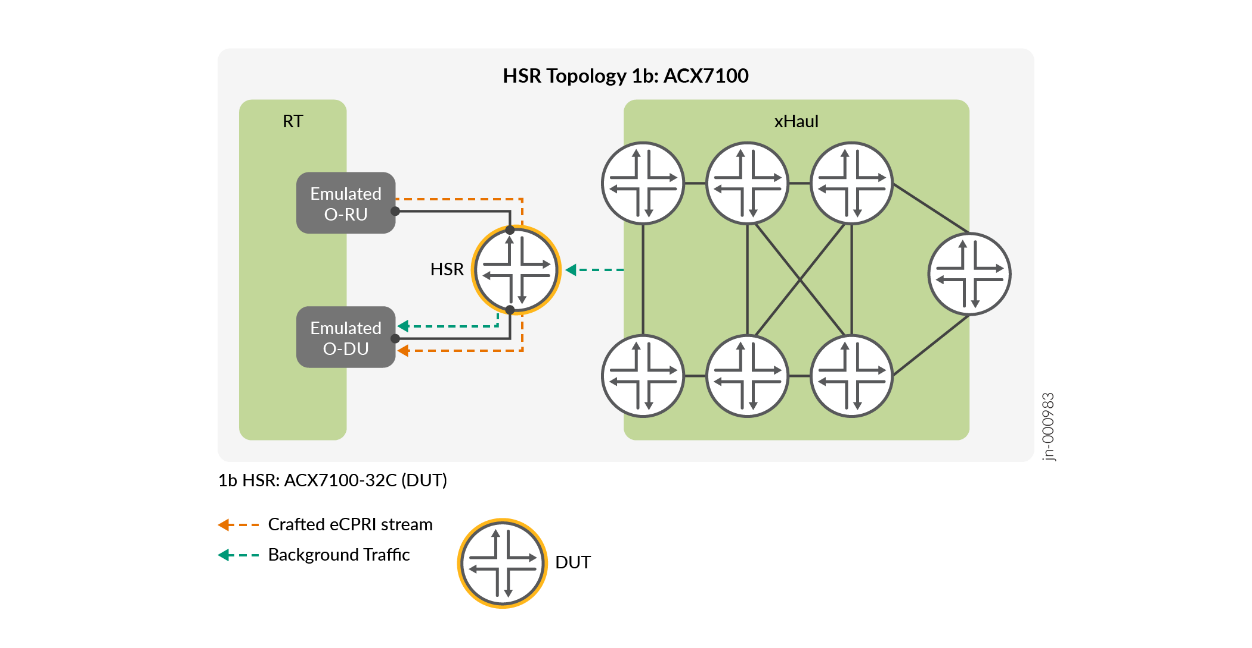

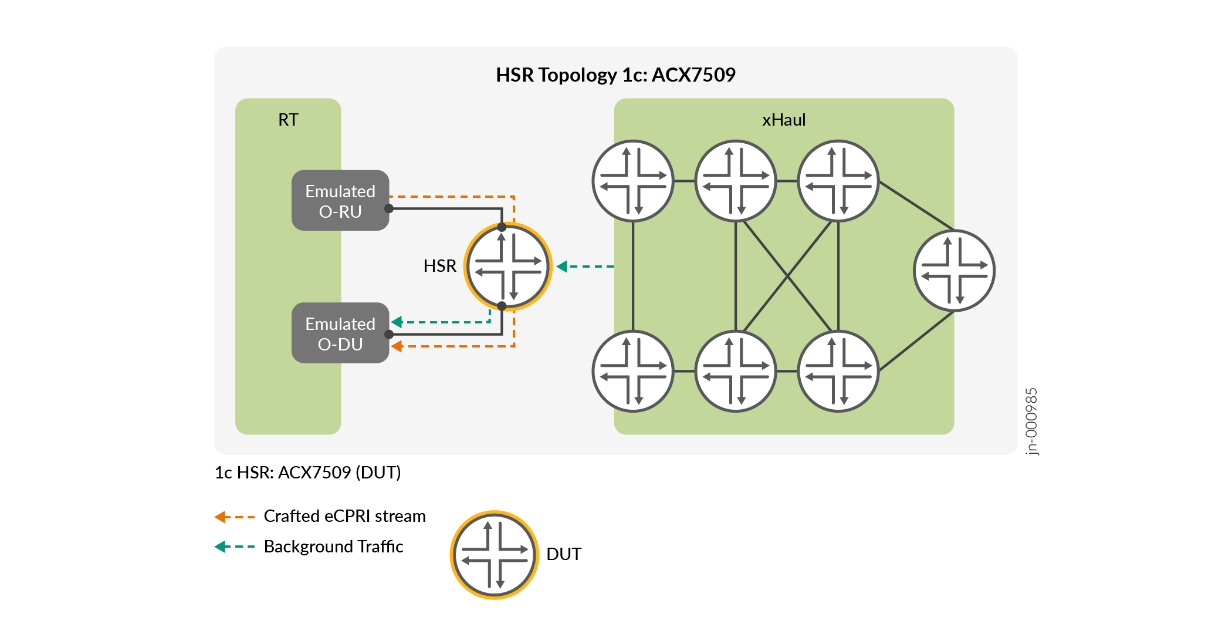

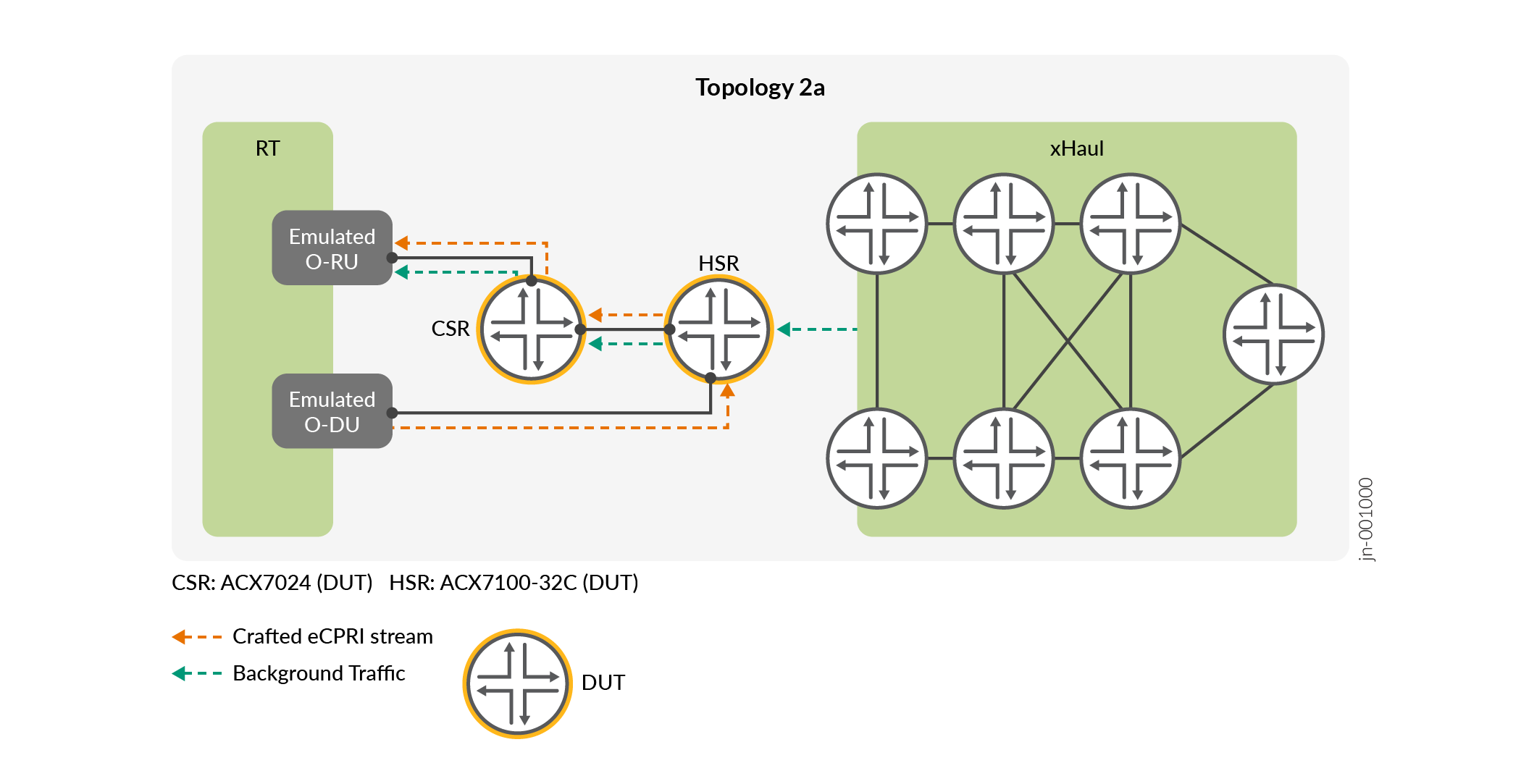

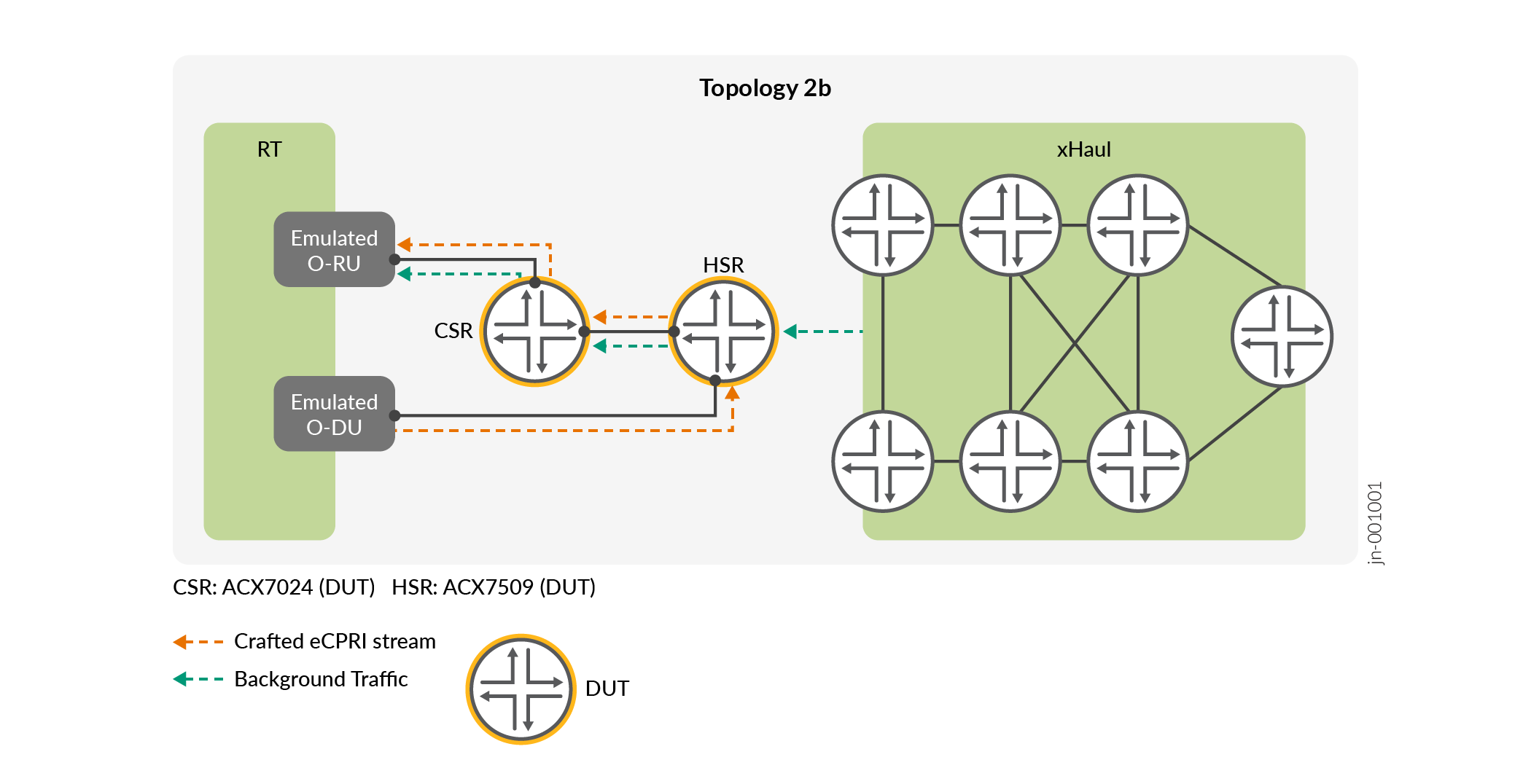

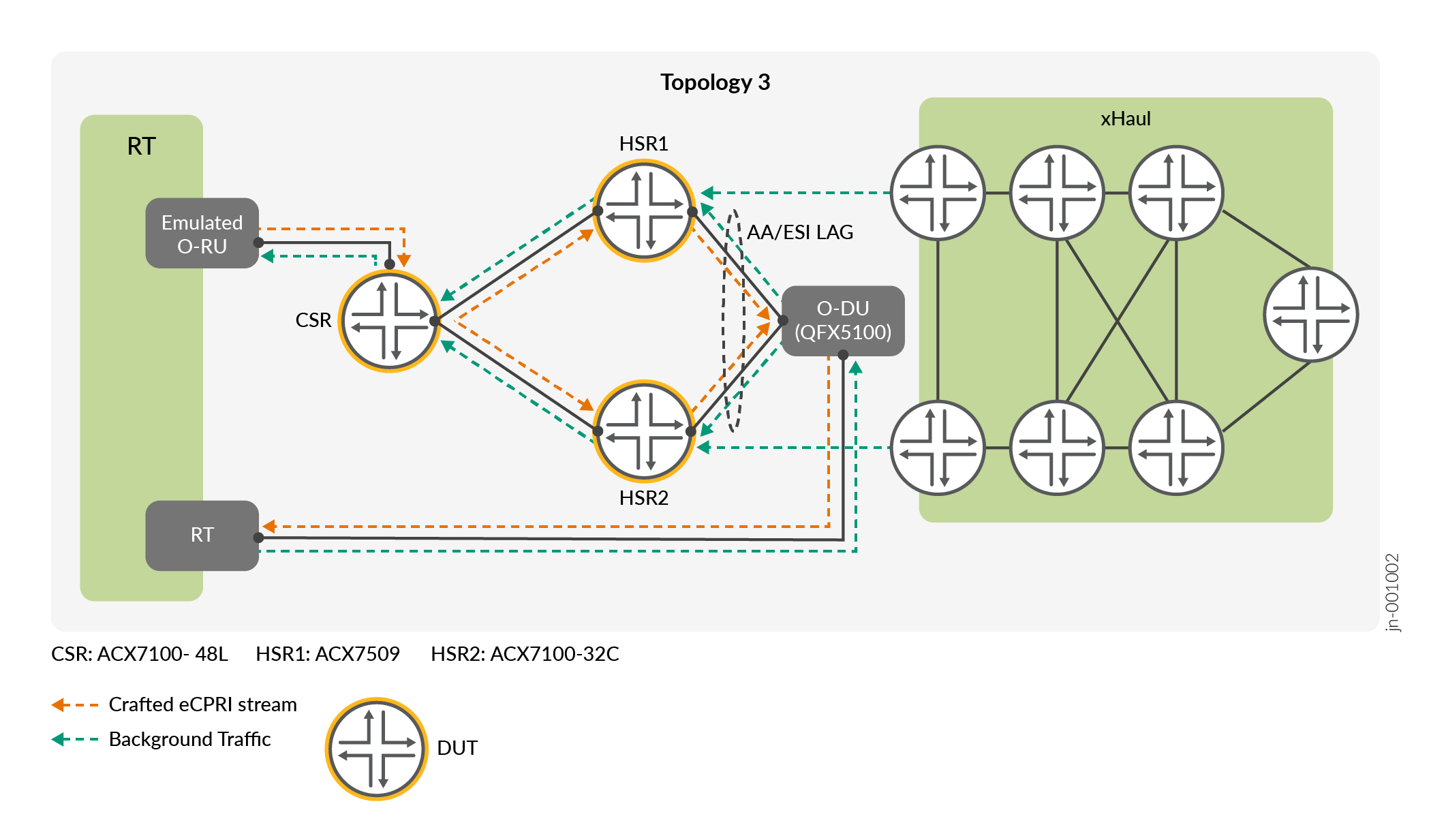

The JVD proposes multiple topologies to measure and report on latency performance for each DUT and across the fronthaul and backhaul network segments. This approach captures meaningful data on device performance across different functional roles and topologies.

- Topology 1 validates individual DUT performance in a CSR or HSR role. This topology provides the most accurate performance measurement for the individual device.

- Topology 2 measures performance over point-to-point EVPN-VPWS services between CSR and HSR. ACX7024 acts as the CSR DUT, with ACX7100-32C or ACX7509 as HSR DUTs. The test equipment emulates the O-RU and O-DU.

- Topology 3 measures performance across EVPN-VPWS active-active multihoming connectivity from a single CSR DUT to a pair of HSRs, using an all-active ESI LAG toward the DU (QFX5120).

Critical flows are crafted to emulate fronthaul traffic patterns with both burst and steady streams (each pattern is validated) across a variety of packet sizes. Fronthaul packet sizes range from 64B to 2000B, with a maximum frame size on the wire of 2020 bytes, which includes the 7-byte Preamble, 1-byte Start of Frame Delimiter (SFD), and 12-byte Inter Frame Gap (IFG). Each test scenario includes fronthaul priority flows, and performance measurements are taken per frame size.
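The 2020-byte maximum corresponds to a 2000-byte frame plus 20 bytes of per-frame wire overhead. The following sketch assumes the standard Ethernet split of that overhead (7-byte preamble, 1-byte SFD, 12-byte minimum IFG) and computes the wire size and serialization time of test frames at a few port speeds; the port speeds are illustrative and not taken from the test plan.

```python
# Per-frame Ethernet wire overhead, assuming the standard minimum IFG.
PREAMBLE_BYTES = 7
SFD_BYTES = 1
IFG_BYTES = 12
OVERHEAD_BYTES = PREAMBLE_BYTES + SFD_BYTES + IFG_BYTES  # 20 bytes


def wire_bytes(frame_bytes: int) -> int:
    """Bytes a frame occupies on the wire, including preamble, SFD, and IFG."""
    return frame_bytes + OVERHEAD_BYTES


def serialization_ns(frame_bytes: int, port_gbps: float) -> float:
    """Time to clock one frame onto the wire; bits / (Gbit/s) gives nanoseconds."""
    return wire_bytes(frame_bytes) * 8 / port_gbps


if __name__ == "__main__":
    for size in (64, 512, 1500, 2000):
        row = ", ".join(
            f"{gbps}G: {serialization_ns(size, gbps):8.1f} ns" for gbps in (10, 25, 100)
        )
        print(f"{size:5d} B ({wire_bytes(size)} B on wire) -> {row}")
```

For example, a 2000-byte fronthaul frame occupies 2020 bytes on the wire and takes about 646 ns to serialize at 25 Gbps, a useful reference point when reading per-frame-size latency results.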

As per TSN Profile A, non-fronthaul traffic should use a maximum frame size of 2000 octets, although the validation also includes scenarios with jumbo frames for comparison. Background traffic is generated with iMIX frame sizes from 64B to 2000B from the SAG (xHaul) toward the O-DU or O-RU to create congestion, which is measured at the converging DUT egress points. Latency reported by the test equipment excludes its own self-latency. Note, however, that where the topology includes a physical DU (QFX Series Switch), that device adds latency, which is counted in the total as a transit next hop.

For more information on 5G specifications, see the Recommended Latency Budgets section.

Topology 1 (a, b, c) is crucial to understanding each DUT's individual latency contribution. It includes flows of various packet sizes with burst and continuous traffic patterns, and its background traffic includes fixed-frame-size and iMIX flows. For more details, refer to the Test Report. The configuration is locally switched on the DUT. The included DUTs are ACX7024 as the primary CSR, ACX7100-32C as HSR1, and ACX7509 as HSR2.

Topology 2 provides single-homed performance data in a 2-hop scenario without a physical RU or DU element (both emulated by the test center).

Topology 3 provides multihomed performance data in a 3-hop scenario, which includes QFX Series Switches as the DU connecting to the all-active ESI LAG. The test center emulates RU.

O-RAN and eCPRI Emulation

The O-RAN and eCPRI test scenarios include several functional permutations with an emulated O-DU and Remote Radio Unit (RRU). Several steps are taken to validate the performance and assurance of 5G eCPRI communications, U-Plane message-type behaviors, and O-RAN conformance. These steps verify that the featured DUTs transport critical 5G services correctly and consistently.

Results are collected across multiple topologies to capture a range of DUT performance characteristics in non-congested and congested scenarios.

In summary, the O-RAN and eCPRI test scenarios are:

- eCPRI O-RAN Emulation: Leverages a standard IQ sample file to generate flows.

- O-RAN Conformance: Analyzes messages for conformance to O-RAN specification.

- Crafted eCPRI O-RAN: Produces variable eCPRI payload for comparing latency performance.

- eCPRI Services Validation: Emulates user plane messages, performing functional and integrity analysis.

- eCPRI Remote Memory Access (Type 4): Performs read or write from or to a specific memory address on the opposite eCPRI node and validates expected success or failure conditions.

- eCPRI Delay Measurement Message (Type 5): Estimates one-way delay between two eCPRI ports.

- eCPRI Remote Reset Message (Type 6): One eCPRI node requests a reset of another node. It validates expected sender or receiver operations.

- eCPRI Event Indication Message (Type 7): Either side of the protocol indicates to the other side that an event has occurred. An event is either a raised or ceased fault or a notification. Validation confirms events raised as expected.
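Each of the message types above is identified in the 4-byte eCPRI common header. The following sketch parses that header (protocol revision, concatenation bit, message type, and payload size) following the eCPRI 2.0 specification layout; it is an illustrative decoder with hypothetical helper names, not the test-center implementation.

```python
import struct
from dataclasses import dataclass

# eCPRI message types 0-7; values 8-63 are reserved and 64-255 are vendor specific.
MESSAGE_TYPES = {
    0: "IQ Data",
    1: "Bit Sequence",
    2: "Real-Time Control Data",
    3: "Generic Data Transfer",
    4: "Remote Memory Access",
    5: "One-Way Delay Measurement",
    6: "Remote Reset",
    7: "Event Indication",
}


@dataclass(frozen=True)
class CommonHeader:
    revision: int        # upper 4 bits of byte 0
    concatenated: bool   # C bit, lowest bit of byte 0
    message_type: int    # byte 1
    payload_size: int    # bytes 2-3, network byte order

    @property
    def type_name(self) -> str:
        if self.message_type in MESSAGE_TYPES:
            return MESSAGE_TYPES[self.message_type]
        return "Reserved" if self.message_type < 64 else "Vendor Specific"


def parse_common_header(data: bytes) -> CommonHeader:
    """Decode the first 4 bytes of an eCPRI message."""
    if len(data) < 4:
        raise ValueError("the eCPRI common header is 4 bytes long")
    first, msg_type, payload_size = struct.unpack("!BBH", data[:4])
    return CommonHeader(first >> 4, bool(first & 0x01), msg_type, payload_size)


def build_common_header(message_type: int, payload_size: int,
                        revision: int = 1, concatenated: bool = False) -> bytes:
    """Encode a common header; the 3 reserved bits are left at zero."""
    first = (revision << 4) | int(concatenated)
    return struct.pack("!BBH", first, message_type, payload_size)
```

When eCPRI is carried directly over Ethernet (EtherType 0xAEFE), the common header immediately follows the EtherType, so a capture filter can dispatch on `type_name` to check, for example, that Type 5 delay-measurement messages are forwarded in the expected forwarding class.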

VLAN Operations

The ACX7000 series supports a comprehensive set of VLAN manipulation operations. For more information on the 80 VLAN combinations tested across L2Circuit, BGP-VPLS, EVPN-VPWS, and EVPN-ELAN services, see the validated design for 5G Mobile xHaul CSR.

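The following table summarizes the VLAN operations supported by ACX EVO-based platforms (UT, ST, and DT denote untagged, single-tagged, and dual-tagged logical interfaces, respectively).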

| IFL Tag Type | Input Map Operation | Output Map Operation | Eth Bridge | VLAN Bridge | Supported IFD Tagging |
|---|---|---|---|---|---|
| UT | None | None | Yes | No | None |
| UT | Push | Pop | Yes | No | None |
| UT | Push-Push | Pop-Pop | Yes | No | None |
| ST | None | None | No | Yes | VLAN and Flex Tagging |
| ST | Push | Pop | No | Yes | VLAN and Flex Tagging |
| ST | Swap | Swap | No | Yes | VLAN and Flex Tagging |
| ST | Pop | Push | No | Yes | VLAN and Flex Tagging |
| ST | Push-Swap | Swap-Pop | No | Yes | VLAN and Flex Tagging |
| DT | None | None | No | Yes | VLAN and Flex Tagging |
| DT | Pop | Push | No | Yes | VLAN and Flex Tagging |
| DT | Swap | Swap | No | Yes | VLAN and Flex Tagging |
| DT | Swap-Swap | Swap-Swap | No | Yes | VLAN and Flex Tagging |
| DT | Pop-Swap | Swap-Push | No | Yes | VLAN and Flex Tagging |
| DT | Pop-Pop | Push-Push | No | Yes | VLAN and Flex Tagging |
| Native ST | None | None | No | Yes | Flexible Tagging |
| Native ST | Push | Pop | No | Yes | Flexible Tagging |
| Native ST | Swap | Swap | No | Yes | Flexible Tagging |
| Native ST | Pop | Push | No | Yes | Flexible Tagging |
| Priority ST | Push | Pop | No | Yes | VLAN and Flex Tagging |
| Priority ST | Swap | Swap | No | Yes | VLAN and Flex Tagging |
| Priority ST | Pop | Push | No | Yes | VLAN and Flex Tagging |

Test scenarios include the following VLAN operations:

- Pop s-tag in outer position (on top of 802.1Q tagged frames)

- Push s-tag (moving c-tag to inner position)

- Swap outer tag

- Classification based on 802.1p priority code point (PCP) bits

- Rewrite outer VLAN tag 802.1p bits

- Preservation of c-tag PCP bits

- Translate or rewrite the VLAN tag of 802.1Q frames

- Multiple untagged, single-tagged, and dual-tagged operations
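The tag operations listed above can be reasoned about as edits to a stack of tags, outermost first. The following Python sketch models push, pop, and swap on that stack, together with outer-tag PCP rewrite and the outer-PCP lookup used for BA classification. It is a generic illustration with hypothetical helper names and example VLAN IDs, not the ACX input/output VLAN map implementation.

```python
from dataclasses import dataclass, replace
from typing import List, Optional


@dataclass(frozen=True)
class Tag:
    vid: int      # VLAN ID, 0-4095
    pcp: int = 0  # 802.1p priority code point, 0-7

    def __post_init__(self):
        if not 0 <= self.vid <= 4095 or not 0 <= self.pcp <= 7:
            raise ValueError(f"invalid tag {self}")


Stack = List[Tag]  # index 0 is the outer tag


def push(tags: Stack, vid: int, pcp: int = 0) -> Stack:
    """Add a new outer tag; any existing tags move one position inward."""
    return [Tag(vid, pcp)] + list(tags)


def pop(tags: Stack) -> Stack:
    """Remove the outer tag."""
    if not tags:
        raise ValueError("cannot pop a tag from an untagged frame")
    return list(tags[1:])


def swap(tags: Stack, vid: int) -> Stack:
    """Replace the outer VLAN ID, keeping its PCP and all inner tags."""
    if not tags:
        raise ValueError("cannot swap a tag on an untagged frame")
    return [replace(tags[0], vid=vid)] + list(tags[1:])


def rewrite_outer_pcp(tags: Stack, pcp: int) -> Stack:
    """Rewrite only the outer tag's 802.1p bits; the inner (c-tag) PCP is preserved."""
    if not tags:
        return []
    return [replace(tags[0], pcp=pcp)] + list(tags[1:])


def outer_pcp(tags: Stack) -> Optional[int]:
    """PCP used for BA classification: the outer tag's bits, or None if untagged."""
    return tags[0].pcp if tags else None
```

In the table, each input map operation appears to be paired with the output map operation that undoes it for the opposite direction; with these helpers that corresponds to identities such as `pop(push(tags, vid)) == tags`.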

For single-tagged and dual-tagged operations, incoming frames are classified based on the PCP bits of the outer 802.1Q tag. All traffic types (single-tagged, dual-tagged, or untagged) are classified into the appropriate forwarding classes, determined by VPN service type, with EVPN (eCPRI and critical fronthaul flows) given the highest priority and lowest latency.

Low Latency Queuing

Junos OS Evolved Release 23.3R1 introduces LLQ for ACX7000 platforms. LLQ gives delay-sensitive traffic preferential treatment by dequeuing it ahead of other traffic.

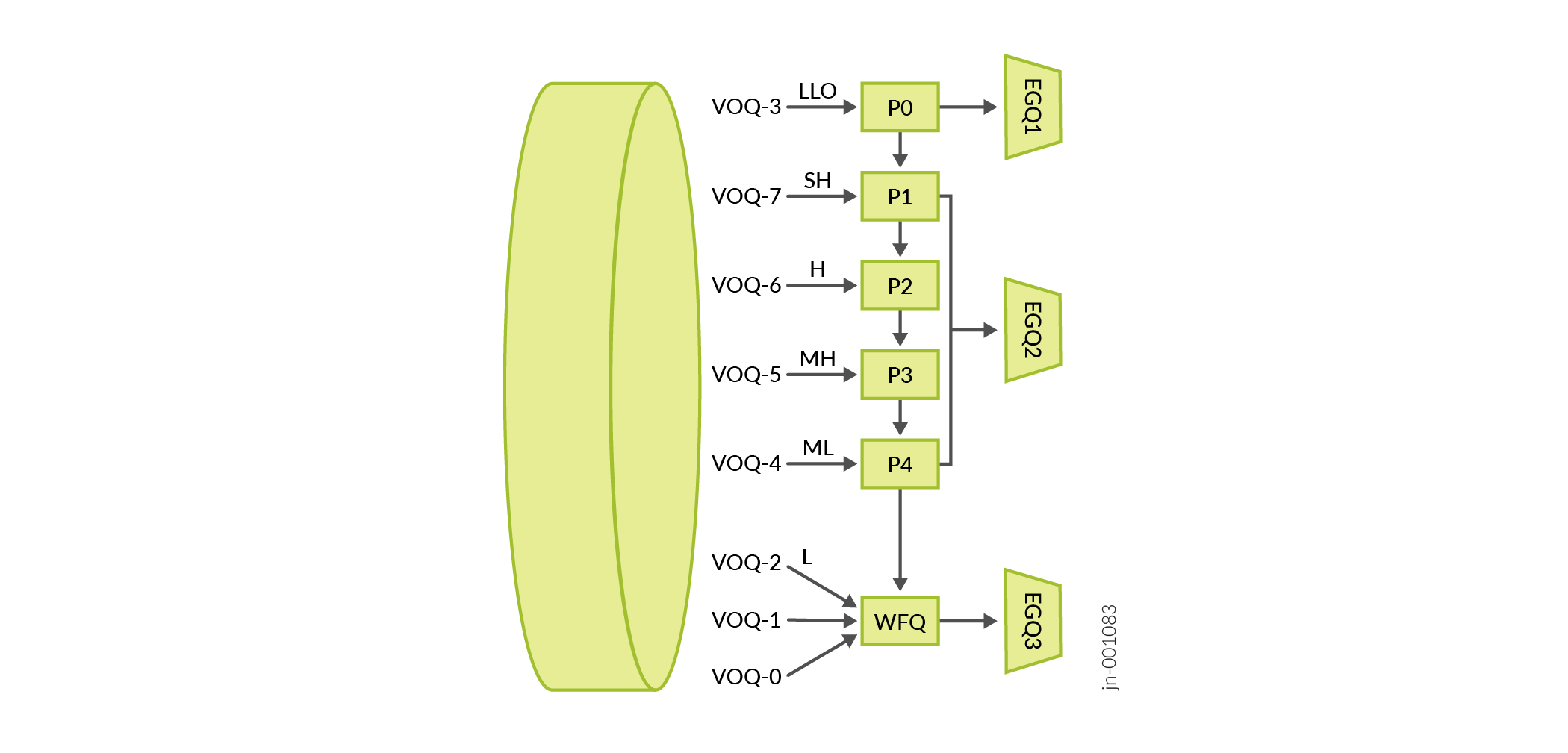

With LLQ, the latency queue is prioritized over all other priority queues to preserve latency. The Virtual Output Queue (VOQ) and Egress Queue (EGQ) priority hierarchy is as follows:

Latency Queues > Priority Queues > Low Queues

With Latency Queuing support, traffic that requires low-latency queuing can be assigned this priority through the CLI:

```
set class-of-service schedulers <scheduler-name> priority low-latency
```

When queues other than the LLQ are congested, LLQ is expected to support an average latency of 10 µs; without congestion, the LLQ mechanics are capable of ≤6 µs. Multiple factors might influence delay. In the validation, LLQ performance exceeded these expectations.

LLQ supports multiple queues of the same priority. However, we recommend configuring no more than two LLQs per system to preserve latency integrity: latency queues of equal priority are serviced round-robin, so each additional LLQ adds delay. A PFE syslog warning is generated if more than two LLQs are configured.
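The recommendation to keep the LLQ count low can be illustrated with a simplified worst-case model. Assume that LLQs of equal priority are served one frame at a time in round-robin order and that a frame already being transmitted is not preempted; a newly arrived LLQ frame might then wait for the frame in flight plus one frame from each of the other LLQs. The sketch below computes that wait from serialization time only. It ignores VOQ credit exchange, buffering, and pipeline delays, so the numbers are illustrative and are not ACX7000 measurements.

```python
OVERHEAD_BYTES = 20  # preamble (7) + SFD (1) + minimum IFG (12)


def serialization_ns(frame_bytes: int, port_gbps: float) -> float:
    """Wire time of one frame, including per-frame overhead."""
    return (frame_bytes + OVERHEAD_BYTES) * 8 / port_gbps


def worst_case_llq_wait_ns(num_llqs: int, max_frame_bytes: int, port_gbps: float) -> float:
    """Simplified head-of-line wait for a frame entering one of num_llqs LLQs."""
    if num_llqs < 1:
        raise ValueError("at least one LLQ is required")
    frame_ns = serialization_ns(max_frame_bytes, port_gbps)
    in_flight = frame_ns                    # non-preemptible frame already on the wire
    other_llqs = (num_llqs - 1) * frame_ns  # one frame from each other LLQ in the RR cycle
    return in_flight + other_llqs


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        print(f"{n} LLQs: {worst_case_llq_wait_ns(n, 2000, 25):.1f} ns at 25 Gbps")
```

Under these assumptions, each LLQ added beyond the first contributes one more maximum-size frame time (about 646 ns for 2000-byte frames at 25 Gbps) to the worst case.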

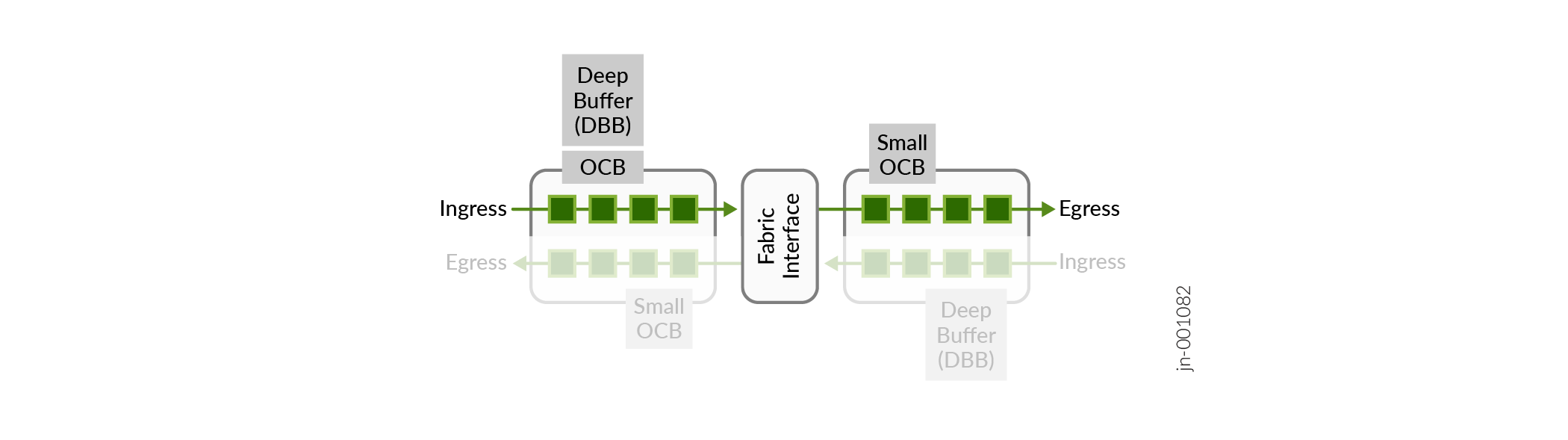

The ACX7000 family implements a VOQ architecture designed to avoid Head of Line Blocking (HOLB) and optimize buffering. This approach uses feedback mechanisms between the ingress traffic manager (ITM) and the egress traffic manager (ETM). Packets received at ingress VOQs are mapped to the destination egress port. The ITM generates a credit request and sends it to the ETM. Depending on the egress port and queue credit availability, the ETM generates appropriate credit and schedules the traffic with minimal buffering. Ingress VOQ bundles are associated with a specific egress port. Each bundle consists of eight VOQs. The VOQ connection forms a virtual representation of the queue and an egress port association called a Virtual Output Queue Identifier (VOQ ID).
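The request/grant exchange described above is what prevents head-of-line blocking: a packet leaves its ingress VOQ only after the ETM grants credit for that specific egress port, so a congested port does not hold up traffic queued for other ports. The following toy model shows that effect with one 8-VOQ bundle per egress port. Credit is modeled as a per-round byte budget per port; the port names, packet sizes, and service order are hypothetical simplifications, not the ACX7000 credit algorithm.

```python
from collections import deque
from typing import Dict, List, Tuple

VOQS_PER_BUNDLE = 8  # one VOQ per queue for each egress port


class IngressTM:
    """Holds one VOQ bundle per egress port; each VOQ is keyed by (port, queue)."""

    def __init__(self, ports: List[str]):
        self.voqs = {(p, q): deque() for p in ports for q in range(VOQS_PER_BUNDLE)}

    def enqueue(self, port: str, queue: int, size: int) -> None:
        self.voqs[(port, queue)].append(size)

    def requesting(self) -> List[Tuple[str, int]]:
        """VOQs that currently hold packets and therefore request credit."""
        return sorted(key for key, voq in self.voqs.items() if voq)


class EgressTM:
    """Grants credit per egress port from a simple byte budget."""

    def __init__(self, credit_bytes: Dict[str, int]):
        self.credit = dict(credit_bytes)

    def grant(self, port: str, size: int) -> bool:
        if self.credit.get(port, 0) >= size:
            self.credit[port] -= size
            return True
        return False


def run_round(itm: IngressTM, etm: EgressTM) -> List[Tuple[str, int, int]]:
    """Dequeue every packet whose VOQ receives credit in this round."""
    sent = []
    for port, queue in itm.requesting():
        voq = itm.voqs[(port, queue)]
        while voq and etm.grant(port, voq[0]):
            sent.append((port, queue, voq.popleft()))
    return sent
```

If `port-a` has no credit and `port-b` has 3000 bytes, a round sends the two 1500-byte packets queued for `port-b` while the packet for `port-a` simply waits in its own VOQ at ingress.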

Packet buffering is performed at ingress using a large delay bandwidth buffer (DBB) and a small on-chip SRAM buffer (OCB). An additional on-chip egress buffer is used for packet serialization.

Figure 11 explains the ACX7000 scheduling hierarchy in Port QoS mode. Along with priority, a low delay scheduling profile is associated with VOQs configured as low latency. With Port QoS mode, three distinct dedicated Egress Queues (EGQ) are enabled to support LLQ, Priority Queues, and Low Queues.

EGQ1 is dedicated to VOQs configured as low latency, EGQ2 to priority queues other than latency queues, and EGQ3 to low-priority traffic. This separation allows each category to be treated independently while minimizing their impact on one another. The priority association of each VOQ is determined by configuration.

Multi-Level Priority

Junos OS Evolved Release 23.3R1 introduces multi-level priorities for the ACX7000 series. Six priorities are supported: low-latency, strict-high, high, medium-high, medium-low, and low. Each priority level preempts all lower levels. Queues of equal priority are serviced round-robin, and low-priority queues use WFQ. This support extends to both port-level QoS and hierarchical QoS (HQoS).

Only port-based QoS is covered in this validation.

Before Junos OS Evolved Release 23.3R1, the ACX7000 family supported strict-high and low priority queues, with strict-high preempting low. In the new CoS architecture, each queue is assigned a priority level that can preempt lower priority queues. These priority levels are:

- P0 (highest priority): Low-Latency

- P1: Strict-High

- P2: High

- P3: Medium-High

- P4: Medium-Low

- WFQ (lowest priority): Low

Priority queues are designated P0 through P4 and use round-robin distribution among queues at the same priority level. Low priority is the only WFQ level, where the transmit rate is the weight used for round-robin distribution among multiple low-priority queues. Because queue preemption is strict, we recommend shaping the priority queues to prevent starvation of lower priority queues.
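The dequeue rules above (strict preemption from low-latency down through medium-low, round-robin among queues of equal priority, and weighted round-robin among low-priority queues with the transmit rate as weight) can be sketched as a single-frame scheduler. The sketch uses the JVD queue-to-priority assignment from the Forwarding Classes section; the low-queue weights are hypothetical, and a smooth weighted round-robin stands in for the device's WFQ. Shaping (PIR) is deliberately not modeled, which is exactly why unshaped priority queues can starve the WFQ queues in this model.

```python
from collections import deque

# Highest to lowest; "low" queues share bandwidth by weight (WFQ).
PRIORITY_ORDER = ["low-latency", "strict-high", "high", "medium-high", "medium-low", "low"]

# JVD queue -> (priority, weight); weights for low queues are hypothetical transmit rates.
JVD_QUEUES = {
    7: ("strict-high", 0), 6: ("low-latency", 0), 5: ("medium-high", 0),
    4: ("low", 40), 3: ("high", 0), 2: ("low", 30), 1: ("low", 20), 0: ("low", 10),
}


class MultiLevelScheduler:
    def __init__(self, queues):
        """queues maps queue number -> (priority, weight); weight only matters for 'low'."""
        self.priority = {q: prio for q, (prio, _) in queues.items()}
        self.weight = {q: w for q, (_, w) in queues.items()}
        self.buffers = {q: deque() for q in queues}
        self.last_served = {}                  # per-level round-robin pointer
        self.current = {q: 0 for q in queues}  # smooth WRR running credit

    def enqueue(self, queue, packet):
        self.buffers[queue].append(packet)

    def dequeue(self):
        """Return (queue, packet) for the next frame, or None when all queues are empty."""
        for level in PRIORITY_ORDER:
            ready = sorted(q for q, buf in self.buffers.items()
                           if buf and self.priority[q] == level)
            if not ready:
                continue  # nothing at this level; try the next lower level
            if level == "low":
                q = self._weighted_pick(ready)
            else:
                q = self._round_robin(level, ready)
            return q, self.buffers[q].popleft()
        return None

    def _round_robin(self, level, ready):
        last = self.last_served.get(level)
        # Next ready queue after the last one served at this level, wrapping around.
        nxt = next((q for q in ready if last is None or q > last), ready[0])
        self.last_served[level] = nxt
        return nxt

    def _weighted_pick(self, ready):
        total = sum(self.weight[q] for q in ready)
        for q in ready:
            self.current[q] += self.weight[q]
        chosen = max(ready, key=lambda q: self.current[q])
        self.current[chosen] -= total
        return chosen
```

With one frame in every queue, the dequeue order is 6, 7, 3, 5, then the low queues by weight (4, 2, 1, 0). Filling only queues 6 and 0 shows the starvation risk: queue 0 is served only after queue 6 is empty, which is why the JVD shapes its priority queues.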

The functional behavior differs from TRIO-based MX architectures, which leverage guaranteed and excess regions to ensure the configured transmit rate for all priority queues, including low priority. PQ-DWRR is implemented in the guaranteed region. Equal priority queues are serviced as WRR, with the transmit-rate being the weight. Only the strict-high queue operates without an excess region and can starve low-priority queues.

From Junos OS Evolved Release 24.3R1, ACX7000 supports eight priority levels, with the addition of low-high (P5) and low-medium (P6) for port QoS.

Forwarding Classes

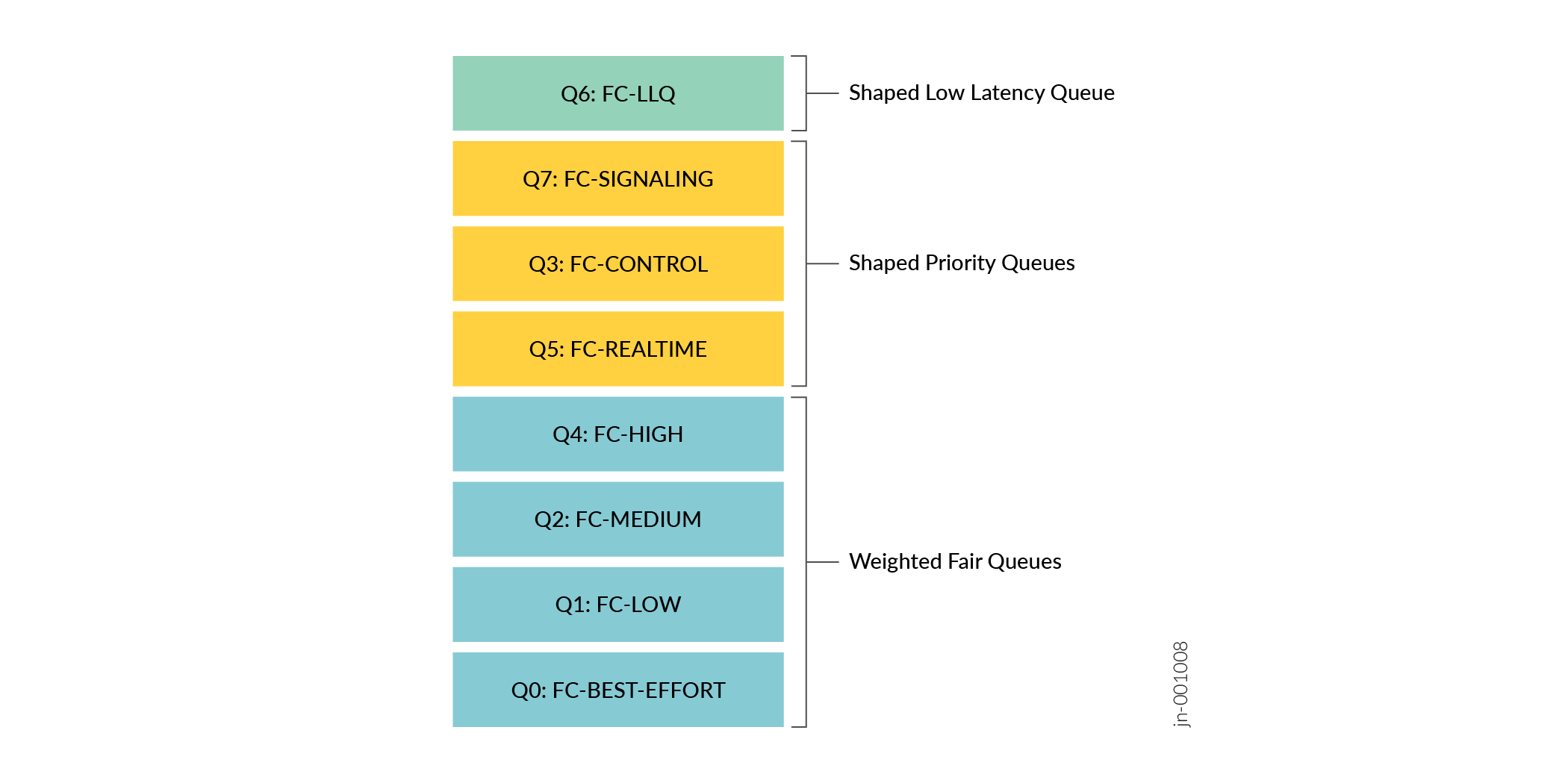

ACX7000 supports eight forwarding classes (FCs), four of which are enabled by default. One or more FCs can be mapped to each of the eight supported queues. By comparison, the MX Series supports sixteen forwarding classes, which can be mapped across eight queues. For this JVD, a maximum of eight queues is used on each transit device, supporting the O-RAN proposed traffic profiles.

The JVD CoS model most closely aligns with the O-RAN Multiple Priority Queue structure and includes the following queue assignments:

| QUEUE | Forwarding Class | Queue Characteristics |
|---|---|---|
| 7 | FC-SIGNALING | strict-high priority and PIR shaped |
| 6 | FC-LLQ | low-latency priority and PIR shaped (eCPRI) |
| 5 | FC-REALTIME | medium-high priority and PIR shaped (voice & interactive video) |
| 4 | FC-HIGH | low priority WFQ guaranteed |
| 3 | FC-CONTROL | high priority and PIR shaped (network control) |
| 2 | FC-MEDIUM | low priority WFQ guaranteed |
| 1 | FC-LOW | low priority WFQ guaranteed |
| 0 | FC-BEST-EFFORT | low priority WFQ remainder |

Figure 12 illustrates the CoS model proposed for the solution architecture. The CoS hierarchy can be divided into three main components:

- Low Latency Queues

- Shaped Priority Queues

- Weighted Fair Queues

Critical fronthaul flows are mapped to the LLQ or PQs, which allows a parallel queueing structure for non-fronthaul flows with WFQs. The high, medium, and low traffic types are given a guaranteed committed information rate (CIR) while allowing a dynamic peak information rate (PIR) if bandwidth is available. To achieve this result, the transmit rates configured for low-priority queues (WFQ) must be calculated based on the appropriate port speed, excluding bandwidth allocated to shaped queues. For more information, see the Scheduling section. The best-effort (remainder) queue has no guarantee.
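The transmit-rate calculation described above can be sketched as follows: subtract the bandwidth reserved by the shaped queues from the port speed, divide the remainder among the guaranteed WFQ queues, and leave whatever is not allocated to the best-effort remainder queue. The port speed, PIR values, and shares below are hypothetical examples, not the JVD's configured values. The result is also expressed as a percentage of port speed, assuming the scheduler's transmit rate is configured relative to the port.

```python
from typing import Dict, Tuple


def wfq_transmit_rates(
    port_gbps: float,
    shaped_pir_gbps: Dict[str, float],
    wfq_share_pct: Dict[str, float],
) -> Tuple[Dict[str, float], float]:
    """Return (guaranteed Gbps per WFQ class, Gbps left for the remainder queue).

    wfq_share_pct gives each guaranteed WFQ class a percentage of the bandwidth
    that remains after the shaped (LLQ and priority) queues are subtracted.
    """
    remaining = port_gbps - sum(shaped_pir_gbps.values())
    if remaining <= 0:
        raise ValueError("shaped queues consume the entire port")
    if sum(wfq_share_pct.values()) > 100:
        raise ValueError("WFQ shares exceed 100% of the remaining bandwidth")
    rates = {fc: remaining * pct / 100 for fc, pct in wfq_share_pct.items()}
    return rates, remaining - sum(rates.values())


def percent_of_port(rates_gbps: Dict[str, float], port_gbps: float) -> Dict[str, float]:
    """Express each guaranteed rate as a percentage of the physical port speed."""
    return {fc: rate * 100 / port_gbps for fc, rate in rates_gbps.items()}


if __name__ == "__main__":
    shaped = {"FC-SIGNALING": 1, "FC-LLQ": 10, "FC-REALTIME": 3, "FC-CONTROL": 1}  # hypothetical PIRs
    shares = {"FC-HIGH": 40, "FC-MEDIUM": 30, "FC-LOW": 20}                       # hypothetical shares
    rates, remainder = wfq_transmit_rates(25, shaped, shares)
    print(rates, percent_of_port(rates, 25), "remainder Gbps:", remainder)
```

With a 25-Gbps port and 15 Gbps of shaped PIR, 10 Gbps remains: FC-HIGH, FC-MEDIUM, and FC-LOW are guaranteed 4, 3, and 2 Gbps (16%, 12%, and 8% of the port), and 1 Gbps is left unallocated for FC-BEST-EFFORT. Sizing the WFQ rates against the full 25 Gbps instead would promise bandwidth that the shaped queues can take first.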

When implementing the 5G architecture, the LLQ/PQ model might be confined to the fronthaul segment, with WFQs designated for MBH; this is not a goal of the JVD. In general, fronthaul flows are mapped to the first three queues, as shown in Figure 12, with FC-REALTIME supporting end-to-end URLLC applications. Non-fronthaul (that is, MBH) traffic is always mapped to the four low-priority queues (WFQ). FC-CONTROL is applicable to all devices. Low-priority queues might still support some functions within the fronthaul, such as M-plane and software upgrades.

The following example displays the Forwarding Class configuration, which is the same across ACX, MX, and PTX platforms.

ACX-EVO Forwarding Class

```
set class-of-service forwarding-classes class FC-SIGNALING queue-num 7
set class-of-service forwarding-classes class FC-LLQ queue-num 6
set class-of-service forwarding-classes class FC-REALTIME queue-num 5
set class-of-service forwarding-classes class FC-HIGH queue-num 4
set class-of-service forwarding-classes class FC-CONTROL queue-num 3
set class-of-service forwarding-classes class FC-MEDIUM queue-num 2
set class-of-service forwarding-classes class FC-LOW queue-num 1
set class-of-service forwarding-classes class FC-BEST-EFFORT queue-num 0
```
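Because the same forwarding-class configuration is reused on ACX, MX, and PTX, it can be useful to sanity-check it against each platform's limits before committing. The following sketch parses `set class-of-service forwarding-classes class <name> queue-num <n>` lines, like those above, and checks the FC count and queue range. The helper names are hypothetical; the limits used in the example (eight FCs on ACX7000, sixteen on MX, eight queues) come from the Forwarding Classes text.

```python
import re
from typing import Dict, List

FC_LINE = re.compile(r"^set class-of-service forwarding-classes class (\S+) queue-num (\d+)$")


def parse_fc_config(text: str) -> Dict[str, int]:
    """Map forwarding-class name -> queue number from Junos 'set' lines."""
    mapping: Dict[str, int] = {}
    for line in text.strip().splitlines():
        match = FC_LINE.match(line.strip())
        if match is None:
            raise ValueError(f"unrecognized line: {line!r}")
        name, queue = match.group(1), int(match.group(2))
        if name in mapping:
            raise ValueError(f"forwarding class {name} defined twice")
        mapping[name] = queue
    return mapping


def check_limits(mapping: Dict[str, int], max_fcs: int, num_queues: int = 8) -> List[str]:
    """Return a list of problems; an empty list means the mapping fits the platform."""
    problems = []
    if len(mapping) > max_fcs:
        problems.append(f"{len(mapping)} forwarding classes exceed the limit of {max_fcs}")
    for name, queue in sorted(mapping.items()):
        if not 0 <= queue < num_queues:
            problems.append(f"{name} uses queue {queue}, outside 0-{num_queues - 1}")
    return problems
```

Run against the eight lines above, `check_limits(mapping, max_fcs=8)` returns an empty list, and each queue from 0 to 7 is used exactly once.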

Example traffic types per queue:

| QUEUE | Forwarding Class | Example Traffic Type |
|---|---|---|
| 7 | FC-SIGNALING | OAM aggressive timers, O-RAN/3GPP C-plane |
| 6 | FC-LLQ | CPRI RoE, eCPRI C/U-Plane ≤2000 bytes |
| 5 | FC-REALTIME | 5QI/QCI Group 1 low-latency U-plane, low-latency business. Interactive video, low-latency voice |
| 4 | FC-HIGH | 5QI/QCI Group 2 medium-latency U-plane data |
| 3 | FC-CONTROL | Network control: OAM relaxed timers, IGP, BGP, PTP aware mode, and so on |
| 2 | FC-MEDIUM | 5QI/QCI Group 3 remainder GBR U-plane guaranteed business data. Video on demand. O-RAN/3GPP M-plane, e.g., eCPRI M-plane, other management, software upgrades |
| 1 | FC-LOW | High-latency, guaranteed low-priority data |
| 0 | FC-BEST-EFFORT | 5QI/QCI Group 4: remainder non-GBR U-plane data |

Classification

Classification is performed at ingress. The following three styles of classification are included in the validation.

- Behavior Aggregate (BA) classification matches on the received Layer 2 802.1p bits, Layer 3 DSCP, or both; when both are received, DSCP takes priority. BA is packet-based: flows arrive pre-marked with Layer 3 DSCP, Layer 2 802.1Q Priority Code Points (PCP), or MPLS EXP.

- Fixed (or discrete) classification assigns a forwarding class directly to the interface. Fixed classification is context-based: all traffic arriving on a specific interface is mapped to one forwarding class.

- Multifield (MF) classification matches on fields within the packet and maps matching traffic to one or more forwarding classes.

Classification on transit nodes is performed at ingress and matches on the outer-label MPLS EXP bits. Core interfaces use BA classifiers. BA, fixed, and MF classifiers are used at service (CE-facing) interfaces.

When both BA and MF classification are applied, MF takes precedence: BA is processed first and MF afterward, so a matching MF term overrides the BA result.

In use cases where received tagged frames carry trusted PCP bits, BA classification is used. Where the PCP bits of received frames are untrusted, fixed or multifield classification is used.
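The precedence rules above can be summarized in a short sketch: a service interface uses either fixed classification (untrusted markings) or BA classification (trusted markings, with DSCP preferred over 802.1p), and any MF rule that matches is applied last and therefore wins. The code-point maps follow the priority-mapping table below (the DSCP map is trimmed for brevity); the interface model, the source-subnet MF rule, and the best-effort fallback are hypothetical. The source states only that MF overrides BA; applying MF after fixed classification as well is an assumption of this sketch.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# 802.1p -> FC from the priority-mapping table; DSCP map trimmed for brevity.
PCP_TO_FC = {"110": "FC-SIGNALING", "100": "FC-LLQ", "101": "FC-REALTIME", "011": "FC-HIGH",
             "111": "FC-CONTROL", "010": "FC-MEDIUM", "001": "FC-LOW", "000": "FC-BEST-EFFORT"}
DSCP_TO_FC = {"cs6": "FC-SIGNALING", "af41": "FC-LLQ", "ef": "FC-REALTIME", "be": "FC-BEST-EFFORT"}

Packet = Dict[str, str]
MfRule = Tuple[Callable[[Packet], bool], str]


@dataclass
class ServiceInterface:
    fixed_fc: Optional[str] = None  # fixed classification: every frame gets this FC
    ba: bool = False                # behavior aggregate on DSCP / 802.1p
    mf_rules: List[MfRule] = field(default_factory=list)


def ba_classify(pkt: Packet) -> Optional[str]:
    """DSCP takes priority over 802.1p when both are present."""
    if "dscp" in pkt:
        return DSCP_TO_FC.get(pkt["dscp"])
    if "pcp" in pkt:
        return PCP_TO_FC.get(pkt["pcp"])
    return None


def classify(pkt: Packet, iface: ServiceInterface) -> str:
    fc = None
    if iface.fixed_fc:
        fc = iface.fixed_fc
    elif iface.ba:
        fc = ba_classify(pkt)
    # MF is evaluated last, so the first matching rule overrides the earlier result.
    for matches, target_fc in iface.mf_rules:
        if matches(pkt):
            fc = target_fc
            break
    return fc or "FC-BEST-EFFORT"  # assumed default when nothing classified the packet


def from_subnet(prefix: str) -> Callable[[Packet], bool]:
    """Hypothetical MF match on the packet's source address."""
    net = ipaddress.ip_network(prefix)
    return lambda pkt: "src_ip" in pkt and ipaddress.ip_address(pkt["src_ip"]) in net
```

For example, a BE-marked packet from 192.0.2.10 on an interface with both BA and the `from_subnet("192.0.2.0/24")` MF rule is placed in FC-REALTIME, while the same marking from another subnet stays in FC-BEST-EFFORT.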

The classification posture is the same across ACX, MX, and PTX platforms included in the JVD. Table 7 describes the overall priority mapping.

| QUEUE | Forwarding Class | 802.1p | DSCP | EXP |
|---|---|---|---|---|
| 7 | FC-SIGNALING | 110 | CS5, CS6 | 110 |
| 6 | FC-LLQ | 100 | CS4, AF41, AF42, AF43 | 100 |
| 5 | FC-REALTIME | 101 | EF | 101 |
| 4 | FC-HIGH | 011 | CS3, AF31, AF32, AF33 | 011 |
| 3 | FC-CONTROL | 111 | CS7 | 111 |
| 2 | FC-MEDIUM | 010 | CS2, AF21, AF22, AF23 | 010 |
| 1 | FC-LOW | 001 | CS1, AF11, AF12, AF13 | 001 |
| 0 | FC-BEST-EFFORT | 000 | BE | 000 |

EXP Classifier

```
set class-of-service classifiers exp CL-MPLS import default
set class-of-service classifiers exp CL-MPLS forwarding-class FC-SIGNALING loss-priority low code-points 110
set class-of-service classifiers exp CL-MPLS forwarding-class FC-LLQ loss-priority low code-points 100
set class-of-service classifiers exp CL-MPLS forwarding-class FC-REALTIME loss-priority low code-points 101
set class-of-service classifiers exp CL-MPLS forwarding-class FC-HIGH loss-priority low code-points 011
set class-of-service classifiers exp CL-MPLS forwarding-class FC-CONTROL loss-priority low code-points 111
set class-of-service classifiers exp CL-MPLS forwarding-class FC-MEDIUM loss-priority low code-points 010
set class-of-service classifiers exp CL-MPLS forwarding-class FC-LOW loss-priority low code-points 001
set class-of-service classifiers exp CL-MPLS forwarding-class FC-BEST-EFFORT loss-priority low code-points 000
```

802.1P Classifier

```
set class-of-service classifiers ieee-802.1 CL-8021P import default
set class-of-service classifiers ieee-802.1 CL-8021P forwarding-class FC-SIGNALING loss-priority low code-points 110
set class-of-service classifiers ieee-802.1 CL-8021P forwarding-class FC-LLQ loss-priority low code-points 100
set class-of-service classifiers ieee-802.1 CL-8021P forwarding-class FC-REALTIME loss-priority low code-points 101
set class-of-service classifiers ieee-802.1 CL-8021P forwarding-class FC-HIGH loss-priority low code-points 011
set class-of-service classifiers ieee-802.1 CL-8021P forwarding-class FC-CONTROL loss-priority low code-points 111
set class-of-service classifiers ieee-802.1 CL-8021P forwarding-class FC-MEDIUM loss-priority low code-points 010
set class-of-service classifiers ieee-802.1 CL-8021P forwarding-class FC-LOW loss-priority low code-points 001
set class-of-service classifiers ieee-802.1 CL-8021P forwarding-class FC-BEST-EFFORT loss-priority low code-points 000
```

DSCP IPv4 Classifier

```
set class-of-service classifiers dscp CL-DSCP import default
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-SIGNALING loss-priority low code-points cs6
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-SIGNALING loss-priority high code-points cs5
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-LLQ loss-priority low code-points cs4
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-LLQ loss-priority low code-points af41
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-LLQ loss-priority medium-high code-points af42
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-LLQ loss-priority high code-points af43
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-REALTIME loss-priority low code-points ef
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-HIGH loss-priority low code-points cs3
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-HIGH loss-priority low code-points af31
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-HIGH loss-priority medium-high code-points af32
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-HIGH loss-priority high code-points af33
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-CONTROL loss-priority low code-points cs7
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-MEDIUM loss-priority low code-points cs2
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-MEDIUM loss-priority low code-points af21
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-MEDIUM loss-priority medium-high code-points af22
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-MEDIUM loss-priority high code-points af23
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-LOW loss-priority low code-points cs1
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-LOW loss-priority low code-points af11
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-LOW loss-priority medium-high code-points af12
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-LOW loss-priority high code-points af13
set class-of-service classifiers dscp CL-DSCP forwarding-class FC-BEST-EFFORT loss-priority high code-points be
```

DSCP IPv6 Classifier

```
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 import default
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-SIGNALING loss-priority low code-points cs6
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-SIGNALING loss-priority high code-points cs5
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-LLQ loss-priority low code-points cs4
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-LLQ loss-priority low code-points af41
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-LLQ loss-priority medium-high code-points af42
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-LLQ loss-priority high code-points af43
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-REALTIME loss-priority low code-points ef
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-HIGH loss-priority low code-points cs3
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-HIGH loss-priority low code-points af31
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-HIGH loss-priority medium-high code-points af32
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-HIGH loss-priority high code-points af33
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-CONTROL loss-priority low code-points cs7
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-MEDIUM loss-priority low code-points cs2
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-MEDIUM loss-priority low code-points af21
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-MEDIUM loss-priority medium-high code-points af22
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-MEDIUM loss-priority high code-points af23
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-LOW loss-priority low code-points cs1
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-LOW loss-priority low code-points af11
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-LOW loss-priority medium-high code-points af12
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-LOW loss-priority high code-points af13
set class-of-service classifiers dscp-ipv6 CL-DSCP-IPV6 forwarding-class FC-BEST-EFFORT loss-priority high code-points be
```
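To show what the behavior-aggregate lookup does with a received packet, here is a minimal Python sketch of the CL-DSCP mapping. It assumes that the six DSCP bits are the upper bits of the IPv4 ToS byte or the IPv6 Traffic Class byte, with the two ECN bits ignored. The numeric code-point values are the standard DiffServ assignments. `classify_dscp` and `BY_VALUE` are hypothetical names for illustration. Code points that the classifier does not list return `None`; on the router, `import default` resolves these through the default classifier, which this sketch does not model.

```python
from typing import Optional, Tuple

# Standard DiffServ code points (class selectors, AF PHBs, EF).
DSCP = {
    "be": 0, "cs1": 8, "af11": 10, "af12": 12, "af13": 14,
    "cs2": 16, "af21": 18, "af22": 20, "af23": 22,
    "cs3": 24, "af31": 26, "af32": 28, "af33": 30,
    "cs4": 32, "af41": 34, "af42": 36, "af43": 38,
    "cs5": 40, "ef": 46, "cs6": 48, "cs7": 56,
}

# (forwarding class, loss priority) per code point, copied from CL-DSCP.
CL_DSCP = {
    "cs6": ("FC-SIGNALING", "low"), "cs5": ("FC-SIGNALING", "high"),
    "cs4": ("FC-LLQ", "low"), "af41": ("FC-LLQ", "low"),
    "af42": ("FC-LLQ", "medium-high"), "af43": ("FC-LLQ", "high"),
    "ef": ("FC-REALTIME", "low"),
    "cs3": ("FC-HIGH", "low"), "af31": ("FC-HIGH", "low"),
    "af32": ("FC-HIGH", "medium-high"), "af33": ("FC-HIGH", "high"),
    "cs7": ("FC-CONTROL", "low"),
    "cs2": ("FC-MEDIUM", "low"), "af21": ("FC-MEDIUM", "low"),
    "af22": ("FC-MEDIUM", "medium-high"), "af23": ("FC-MEDIUM", "high"),
    "cs1": ("FC-LOW", "low"), "af11": ("FC-LOW", "low"),
    "af12": ("FC-LOW", "medium-high"), "af13": ("FC-LOW", "high"),
    "be": ("FC-BEST-EFFORT", "high"),
}

# Reverse index: numeric DSCP -> (forwarding class, loss priority).
BY_VALUE = {DSCP[name]: result for name, result in CL_DSCP.items()}

def classify_dscp(tos_or_traffic_class: int) -> Optional[Tuple[str, str]]:
    """Classify on the IPv4 ToS byte or the IPv6 Traffic Class byte."""
    dscp = (tos_or_traffic_class >> 2) & 0x3F  # drop the two ECN bits
    return BY_VALUE.get(dscp)  # None: left to 'import default' (not modeled)

print(classify_dscp(0xB8))  # ToS 0xB8 carries DSCP 46 (ef)
```

The two classifiers list the same code points, so the same lookup applies to CL-DSCP-IPV6 when it is given the IPv6 Traffic Class byte.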

The following configuration is an example of multifield classification that matches distinct traffic types and assigns each a forwarding class. You can modify the filter to add match conditions or to meet different prioritization requirements.

```
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term eCPRI from ether-type 0xAEFE
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term eCPRI then forwarding-class FC-LLQ
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term eCPRI then count eCPRI-in
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term PTPoE from destination-mac-address 01:1b:19:00:00:00/48
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term PTPoE from ether-type 0x88F7
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term PTPoE then forwarding-class FC-CONTROL
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term CFM from ether-type 0x8902
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term CFM then forwarding-class FC-SIGNALING
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term REALTIME from learn-vlan-1p-priority 5
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term REALTIME then forwarding-class FC-REALTIME
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term HIGH from learn-vlan-1p-priority 3
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term HIGH then forwarding-class FC-HIGH
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term MEDIUM from learn-vlan-1p-priority 2
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term MEDIUM then forwarding-class FC-MEDIUM
set firewall family ethernet-switching filter FF-5G-LLQ-CLASS term INTERNET then forwarding-class FC-BEST-EFFORT
```
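The following Python sketch makes the term ordering concrete by modeling how FF-5G-LLQ-CLASS picks a forwarding class. It makes two assumptions. First, it uses first-match semantics: a term whose `then` contains only action modifiers such as `forwarding-class` is implicitly accepted, which ends evaluation. Second, it reduces `learn-vlan-1p-priority` to a single `pcp` field. `Frame` and `classify_frame` are hypothetical illustrations, not a Junos API.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass
class Frame:
    ether_type: int
    dst_mac: str = "00:00:00:00:00:00"
    pcp: Optional[int] = None  # 802.1p priority of the VLAN tag, if any

# Terms in configuration order: (term, match predicate, forwarding class).
# All 'from' conditions inside one term must match (logical AND).
TERMS = [
    ("eCPRI", lambda f: f.ether_type == 0xAEFE, "FC-LLQ"),
    ("PTPoE", lambda f: f.dst_mac.lower() == "01:1b:19:00:00:00"
                        and f.ether_type == 0x88F7, "FC-CONTROL"),
    ("CFM", lambda f: f.ether_type == 0x8902, "FC-SIGNALING"),
    ("REALTIME", lambda f: f.pcp == 5, "FC-REALTIME"),
    ("HIGH", lambda f: f.pcp == 3, "FC-HIGH"),
    ("MEDIUM", lambda f: f.pcp == 2, "FC-MEDIUM"),
    ("INTERNET", lambda f: True, "FC-BEST-EFFORT"),  # no 'from': matches all
]

def classify_frame(frame: Frame) -> Tuple[str, str]:
    """Return (term name, forwarding class) of the first matching term."""
    for name, match, fc in TERMS:
        if match(frame):
            return name, fc
    raise AssertionError("unreachable: INTERNET matches every frame")

print(classify_frame(Frame(ether_type=0xAEFE, pcp=5)))
```

Because eCPRI is the first term, an eCPRI frame tagged with priority 5 still lands in FC-LLQ rather than FC-REALTIME. Reordering the terms changes the result.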

Scheduling

ACX7000 scheduling begins in the ingress pipeline and is realized as an egress function with a feedback loop. Once a packet is classified and arrives at the ingress VOQ, the Ingress Traffic Manager (ITM) issues a credit request to the Egress Traffic Manager (ETM). The ETM grants the request based on egress port and queue availability, and the packets are then scheduled and arrive at the Egress Queue (EGQ).
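The request/grant loop can be illustrated with a deliberately simplified Python model. This is a toy model, not the ASIC implementation: `EgressPort`, `VOQ`, the per-tick grant size, and byte-based credit are all hypothetical. The model shows the property the design relies on: packets wait in the ingress VOQ until the egress side has granted credit, so nothing is sent toward an egress queue that cannot accept it.

```python
from collections import deque

class EgressPort:
    """Stand-in for the ETM: grants credit up to a fixed amount per tick."""
    def __init__(self, bytes_per_tick: int):
        self.bytes_per_tick = bytes_per_tick

    def grant(self, requested: int) -> int:
        # Never grant more than the port can drain in one tick.
        return min(requested, self.bytes_per_tick)

class VOQ:
    """Stand-in for an ingress VOQ: packets wait here until credited."""
    def __init__(self, sizes):
        self.packets = deque(sizes)  # packet sizes in bytes, FIFO order
        self.credit = 0              # bytes granted but not yet used

    def tick(self, port: EgressPort) -> list:
        # Request credit for the backlog not already covered.
        requested = sum(self.packets) - self.credit
        if requested > 0:
            self.credit += port.grant(requested)
        # Dequeue only packets fully covered by granted credit. Unused
        # credit carries over, so the long-run rate equals the grant rate.
        sent = []
        while self.packets and self.packets[0] <= self.credit:
            size = self.packets.popleft()
            self.credit -= size
            sent.append(size)
        return sent

port = EgressPort(bytes_per_tick=1500)
voq = VOQ([1000, 1000, 1000])
print(voq.tick(port))  # first tick
print(voq.tick(port))  # second tick
```

With a 1500-byte-per-tick grant, three 1000-byte packets leave over two ticks. The second tick uses credit left over from the first.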

The eight queues (configurable per port) are associated with the VOQ architecture rather than with the EGQs. Depending on the implementation, the VOQs map into two or three EGQs. The scheduling architecture for ACX7000 platforms changed starting with Junos OS Evolved Release 23.3R1. For more information about the updated EGQ hierarchy with LLQ, see the Low Latency Queuing section.

Schedulers assign traffic priorities and bandwidth characteristics to the forwarding classes. ACX7000 platforms support six traffic priorities, or eight priorities starting with Junos OS Evolved Release 24.3R1. MX Series platforms support five traffic priorities.

Priorities are associated with VOQs, and VOQs map to EGQs. The following table shows the resulting mapping of priority level to EGQ for the 8-priority-level model.

| Priority Name | Priority Level | Egress Queue Assignment |
|---|---|---|
| Low-Latency | P0 | EGQ1 |
| Strict-High | P1 | EGQ2 |
| High | P2 | EGQ2 |
| Medium-High | P3 | EGQ2 |
| Medium-Low | P4 | EGQ2 |
| Low-High | P5 | EGQ2 |
| Low-Medium | P6 | EGQ2 |
| Low (WFQ) | P7 | EGQ3 |

As shown, multiple priority levels map to the same EGQ, so it is important to understand the nature of the ACX ingress pipeline. The ACX packet-processing mechanisms prevent Head-of-Line Blocking (HOLB) and avoid buffering packets that might later be dropped. Once a packet is scheduled, it should not be dropped.

The CoS operation of ACX differs from that of MX in important ways. On ACX, all priority queues (low-latency, strict-high, high, medium-high, and medium-low) preempt the low-priority queues. You can shape PQs to prevent them from starving lower-priority queues. MX Trio-based platforms work differently: queues operate in either a guaranteed region or an excess region. A queue that is in profile (within its configured transmit rate) is serviced in the guaranteed region and moves to the excess region when it exceeds its transmit rate. Only strict-high priority can preempt all other priorities, because it has no excess region and is therefore always guaranteed.
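The 8-priority table and the ACX preemption rule can be captured in a short Python sketch. `PRIORITY_TABLE`, `EGQ_OF`, and `next_priority` are hypothetical names. The priority strings are the table's labels, not necessarily Junos CLI keywords. The selection function is an idealized strict-priority pick that illustrates preemption. It does not reproduce the exact hardware arbitration and does not model queue shaping.

```python
from typing import Optional, Set

# (level, priority name, egress queue) from the 8-priority table.
PRIORITY_TABLE = [
    (0, "Low-Latency", "EGQ1"),
    (1, "Strict-High", "EGQ2"),
    (2, "High", "EGQ2"),
    (3, "Medium-High", "EGQ2"),
    (4, "Medium-Low", "EGQ2"),
    (5, "Low-High", "EGQ2"),
    (6, "Low-Medium", "EGQ2"),
    (7, "Low (WFQ)", "EGQ3"),
]
EGQ_OF = {name: egq for _, name, egq in PRIORITY_TABLE}
LEVEL_OF = {name: level for level, name, _ in PRIORITY_TABLE}

def next_priority(backlogged: Set[str]) -> Optional[str]:
    """Strict preemption: serve the backlogged priority with the lowest level.

    Shaping, which stops a PQ from starving the levels below it, is not
    modeled, so a busy PQ here always wins over 'Low (WFQ)'.
    """
    if not backlogged:
        return None
    return min(backlogged, key=lambda name: LEVEL_OF[name])

print(next_priority({"Low (WFQ)", "Medium-High", "High"}))
```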

Next, consider the behavior on ACX7000 platforms when a shaping rate is configured on a priority queue. This matters when low-priority queues that use transmit-rate are combined with PQs that use shaping-rate. The shaping-rate bandwidth configured on each PQ is deducted from the port speed. Transmit-rate percentages are therefore calculated against the port speed minus the configured shaping rates.

The following table shows how the shaping-rate configuration changes the port speed used to calculate the bandwidth delegated by a transmit-rate percentage. The first row shows a 100GbE port with no queues using a shaping rate. In this case, the 20% transmit rate on the low-priority queue allocates 20Gbps.

In the second row, one high-priority queue is shaped to 30% of the port speed, which reduces the remaining port speed to 70Gbps. The 20% transmit rate for the low-priority queue is therefore based on 70Gbps, which yields 14Gbps.

In the third row, the total shaping rate is 50% of the port speed (30% + 20%), leaving 50Gbps. The 20% transmit rate for the low-priority queue therefore allocates 10Gbps.

Finally, in the fourth row, a total of 100% is allocated as shaping rate, leaving 0Gbps for low priority. In all cases, unused bandwidth is available for distribution across other queues, and when bandwidth is available, a queue can exceed its configured transmit rate. The shaping rate, however, cannot be exceeded: packets exceeding the configured shaping rate are dropped.

| Port Speed | High PQ Shaping-Rate | Med-High PQ Shaping-Rate | Updated Port Speed (Gbps) | Low Priority Transmit-Rate | Low Priority Bandwidth (Gbps) |
|---|---|---|---|---|---|
| 100GbE | - | - | 100 | 20% | 20 |
| 100GbE | 30% | - | 70 | 20% | 14 |
| 100GbE | 30% | 20% | 50 | 20% | 10 |
| 100GbE | 50% | 50% | 0 | 20% | 0 |
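The arithmetic in each row can be reproduced with a short Python sketch. `low_priority_bandwidth` is a hypothetical helper. It computes only the guaranteed minimum under full PQ load and does not model the redistribution of unused bandwidth described above.

```python
def low_priority_bandwidth(port_gbps, pq_shaping_pct, transmit_pct):
    """Return (updated port speed, low-priority bandwidth), both in Gbps.

    pq_shaping_pct: shaping-rate percentages configured on the PQs.
    transmit_pct:   transmit-rate percentage of the low-priority queue.
    """
    shaped_gbps = port_gbps * sum(pq_shaping_pct) / 100
    # Shaped PQ bandwidth is deducted from the port speed first ...
    updated_gbps = max(port_gbps - shaped_gbps, 0.0)
    # ... and the transmit rate is a percentage of what is left.
    return updated_gbps, updated_gbps * transmit_pct / 100

# The four table rows: (port speed, PQ shaping rates, transmit rate).
ROWS = [(100, [], 20), (100, [30], 20), (100, [30, 20], 20), (100, [50, 50], 20)]
for port, shaping, tr in ROWS:
    print(port, shaping, low_priority_bandwidth(port, shaping, tr))
```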

The previous-generation ACX5448 and ACX710 platforms allow shaping-rate and transmit-rate to be configured together. As a result, including a shaping rate does not change the reference port speed on those platforms. The ACX7000 series does not use this combination.

The validated design uses a 5G CoS model with four priority queues configured with a shaping rate: Signaling, LLQ, Realtime, and Control. These queues carry the most critical traffic types. Under full congestion, these PQs can consume up to 80% (5% + 40% + 30% + 5%) of the total bandwidth, and this bandwidth is guaranteed.

The remaining 20% of the total port bandwidth is shared across four WFQs. This 20% limit applies only when the PQs are fully utilized; any bandwidth the PQs leave unused is available to the WFQs. For the moment, set the four priority queues aside and consider a simple proportional bandwidth distribution model based on the High, Medium, Low, and Best-Effort queues. All of these queues are treated the same, with low-priority WFQ scheduling.

- High is given 40% of the total bandwidth

- Medium is given 30% of the total bandwidth

- Low is given 20% of the total bandwidth

- Best-Effort is given the remainder

The following table explains the WFQ CoS model, which exists in parallel to the PQ CoS model.

| Queue Name | Priority | Transmit Rate | Egress Queue |
|---|---|---|---|
| High | Low | 40% | EGQ3 |
| Medium | Low | 30% | EGQ3 |
| Low | Low | 20% | EGQ3 |
| Best Effort | Low | Remainder (10%) | EGQ3 |
| Total | | 100% | |

All four queues can borrow from other queues when bandwidth is available. The weighted distribution, based on transmit rate, favors the queues in the order shown. High, Medium, and Low are all guaranteed some bandwidth, even if the PQs are consuming all their configured rates. Only a small amount remains for Best-Effort, so this queue is not guaranteed.
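The following Python sketch shows how these rates translate to Gbps on a 100GbE port when the PQs are saturated, and how borrowing could redistribute unused share. It uses weighted max-min fairness ("water-filling") as an idealized model of work-conserving WFQ; this is not the ACX7000 scheduler algorithm. `weighted_fair_share` is a hypothetical helper, and the weight of 10 for Best-Effort stands in for `remainder`.

```python
# PQ shaping rates (percent of port) from the validated scheduler design.
PQ_SHAPING = {"SIGNALING": 5, "LLQ": 40, "REALTIME": 30, "CONTROL": 5}
# WFQ transmit-rate weights; 10 stands in for Best-Effort's 'remainder'.
WFQ_WEIGHTS = {"HIGH": 40, "MEDIUM": 30, "LOW": 20, "BEST-EFFORT": 10}

def weighted_fair_share(capacity, weights, demands):
    """Weighted max-min allocation of capacity among queues.

    A queue whose demand is below its weighted share keeps only what it
    needs; the leftover is split among the others in proportion to weight.
    """
    alloc = {q: 0.0 for q in weights}
    active = {q for q in weights if demands[q] > 0}
    remaining = float(capacity)
    while active and remaining > 1e-9:
        total_w = sum(weights[q] for q in active)
        # Queues fully served by their proportional share of what is left.
        satisfied = {q for q in active
                     if remaining * weights[q] / total_w >= demands[q]}
        if not satisfied:
            # Every active queue is still hungry: split the rest by weight.
            for q in active:
                alloc[q] = remaining * weights[q] / total_w
            break
        for q in satisfied:
            alloc[q] = float(demands[q])
            remaining -= demands[q]
        active -= satisfied
    return alloc

PORT_GBPS = 100
# PQs shaped to 80% in total leave 20Gbps for the WFQs under full PQ load.
wfq_capacity = PORT_GBPS * (100 - sum(PQ_SHAPING.values())) / 100

# All WFQs saturated: each gets its weighted share of 20Gbps.
print(weighted_fair_share(wfq_capacity, WFQ_WEIGHTS, {q: 100 for q in WFQ_WEIGHTS}))
# HIGH needs only 2Gbps: the other 18Gbps is split 30:20:10 among the rest.
print(weighted_fair_share(wfq_capacity, WFQ_WEIGHTS,
                          {"HIGH": 2, "MEDIUM": 10, "LOW": 10, "BEST-EFFORT": 10}))
```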

This is one example of a potential 5G CoS model. Traffic patterns differ considerably between operators, and no single model works perfectly for all situations. Adapt the design to fit your use case.

One goal of the validation is to create a model that realizes the O-RAN multiple PQ structure (transport core profile-B) with TSN Profile A. In this model, PQs can preempt other PQs, and LLQ supports distinct dequeuing prioritization.

The following scheduler configuration is based on the ACX7000 family. Please contact your Juniper representative for the full configurations.

Schedulers

```
set class-of-service schedulers SC-SIGNALING shaping-rate percent 5
set class-of-service schedulers SC-SIGNALING buffer-size percent 5
set class-of-service schedulers SC-SIGNALING priority strict-high
set class-of-service schedulers SC-LLQ shaping-rate percent 40
set class-of-service schedulers SC-LLQ buffer-size percent 10
set class-of-service schedulers SC-LLQ priority low-latency
set class-of-service schedulers SC-REALTIME shaping-rate percent 30
set class-of-service schedulers SC-REALTIME buffer-size percent 20
set class-of-service schedulers SC-REALTIME priority medium-high
set class-of-service schedulers SC-HIGH transmit-rate percent 40
set class-of-service schedulers SC-HIGH buffer-size percent 30
set class-of-service schedulers SC-HIGH priority low
set class-of-service schedulers SC-CONTROL shaping-rate percent 5
set class-of-service schedulers SC-CONTROL buffer-size percent 5
set class-of-service schedulers SC-CONTROL priority high
set class-of-service schedulers SC-MEDIUM transmit-rate percent 30
set class-of-service schedulers SC-MEDIUM buffer-size percent 20
set class-of-service schedulers SC-MEDIUM priority low
set class-of-service schedulers SC-LOW transmit-rate percent 20
set class-of-service schedulers SC-LOW buffer-size percent 10
set class-of-service schedulers SC-LOW priority low
set class-of-service schedulers SC-BEST-EFFORT transmit-rate remainder
set class-of-service schedulers SC-BEST-EFFORT buffer-size remainder
set class-of-service schedulers SC-BEST-EFFORT priority low
```
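Before you commit a scheduler set like this, it can help to sanity-check the percentages. The following Python sketch parses the scheduler statements and totals them. `percent_totals` is a hypothetical helper, not a Junos tool. For this design, the PQ shaping rates total 80%, the WFQ transmit rates total 90% plus the remainder, and the buffer sizes total 100%, which leaves 0% for the SC-BEST-EFFORT `remainder`.

```python
import re

# Scheduler statements copied from the configuration above.
SCHEDULERS = """
set class-of-service schedulers SC-SIGNALING shaping-rate percent 5
set class-of-service schedulers SC-SIGNALING buffer-size percent 5
set class-of-service schedulers SC-SIGNALING priority strict-high
set class-of-service schedulers SC-LLQ shaping-rate percent 40
set class-of-service schedulers SC-LLQ buffer-size percent 10
set class-of-service schedulers SC-LLQ priority low-latency
set class-of-service schedulers SC-REALTIME shaping-rate percent 30
set class-of-service schedulers SC-REALTIME buffer-size percent 20
set class-of-service schedulers SC-REALTIME priority medium-high
set class-of-service schedulers SC-HIGH transmit-rate percent 40
set class-of-service schedulers SC-HIGH buffer-size percent 30
set class-of-service schedulers SC-HIGH priority low
set class-of-service schedulers SC-CONTROL shaping-rate percent 5
set class-of-service schedulers SC-CONTROL buffer-size percent 5
set class-of-service schedulers SC-CONTROL priority high
set class-of-service schedulers SC-MEDIUM transmit-rate percent 30
set class-of-service schedulers SC-MEDIUM buffer-size percent 20
set class-of-service schedulers SC-MEDIUM priority low
set class-of-service schedulers SC-LOW transmit-rate percent 20
set class-of-service schedulers SC-LOW buffer-size percent 10
set class-of-service schedulers SC-LOW priority low
set class-of-service schedulers SC-BEST-EFFORT transmit-rate remainder
set class-of-service schedulers SC-BEST-EFFORT buffer-size remainder
set class-of-service schedulers SC-BEST-EFFORT priority low
"""

# Captures: scheduler name, knob, and either a percentage or 'remainder'.
STMT = re.compile(
    r"set class-of-service schedulers (\S+) "
    r"(shaping-rate|transmit-rate|buffer-size) (?:percent (\d+)|(remainder))$"
)

def percent_totals(config):
    """Sum percentages per knob and list the schedulers using 'remainder'."""
    totals = {"shaping-rate": 0, "transmit-rate": 0, "buffer-size": 0}
    remainder = {knob: [] for knob in totals}
    for line in config.strip().splitlines():
        m = STMT.match(line.strip())
        if m is None:
            continue  # 'priority' statements carry no percentage
        name, knob, pct, rem = m.groups()
        if rem:
            remainder[knob].append(name)
        else:
            totals[knob] += int(pct)
    return totals, remainder

totals, remainder = percent_totals(SCHEDULERS)
# PQ shaping must leave room for the WFQs; buffers cannot exceed 100%.
assert totals["shaping-rate"] <= 100 and totals["buffer-size"] <= 100
print(totals)
print(remainder)
```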

Scheduler Map

```
set class-of-service scheduler-maps SM-5G-SCHEDULER forwarding-class FC-SIGNALING scheduler SC-SIGNALING
set class-of-service scheduler-maps SM-5G-SCHEDULER forwarding-class FC-LLQ scheduler SC-LLQ
set class-of-service scheduler-maps SM-5G-SCHEDULER forwarding-class FC-REALTIME scheduler SC-REALTIME
set class-of-service scheduler-maps SM-5G-SCHEDULER forwarding-class FC-HIGH scheduler SC-HIGH
set class-of-service scheduler-maps SM-5G-SCHEDULER forwarding-class FC-CONTROL scheduler SC-CONTROL
set class-of-service scheduler-maps SM-5G-SCHEDULER forwarding-class FC-MEDIUM scheduler SC-MEDIUM
set class-of-service scheduler-maps SM-5G-SCHEDULER forwarding-class FC-LOW scheduler SC-LOW
set class-of-service scheduler-maps SM-5G-SCHEDULER forwarding-class FC-BEST-EFFORT scheduler SC-BEST-EFFORT
```

The configuration at this point produces the following port-scheduling hierarchy. The VOQ ID is arbitrary and is assigned from a VOQ pool as part of the bundle association. Note that the priority configured for each queue determines its EGQ assignment.

| Queue Priority | Forwarding Class | Queue | VOQ ID | Egress Queue Assignment |
|---|---|---|---|---|
| Low-Latency | FC-LLQ | 6 | 750 | EGQ1 |
| Strict-High | FC-SIGNALING | 7 | 751 | EGQ2 |
| High | FC-CONTROL | 3 | 747 | EGQ2 |
| Medium-High | FC-REALTIME | 5 | 749 | EGQ2 |
| Low | FC-HIGH | 4 | 748 | EGQ3 |
| Low | FC-MEDIUM | 2 | 746 | EGQ3 |
| Low | FC-LOW | 1 | 745 | EGQ3 |
| Low-Remainder | FC-BEST-EFFORT | 0 | 744 | EGQ3 |

Port Shaping

Queue shaping is explained in the previous section. Port shaping is applied directly to the interface under the CoS hierarchy, and the configured scheduler percentages are then calculated against the shaped port speed. For example, if a 10G shaper is configured on a 100GbE port, the reference port speed becomes 10G. A scheduler with a 50% transmit rate then results in 5Gbps rather than 50Gbps.

Port Shaper

```
set class-of-service interfaces <x> shaping-rate <bps>
```
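The port-shaper example reduces to a one-line calculation, sketched here in Python. `scheduler_rate_gbps` is a hypothetical helper. The `min()` is an assumption added to cover a shaper that is configured above line rate.

```python
def scheduler_rate_gbps(port_gbps, percent, port_shaper_gbps=None):
    """Rate that a percentage-based scheduler resolves to, in Gbps."""
    reference = port_gbps
    if port_shaper_gbps is not None:
        # The port shaper replaces the physical speed as the reference.
        reference = min(port_gbps, port_shaper_gbps)
    return reference * percent / 100

print(scheduler_rate_gbps(100, 50, port_shaper_gbps=10))  # shaped port
print(scheduler_rate_gbps(100, 50))                      # unshaped port
```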

Rewrite Rules

Rewrite is performed in the egress path based on the protocol match. The following configurations apply to ACX, PTX, and MX platforms. When frames are sent with dual tags, the rewrite operations are performed on the outer tag (S-TAG). It is generally desirable to leave the inner C-TAG 802.1p bits unchanged so they are transmitted transparently.

802.1P Rewrite

```
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-SIGNALING loss-priority low code-point 110
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-SIGNALING loss-priority high code-point 110
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-LLQ loss-priority low code-point 100
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-LLQ loss-priority medium-high code-point 100
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-LLQ loss-priority high code-point 100
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-REALTIME loss-priority low code-point 101
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-REALTIME loss-priority high code-point 101
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-HIGH loss-priority low code-point 011
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-HIGH loss-priority medium-high code-point 011
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-HIGH loss-priority high code-point 011
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-CONTROL loss-priority low code-point 111
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-CONTROL loss-priority high code-point 111
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-MEDIUM loss-priority low code-point 010
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-MEDIUM loss-priority medium-high code-point 010
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-MEDIUM loss-priority high code-point 010
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-LOW loss-priority low code-point 001
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-LOW loss-priority medium-high code-point 001
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-LOW loss-priority high code-point 001
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-BEST-EFFORT loss-priority low code-point 000
set class-of-service rewrite-rules ieee-802.1 RR-8021P forwarding-class FC-BEST-EFFORT loss-priority high code-point 000
```
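In the 16-bit 802.1Q Tag Control Information (TCI), the three PCP bits occupy bits 15-13, DEI is bit 12, and the VLAN ID is bits 11-0. The following Python sketch shows the effect of RR-8021P at the bit level: only the PCP bits of the outer S-TAG change, and the C-TAG passes through untouched. `rewrite_tci` and `rewrite_outer_only` are hypothetical helpers, and tags are modeled as plain integers.

```python
# FC -> 802.1p code point from RR-8021P. The value does not depend on
# loss priority in this rule set, so loss priority is omitted here.
RR_8021P = {
    "FC-BEST-EFFORT": 0b000, "FC-LOW": 0b001, "FC-MEDIUM": 0b010,
    "FC-HIGH": 0b011, "FC-LLQ": 0b100, "FC-REALTIME": 0b101,
    "FC-SIGNALING": 0b110, "FC-CONTROL": 0b111,
}

def rewrite_tci(tci, forwarding_class):
    """Replace the 3-bit PCP of a 16-bit TCI; keep the DEI and VLAN ID bits."""
    return (RR_8021P[forwarding_class] << 13) | (tci & 0x1FFF)

def rewrite_outer_only(tags, forwarding_class):
    """Dual-tagged frame: rewrite the outer S-TAG, pass the C-TAG through."""
    return [rewrite_tci(tags[0], forwarding_class)] + list(tags[1:])

s_tag = (0 << 13) | 100  # PCP 0, VLAN 100
c_tag = (3 << 13) | 200  # PCP 3, VLAN 200
print(rewrite_outer_only([s_tag, c_tag], "FC-REALTIME"))
```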

Exp Rewrite

```
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-SIGNALING loss-priority low code-point 110
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-SIGNALING loss-priority high code-point 110
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-LLQ loss-priority low code-point 100
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-LLQ loss-priority medium-high code-point 100
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-LLQ loss-priority high code-point 100
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-REALTIME loss-priority low code-point 101
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-REALTIME loss-priority high code-point 101
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-HIGH loss-priority low code-point 011
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-HIGH loss-priority medium-high code-point 011
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-HIGH loss-priority high code-point 011
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-CONTROL loss-priority low code-point 111
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-CONTROL loss-priority high code-point 111
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-MEDIUM loss-priority low code-point 010
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-MEDIUM loss-priority medium-high code-point 010
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-MEDIUM loss-priority high code-point 010
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-LOW loss-priority low code-point 001
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-LOW loss-priority medium-high code-point 001
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-LOW loss-priority high code-point 001
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-BEST-EFFORT loss-priority low code-point 000
set class-of-service rewrite-rules exp RR-MPLS forwarding-class FC-BEST-EFFORT loss-priority high code-point 000
```
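A 32-bit MPLS label stack entry carries a 20-bit label, the 3-bit EXP (Traffic Class) field in bits 11-9, the bottom-of-stack bit, and an 8-bit TTL. The following Python sketch shows what RR-MPLS changes within one entry. `make_lse` and `rewrite_exp` are hypothetical helpers. Which labels in the stack the router actually rewrites is platform behavior that this sketch does not model.

```python
# FC -> EXP value from RR-MPLS (identical for every loss priority).
RR_MPLS = {
    "FC-BEST-EFFORT": 0, "FC-LOW": 1, "FC-MEDIUM": 2, "FC-HIGH": 3,
    "FC-LLQ": 4, "FC-REALTIME": 5, "FC-SIGNALING": 6, "FC-CONTROL": 7,
}

EXP_MASK = 0b111 << 9  # EXP/TC occupies bits 11-9 of a label stack entry

def make_lse(label, exp, bottom_of_stack, ttl):
    """Pack label | EXP | S | TTL (20 | 3 | 1 | 8 bits) into 32 bits."""
    return (label << 12) | (exp << 9) | (int(bottom_of_stack) << 8) | ttl

def rewrite_exp(lse, forwarding_class):
    """Overwrite only the EXP bits; the label, S bit, and TTL are untouched."""
    return (lse & ~EXP_MASK) | (RR_MPLS[forwarding_class] << 9)

before = make_lse(label=16001, exp=0, bottom_of_stack=True, ttl=64)
after = rewrite_exp(before, "FC-LLQ")
print(hex(before), "->", hex(after))
```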

DSCP IPv4 Rewrite

```
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-SIGNALING loss-priority low code-point cs6
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-SIGNALING loss-priority high code-point cs5
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-LLQ loss-priority low code-point af41
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-LLQ loss-priority medium-high code-point af42
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-LLQ loss-priority high code-point af43
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-REALTIME loss-priority low code-point ef
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-REALTIME loss-priority high code-point ef
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-HIGH loss-priority low code-point af31
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-HIGH loss-priority medium-high code-point af32
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-HIGH loss-priority high code-point af33
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-CONTROL loss-priority low code-point cs7
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-CONTROL loss-priority high code-point cs7
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-MEDIUM loss-priority low code-point af21
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-MEDIUM loss-priority medium-high code-point af22
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-MEDIUM loss-priority high code-point af23
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-LOW loss-priority low code-point af11
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-LOW loss-priority medium-high code-point af12
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-LOW loss-priority high code-point af13
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-BEST-EFFORT loss-priority low code-point be
set class-of-service rewrite-rules dscp RR-DSCP forwarding-class FC-BEST-EFFORT loss-priority high code-point be
```

DSCP IPv6 Rewrite

```
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-SIGNALING loss-priority low code-point cs6
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-SIGNALING loss-priority high code-point cs5
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-LLQ loss-priority low code-point af41
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-LLQ loss-priority medium-high code-point af42
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-LLQ loss-priority high code-point af43
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-REALTIME loss-priority low code-point ef
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-REALTIME loss-priority high code-point ef
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-HIGH loss-priority low code-point af31
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-HIGH loss-priority medium-high code-point af32
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-HIGH loss-priority high code-point af33
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-CONTROL loss-priority low code-point cs7
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-CONTROL loss-priority high code-point cs7
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-MEDIUM loss-priority low code-point af21
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-MEDIUM loss-priority medium-high code-point af22
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-MEDIUM loss-priority high code-point af23
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-LOW loss-priority low code-point af11
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-LOW loss-priority medium-high code-point af12
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-LOW loss-priority high code-point af13
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-BEST-EFFORT loss-priority low code-point be
set class-of-service rewrite-rules dscp-ipv6 RR-DSCP-IPV6 forwarding-class FC-BEST-EFFORT loss-priority high code-point be
```
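The following Python sketch shows the DSCP rewrite applied to an IPv4 ToS byte (or an IPv6 Traffic Class byte). It assumes that only the six DSCP bits are replaced and that the two ECN bits are preserved. `rewrite_tos` is a hypothetical helper. Comparing RR-DSCP with the CL-DSCP classifier reveals one side effect: a packet received as cs4 is classified into FC-LLQ with low loss priority, so it leaves as af41 on an interface where RR-DSCP is applied.

```python
# Numeric values of the code points used by RR-DSCP / RR-DSCP-IPV6.
DSCP_VALUE = {
    "be": 0, "af11": 10, "af12": 12, "af13": 14, "af21": 18, "af22": 20,
    "af23": 22, "af31": 26, "af32": 28, "af33": 30, "af41": 34, "af42": 36,
    "af43": 38, "cs5": 40, "ef": 46, "cs6": 48, "cs7": 56,
}

# (forwarding class, loss priority) -> code point, copied from RR-DSCP.
RR_DSCP = {
    ("FC-SIGNALING", "low"): "cs6", ("FC-SIGNALING", "high"): "cs5",
    ("FC-LLQ", "low"): "af41", ("FC-LLQ", "medium-high"): "af42",
    ("FC-LLQ", "high"): "af43",
    ("FC-REALTIME", "low"): "ef", ("FC-REALTIME", "high"): "ef",
    ("FC-HIGH", "low"): "af31", ("FC-HIGH", "medium-high"): "af32",
    ("FC-HIGH", "high"): "af33",
    ("FC-CONTROL", "low"): "cs7", ("FC-CONTROL", "high"): "cs7",
    ("FC-MEDIUM", "low"): "af21", ("FC-MEDIUM", "medium-high"): "af22",
    ("FC-MEDIUM", "high"): "af23",
    ("FC-LOW", "low"): "af11", ("FC-LOW", "medium-high"): "af12",
    ("FC-LOW", "high"): "af13",
    ("FC-BEST-EFFORT", "low"): "be", ("FC-BEST-EFFORT", "high"): "be",
}

def rewrite_tos(tos, forwarding_class, loss_priority):
    """Write the rule's DSCP into the upper six bits; keep the ECN bits."""
    dscp = DSCP_VALUE[RR_DSCP[(forwarding_class, loss_priority)]]
    return (dscp << 2) | (tos & 0b11)

# cs4 (32) with ECN 01, classified FC-LLQ/low, leaves as af41 (34).
print(rewrite_tos((32 << 2) | 0b01, "FC-LLQ", "low") >> 2)
```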

Interface Class of Service

Once the CoS parameters are defined, the configuration is applied to the interface itself under the CoS hierarchy. Recall the three styles of classification: Behavior Aggregate (BA), Fixed, and Multifield. Only the BA and Fixed styles apply under this configuration stanza. BA classification matches on the received Layer 2 802.1p bits, MPLS EXP bits, and/or Layer 3 DSCP. Fixed classification maps all traffic received on the interface to a single forwarding class. Wildcard match conditions are used for interfaces and units where applicable. Starting with Junos OS Evolved Release 23.2R1, ACX7000 platforms allow multiple classifiers and rewrite rules on the same interface.

Scheduler Map

```
set class-of-service interfaces <ifd> scheduler-map SM-5G-SCHEDULER
```

BA Classifier

```
set class-of-service interfaces <ifd> unit <ifl> classifiers exp CL-MPLS
set class-of-service interfaces <ifd> unit <ifl> classifiers dscp CL-DSCP
set class-of-service interfaces <ifd> unit <ifl> classifiers ieee-802.1 CL-8021P
```

Fixed Classifier

```
set class-of-service interfaces <ifd> forwarding-class <fc name>
```

Rewrite Rules

```
set class-of-service interfaces <ifd> unit <ifl> rewrite-rules exp RR-MPLS
set class-of-service interfaces <ifd> unit <ifl> rewrite-rules dscp RR-DSCP
set class-of-service interfaces <ifd> unit <ifl> rewrite-rules ieee-802.1 RR-8021P
```
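When the same profile is applied to many ports, the statements can be generated rather than typed by hand. The following Python sketch emits the scheduler-map, BA classifier, and rewrite-rule statements listed above for one interface and unit. `interface_cos_commands` and the interface names `et-0/0/0` and `et-0/0/1` are hypothetical.

```python
def interface_cos_commands(ifd, unit):
    """Return the interface-level CoS 'set' statements for one IFD/unit."""
    ifd_base = f"set class-of-service interfaces {ifd}"
    unit_base = f"{ifd_base} unit {unit}"
    return [
        # The scheduler map is attached to the physical interface.
        f"{ifd_base} scheduler-map SM-5G-SCHEDULER",
        # BA classifiers and rewrite rules are attached per logical unit.
        f"{unit_base} classifiers exp CL-MPLS",
        f"{unit_base} classifiers dscp CL-DSCP",
        f"{unit_base} classifiers ieee-802.1 CL-8021P",
        f"{unit_base} rewrite-rules exp RR-MPLS",
        f"{unit_base} rewrite-rules dscp RR-DSCP",
        f"{unit_base} rewrite-rules ieee-802.1 RR-8021P",
    ]

for port in ["et-0/0/0", "et-0/0/1"]:  # hypothetical interface names
    print("\n".join(interface_cos_commands(port, 0)))
```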

Host Outbound Traffic

ACX7000 series platforms support host-outbound classification from Junos OS Evolved Release 23.3R1.

```
set class-of-service host-outbound-traffic forwarding-class <FC-NAME>
```

Buffer Management

ACX7000 series platforms use a VOQ ingress buffer mechanism based on guaranteed and dynamic buffers. Dynamic buffers are elastic and use the Fair Adaptive Dynamic Threshold (FADT) algorithm to manage the shared buffer pool.

When the default CoS configuration is used, ACX7000 platforms allocate the buffer as follows:

| Queue | Buffer |
|---|---|
| 0 | 95% |
| 3 | 5% |
| Other queues | Minimum\* |

\* Based on port speed.

The minimum buffer that can be allocated is based on the port speed:

- 10G = 2048

- 25G = 4096

- 40G = 4096

- 50G = 4096

- 100G = 8192

Buffer usage can be monitored primarily with these commands:

- show interface voq (from CLI)

- show cos voq buffer-occupancy ifd (from VTY)

Buffer Allocation

The CoS buffer configuration outlined in the Scheduling section proposes the following buffer allocation per queue for the ACX7000 platform.

set class-of-service schedulers SC-SIGNALING buffer-size percent 5 set class-of-service schedulers SC-LLQ buffer-size percent 10 set class-of-service schedulers SC-REALTIME buffer-size percent 20 set class-of-service schedulers SC-HIGH buffer-size percent 30 set class-of-service schedulers SC-CONTROL buffer-size percent 5 set class-of-service schedulers SC-MEDIUM buffer-size percent 20 set class-of-service schedulers SC-LOW buffer-size percent 10 set class-of-service schedulers SC-BEST-EFFORT buffer-size remainder |

The following table shows the buffer allocation calculated for each queue used in the validation. The example uses a 100GbE port with 1250KB of dedicated buffer.

| Queue | Rate | Buffer Size | Priority | Calculation | Buffer (bytes) |
|---|---|---|---|---|---|
| Q7 | 5% shaping rate | 5% | strict-high | 1250000 × 0.05 | 62500 |
| Q6 | 40% shaping rate | 10% | low-latency | 1250000 × 0.1 | 125000 |
| Q5 | 30% shaping rate | 20% | medium-high | 1250000 × 0.2 | 250000 |
| Q4 | 40% transmit rate | 30% | low | 1250000 × 0.3 | 375000 |
| Q3 | 5% shaping rate | 5% | high | 1250000 × 0.05 | 62500 |
| Q2 | 30% transmit rate | 20% | low | 1250000 × 0.2 | 250000 |
| Q1 | 20% transmit rate | 10% | low | 1250000 × 0.1 | 125000 |
| Q0 | remainder | remainder | low | 1250000 × 0 | 8192\* |

\* The other queues already total 100% (5 + 10 + 20 + 30 + 5 + 20 + 10), so the remaining buffer is 0% and the minimum buffer for a 100GbE port is programmed.
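The per-queue figures in the table can be reproduced with a short Python sketch. `queue_buffers` is a hypothetical helper. It treats the dedicated buffer and the minimum-buffer values as bytes, consistent with the Q0 row. Applying the port-speed minimum as a floor to every queue is our reading of "the minimum buffer that can be allocated" and is an assumption.

```python
# Minimum buffer per port speed (Gbps) from the list above.
MIN_BUFFER = {10: 2048, 25: 4096, 40: 4096, 50: 4096, 100: 8192}

# buffer-size percentages from the validated schedulers, by queue number.
BUFFER_PCT = {
    "Q7": 5, "Q6": 10, "Q5": 20, "Q4": 30,
    "Q3": 5, "Q2": 20, "Q1": 10, "Q0": "remainder",
}

def queue_buffers(dedicated_bytes, port_gbps, pct_by_queue):
    """Per-queue buffer: percentage of the dedicated buffer, floored."""
    fixed = sum(p for p in pct_by_queue.values() if p != "remainder")
    floor = MIN_BUFFER[port_gbps]  # assumed to apply to every queue
    result = {}
    for queue, pct in pct_by_queue.items():
        share = 100 - fixed if pct == "remainder" else pct
        result[queue] = max(dedicated_bytes * share // 100, floor)
    return result

print(queue_buffers(1_250_000, 100, BUFFER_PCT))
```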

Test Bed Configuration

Contact your Juniper account representative to obtain the full archive of the test bed configuration used for this JVD.