VAST Storage Configuration

The VAST Data Platform is a modern, all-flash storage solution designed to support AI, machine learning (ML), and high-performance computing (HPC) workloads at any scale. By leveraging a Disaggregated and Shared-Everything (DASE) architecture, VAST eliminates traditional storage bottlenecks, delivering high throughput, low latency, and simplified data management.

By integrating VAST Data into the AI JVD design, we ensure that AI workloads have the performance, scalability, and resilience necessary to drive next-generation innovation. We selected the VAST Data Platform as part of the AI JVD design due to the following advantages:

- High Performance: VAST Data's architecture uses all-flash storage for fast data access, making it well suited to AI/ML workloads, real-time analytics, and high-performance computing (HPC) environments.

- Scalability: With its disaggregated, shared-everything (DASE) architecture, VAST Data scales from terabytes to exabytes without sacrificing performance. This design overcomes the limitations of traditional storage solutions and allows enterprises to grow efficiently.

- Unified Storage: VAST Data provides a single platform supporting multiple storage protocols, including NFS, S3, and SMB. Its ability to support both file and object storage offers flexibility in managing diverse data sets.

- Data Resilience: With advanced erasure coding and a fault-tolerant architecture, VAST Data ensures data protection and high availability, even in the event of hardware failures.

- Ease of Management: The platform features a streamlined management experience, offering a single global namespace and real-time system monitoring for simplified operations and scalability.

- Support for GPUs: VAST Data's architecture is optimized for GPU-driven workloads, making it a good fit for environments focused on AI, machine learning, and other compute-intensive applications.

- Low Latency: The flash-optimized design provides ultra-low-latency data access, essential for real-time data processing and analytics tasks.

The VAST Data Platform empowers enterprises to unlock the full potential of AI-driven insights while maintaining a highly resilient, scalable, and manageable data storage environment.

VAST storage cluster components in the AI JVD lab

In VAST Data's architecture, a Quad Server Chassis or CBox (Compute Box) is a 2U high-density chassis that houses four dual-Xeon servers, each running VAST Server Containers. These servers, known as C-nodes, handle the compute services within a VAST cluster. The CBox works in conjunction with the DBox (Data Box), which provides the storage component of the system. DBoxes and CBoxes serve distinct roles:

- DBox: Provides storage capacity, housing NVMe SSDs that store data.

- CBox: Offers computational resources, running VAST's software services and delivering the processing power needed to manage and access the stored data efficiently.

Management of both DBoxes and CBoxes is centralized through VAST's management system, which provides a unified interface for configuring, monitoring, and maintaining the entire storage cluster.

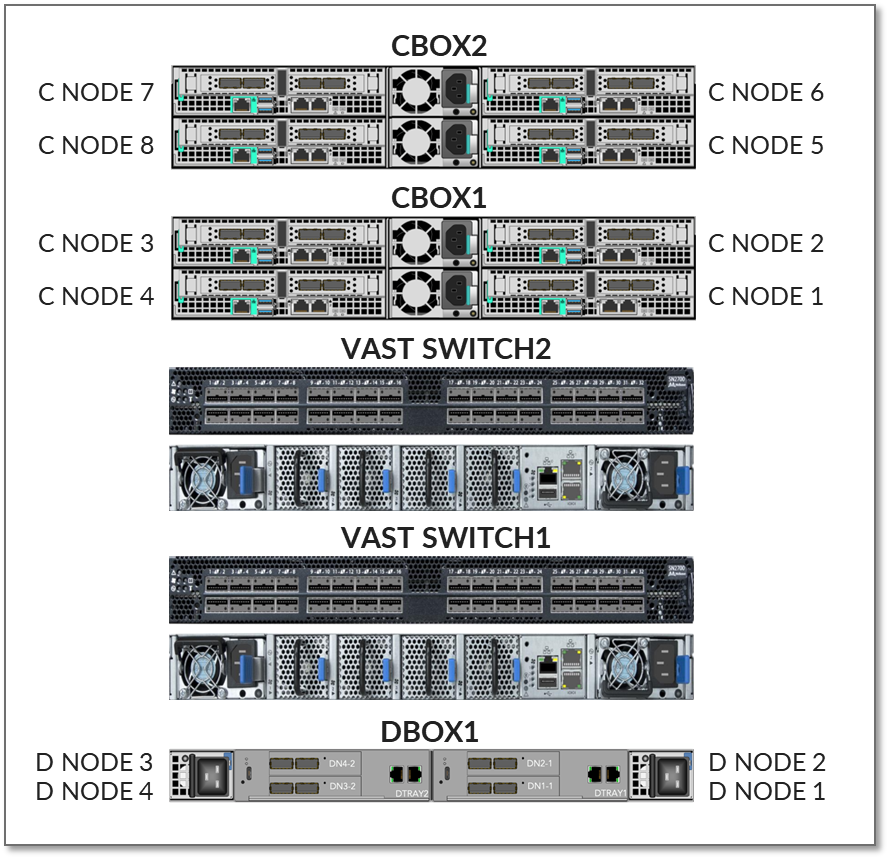

The tested VAST solution for this JVD occupies 7 rack units (7U) and includes:

- 2 x CBox (QUAD-4N-IL-2NIC), 4-slot modular chassis
  - Houses 8 C-nodes in total (4 C-nodes per CBox)
  - Each C-node has:
    - 2 x 100GE NICs
    - 1 x Ice Lake Gen4

- 1 x Ceres DBox (DF-3015), 4-slot modular chassis
  - Houses 4 D-nodes
  - Each D-node has 2 x 100GE NICs
  - Houses 22 NVMe SSDs (15.363 TB each), for a total of 338 TB of raw storage and 240 TB of usable storage

- 2 x internal Mellanox switches (SN2100) for the VAST NVMe fabric

Figure 56: 2 x 1 VAST solution
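The raw capacity figure follows directly from the SSD count and per-drive size listed for the DBox. The short Python sketch below reproduces that arithmetic. The usable share it prints is only the quotient of the two quoted figures (240 TB and 338 TB), not a statement about how VAST lays out erasure coding or reserves space.

```python
# Capacity arithmetic for the tested configuration (figures taken from the component list).
SSD_COUNT = 22            # NVMe SSDs in the Ceres DBox
SSD_CAPACITY_TB = 15.363  # capacity of each SSD in TB
USABLE_TB = 240           # usable capacity quoted for this configuration

raw_tb = SSD_COUNT * SSD_CAPACITY_TB
usable_ratio = USABLE_TB / raw_tb

print(f"raw capacity: {raw_tb:.0f} TB")
print(f"usable share of raw: {usable_ratio:.0%}")
```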

VAST storage cluster components connections and management

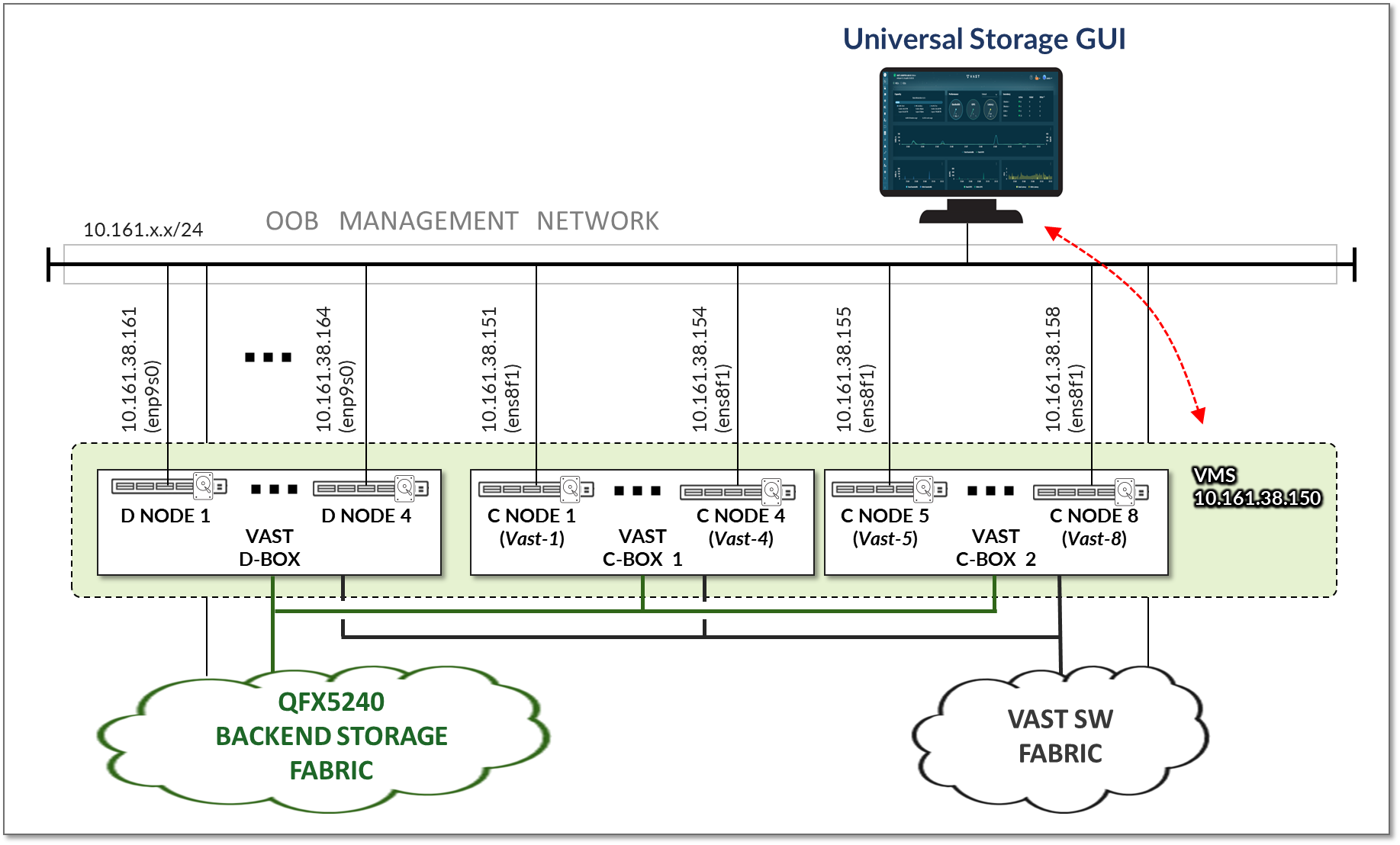

The VAST storage cluster is managed by the VAST Management Service (VMS), the control and orchestration layer that oversees C-Nodes, D-Nodes, networking, security, monitoring, and overall system operations.

While it is possible to interact with VMS via the CLI, VAST also offers a web-based UI (VAST Universal Storage GUI) that provides an intuitive way to configure, manage, and maintain the cluster. This is a key differentiator from traditional storage systems, which are often CLI-driven.

All VMS management functions (from creating NFS exports to expanding the cluster) are exposed via a RESTful API. To simplify integration and usage, VMS publishes its API via Swagger, allowing for interactive documentation and code generation across different programming languages. Instead of directly controlling the system, both the GUI and CLI act as frontends consuming the RESTful API.
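As a rough illustration of what consuming the RESTful API can look like, the Python sketch below builds (but does not send) an authenticated GET request. The URL layout (`/api/<resource>/`), the use of HTTP basic authentication, the `cnodes` resource name, the management IP, and the credentials are all assumptions made for this example. The Swagger documentation published by VMS is the authoritative source for the actual endpoints and authentication scheme.

```python
import base64
import urllib.request


def build_vms_request(vms_ip: str, resource: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for a VMS REST resource."""
    # Assumption: resources are exposed under /api/<resource>/ and accept HTTP basic auth.
    url = f"https://{vms_ip}/api/{resource}/"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
        method="GET",
    )


# Hypothetical management IP, resource name, and credentials, for illustration only.
req = build_vms_request("192.0.2.10", "cnodes", "admin", "example-password")
print(req.get_method(), req.full_url)
```

Passing the resulting object to `urllib.request.urlopen` would issue the call. That step is left out here because it depends on the cluster's TLS certificate and credentials.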

VMS runs on the C-Nodes, and the Universal Storage GUI is accessible from any web browser via a floating management IP assigned to the cluster, as shown in Figure 57.

Figure 57: VAST Universal Storage GUI connection

As also shown in Figure 57, all the cluster components are connected to the OOB management network, and the VMS IP address is assigned out of the same IP address range.

For more details about the VAST storage solution, see this whitepaper from VAST: The VAST Data Platform

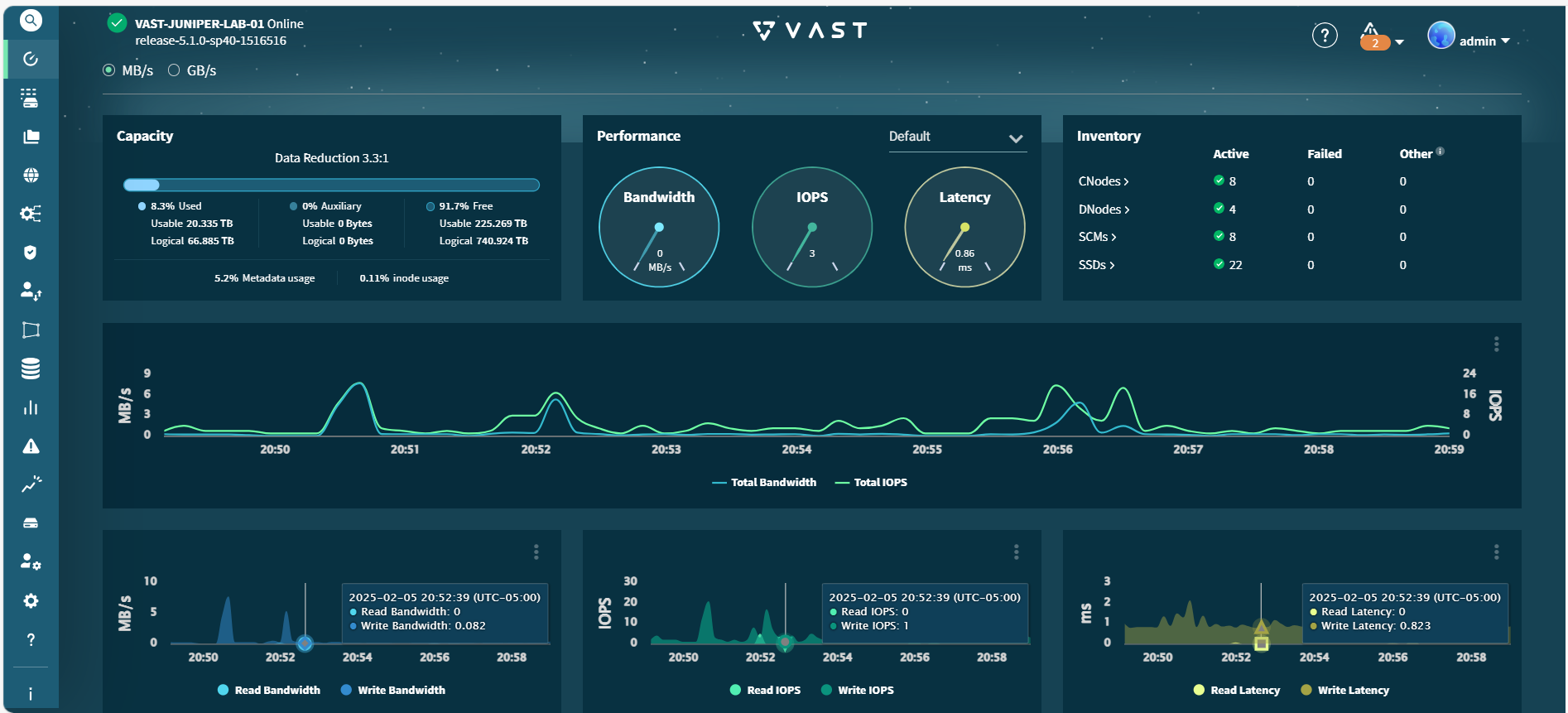

VAST Universal Storage GUI

The GUI dashboard displays capacity, physical and logical usage, overall performance, read/write bandwidth, IOPS, and overall latency in graphical form, as shown in Figure 58.

Figure 58: VAST Universal Storage GUI Dashboard

Under the Infrastructure tab, you can check the configuration and status of all the cluster components, including CBoxes and C-nodes, DBoxes and D-nodes, SSDs, NICs, and so on.

Figure 59: VAST Universal Storage GUI Infrastructure details

For more details about using the GUI, see the VAST Cluster 5.1 Administrator's Guide.

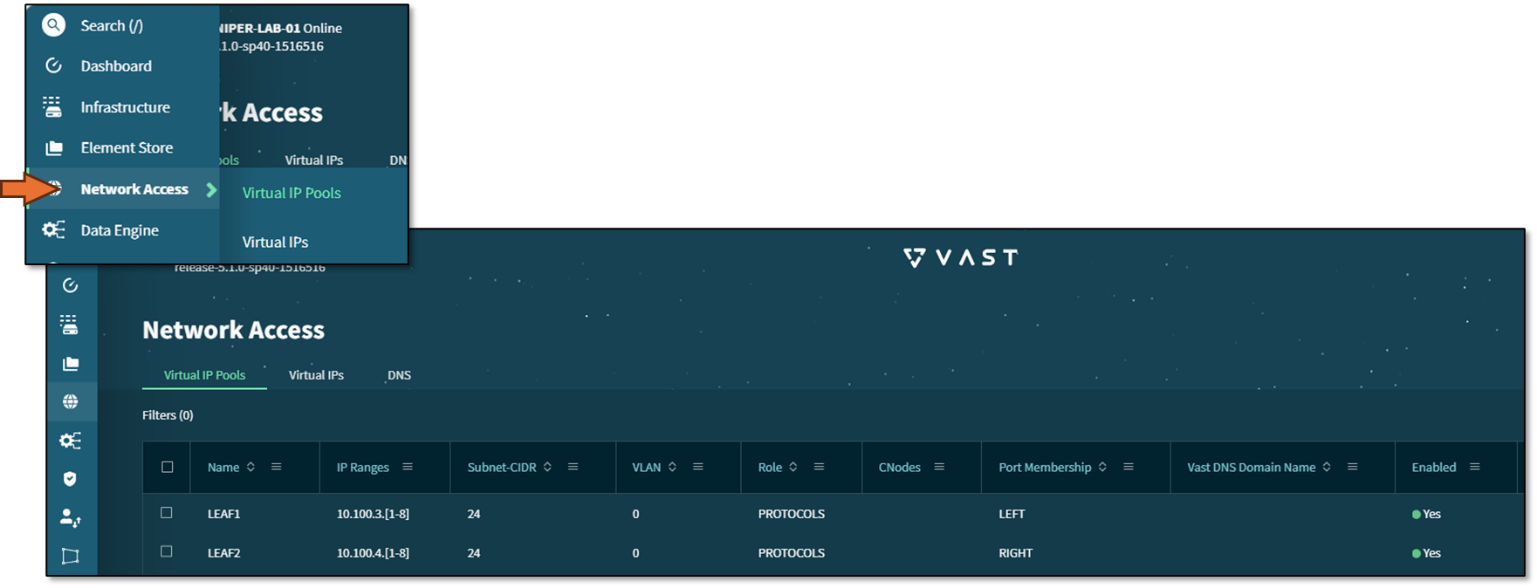

Network Configuration for the Juniper VAST Storage Cluster

As described in the Storage Backend sections, the VAST servers are dual-homed and connected to separate storage backend switches (storage-leaf 5 and storage-leaf 6) using 100GE ports.

Figure 60: VAST to Storage Fabric Connectivity


The storage leaf nodes are configured with IRB interfaces (irb.3 and irb.4), which have IP addresses 10.100.3.254 and 10.100.4.254, respectively.

The C-Nodes dynamically receive IP addresses from the Virtual IP (VIP) pool and take responsibility for responding to ARP requests for their assigned VIPs.

The system automatically rebalances VIPs and load distribution whenever a C-Node fails, is removed, or a new node is added.

- If a C-Node goes offline due to failure or intentional removal, the VIPs it was serving are redistributed across the remaining C-Nodes.

- If a new C-Node is added, some VIPs are reassigned to integrate the node into the load-balancing mechanism.

To ensure optimal load balancing and fault tolerance, VAST recommends configuring each VIP pool with 2 to 4 times as many VIPs as there are C-Nodes. This ensures that in the event of a node failure, its load is distributed across multiple remaining C-Nodes rather than overburdening just one.
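The sizing rule and its effect on failover can be sketched in a few lines of Python. The round-robin placement and least-loaded reassignment below are an illustrative policy chosen for this example, not VAST's actual balancing algorithm, and the node and VIP labels are hypothetical.

```python
from collections import Counter


def vip_pool_size_range(cnode_count: int) -> tuple[int, int]:
    """Return the (min, max) VIP count for a pool: 2x to 4x the number of C-Nodes."""
    return 2 * cnode_count, 4 * cnode_count


def assign_vips(vips, cnodes):
    """Place VIPs on C-Nodes round-robin (illustrative policy only)."""
    return {vip: cnodes[i % len(cnodes)] for i, vip in enumerate(vips)}


def redistribute_after_failure(assignment, cnodes, failed):
    """Move the failed node's VIPs to the least-loaded survivors; other VIPs stay put."""
    survivors = [n for n in cnodes if n != failed]
    load = Counter({n: 0 for n in survivors})
    for node in assignment.values():
        if node != failed:
            load[node] += 1
    result = dict(assignment)
    for vip, node in assignment.items():
        if node == failed:
            target = min(survivors, key=lambda n: load[n])
            result[vip] = target
            load[target] += 1
    return result


cnodes = [f"cnode-{i}" for i in range(1, 9)]    # 8 C-Nodes, as in the tested cluster
low, high = vip_pool_size_range(len(cnodes))
vips = [f"vip-{i}" for i in range(1, low + 1)]  # hypothetical VIP labels, pool sized at 2x
before = assign_vips(vips, cnodes)
after = redistribute_after_failure(before, cnodes, "cnode-1")
moved = {vip: after[vip] for vip in vips if before[vip] == "cnode-1"}

print(f"recommended pool size: {low} to {high} VIPs")
print(f"VIPs moved off cnode-1: {moved}")
```

With two VIPs per C-Node, the failed node's VIPs land on two different survivors. With only one VIP per node, a single survivor would absorb the entire load of the failed node.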

VAST NFS:

The lab servers use VAST's NFS kernel module (VAST NFS) for higher performance. The kernel module allows for a 70% to 100% performance improvement.

fstab settings on the AMD GPU server:

10.100.3.1:/default /mnt/vast nfs nconnect=8,remoteports=10.100.3.1-10.100.3.8,rw,noatime,rsize=32768,wsize=32768,nolock,tcp,intr,fsc,nofail 0 0

Documentation about the kernel module and its setup can be found here: https://vastnfs.vastdata.com/docs/4.0/Intro.html
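To make the multipath options in the fstab entry above easier to read, the Python sketch below splits the mount options and expands the `remoteports` range into individual addresses. It handles only the single `first-last` range form used in that entry. Other forms that the VAST NFS client may accept are out of scope for this example.

```python
import ipaddress

# The fstab entry from the AMD GPU server, split across lines for readability.
FSTAB_LINE = (
    "10.100.3.1:/default /mnt/vast nfs "
    "nconnect=8,remoteports=10.100.3.1-10.100.3.8,rw,noatime,rsize=32768,"
    "wsize=32768,nolock,tcp,intr,fsc,nofail 0 0"
)


def parse_mount_options(fstab_line: str) -> dict:
    """Split the fourth fstab field into {option: value}; bare flags map to ''."""
    options_field = fstab_line.split()[3]
    parsed = {}
    for opt in options_field.split(","):
        key, _, value = opt.partition("=")
        parsed[key] = value
    return parsed


def expand_remoteports(spec: str) -> list:
    """Expand a 'first-last' IPv4 range into the individual addresses."""
    first, last = (ipaddress.IPv4Address(a) for a in spec.split("-"))
    return [str(ipaddress.IPv4Address(i)) for i in range(int(first), int(last) + 1)]


opts = parse_mount_options(FSTAB_LINE)
targets = expand_remoteports(opts["remoteports"])

print(f"nconnect={opts['nconnect']}, remote addresses={len(targets)}")
print(targets)
```

For this entry, the mount opens eight connections (`nconnect=8`) and the `remoteports` range covers eight addresses, 10.100.3.1 through 10.100.3.8.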

Common Settings and Recommendations

When you order a VAST system, you are asked to complete an initial site survey with IP addresses and other details so that VAST support can configure the system accordingly. The VAST GUI comes installed and ready to use.

We recommend the following:

- Set the MTU to 9000

- Install the VAST NFS kernel module on the servers

- Enable multipathing in the server fstab settings (the nconnect and remoteports mount options)

- Configure the VIP pools with 2 to 4 times as many VIPs as there are C-Nodes

Figure 61: Storage Interface Connectivity