APPENDIX: ERB Fabric Verification (Optional)

This chapter is optional and can be skipped. It shows more of the internal details of how the fabric works.

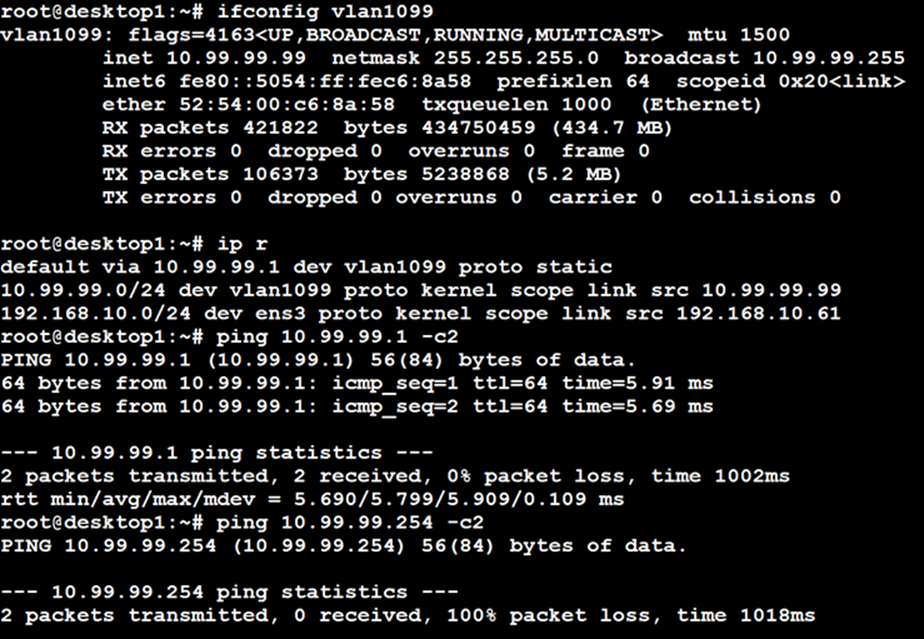

This section verifies the Campus Fabric Core-Distribution ERB deployment shown in Figure 1. Two desktops are currently available to validate the fabric. Let’s take a quick look to see if Desktop1 can connect internally and externally.

Validation steps:

- Confirmed that the local IP address, VLAN, and default gateway are configured on Desktop1.

- The ping to the default gateway succeeded, which indicates that we can reach the distribution switch.

- The ping to the WAN router (10.99.99.254) failed, so we need to troubleshoot.

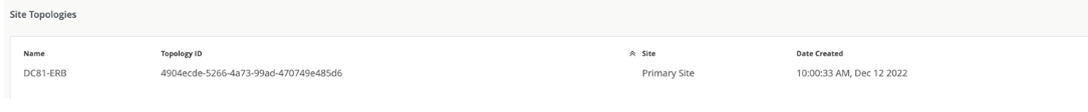

Start by validating the campus fabric in the portal by selecting the Campus Fabric option under the Organization tab on the left side of the portal.

This view supports remote shell access to each device in the campus fabric and provides a visual representation of the following:

- BGP peering establishment.

- Transmit and receive traffic on a link-by-link basis.

- Telemetry, such as LLDP, from each device that verifies the physical build.
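The physical build can also be checked by hand from a Remote Shell session. This is a minimal sketch, assuming the switches run Junos OS; the `user@Dist1>` prompt is illustrative and not taken from the original.

```text
# Illustrative prompt; run from Remote Shell or SSH on the switch.
user@Dist1> show lldp neighbors
```

Each neighbor listed should match the device and port expected from the fabric diagram.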

BGP Underlay

Purpose

Verifying the state of eBGP between the core and distribution layers is essential for EVPN-VXLAN to operate as expected. This network of point-to-point links between each layer supports:

- Load balancing using ECMP for greater resiliency and bandwidth efficiencies.

- BFD to decrease convergence times during failures.

- Loopback reachability to support VXLAN tunnelling.

Rather than verifying every layer, we can focus on Dist1 and Dist2 and their eBGP relationships with Core1 and Core2. If both distribution switches have established eBGP peering sessions with both core switches, you can move to the next phase of verification.

Because loopback IP addresses are assigned automatically, note the following assignments for this fabric:

| Switch Type | Switch Name | Auto-assigned Loopback IP |
|---|---|---|
| Core | Core1 | 172.16.254.2 |
| Core | Core2 | 172.16.254.1 |
| Distribution | Dist1 | 172.16.254.3 |
| Distribution | Dist2 | 172.16.254.4 |
| Access | Access1 | N/A |
| Access | Access2 | N/A |

Action

Verify that BGP sessions are established between core devices and distribution devices to ensure loopback reachability, BFD session status, and load-balancing using ECMP.

Operational data can be gathered using Remote Shell in the campus fabric section of the portal or using an external application such as SecureCRT or PuTTY.

Verification of BGP Peering

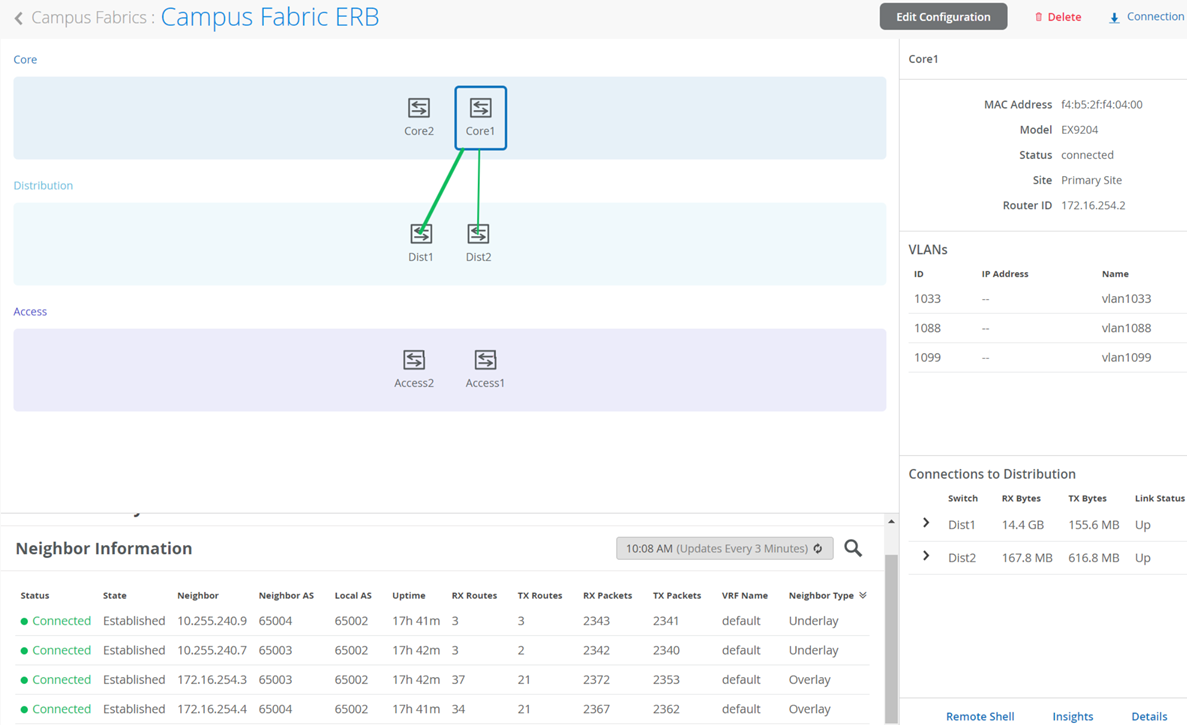

Dist1:

Access the Remote Shell via the lower-right of the campus fabric, from the switch view, or via Secure Shell (SSH).

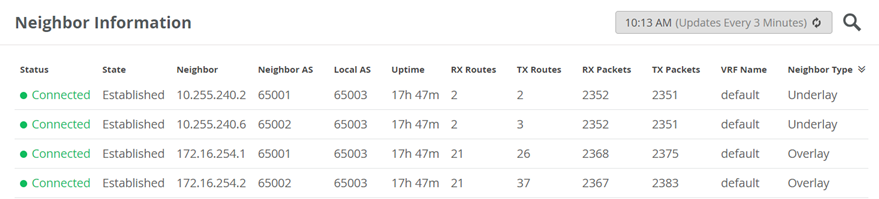

From the BGP summary, we can see that the underlay (10.255.240.x) peer relationships are established, which indicates that the underlay links are attached to the correct devices and the links are up.

It also shows that the overlay (172.16.254.x) relationships are established and that the switch is peering with the correct loopback addresses. This demonstrates underlay loopback reachability.

The routes received and the time established are also roughly equal across peers, which looks good so far.
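A summary like this can be pulled from the CLI. The following is a minimal sketch, assuming Junos OS; the prompt is illustrative.

```text
# Illustrative prompt; run on Dist1 from Remote Shell or SSH.
user@Dist1> show bgp summary
```

On Dist1, the output should list the underlay peers in the 10.255.240.x range and the overlay peers at the core loopbacks (172.16.254.1 and 172.16.254.2). In Junos, an established peer shows route counts in the last column, while a peer that is not established shows a state such as Active, Connect, or Idle.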

The campus fabric view in the portal shows the real-time BGP peering status of each device, shown below for Dist1:

If BGP is not established, go back and validate the underlay links and addressing, and check that the loopback addresses are correct. Each loopback address should be pingable from the other loopback addresses.

Verification of BGP connections can be performed on any of the other switches (not shown).

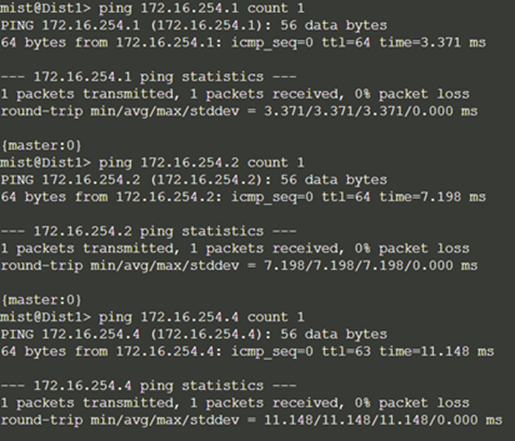

The primary goal of eBGP in the underlay is to provide loopback reachability between core and distribution devices in the campus fabric. This loopback is used to terminate VXLAN tunnels between devices. The following shows loopback reachability from Dist1 to all devices in the campus fabric:

eBGP sessions are established between the core and distribution layers in the campus fabric. Loopback reachability has also been verified between core and distribution devices.
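Loopback reachability from Dist1 can also be checked by hand with pings sourced from its own loopback. This is a minimal sketch, assuming Junos OS and the auto-assigned loopback addresses listed earlier; the prompt is illustrative.

```text
# Source is Dist1's loopback (172.16.254.3); targets are Core1, Core2, and Dist2.
user@Dist1> ping 172.16.254.2 source 172.16.254.3 count 3
user@Dist1> ping 172.16.254.1 source 172.16.254.3 count 3
user@Dist1> ping 172.16.254.4 source 172.16.254.3 count 3
```

Sourcing the ping from the loopback matters because VXLAN tunnels terminate on the loopbacks, so this tests the same path the tunnels use.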

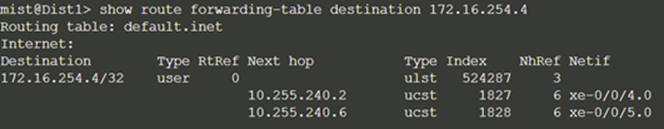

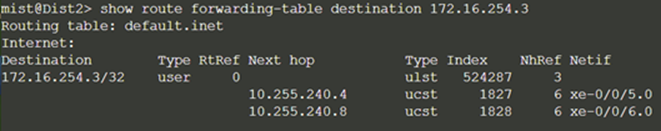

Let’s verify that routes to the core and other devices are established across multiple paths. For example, Dist1 should use both paths, through Core1 and Core2, to reach Dist2, and vice versa.

Dist1: ECMP Loopback reachability to Dist2 through Core1/2

Dist2: ECMP Loopback reachability with Dist1 through Core1/2

This can be repeated for Core1 and 2 to verify ECMP load balancing.
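One way to inspect the ECMP paths from the CLI is to look up the remote loopback in both the routing table and the forwarding table. This is a minimal sketch, assuming Junos OS; the prompt is illustrative and the address is Dist2's loopback from the table earlier.

```text
# Run on Dist1; 172.16.254.4 is Dist2's loopback.
user@Dist1> show route 172.16.254.4
user@Dist1> show route forwarding-table destination 172.16.254.4
```

With ECMP in effect, the forwarding-table entry for Dist2's loopback should resolve to two next hops, one through each core switch.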

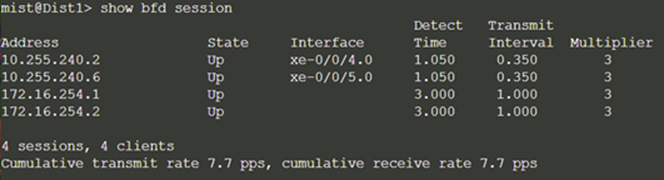

Finally, we validate BFD for fast convergence in the case of a link or device failure:
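BFD state can be checked from the CLI with the following minimal sketch, assuming Junos OS; the prompt is illustrative.

```text
# Illustrative prompt; run on Dist1.
user@Dist1> show bfd session
```

Each BGP neighbor protected by BFD should appear with a state of Up.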

Conclusion: At this point, we have verified that the BGP underlay and overlay are operational, with eBGP established between the corresponding layers of the campus fabric and loopback routes in place between the core and distribution layers.

EVPN VXLAN Verification Between Core and Distribution Switches

Since the desktop can ping its default gateway, we can assume that the Ethernet switching tables are correctly populated and that the VLAN and interface modes are correct. Had the ping to the default gateway failed, the next step would be to troubleshoot underlay connectivity.

Verification of the EVPN Database on Both Core Switches

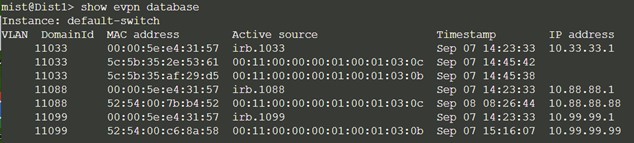

Dist1:

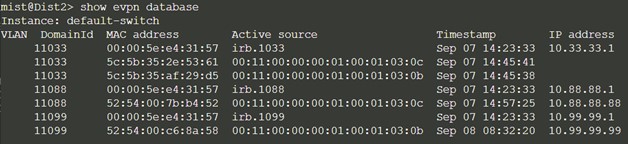

Dist2:

Both core switches have identical EVPN databases, which is expected. Notice the entries for Desktop1 (10.99.99.99) and Desktop2 (10.88.88.88) present on each core switch. These entries are learned through the campus fabric from the ESI-LAGs off Dist1 and Dist2.
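The EVPN database can be inspected from the CLI of the switch being checked. This is a minimal sketch, assuming Junos OS; the `user@switch>` prompt is a placeholder for whichever device is under inspection.

```text
# Placeholder prompt; the second command filters for Desktop1's address.
user@switch> show evpn database
user@switch> show evpn database | match 10.99.99.99
```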

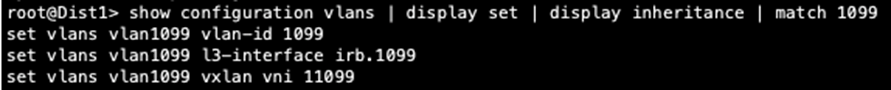

The 10.99.99.99 IP address is associated with irb.1099, and we see a VNI of 11099. Let's double-check the VLAN-to-VNI mapping on the distribution and core switches and verify the presence of L3 on the distribution switches.
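The mapping and the L3 interface can be checked from the CLI. This is a minimal sketch, assuming Junos OS; the prompt is a placeholder, and `display inheritance` is included on the assumption that the VLANs may be applied through configuration groups.

```text
# Placeholder prompt; run on each distribution and core switch.
user@switch> show configuration vlans | display inheritance
user@switch> show interfaces irb terse
```

On a switch that carries the VLAN, the VLAN stanza should include a `vni` statement under `vxlan`, and on the distribution switches irb.1099 should be listed as up.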

Distribution:

Core:

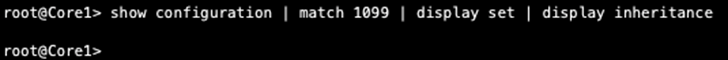

We now know that there may be an issue with the configuration or status of the core switches. The VLAN configuration stanza does not show 1099, which points to a lack of configuration on the core devices. We still have control plane output that displays both desktops’ IP and MAC addresses. Let’s keep troubleshooting.

Verification of VXLAN Tunnelling Between Distribution and Core Switches

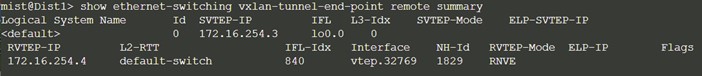

Dist1:

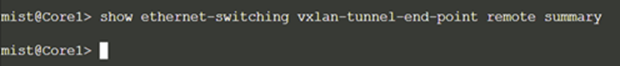

Core1:

The distribution switches still do not display Core1 and Core2 as remote tunnel destinations. The reason is that we have not yet configured the uplinks to the WAN router at the top of the fabric, so neither core switch has any information about vlan1099.
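Remote tunnel endpoints can be listed from the CLI. This is a minimal sketch, assuming Junos OS on a platform that uses the `ethernet-switching` command hierarchy; the prompt is illustrative.

```text
# Illustrative prompt; lists the remote VTEPs known to Dist1.
user@Dist1> show ethernet-switching vxlan-tunnel-end-point remote
```

Each remote VTEP is identified by its loopback address, so the output can be compared against the loopback table earlier in this chapter.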

We need to configure the attachment of the WAN router to complete the entire design. Without the WAN router configuration, the fabric only allows the following communications:

- The same VLAN/VNI on the same access switch but different ports.

- The same VLAN/VNI on different access switches.

- Different VLAN/VNI attached to the same VRF on the same access switch, but different ports.

- Different VLAN/VNI attached to the same VRF on different access switches.

Traffic between VRFs is always isolated inside the fabric. For security reasons, route leaking between VRFs cannot be configured. This means that traffic within a VRF is handled directly inside the fabric, while traffic between VRFs must traverse the WAN router, which can serve as an enforcement point.