Bridged Overlay Design and Implementation

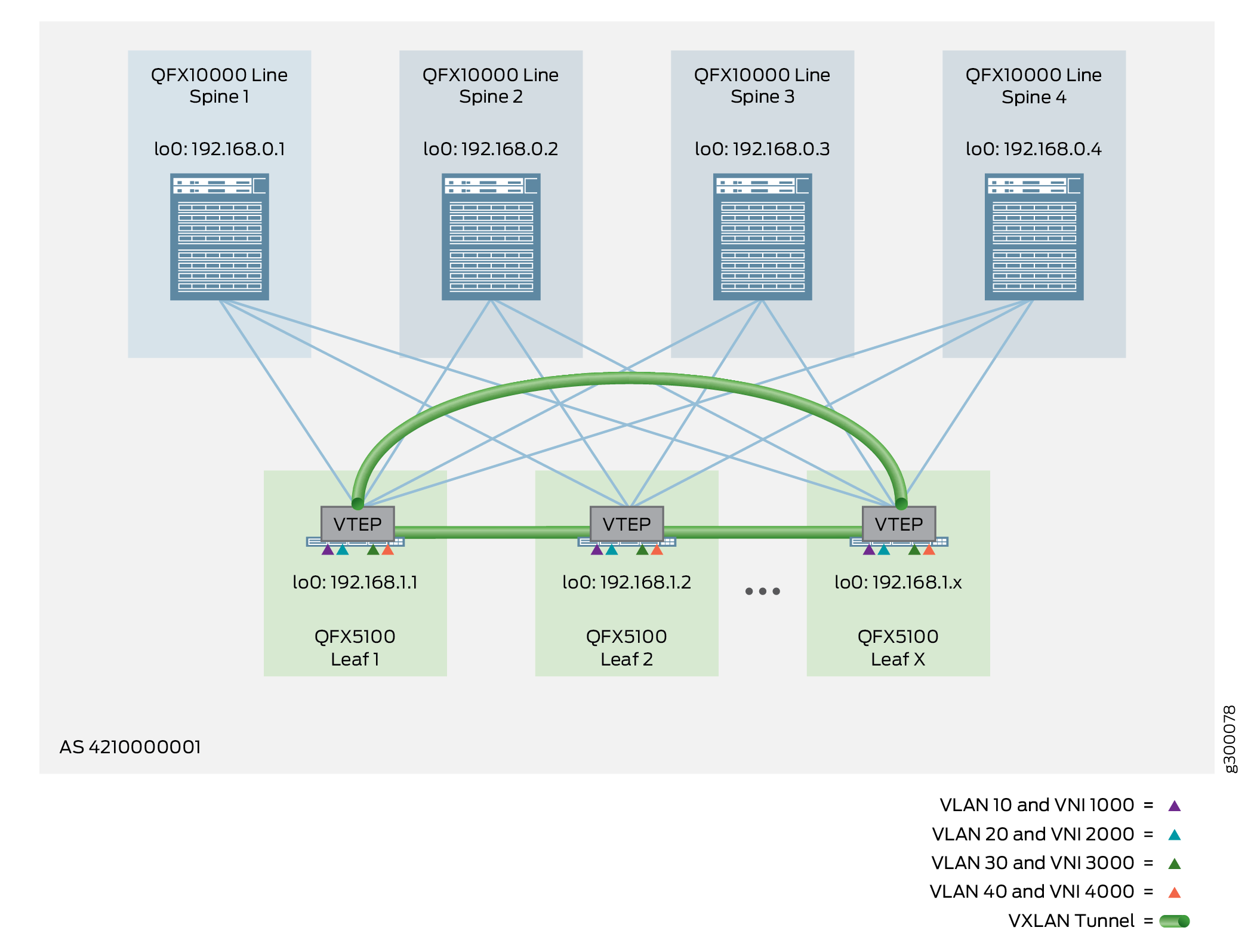

A bridged overlay provides Ethernet bridging between leaf devices in an EVPN network, as shown in Figure 1. This overlay type simply extends VLANs between the leaf devices across VXLAN tunnels. Bridged overlays provide an entry-level overlay style for data center networks that require Ethernet connectivity but do not need routing services between the VLANs.

In this example, loopback interfaces on the leaf devices act as VXLAN tunnel endpoints (VTEPs). The tunnels enable the leaf devices to send VLAN traffic to other leaf devices and Ethernet-connected end systems in the data center. The spine devices only provide basic EBGP underlay and IBGP overlay connectivity for these leaf-to-leaf VXLAN tunnels.

If inter-VLAN routing is required for a bridged overlay, you can use an MX Series router or SRX Series security device that is external to the EVPN/VXLAN fabric. Otherwise, you can select one of the other overlay types that incorporate routing (such as an edge-routed bridging overlay, a centrally-routed bridging overlay, or a routed overlay) discussed in this Cloud Data Center Architecture Guide.

The following sections provide the detailed steps of how to configure a bridged overlay:

Configuring a Bridged Overlay

Bridged overlays are supported on all platforms included in this reference design. To configure a bridged overlay, you configure VNIs, VLANs, and VTEPs on the leaf devices, and BGP on the spine devices. We support either an IPv4 Fabric or an IPv6 Fabric (with supported platforms) as the fabric infrastructure with bridged overlay architectures.

When you implement this style of overlay on a spine device, the focus is on providing overlay transport services between the leaf devices. Consequently, you configure either an IPv4 Fabric underlay with IBGP overlay peering, or an IPv6 Fabric underlay with EBGP IPv6 overlay peering. No VTEPs or IRB interfaces are needed, because the spine device does not provide any routing functionality or EVPN/VXLAN capabilities in a bridged overlay.
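As a rough illustration of the IPv4 case described above, the following sketch shows what IBGP overlay peering on a spine device might look like. It assumes the spine acts as a route reflector for the leaf devices, which this section does not state. The group name, AS number, cluster ID, and all addresses are hypothetical placeholders, not values from this reference design.

```
# Hypothetical overlay AS number shared by spine and leaf devices
set routing-options autonomous-system 4210000001
# IBGP overlay group peering from the spine loopback (placeholder address)
set protocols bgp group OVERLAY type internal
set protocols bgp group OVERLAY local-address 192.168.0.1
# Carry EVPN routes only; no VTEP or IRB configuration on the spine
set protocols bgp group OVERLAY family evpn signaling
# Assumption: the spine reflects EVPN routes between leaf devices
set protocols bgp group OVERLAY cluster 192.168.0.10
# Leaf loopback addresses (placeholders)
set protocols bgp group OVERLAY neighbor 192.168.1.10
set protocols bgp group OVERLAY neighbor 192.168.1.11
```

The sketch contains no `vxlan`, `vtep-source-interface`, or `irb` statements, which matches the spine's transport-only role in a bridged overlay.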

On the leaf devices, you can configure a bridged overlay using the default switch instance or using MAC-VRF instances.

We support EVPN-VXLAN on devices running Junos OS Evolved only with MAC-VRF instance configurations.

In addition, we support the IPv6 Fabric infrastructure design only with MAC-VRF instance configurations.
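A minimal sketch of a MAC-VRF instance on a leaf device might look like the following. The instance name, service type, route distinguisher, route target, interface, and VLAN and VNI values are all hypothetical and chosen only for illustration.

```
# Hypothetical MAC-VRF instance; the service type is an assumption
set routing-instances MAC-VRF-1 instance-type mac-vrf
set routing-instances MAC-VRF-1 service-type vlan-aware
# EVPN with VXLAN encapsulation inside the instance
set routing-instances MAC-VRF-1 protocols evpn encapsulation vxlan
set routing-instances MAC-VRF-1 protocols evpn extended-vni-list all
# Loopback as VTEP source; with an IPv6 Fabric, append "inet6"
set routing-instances MAC-VRF-1 vtep-source-interface lo0.0
# Placeholder route distinguisher and route target
set routing-instances MAC-VRF-1 route-distinguisher 192.168.1.10:1
set routing-instances MAC-VRF-1 vrf-target target:64512:1111
# Access interface and one VLAN-to-VNI mapping (placeholders)
set routing-instances MAC-VRF-1 interface ae11.0
set routing-instances MAC-VRF-1 vlans VNI_1000 vlan-id 100
set routing-instances MAC-VRF-1 vlans VNI_1000 vxlan vni 1000
```

In this form, the EVPN, VTEP, route target, and VLAN statements all sit under the routing instance rather than at the global `protocols`, `switch-options`, and `vlans` levels.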

Some configuration steps that affect the Layer 2 configuration differ with MAC-VRF instances. Likewise, one or two steps might differ for IPv6 Fabric configurations compared to IPv4 Fabric configurations. The leaf device configuration includes the following steps:

Enable EVPN with VXLAN encapsulation to connect to other leaf devices, and configure the loopback interface as a VTEP source interface. If you are using MAC-VRF instances instead of the default switching instance, configure these parameters in a MAC-VRF instance. If your fabric is an IPv6 Fabric, you configure the VTEP source interface as an IPv6 interface.

Establish route targets and route distinguishers. If you are using MAC-VRF instances instead of the default switching instance, configure these parameters in a MAC-VRF instance.

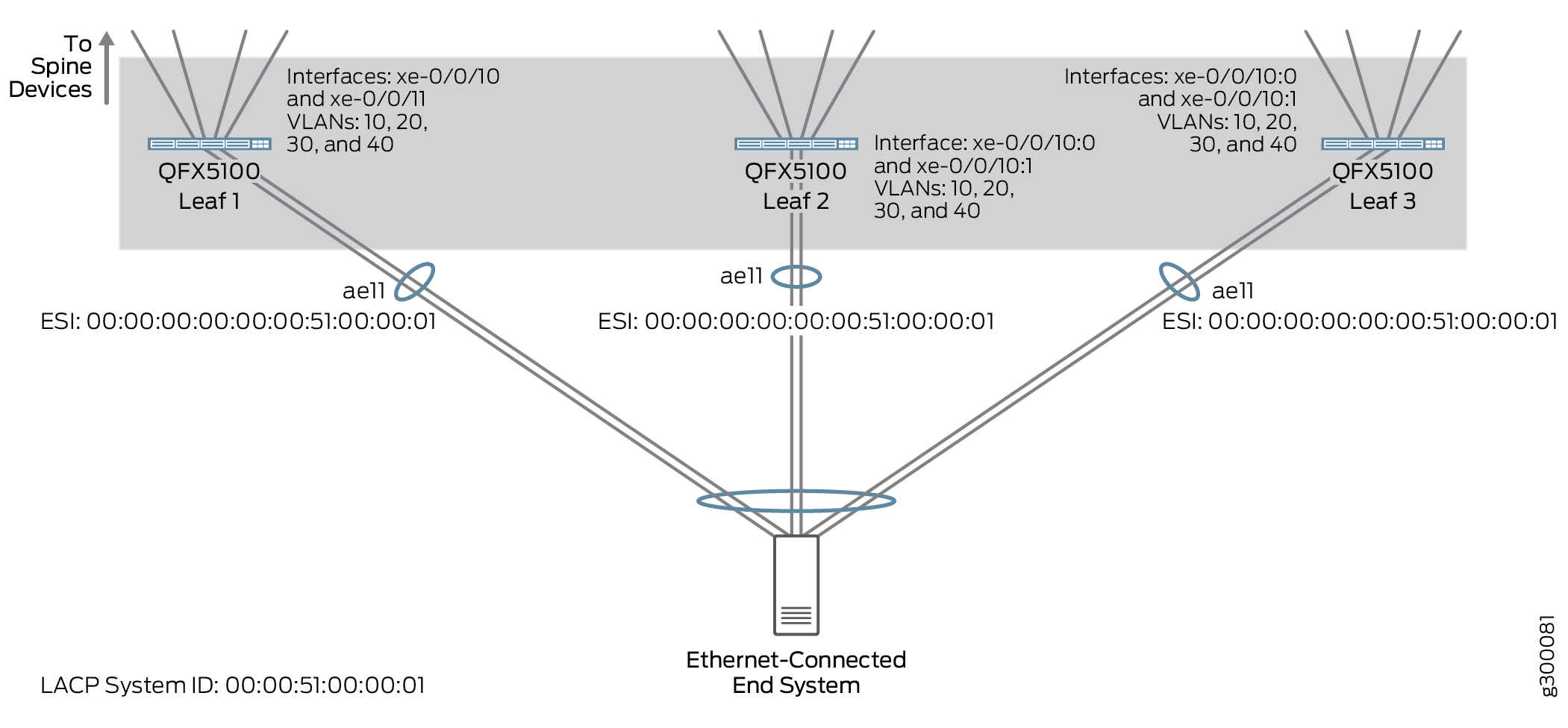

Configure Ethernet Segment Identifier (ESI) settings.

Map VLANs to VNIs.

Again, you do not include IRB interfaces or routing on the leaf devices for this overlay method.
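The leaf steps above can be sketched for the default switching instance as follows. This is an illustrative fragment under stated assumptions, not the configuration from this reference design: the loopback address, route distinguisher, route target, ESI value, interface name, and VLAN and VNI numbers are hypothetical.

```
# Step 1: EVPN with VXLAN encapsulation, loopback as VTEP source
set protocols evpn encapsulation vxlan
set protocols evpn extended-vni-list all
set switch-options vtep-source-interface lo0.0
# Step 2: route distinguisher and route targets (placeholders)
set switch-options route-distinguisher 192.168.1.10:1
set switch-options vrf-target target:64512:1111
set switch-options vrf-target auto
# Step 3: ESI on a multihomed aggregated Ethernet interface (placeholder)
set interfaces ae11 esi 00:00:00:00:00:01:00:00:00:01
set interfaces ae11 esi all-active
# Step 4: map a VLAN to a VNI (placeholder values)
set vlans VNI_1000 vlan-id 100
set vlans VNI_1000 vxlan vni 1000
```

No `irb` interfaces or `l3-interface` statements appear, because a bridged overlay does not route between VLANs on the leaf devices.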

The following sections provide the detailed steps of how to configure and verify the bridged overlay:

- Configuring a Bridged Overlay on the Spine Device

- Verifying a Bridged Overlay on the Spine Device

- Configuring a Bridged Overlay on the Leaf Device

- Verifying the Bridged Overlay on the Leaf Device

Configuring a Bridged Overlay on the Spine Device

To configure a bridged overlay on a spine device, perform the following steps:

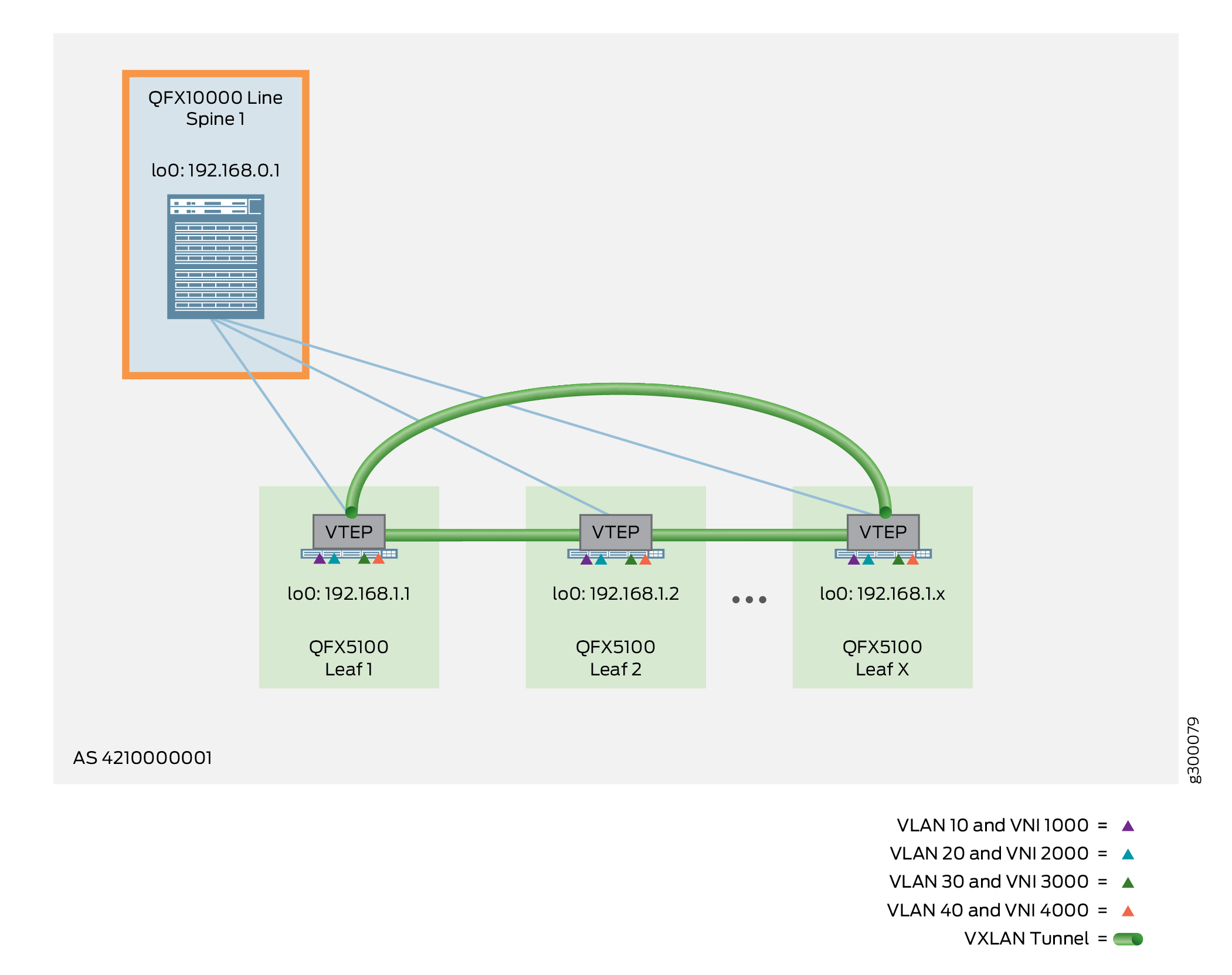

The following example shows the configuration for Spine 1, as shown in Figure 2.

Verifying a Bridged Overlay on the Spine Device

Issue the following commands to verify that the overlay is working properly on your spine devices:

Configuring a Bridged Overlay on the Leaf Device

To configure a bridged overlay on a leaf device, perform the following:

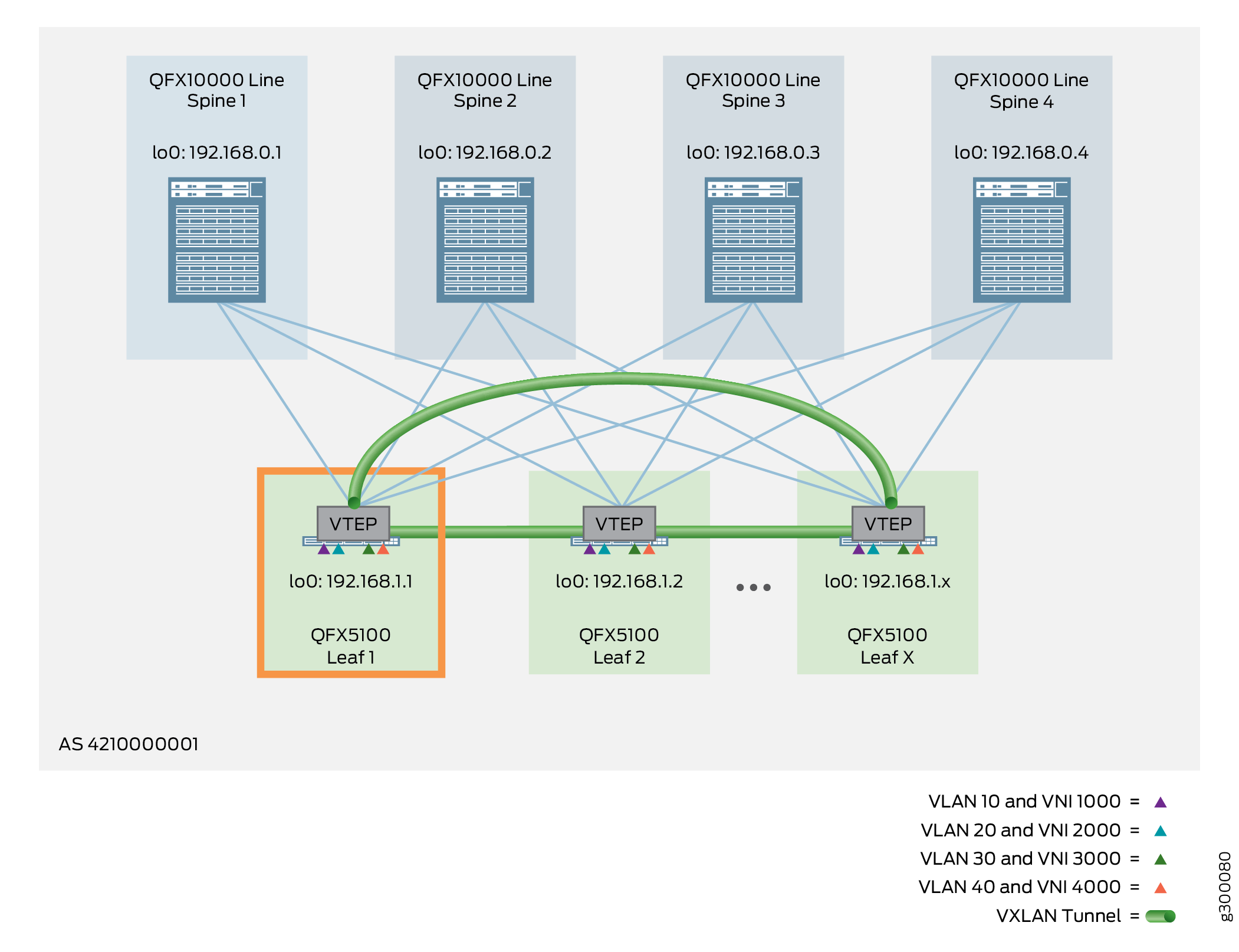

The following example shows the configuration for Leaf 1, as shown in Figure 3.

Verifying the Bridged Overlay on the Leaf Device

Run the following commands to verify that the overlay is working properly on your leaf devices.

The commands here show output for a default instance configuration. With a MAC-VRF instance configuration, you can alternatively use:

- show mac-vrf forwarding commands, which are aliases for the show ethernet-switching commands in this section.

- The show mac-vrf routing instance command, which is an alias for the show evpn instance command in this section.

See MAC-VRF Routing Instance Type Overview for tables of show mac-vrf forwarding and show ethernet-switching command mappings, and show mac-vrf routing command aliases for show evpn commands.
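As a hedged illustration of these aliases, the pairs below place a default-instance command next to the MAC-VRF form we would expect to correspond to it. The selection of commands is ours, so treat it as a sketch and confirm each pairing against the mapping tables in the overview.

```
# MAC table: default instance, then MAC-VRF alias
show ethernet-switching table
show mac-vrf forwarding mac-table

# Remote VTEPs: default instance, then MAC-VRF alias
show ethernet-switching vxlan-tunnel-end-point remote
show mac-vrf forwarding vxlan-tunnel-end-point remote

# EVPN instance details: default instance, then MAC-VRF alias
show evpn instance
show mac-vrf routing instance
```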

The output with a MAC-VRF instance configuration displays similar information for MAC-VRF routing instances as this section shows for the default instance. One main difference you might see is in the output with MAC-VRF instances on devices where you enable the shared tunnels feature. With shared tunnels enabled, you see VTEP interfaces in the following format:

vtep-index.shared-tunnel-unit

where:

index is the index associated with the MAC-VRF routing instance.

shared-tunnel-unit is the unit number associated with the shared tunnel remote VTEP logical interface.

For example, if a device has a MAC-VRF instance with index 26 and the instance connects to two remote VTEPs, the shared tunnel VTEP logical interfaces might look like this:

vtep-26.32823 vtep-26.32824

If your configuration uses an IPv6 Fabric, you provide IPv6 address parameters where applicable. Output from the commands that display IP addresses reflects the IPv6 device and interface addresses from the underlying fabric. See IPv6 Fabric Underlay and Overlay Network Design and Implementation with EBGP for the fabric parameters reflected in command outputs in this section with an IPv6 Fabric.

Bridged Overlay — Release History

Table 1 provides a history of all of the features in this section and their support within this reference design.

| Release | Description |
|---|---|
| 19.1R2 | QFX10002-60C and QFX5120-32C switches running Junos OS Release 19.1R2 and later releases in the same release train support all features documented in this section. |
| 18.4R2 | QFX5120-48Y switches running Junos OS Release 18.4R2 and later releases in the same release train support all features documented in this section. |
| 18.1R3-S3 | QFX5110 switches running Junos OS Release 18.1R3-S3 and later releases in the same release train support all features documented in this section. |
| 17.3R3-S2 | All devices in the reference design that support Junos OS Release 17.3R3-S2 and later releases in the same release train also support all features documented in this section. |