ON THIS PAGE

Configuring the Delay Before LDP Neighbors Are Considered Down

Configuring the Prefixes Advertised into LDP from the Routing Table

Configuring a Failure Action for the BFD Session on an LDP LSP

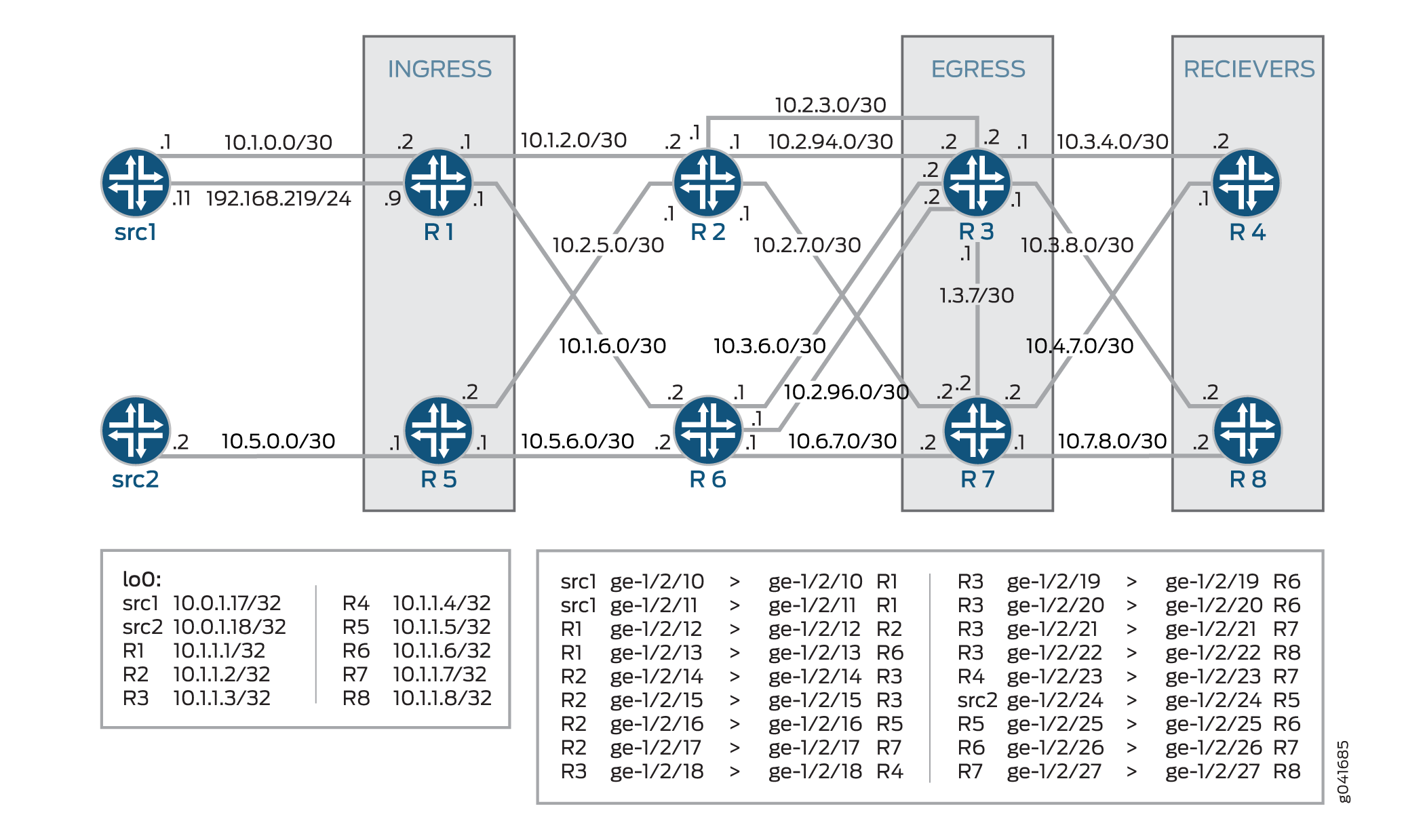

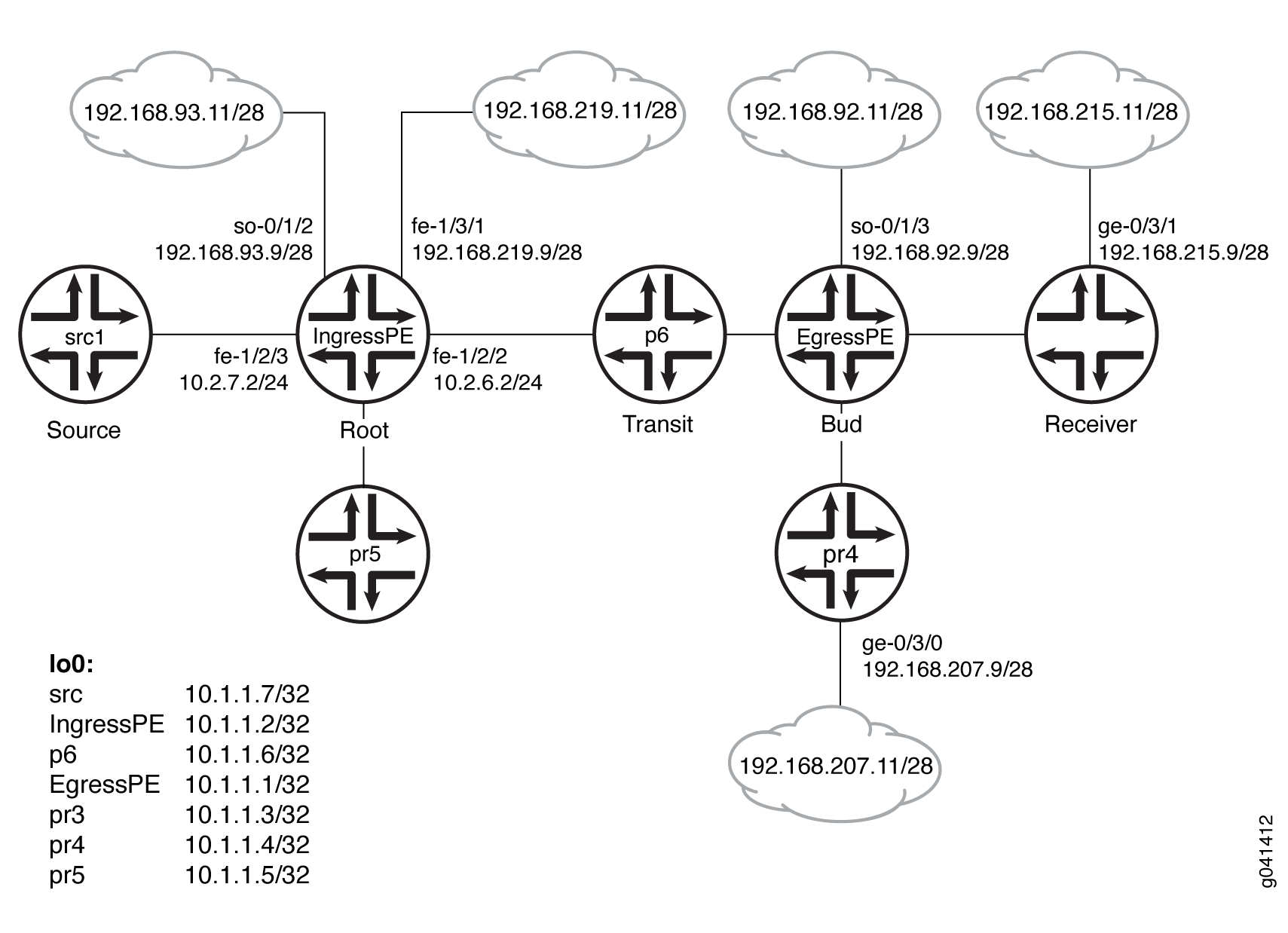

Example: Configuring Multicast-Only Fast Reroute in a Multipoint LDP Domain

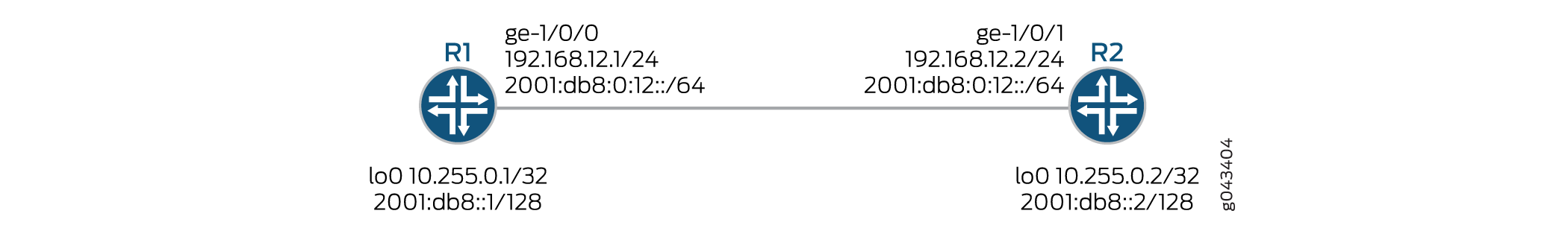

Example: Configuring Multipoint LDP In-Band Signaling for Point-to-Multipoint LSPs

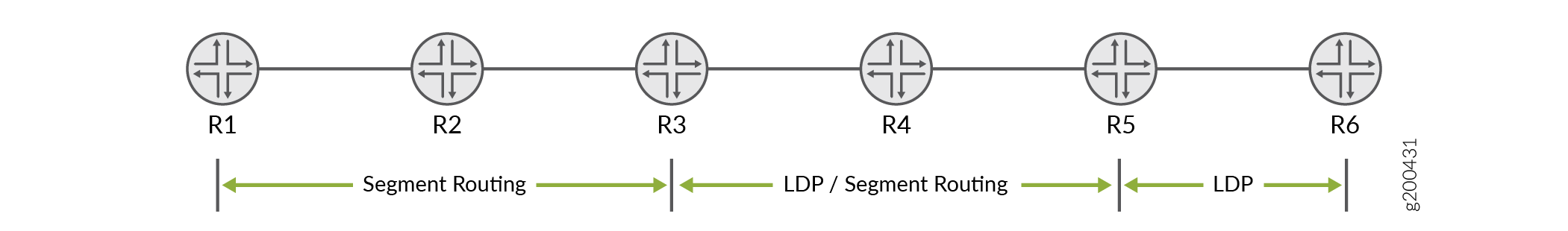

Mapping Client and Server for Segment Routing to LDP Interoperability

LDP Configuration

Minimum LDP Configuration

To enable LDP with minimal configuration:

Enable all relevant interfaces under family mpls. For directed LDP, the loopback interface must also be enabled with family mpls.

(Optional) Configure the relevant interfaces under the [edit protocols mpls] hierarchy level.

To enable LDP on a single interface, include the ldp statement and specify the interface using the interface statement.

This is the minimum LDP configuration. All other LDP configuration statements are optional.

ldp { interface interface-name; }

To enable LDP on all interfaces, specify all for interface-name.

For a list of hierarchy levels at which you can include these statements, see the statement summary sections.

Enabling and Disabling LDP

LDP is routing-instance-aware. To enable LDP on a specific interface, include the following statements:

ldp { interface interface-name; }

For a list of hierarchy levels at which you can include these statements, see the statement summary sections.

To enable LDP on all interfaces, specify all for interface-name.

If you have configured interface properties on a group of interfaces and want to disable LDP on one of the interfaces, include the interface statement with the disable option:

interface interface-name { disable; }

For a list of hierarchy levels at which you can include this statement, see the statement summary section.
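The following Python sketch shows how the two forms above map to Junos set commands. The first form enables LDP on named interfaces. The second enables LDP on all interfaces and excludes one interface with the disable option. The helper name ldp_interface_commands and the interface names are hypothetical. The sketch only builds command strings and does not connect to a device.

```python
from typing import Iterable, List


def ldp_interface_commands(interfaces: Iterable[str],
                           disabled: Iterable[str] = ()) -> List[str]:
    """Build `set protocols ldp interface ...` commands (hypothetical helper).

    interfaces: logical interface names such as "ge-0/0/2.0", or ["all"].
    disabled:   interfaces on which LDP is turned off with the disable option.
    """
    commands = [f"set protocols ldp interface {name}" for name in interfaces]
    # The disable option excludes one interface from a group such as "all".
    commands += [f"set protocols ldp interface {name} disable" for name in disabled]
    return commands


# Enable LDP everywhere except ge-0/0/1.0 (interface names are hypothetical).
for command in ldp_interface_commands(["all"], disabled=["ge-0/0/1.0"]):
    print(command)
```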

Configuring the LDP Timer for Hello Messages

LDP hello messages enable LDP nodes to discover one another and to detect the failure of a neighbor or the link to the neighbor. Hello messages are sent periodically on all interfaces where LDP is enabled.

There are two types of LDP hello messages:

Link hello messages—Sent through the LDP interface as UDP packets addressed to the LDP discovery port. Receipt of an LDP link hello message on an interface identifies an adjacency with the LDP peer router.

Targeted hello messages—Sent as UDP packets addressed to the LDP discovery port at a specific address. Targeted hello messages are used to support LDP sessions between routers that are not directly connected. A targeted router determines whether to respond or ignore a targeted hello message. A targeted router that chooses to respond does so by periodically sending targeted hello messages back to the initiating router.

By default, LDP sends hello messages every 5 seconds for link hello messages and every 15 seconds for targeted hello messages. You can configure the LDP timer to alter how often both types of hello messages are sent. However, you cannot configure an LDP hello interval that is greater than the LDP hold time. For more information, see Configuring the Delay Before LDP Neighbors Are Considered Down.

- Configuring the LDP Timer for Link Hello Messages

- Configuring the LDP Timer for Targeted Hello Messages

Configuring the LDP Timer for Link Hello Messages

To modify how often LDP sends link hello messages, specify a new link hello message interval for the LDP timer using the hello-interval statement:

hello-interval seconds;

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.

Configuring the LDP Timer for Targeted Hello Messages

To modify how often LDP sends targeted hello messages, specify a new targeted hello message interval for the LDP timer by configuring the hello-interval statement as an option for the targeted-hello statement:

targeted-hello { hello-interval seconds; }

For a list of hierarchy levels at which you can include these statements, see the statement summary sections for these statements.

Configuring the Delay Before LDP Neighbors Are Considered Down

The hold time determines how long an LDP node should wait for a hello message before declaring a neighbor to be down. This value is sent as part of a hello message so that each LDP node tells its neighbors how long to wait. The values sent by each neighbor do not have to match.

The hold time should normally be at least three times the hello interval. The default is 15 seconds for link hello messages and 45 seconds for targeted hello messages. However, it is possible to configure an LDP hold time that is close to the value for the hello interval.

Configuring an LDP hold time close to the hello interval (less than three times the hello interval) might allow LDP neighbor failures to be detected more quickly. However, it also increases the chance that the router declares an LDP neighbor down while that neighbor is still functioning normally. For more information, see Configuring the LDP Timer for Hello Messages.
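The hello interval and hold time rules stated so far can be checked with a small Python sketch. It treats a hello interval greater than the hold time as an error. It flags a hold time below three times the hello interval as a warning, because such a value is allowed but risky. The class and function names are hypothetical, and the sketch only checks numbers. It does not read a Junos configuration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class HelloTimers:
    hello_interval: int  # seconds between hello messages
    hold_time: int       # seconds to wait for a hello before declaring the neighbor down


# Defaults stated in this section.
LINK_DEFAULTS = HelloTimers(hello_interval=5, hold_time=15)
TARGETED_DEFAULTS = HelloTimers(hello_interval=15, hold_time=45)


def check_hello_timers(timers: HelloTimers) -> List[str]:
    """Return the problems found in a hello interval/hold time pair (empty if none)."""
    if timers.hello_interval > timers.hold_time:
        # Not allowed: the hello interval cannot exceed the hold time.
        return ["error: hello-interval is greater than hold-time"]
    if timers.hold_time < 3 * timers.hello_interval:
        # Allowed, but a healthy neighbor is more likely to be declared down.
        return ["warning: hold-time is less than 3x hello-interval"]
    return []


for name, timers in [("link default", LINK_DEFAULTS),
                     ("targeted default", TARGETED_DEFAULTS),
                     ("aggressive link", HelloTimers(5, 10))]:
    print(name, check_hello_timers(timers) or "ok")
```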

The LDP hold time is also negotiated automatically between LDP peers. When two LDP peers advertise different LDP hold times to one another, the smaller value is used. If an LDP peer router advertises a shorter hold time than the value you have configured, the peer router’s advertised hold time is used. This negotiation can affect the LDP keepalive interval as well.

If the local LDP hold time is not shortened during LDP peer negotiation, the user-configured keepalive interval is left unchanged. However, if the local hold time is reduced during peer negotiation, the keepalive interval is recalculated. If the LDP hold time has been reduced during peer negotiation, the keepalive interval is reduced to one-third of the new hold time value. For example, if the new hold-time value is 45 seconds, the keepalive interval is set to 15 seconds.

This automated keepalive interval calculation can cause different keepalive intervals to be configured on each peer router. This enables the routers to be flexible in how often they send keepalive messages, because the LDP peer negotiation ensures they are sent more frequently than the LDP hold time.
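The negotiation rules described above can be modeled in a few lines of Python. This is a sketch of the documented behavior, not the Junos implementation. The function name is hypothetical. Two details are assumptions of the sketch: one-third of the hold time is rounded down to whole seconds, and the result is clamped to the 1-second minimum keepalive interval.

```python
from typing import Tuple

MIN_KEEPALIVE = 1  # seconds; the minimum keepalive interval


def negotiate_hold_time(local_hold: int, peer_hold: int,
                        configured_keepalive: int) -> Tuple[int, int]:
    """Return (negotiated hold time, effective keepalive interval) in seconds."""
    negotiated = min(local_hold, peer_hold)  # the smaller advertised value is used
    if negotiated < local_hold:
        # Local hold time was shortened: keepalive becomes one-third of the new value.
        # Rounding down and clamping to MIN_KEEPALIVE are assumptions of this sketch.
        return negotiated, max(MIN_KEEPALIVE, negotiated // 3)
    # Local hold time unchanged: the configured keepalive interval is kept.
    return negotiated, configured_keepalive


# The example from the text: the hold time is reduced to 45 s, so keepalive becomes 15 s.
print(negotiate_hold_time(local_hold=90, peer_hold=45, configured_keepalive=30))
```

Because each router applies this rule to its own configured values, two peers can end up with different keepalive intervals, as the paragraph above notes.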

When you reconfigure the hold-time interval, changes do not take effect until after the session is reset. The hold time is negotiated when the LDP peering session is initiated and cannot be renegotiated as long as the session is up (required by RFC 5036, LDP Specification). To manually force the LDP session to reset, issue the clear ldp session command.

- Configuring the LDP Hold Time for Link Hello Messages

- Configuring the LDP Hold Time for Targeted Hello Messages

Configuring the LDP Hold Time for Link Hello Messages

To modify how long an LDP node should wait for a link hello message before declaring the neighbor down, specify a new time in seconds using the hold-time statement:

hold-time seconds;

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.

Configuring the LDP Hold Time for Targeted Hello Messages

To modify how long an LDP node should wait for a targeted hello message before declaring the neighbor down, specify a new time in seconds using the hold-time statement as an option for the targeted-hello statement:

targeted-hello { hold-time seconds; }

For a list of hierarchy levels at which you can include these statements, see the statement summary sections for these statements.

Enabling Strict Targeted Hello Messages for LDP

Use strict targeted hello messages to prevent LDP sessions from being established with remote neighbors that have not been specifically configured. If you configure the strict-targeted-hellos statement, an LDP peer does not respond to targeted hello messages coming from a source that is not one of its configured remote neighbors.

Configured remote neighbors can include:

Endpoints of RSVP tunnels for which LDP tunneling is configured

Layer 2 circuit neighbors

If an unconfigured neighbor sends a hello message, the LDP peer ignores the message and logs an error (with the error trace flag) indicating the source. For example, if the LDP peer receives a targeted hello from the IP address 10.0.0.1 and no neighbor with this address is specifically configured, the following message is printed to the LDP log file:

LDP: Ignoring targeted hello from 10.0.0.1

To enable strict targeted hello messages, include the strict-targeted-hellos statement:

strict-targeted-hellos;

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.
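The acceptance rule for strict targeted hellos can be sketched in Python as a simple membership test. The configured remote neighbors are the RSVP tunnel endpoints used for LDP tunneling plus the Layer 2 circuit neighbors. The function names and the neighbor addresses 10.255.111.1 and 10.255.111.2 are hypothetical. The log text mirrors the message shown above. Without strict-targeted-hellos, this filter simply does not apply.

```python
import logging
from typing import Iterable, Set

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("ldp")


def configured_remote_neighbors(rsvp_tunnel_endpoints: Iterable[str],
                                l2circuit_neighbors: Iterable[str]) -> Set[str]:
    """Collect the addresses that count as configured remote neighbors."""
    return set(rsvp_tunnel_endpoints) | set(l2circuit_neighbors)


def passes_strict_check(source: str, neighbors: Set[str], strict: bool) -> bool:
    """Return True if a targeted hello from `source` passes the strict check."""
    if not strict:
        # Without strict-targeted-hellos this filter does not apply; the router
        # still decides on its own whether to respond.
        return True
    if source in neighbors:
        return True
    log.info("LDP: Ignoring targeted hello from %s", source)
    return False


neighbors = configured_remote_neighbors(["10.255.111.1"], ["10.255.111.2"])
passes_strict_check("10.0.0.1", neighbors, strict=True)  # logs the ignore message
```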

Configuring the Interval for LDP Keepalive Messages

The keepalive interval determines how often a message is sent over the session to ensure that the keepalive timeout is not exceeded. If no other LDP traffic is sent over the session in this much time, a keepalive message is sent. The default is 10 seconds. The minimum value is 1 second.

The value configured for the keepalive interval can be altered during LDP session negotiation if the value configured for the LDP hold time on the peer router is lower than the value configured locally. For more information, see Configuring the Delay Before LDP Neighbors Are Considered Down.

To modify the keepalive interval, include the keepalive-interval statement:

keepalive-interval seconds;

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.

Configuring the LDP Keepalive Timeout

After an LDP session is established, messages must be exchanged periodically to ensure that the session is still working. The keepalive timeout defines the amount of time that the neighbor LDP node waits before deciding that the session has failed. This value is usually set to at least three times the keepalive interval. The default is 30 seconds.

To modify the keepalive timeout, include the keepalive-timeout statement:

keepalive-timeout seconds;

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.

The value configured for the keepalive-timeout statement is displayed as the hold time when you issue the show ldp session detail command.
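The interaction between the keepalive interval and the keepalive timeout can be sketched in Python. In this model, every LDP message received on the session restarts the timeout, not only keepalive messages. The session is declared failed once the timeout expires with nothing received. The function name is hypothetical. Treating a gap exactly equal to the timeout as still up is an assumption of the sketch.

```python
from typing import Iterable, Optional

DEFAULT_KEEPALIVE_INTERVAL = 10  # seconds
DEFAULT_KEEPALIVE_TIMEOUT = 30   # seconds


def session_failure_time(rx_times: Iterable[float], timeout: float,
                         now: float) -> Optional[float]:
    """Return when the session would be declared failed, or None if still up at `now`.

    rx_times: arrival times (seconds after the session came up at t=0) of any
    LDP message, keepalive or otherwise. Each arrival restarts the timeout.
    """
    last = 0.0
    for t in sorted(rx_times):
        if t > now:
            break
        if t - last > timeout:
            return last + timeout  # the timeout expired before this message arrived
        last = t
    return last + timeout if now - last > timeout else None


# Keepalives every 10 s until t=30, then silence: the session fails at t=60.
print(session_failure_time([10, 20, 30], DEFAULT_KEEPALIVE_TIMEOUT, now=100))
```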

Configuring Longest Match for LDP

To allow LDP to learn routes that are aggregated or summarized across OSPF areas or IS-IS levels in an interdomain network, Junos OS lets you configure longest match for LDP, based on RFC 5283.

Before you configure longest match for LDP, you must do the following:

Configure the device interfaces.

Configure the MPLS protocol.

Configure the OSPF protocol.

To configure longest match for LDP, include the longest-match statement at the [edit protocols ldp] hierarchy level:

longest-match;

Example: Configuring Longest Match for LDP

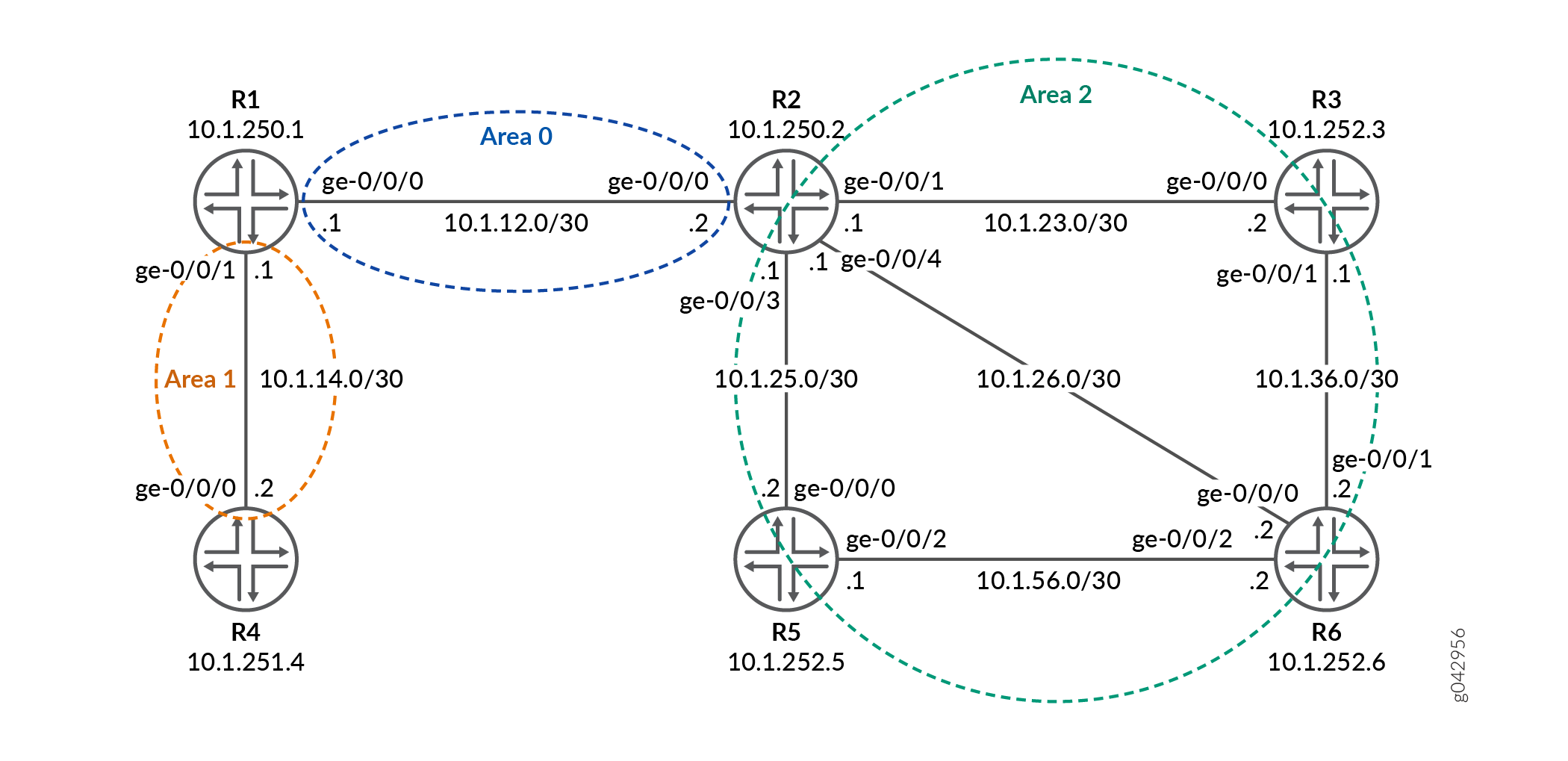

This example shows how to configure longest match for LDP based on RFC 5283. Longest match allows LDP to learn routes that are aggregated or summarized across OSPF areas or IS-IS levels in an interdomain network. The longest match policy provides per-prefix granularity.

Requirements

This example uses the following hardware and software components:

Six MX Series routers with OSPF and LDP enabled on the connected interfaces.

Junos OS Release 16.1 or later running on all devices.

Before you begin:

Configure the device interfaces.

Configure OSPF.

Overview

LDP is often used to establish MPLS label-switched paths (LSPs) throughout a complete network domain using an IGP such as OSPF or IS-IS. In such a network, all links in the domain have IGP adjacencies as well as LDP adjacencies. LDP establishes the LSPs on the shortest path to a destination as determined by IP forwarding. In Junos OS, the LDP implementation does an exact-match lookup on the IP address of the FEC in the RIB or IGP routes for label mapping. This exact mapping requires the end-to-end MPLS LDP endpoint IP addresses to be configured in all the LERs, which defeats the purpose of hierarchical IP design or default routing in access devices. Configuring longest-match helps to overcome this by suppressing the exact-match behavior and setting up LSPs based on the longest matching route on a per-prefix basis.
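The difference between the two lookups can be illustrated with a simplified Python model. This is not the Junos implementation, and the function names are hypothetical. The prefixes come from Device R0's inet.0 table as shown in this example's verification output. That table contains the summary 10.255.111.0/24 but no /32 route for Device R3's loopback address, 10.255.111.1.

```python
import ipaddress
from typing import Iterable, Optional


def exact_match(fec: str, routes: Iterable[str]) -> Optional[str]:
    """Exact-match behavior: a label mapping needs a route identical to the FEC."""
    target = ipaddress.ip_network(fec)
    for route in routes:
        if ipaddress.ip_network(route) == target:
            return route
    return None


def longest_match(fec: str, routes: Iterable[str]) -> Optional[str]:
    """Longest-match behavior: use the most specific route that covers the FEC."""
    target = ipaddress.ip_network(fec)
    best = None
    for route in routes:
        net = ipaddress.ip_network(route)
        if net.version == target.version and target.subnet_of(net):
            if best is None or net.prefixlen > best.prefixlen:
                best = net
    return str(best) if best is not None else None


# Prefixes from Device R0's inet.0 in this example (summary plus /32 routes).
R0_ROUTES = ["10.255.111.0/24", "10.255.111.4/32", "10.255.112.1/32", "10.255.112.2/32"]

print(exact_match("10.255.111.1/32", R0_ROUTES))    # None: no exact route for R3's loopback
print(longest_match("10.255.111.1/32", R0_ROUTES))  # 10.255.111.0/24: the summary covers it
```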

Topology

In the topology shown in Figure 1, longest match for LDP is configured on Device R0.

Configuration

CLI Quick Configuration

To quickly configure this example, copy the following commands, paste them into a text file, remove any line breaks, change any details necessary to match your network configuration, copy and paste the commands into the CLI at the [edit] hierarchy level, and then enter commit from configuration mode.

R0

set interfaces ge-0/0/0 unit 0 family inet address 22.22.22.1/24
set interfaces ge-0/0/1 unit 0 family inet address 15.15.15.1/24
set interfaces ge-0/0/2 unit 0 family inet address 11.11.11.1/24
set interfaces ge-0/0/2 unit 0 family iso
set interfaces ge-0/0/2 unit 0 family mpls
set interfaces lo0 unit 0 family inet address 10.255.112.1/32 primary
set interfaces lo0 unit 0 family inet address 10.255.112.1/32 preferred
set interfaces lo0 unit 0 family iso address 49.0002.0192.0168.0001.00
set routing-options router-id 10.255.112.1
set protocols mpls interface ge-0/0/2.0
set protocols ospf area 0.0.0.1 interface ge-0/0/2.0
set protocols ospf area 0.0.0.1 interface lo0.0 passive
set protocols ldp longest-match
set protocols ldp interface ge-0/0/2.0
set protocols ldp interface lo0.0

R1

set interfaces ge-0/0/0 unit 0 family inet address 11.11.11.2/24
set interfaces ge-0/0/0 unit 0 family iso
set interfaces ge-0/0/0 unit 0 family mpls
set interfaces ge-0/0/1 unit 0 family inet address 12.12.12.1/24
set interfaces ge-0/0/1 unit 0 family iso
set interfaces ge-0/0/1 unit 0 family mpls
set interfaces lo0 unit 0 family inet address 10.255.112.2/32 primary
set interfaces lo0 unit 0 family inet address 10.255.112.2/32 preferred
set interfaces lo0 unit 0 family iso address 49.0002.0192.0168.0002.00
set routing-options router-id 10.255.112.2
set protocols mpls interface ge-0/0/0.0
set protocols mpls interface ge-0/0/1.0
set protocols ospf area 0.0.0.0 interface ge-0/0/1.0
set protocols ospf area 0.0.0.0 interface lo0.0 passive
set protocols ospf area 0.0.0.1 interface ge-0/0/0.0
set protocols ldp longest-match
set protocols ldp interface ge-0/0/0.0
set protocols ldp interface ge-0/0/1.0
set protocols ldp interface lo0.0

R2

set interfaces ge-0/0/0 unit 0 family inet address 24.24.24.1/24
set interfaces ge-0/0/0 unit 0 family iso
set interfaces ge-0/0/0 unit 0 family mpls
set interfaces ge-0/0/1 unit 0 family inet address 12.12.12.2/24
set interfaces ge-0/0/1 unit 0 family iso
set interfaces ge-0/0/1 unit 0 family mpls
set interfaces ge-0/0/2 unit 0 family inet address 23.23.23.1/24
set interfaces ge-0/0/2 unit 0 family iso
set interfaces ge-0/0/2 unit 0 family mpls
set interfaces ge-0/0/3 unit 0 family inet address 22.22.22.2/24
set interfaces ge-0/0/4 unit 0 family inet address 25.25.25.1/24
set interfaces ge-0/0/4 unit 0 family iso
set interfaces ge-0/0/4 unit 0 family mpls
set interfaces lo0 unit 0 family inet address 10.255.111.4/32 primary
set interfaces lo0 unit 0 family inet address 10.255.111.4/32 preferred
set interfaces lo0 unit 0 family iso address 49.0003.0192.0168.0003.00
set routing-options router-id 10.255.111.4
set protocols mpls interface ge-0/0/1.0
set protocols mpls interface ge-0/0/2.0
set protocols mpls interface ge-0/0/0.0
set protocols mpls interface ge-0/0/4.0
set protocols ospf area 0.0.0.0 interface ge-0/0/1.0
set protocols ospf area 0.0.0.0 interface lo0.0 passive
set protocols ospf area 0.0.0.2 area-range 10.255.111.0/24
set protocols ospf area 0.0.0.2 interface ge-0/0/2.0
set protocols ospf area 0.0.0.2 interface ge-0/0/0.0
set protocols ospf area 0.0.0.2 interface ge-0/0/4.0
set protocols ldp interface ge-0/0/0.0
set protocols ldp interface ge-0/0/1.0
set protocols ldp interface ge-0/0/2.0
set protocols ldp interface ge-0/0/4.0
set protocols ldp interface lo0.0

R3

set interfaces ge-0/0/0 unit 0 family inet address 35.35.35.1/24
set interfaces ge-0/0/0 unit 0 family iso
set interfaces ge-0/0/0 unit 0 family mpls
set interfaces ge-0/0/1 unit 0 family inet address 23.23.23.2/24
set interfaces ge-0/0/1 unit 0 family iso
set interfaces ge-0/0/1 unit 0 family mpls
set interfaces ge-0/0/2 unit 0 family inet address 34.34.34.1/24
set interfaces ge-0/0/2 unit 0 family iso
set interfaces ge-0/0/2 unit 0 family mpls
set interfaces lo0 unit 0 family inet address 10.255.111.1/32 primary
set interfaces lo0 unit 0 family inet address 10.255.111.1/32 preferred
set interfaces lo0 unit 0 family iso address 49.0003.0192.0168.0004.00
set routing-options router-id 10.255.111.1
set protocols mpls interface ge-0/0/1.0
set protocols ospf area 0.0.0.2 interface ge-0/0/1.0
set protocols ospf area 0.0.0.2 interface fxp0.0 disable
set protocols ospf area 0.0.0.2 interface lo0.0 passive
set protocols ldp interface ge-0/0/1.0
set protocols ldp interface lo0.0

R4

set interfaces ge-0/0/0 unit 0 family inet address 45.45.45.1/24
set interfaces ge-0/0/0 unit 0 family iso
set interfaces ge-0/0/0 unit 0 family mpls
set interfaces ge-0/0/1 unit 0 family inet address 24.24.24.2/24
set interfaces ge-0/0/1 unit 0 family iso
set interfaces ge-0/0/1 unit 0 family mpls
set interfaces ge-0/0/2 unit 0 family inet address 34.34.34.2/24
set interfaces ge-0/0/2 unit 0 family iso
set interfaces ge-0/0/2 unit 0 family mpls
set interfaces lo0 unit 0 family inet address 10.255.111.2/32 primary
set interfaces lo0 unit 0 family inet address 10.255.111.2/32 preferred
set interfaces lo0 unit 0 family iso address 49.0003.0192.0168.0005.00
set routing-options router-id 10.255.111.2
set protocols mpls interface ge-0/0/1.0
set protocols ospf area 0.0.0.2 interface ge-0/0/1.0
set protocols ospf area 0.0.0.2 interface fxp0.0 disable
set protocols ospf area 0.0.0.2 interface lo0.0 passive
set protocols ldp interface ge-0/0/1.0
set protocols ldp interface lo0.0

R5

set interfaces ge-0/0/0 unit 0 family inet address 25.25.25.2/24
set interfaces ge-0/0/0 unit 0 family iso
set interfaces ge-0/0/0 unit 0 family mpls
set interfaces ge-0/0/1 unit 0 family inet address 15.15.15.2/24
set interfaces ge-0/0/2 unit 0 family inet address 35.35.35.2/24
set interfaces ge-0/0/3 unit 0 family inet address 45.45.45.2/24
set interfaces ge-0/0/3 unit 0 family iso
set interfaces ge-0/0/3 unit 0 family mpls
set interfaces lo0 unit 0 family inet address 10.255.111.3/32 primary
set interfaces lo0 unit 0 family inet address 10.255.111.3/32 preferred
set interfaces lo0 unit 0 family iso address 49.0003.0192.0168.0006.00
set routing-options router-id 10.255.111.3
set protocols mpls interface ge-0/0/0.0
set protocols ospf area 0.0.0.2 interface ge-0/0/0.0
set protocols ospf area 0.0.0.2 interface fxp0.0 disable
set protocols ospf area 0.0.0.2 interface lo0.0 passive
set protocols ldp interface ge-0/0/0.0
set protocols ldp interface lo0.0

Configuring Device R0

Step-by-Step Procedure

The following example requires that you navigate various levels in the configuration hierarchy. For information about navigating the CLI, see Using the CLI Editor in Configuration Mode in the CLI User Guide.

To configure Device R0:

Configure the interfaces.

[edit interfaces]
set ge-0/0/0 unit 0 family inet address 22.22.22.1/24
set ge-0/0/1 unit 0 family inet address 15.15.15.1/24
set ge-0/0/2 unit 0 family inet address 11.11.11.1/24
set ge-0/0/2 unit 0 family iso
set ge-0/0/2 unit 0 family mpls

Assign the loopback addresses to the device.

[edit interfaces lo0 unit 0 family]
set inet address 10.255.112.1/32 primary
set inet address 10.255.112.1/32 preferred
set iso address 49.0002.0192.0168.0001.00

Configure the router ID.

[edit routing-options]
set router-id 10.255.112.1

Configure the MPLS protocol on the interface.

[edit protocols mpls]
set interface ge-0/0/2.0

Configure the OSPF protocol on the interface.

[edit protocols ospf]
set area 0.0.0.1 interface ge-0/0/2.0
set area 0.0.0.1 interface lo0.0 passive

Configure longest match for the LDP protocol.

[edit protocols ldp]
set longest-match

Configure the LDP protocol on the interface.

[edit protocols ldp]
set interface ge-0/0/2.0
set interface lo0.0

Results

From configuration mode, confirm your configuration by entering the show interfaces, show protocols, and show routing-options commands. If the output does not display the intended configuration, repeat the instructions in this example to correct the configuration.

user@R0# show interfaces
ge-0/0/0 {
    unit 0 {
        family inet {
            address 22.22.22.1/24;
        }
    }
}
ge-0/0/1 {
    unit 0 {
        family inet {
            address 15.15.15.1/24;
        }
    }
}
ge-0/0/2 {
    unit 0 {
        family inet {
            address 11.11.11.1/24;
        }
        family iso;
        family mpls;
    }
}
lo0 {
    unit 0 {
        family inet {
            address 10.255.112.1/32 {
                primary;
                preferred;
            }
        }
        family iso {
            address 49.0002.0192.0168.0001.00;
        }
    }
}

user@R0# show protocols
mpls {
    interface ge-0/0/2.0;
}
ospf {
    area 0.0.0.1 {
        interface ge-0/0/2.0;
        interface lo0.0 {
            passive;
        }
    }
}
ldp {
    longest-match;
    interface ge-0/0/2.0;
    interface lo0.0;
}

user@R0# show routing-options
router-id 10.255.112.1;

If you are done configuring the device, enter commit from configuration mode.

Verification

Confirm that the configuration is working properly.

- Verifying the Routes

- Verifying LDP Overview Information

- Verify the LDP Entries in the Internal Topology Table

- Verify Only FEC Information of LDP Route

- Verify FEC and Shadow Routes of LDP

Verifying the Routes

Purpose

Verify that the expected routes are learned.

Action

On Device R0, from operational mode, run the show route command to display the routes in the routing table.

user@R0> show route

inet.0: 62 destinations, 62 routes (62 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

10.4.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.5.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.6.128.0/17 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.9.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.10.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.13.4.0/23 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.13.10.0/23 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.82.0.0/15 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.84.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.85.12.0/22 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.92.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.92.16.0/20 *[Direct/0] 10:08:01

> via fxp0.0

10.92.20.175/32 *[Local/0] 10:08:01

Local via fxp0.0

10.94.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.99.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.102.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.150.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.155.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.157.64.0/19 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.160.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.204.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.205.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.206.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.207.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.209.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.212.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.213.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.214.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.215.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.216.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.218.13.0/24 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.218.14.0/24 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.218.16.0/20 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.218.32.0/20 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.227.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

10.255.111.0/24 *[OSPF/10] 09:52:14, metric 3

> to 11.11.11.2 via ge-0/0/2.0

10.255.111.4/32 *[OSPF/10] 09:54:10, metric 2

> to 11.11.11.2 via ge-0/0/2.0

10.255.112.1/32 *[Direct/0] 09:55:05

> via lo0.0

10.255.112.2/32 *[OSPF/10] 09:54:18, metric 1

> to 11.11.11.2 via ge-0/0/2.0

11.11.11.0/24 *[Direct/0] 09:55:05

> via ge-0/0/2.0

11.11.11.1/32 *[Local/0] 09:55:05

Local via ge-0/0/2.0

12.12.12.0/24 *[OSPF/10] 09:54:18, metric 2

> to 11.11.11.2 via ge-0/0/2.0

15.15.15.0/24 *[Direct/0] 09:55:05

> via ge-0/0/1.0

15.15.15.1/32 *[Local/0] 09:55:05

Local via ge-0/0/1.0

22.22.22.0/24 *[Direct/0] 09:55:05

> via ge-0/0/0.0

22.22.22.1/32 *[Local/0] 09:55:05

Local via ge-0/0/0.0

23.23.23.0/24 *[OSPF/10] 09:54:10, metric 3

> to 11.11.11.2 via ge-0/0/2.0

24.24.24.0/24 *[OSPF/10] 09:54:10, metric 3

> to 11.11.11.2 via ge-0/0/2.0

25.25.25.0/24 *[OSPF/10] 09:54:10, metric 3

> to 11.11.11.2 via ge-0/0/2.0

128.92.17.45/32 *[OSPF/10] 09:54:05, metric 3

> to 11.11.11.2 via ge-0/0/2.0

128.92.20.175/32 *[Direct/0] 10:08:01

> via lo0.0

128.92.21.186/32 *[OSPF/10] 09:54:10, metric 3

> to 11.11.11.2 via ge-0/0/2.0

128.92.25.135/32 *[OSPF/10] 09:54:10, metric 3

> to 11.11.11.2 via ge-0/0/2.0

128.92.27.91/32 *[OSPF/10] 09:54:18, metric 1

> to 11.11.11.2 via ge-0/0/2.0

128.92.28.70/32 *[OSPF/10] 09:54:10, metric 2

> to 11.11.11.2 via ge-0/0/2.0

172.16.0.0/12 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

192.168.0.0/16 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

192.168.102.0/23 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

207.17.136.0/24 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

207.17.136.192/32 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

207.17.137.0/24 *[Static/5] 10:08:01

> to 10.92.31.254 via fxp0.0

224.0.0.5/32 *[OSPF/10] 09:55:05, metric 1

MultiRecv

inet.3: 5 destinations, 5 routes (5 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

10.255.111.1/32 *[LDP/9] 09:41:03, metric 3

> to 11.11.11.2 via ge-0/0/2.0, Push 300128

10.255.111.2/32 *[LDP/9] 09:41:03, metric 3

> to 11.11.11.2 via ge-0/0/2.0, Push 300144

10.255.111.3/32 *[LDP/9] 09:41:03, metric 3

> to 11.11.11.2 via ge-0/0/2.0, Push 300160

10.255.111.4/32 *[LDP/9] 09:54:10, metric 2, tag 0

> to 11.11.11.2 via ge-0/0/2.0, Push 300000

10.255.112.2/32 *[LDP/9] 09:54:48, metric 1, tag 0

> to 11.11.11.2 via ge-0/0/2.0

iso.0: 2 destinations, 2 routes (2 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

47.0005.80ff.f800.0000.0108.0001.1280.9202.0175/152

*[Direct/0] 10:08:01

> via lo0.0

49.0002.0192.0168.0001/72

*[Direct/0] 09:55:05

> via lo0.0

mpls.0: 10 destinations, 10 routes (10 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

0 *[MPLS/0] 09:55:05, metric 1

Receive

1 *[MPLS/0] 09:55:05, metric 1

Receive

2 *[MPLS/0] 09:55:05, metric 1

Receive

13 *[MPLS/0] 09:55:05, metric 1

Receive

300064 *[LDP/9] 09:54:48, metric 1

> to 11.11.11.2 via ge-0/0/2.0, Pop

300064(S=0) *[LDP/9] 09:54:48, metric 1

> to 11.11.11.2 via ge-0/0/2.0, Pop

300112 *[LDP/9] 09:54:10, metric 2, tag 0

> to 11.11.11.2 via ge-0/0/2.0, Swap 300000

300192 *[LDP/9] 09:41:03, metric 3

> to 11.11.11.2 via ge-0/0/2.0, Swap 300128

300208 *[LDP/9] 09:41:03, metric 3

> to 11.11.11.2 via ge-0/0/2.0, Swap 300144

300224 *[LDP/9] 09:41:03, metric 3

> to 11.11.11.2 via ge-0/0/2.0, Swap 300160

inet6.0: 2 destinations, 2 routes (2 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

abcd::128:92:20:175/128

*[Direct/0] 10:08:01

> via lo0.0

fe80::5668:a50f:fcc1:1f9c/128

*[Direct/0] 10:08:01

> via lo0.0

Meaning

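The output shows all the routes in the routing table of Device R0. The inet.0 table has no /32 routes for 10.255.111.1, 10.255.111.2, and 10.255.111.3 (the loopback addresses of Devices R3, R4, and R5), only the summarized route 10.255.111.0/24. The inet.3 table nevertheless contains LDP routes for all three /32 addresses, because with longest match enabled, LDP resolves these FECs through the 10.255.111.0/24 route.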

Verifying LDP Overview Information

Purpose

Display LDP overview information.

Action

On Device R0, from operational mode, run the show ldp overview command to display an overview of LDP.

user@R0> show ldp overview

Instance: master

Reference count: 2

Router ID: 10.255.112.1

Message id: 8

Configuration sequence: 6

Deaggregate: disabled

Explicit null: disabled

IPv6 tunneling: disabled

Strict targeted hellos: disabled

Loopback if added: yes

Route preference: 9

Unicast transit LSP chaining: disabled

P2MP transit LSP chaining: disabled

Transit LSP statistics based on route statistics: disabled

LDP route acknowledgement: enabled

LDP mtu discovery: disabled

Longest Match: enabled

Capabilities enabled: none

Egress FEC capabilities enabled: entropy-label-capability

Downstream unsolicited Sessions:

Operational: 1

Retention: liberal

Control: ordered

Auto targeted sessions:

Auto targeted: disabled

Timers:

Keepalive interval: 10, Keepalive timeout: 30

Link hello interval: 5, Link hello hold time: 15

Targeted hello interval: 15, Targeted hello hold time: 45

Label withdraw delay: 60, Make before break timeout: 30

Make before break switchover delay: 3

Link protection timeout: 120

Graceful restart:

Restart: disabled, Helper: enabled, Restart in process: false

Reconnect time: 60000, Max neighbor reconnect time: 120000

Recovery time: 160000, Max neighbor recovery time: 240000

Traffic Engineering:

Bgp igp: disabled

Both ribs: disabled

Mpls forwarding: disabled

IGP:

Tracking igp metric: disabled

Sync session up delay: 10

Session protection:

Session protection: disabled

Session protection timeout: 0

Interface addresses advertising:

11.11.11.1

10.255.112.1

128.92.20.175

Label allocation:

Current number of LDP labels allocated: 5

Total number of LDP labels allocated: 11

Total number of LDP labels freed: 6

Total number of LDP label allocation failure: 0

Current number of labels allocated by all protocols: 5

Meaning

The output displays the LDP overview information for Device R0, including Longest Match: enabled.

Verify the LDP Entries in the Internal Topology Table

Purpose

Display the route entries in the Label Distribution Protocol (LDP) internal topology table.

Action

On Device R0, from operational mode, run the show ldp route command to display the internal topology table of LDP.

user@R0> show ldp route

Destination Next-hop intf/lsp/table Next-hop address

10.4.0.0/16 fxp0.0 10.92.31.254

10.5.0.0/16 fxp0.0 10.92.31.254

10.6.128.0/17 fxp0.0 10.92.31.254

10.9.0.0/16 fxp0.0 10.92.31.254

10.10.0.0/16 fxp0.0 10.92.31.254

10.13.4.0/23 fxp0.0 10.92.31.254

10.13.10.0/23 fxp0.0 10.92.31.254

10.82.0.0/15 fxp0.0 10.92.31.254

10.84.0.0/16 fxp0.0 10.92.31.254

10.85.12.0/22 fxp0.0 10.92.31.254

10.92.0.0/16 fxp0.0 10.92.31.254

10.92.16.0/20 fxp0.0

10.92.20.175/32

10.94.0.0/16 fxp0.0 10.92.31.254

10.99.0.0/16 fxp0.0 10.92.31.254

10.102.0.0/16 fxp0.0 10.92.31.254

10.150.0.0/16 fxp0.0 10.92.31.254

10.155.0.0/16 fxp0.0 10.92.31.254

10.157.64.0/19 fxp0.0 10.92.31.254

10.160.0.0/16 fxp0.0 10.92.31.254

10.204.0.0/16 fxp0.0 10.92.31.254

10.205.0.0/16 fxp0.0 10.92.31.254

10.206.0.0/16 fxp0.0 10.92.31.254

10.207.0.0/16 fxp0.0 10.92.31.254

10.209.0.0/16 fxp0.0 10.92.31.254

10.212.0.0/16 fxp0.0 10.92.31.254

10.213.0.0/16 fxp0.0 10.92.31.254

10.214.0.0/16 fxp0.0 10.92.31.254

10.215.0.0/16 fxp0.0 10.92.31.254

10.216.0.0/16 fxp0.0 10.92.31.254

10.218.13.0/24 fxp0.0 10.92.31.254

10.218.14.0/24 fxp0.0 10.92.31.254

10.218.16.0/20 fxp0.0 10.92.31.254

10.218.32.0/20 fxp0.0 10.92.31.254

10.227.0.0/16 fxp0.0 10.92.31.254

10.255.111.0/24 ge-0/0/2.0 11.11.11.2

10.255.111.4/32 ge-0/0/2.0 11.11.11.2

10.255.112.1/32 lo0.0

10.255.112.2/32 ge-0/0/2.0 11.11.11.2

11.11.11.0/24 ge-0/0/2.0

11.11.11.1/32

12.12.12.0/24 ge-0/0/2.0 11.11.11.2

15.15.15.0/24 ge-0/0/1.0

15.15.15.1/32

22.22.22.0/24 ge-0/0/0.0

22.22.22.1/32

23.23.23.0/24 ge-0/0/2.0 11.11.11.2

24.24.24.0/24 ge-0/0/2.0 11.11.11.2

25.25.25.0/24 ge-0/0/2.0 11.11.11.2

128.92.17.45/32 ge-0/0/2.0 11.11.11.2

128.92.20.175/32 lo0.0

128.92.21.186/32 ge-0/0/2.0 11.11.11.2

128.92.25.135/32 ge-0/0/2.0 11.11.11.2

128.92.27.91/32 ge-0/0/2.0 11.11.11.2

128.92.28.70/32 ge-0/0/2.0 11.11.11.2

172.16.0.0/12 fxp0.0 10.92.31.254

192.168.0.0/16 fxp0.0 10.92.31.254

192.168.102.0/23 fxp0.0 10.92.31.254

207.17.136.0/24 fxp0.0 10.92.31.254

207.17.136.192/32 fxp0.0 10.92.31.254

207.17.137.0/24 fxp0.0 10.92.31.254

224.0.0.5/32

Meaning

The output displays the route entries in the Label Distribution Protocol (LDP) internal topology table of Device R0.

Verify Only FEC Information of LDP Route

Purpose

Display only the FEC information of LDP routes.

Action

On Device R0, from operational mode, run the show ldp route fec-only command to display only the LDP FEC routes.

user@R0> show ldp route fec-only

Destination Next-hop intf/lsp/table Next-hop address

10.255.111.1/32 ge-0/0/2.0 11.11.11.2

10.255.111.2/32 ge-0/0/2.0 11.11.11.2

10.255.111.3/32 ge-0/0/2.0 11.11.11.2

10.255.111.4/32 ge-0/0/2.0 11.11.11.2

10.255.112.1/32 lo0.0

10.255.112.2/32 ge-0/0/2.0 11.11.11.2

Meaning

The output displays only the LDP FEC routes available on Device R0.

Verify FEC and Shadow Routes of LDP

Purpose

Display the FEC and the shadow routes in the routing table.

Action

On Device R0, from operational mode, run the show ldp route fec-and-route command to display the FEC and shadow routes in the routing table.

user@R0> show ldp route fec-and-route

Destination Next-hop intf/lsp/table Next-hop address

10.4.0.0/16 fxp0.0 10.92.31.254

10.5.0.0/16 fxp0.0 10.92.31.254

10.6.128.0/17 fxp0.0 10.92.31.254

10.9.0.0/16 fxp0.0 10.92.31.254

10.10.0.0/16 fxp0.0 10.92.31.254

10.13.4.0/23 fxp0.0 10.92.31.254

10.13.10.0/23 fxp0.0 10.92.31.254

10.82.0.0/15 fxp0.0 10.92.31.254

10.84.0.0/16 fxp0.0 10.92.31.254

10.85.12.0/22 fxp0.0 10.92.31.254

10.92.0.0/16 fxp0.0 10.92.31.254

10.92.16.0/20 fxp0.0

10.92.20.175/32

10.94.0.0/16 fxp0.0 10.92.31.254

10.99.0.0/16 fxp0.0 10.92.31.254

10.102.0.0/16 fxp0.0 10.92.31.254

10.150.0.0/16 fxp0.0 10.92.31.254

10.155.0.0/16 fxp0.0 10.92.31.254

10.157.64.0/19 fxp0.0 10.92.31.254

10.160.0.0/16 fxp0.0 10.92.31.254

10.204.0.0/16 fxp0.0 10.92.31.254

10.205.0.0/16 fxp0.0 10.92.31.254

10.206.0.0/16 fxp0.0 10.92.31.254

10.207.0.0/16 fxp0.0 10.92.31.254

10.209.0.0/16 fxp0.0 10.92.31.254

10.212.0.0/16 fxp0.0 10.92.31.254

10.213.0.0/16 fxp0.0 10.92.31.254

10.214.0.0/16 fxp0.0 10.92.31.254

10.215.0.0/16 fxp0.0 10.92.31.254

10.216.0.0/16 fxp0.0 10.92.31.254

10.218.13.0/24 fxp0.0 10.92.31.254

10.218.14.0/24 fxp0.0 10.92.31.254

10.218.16.0/20 fxp0.0 10.92.31.254

10.218.32.0/20 fxp0.0 10.92.31.254

10.227.0.0/16 fxp0.0 10.92.31.254

10.255.111.0/24 ge-0/0/2.0 11.11.11.2

10.255.111.1/32 ge-0/0/2.0 11.11.11.2

10.255.111.2/32 ge-0/0/2.0 11.11.11.2

10.255.111.3/32 ge-0/0/2.0 11.11.11.2

10.255.111.4/32 ge-0/0/2.0 11.11.11.2

10.255.111.4/32 ge-0/0/2.0 11.11.11.2

10.255.112.1/32 lo0.0

10.255.112.1/32 lo0.0

10.255.112.2/32 ge-0/0/2.0 11.11.11.2

10.255.112.2/32 ge-0/0/2.0 11.11.11.2

11.11.11.0/24 ge-0/0/2.0

11.11.11.1/32

12.12.12.0/24 ge-0/0/2.0 11.11.11.2

15.15.15.0/24 ge-0/0/1.0

15.15.15.1/32

22.22.22.0/24 ge-0/0/0.0

22.22.22.1/32

23.23.23.0/24 ge-0/0/2.0 11.11.11.2

24.24.24.0/24 ge-0/0/2.0 11.11.11.2

25.25.25.0/24 ge-0/0/2.0 11.11.11.2

128.92.17.45/32 ge-0/0/2.0 11.11.11.2

128.92.20.175/32 lo0.0

128.92.21.186/32 ge-0/0/2.0 11.11.11.2

128.92.25.135/32 ge-0/0/2.0 11.11.11.2

128.92.27.91/32 ge-0/0/2.0 11.11.11.2

128.92.28.70/32 ge-0/0/2.0 11.11.11.2

172.16.0.0/12 fxp0.0 10.92.31.254

192.168.0.0/16 fxp0.0 10.92.31.254

192.168.102.0/23 fxp0.0 10.92.31.254

207.17.136.0/24 fxp0.0 10.92.31.254

207.17.136.192/32 fxp0.0 10.92.31.254

207.17.137.0/24 fxp0.0 10.92.31.254

224.0.0.5/32

Meaning

The output displays the FEC and the shadow routes of Device R0.
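The outputs above can be cross-checked against each other. The following Python sketch, provided only as an illustration, collects the Destination column of two of the outputs and compares the sets. It assumes the whitespace-separated layout shown above; the sample strings are excerpts copied from the Device R0 outputs, and the function name `destinations` is hypothetical. On this data, the prefixes found in both outputs are exactly the ones listed twice in the fec-and-route output.

```python
from __future__ import annotations

# Excerpt of "show ldp route fec-only" on Device R0 (copied from above).
FEC_ONLY = """\
Destination Next-hop intf/lsp/table Next-hop address
10.255.111.1/32 ge-0/0/2.0 11.11.11.2
10.255.111.2/32 ge-0/0/2.0 11.11.11.2
10.255.111.3/32 ge-0/0/2.0 11.11.11.2
10.255.111.4/32 ge-0/0/2.0 11.11.11.2
10.255.112.1/32 lo0.0
10.255.112.2/32 ge-0/0/2.0 11.11.11.2
"""

# Excerpt of "show ldp route" on Device R0 (only the 10.255.0.0/16 entries).
ROUTES = """\
Destination Next-hop intf/lsp/table Next-hop address
10.255.111.0/24 ge-0/0/2.0 11.11.11.2
10.255.111.4/32 ge-0/0/2.0 11.11.11.2
10.255.112.1/32 lo0.0
10.255.112.2/32 ge-0/0/2.0 11.11.11.2
"""


def destinations(output: str) -> set[str]:
    """Collect the Destination column; data rows start with a prefix containing '/'."""
    result = set()
    for line in output.splitlines():
        fields = line.split()
        if fields and "/" in fields[0]:
            result.add(fields[0])
    return result


fec = destinations(FEC_ONLY)
routes = destinations(ROUTES)
fec_without_route = sorted(fec - routes)  # FECs with no entry in the route output
fec_in_both = sorted(fec & routes)        # listed twice in fec-and-route output
print(fec_without_route)  # ['10.255.111.1/32', '10.255.111.2/32', '10.255.111.3/32']
print(fec_in_both)        # ['10.255.111.4/32', '10.255.112.1/32', '10.255.112.2/32']
```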

Configuring LDP Route Preferences

When several protocols calculate routes to the same destination, route preferences are used to select which route is installed in the forwarding table. The route with the lowest preference value is selected. The preference value can be a number in the range 0 through 255. By default, LDP routes have a preference value of 9.

To modify the route preferences, include the preference statement:

preference preference;

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.
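The selection rule can be sketched in Python as follows. This is an illustration, not the Junos implementation: the `CandidateRoute` type and `select_active` function are hypothetical, only the LDP default of 9 and the 0 through 255 range come from the text above, the OSPF and BGP values are illustrative, and tie-breaking between equal preferences is not modeled.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateRoute:
    protocol: str
    preference: int  # 0 through 255 per the text above; lower value wins
    next_hop: str


def select_active(routes: list[CandidateRoute]) -> CandidateRoute:
    """Return the route with the lowest preference value (no tie-breaking modeled)."""
    for route in routes:
        if not 0 <= route.preference <= 255:
            raise ValueError(f"preference out of range: {route.preference}")
    return min(routes, key=lambda route: route.preference)


candidates = [
    CandidateRoute("ospf", 10, "11.11.11.2"),    # illustrative value
    CandidateRoute("ldp", 9, "11.11.11.2"),      # LDP default from the text
    CandidateRoute("bgp", 170, "10.92.31.254"),  # illustrative value
]
print(select_active(candidates).protocol)  # ldp
```

Raising the LDP preference above the competing value (for example, with a hypothetical `preference 12;`) would let the other route win in this model.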

LDP Graceful Restart

LDP graceful restart enables a router whose LDP control plane is undergoing a restart to continue to forward traffic while recovering its state from neighboring routers. It also enables a router on which helper mode is enabled to assist a neighboring router that is attempting to restart LDP.

During session initialization, a router advertises its ability to perform LDP graceful restart or to take advantage of a neighbor performing LDP graceful restart by sending the graceful restart TLV. This TLV contains two fields relevant to LDP graceful restart: the reconnect time and the recovery time. The values of the reconnect and recovery times indicate the graceful restart capabilities supported by the router.

When a router discovers that a neighboring router is restarting, it waits until the end of the recovery time before attempting to reconnect. The recovery time is the length of time a router waits for LDP to restart gracefully. The recovery time period begins when an initialization message is sent or received. This time period is also typically the length of time that a neighboring router maintains its information about the restarting router, allowing it to continue to forward traffic.

You can configure LDP graceful restart both in the master instance for the LDP protocol and in a specific routing instance. You can disable graceful restart at the global level for all protocols, at the protocol level for LDP only, and on a specific routing instance. LDP graceful restart is disabled by default because graceful restart is disabled by default at the global level. However, helper mode (the ability to assist a neighboring router attempting a graceful restart) is enabled by default.

The following are some of the behaviors associated with LDP graceful restart:

Outgoing labels are not maintained in restarts. New outgoing labels are allocated.

When a router is restarting, no label-map messages are sent to neighbors that support graceful restart until the restarting router has stabilized (label-map messages are immediately sent to neighbors that do not support graceful restart). However, all other messages (keepalive, address-message, notification, and release) are sent as usual. Withholding label-map messages until the router has stabilized prevents it from distributing incomplete label information.

Helper mode and graceful restart are independent. You can disable graceful restart in the configuration, but still allow the router to cooperate with a neighbor attempting to restart gracefully.

Configuring LDP Graceful Restart

When you alter the graceful restart configuration at either the [edit routing-options graceful-restart] or [edit protocols ldp graceful-restart] hierarchy level, any running LDP session is automatically restarted to apply the graceful restart configuration. This behavior mirrors the behavior of BGP when you alter its graceful restart configuration.

By default, graceful restart helper mode is enabled, but graceful restart is disabled. Thus, the default behavior of a router is to assist neighboring routers attempting a graceful restart, but not to attempt a graceful restart itself.

To configure LDP graceful restart, see the following sections:

- Enabling Graceful Restart

- Disabling LDP Graceful Restart or Helper Mode

- Configuring Reconnect Time

- Configuring Recovery Time and Maximum Recovery Time

Enabling Graceful Restart

To enable LDP graceful restart, you also need to enable graceful restart on the router. To enable graceful restart, include the graceful-restart statement:

graceful-restart;

You can include this statement at the following hierarchy levels:

[edit routing-options]

[edit logical-systems logical-system-name routing-options]

ACX Series routers do not support the [edit logical-systems logical-system-name routing-options] hierarchy level.

The graceful-restart statement enables graceful restart for all protocols supporting this feature on the router. For more information about graceful restart, see the Junos OS Routing Protocols Library for Routing Devices.

After you enable graceful restart globally, LDP graceful restart is enabled by default at the LDP protocol level and in all routing instances. However, you can disable both LDP graceful restart and LDP graceful restart helper mode.

Disabling LDP Graceful Restart or Helper Mode

To disable LDP graceful restart and recovery, include the disable statement:

ldp {

graceful-restart {

disable;

}

}

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.

You can disable helper mode at the LDP protocol level only. You cannot disable helper mode for a specific routing instance. To disable LDP helper mode, include the helper-disable statement:

ldp {

graceful-restart {

helper-disable;

}

}

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.

The following LDP graceful restart configurations are possible:

LDP graceful restart and helper mode are both enabled.

LDP graceful restart is disabled but helper mode is enabled. A router configured in this way cannot restart gracefully but can help a restarting neighbor.

LDP graceful restart and helper mode are both disabled. The router does not use LDP graceful restart or the graceful restart type, length, and value (TLV) sent in the initialization message. The router behaves as a router that cannot support LDP graceful restart.

A configuration error is issued if you attempt to enable graceful restart and disable helper mode.
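The three valid combinations, plus the rejected one, can be summarized as a small Python function. The function name `ldp_gr_behavior` and its messages are hypothetical; the sketch only encodes the rules listed above, and the usage line shows the defaults (graceful restart disabled, helper mode enabled).

```python
def ldp_gr_behavior(graceful_restart: bool, helper_mode: bool) -> str:
    """Summarize the LDP graceful restart role for one configuration."""
    if graceful_restart and not helper_mode:
        # Per the text above, this combination produces a configuration error.
        raise ValueError("graceful restart cannot be enabled with helper mode disabled")
    if graceful_restart:
        return "restarts gracefully and helps restarting neighbors"
    if helper_mode:
        return "helps restarting neighbors but cannot restart gracefully"
    return "does not support LDP graceful restart"


# Default behavior: graceful restart disabled, helper mode enabled.
print(ldp_gr_behavior(graceful_restart=False, helper_mode=True))
```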

Configuring Reconnect Time

After the LDP connection between neighbors fails, neighbors wait a certain amount of time for the gracefully restarting router to resume sending LDP messages. After the wait period, the LDP session can be reestablished. You can configure the wait period in seconds. This value is included in the fault tolerant session TLV sent in LDP initialization messages when LDP graceful restart is enabled.

Suppose that Router A and Router B are LDP neighbors, and Router A is the restarting router. The reconnect time is the time that Router A tells Router B to wait after Router B detects that Router A has restarted.

To configure the reconnect time, include the reconnect-time statement:

graceful-restart { reconnect-time seconds; }

You can set the reconnect time to a value in the range from 30 through 300 seconds. By default, it is 60 seconds.

For a list of hierarchy levels at which you can configure these statements, see the statement summary sections for these statements.
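As a sketch, the reconnect-time bounds and default from this section can be expressed as a validation helper. The constant and function names are hypothetical and do not correspond to any Junos API, and the sketch does not model how the value is carried in the fault tolerant session TLV.

```python
from __future__ import annotations

# Range and default for reconnect-time, taken from the text above (seconds).
RECONNECT_MIN = 30
RECONNECT_MAX = 300
RECONNECT_DEFAULT = 60


def effective_reconnect_time(configured: int | None = None) -> int:
    """Return the reconnect time a restarting router would advertise.

    None means reconnect-time is not configured, so the default applies.
    """
    if configured is None:
        return RECONNECT_DEFAULT
    if not RECONNECT_MIN <= configured <= RECONNECT_MAX:
        raise ValueError(
            f"reconnect-time must be {RECONNECT_MIN}-{RECONNECT_MAX} seconds, got {configured}"
        )
    return configured


print(effective_reconnect_time())     # 60
print(effective_reconnect_time(120))  # 120
```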

Configuring Recovery Time and Maximum Recovery Time

The recovery time is the amount of time a router waits for LDP to restart gracefully. The recovery time period begins when an initialization message is sent or received. This period is also typically the amount of time that a neighboring router maintains its information about the restarting router, allowing it to continue to forward traffic.

To prevent a neighboring router from being adversely affected if it receives a false value for the recovery time from the restarting router, you can configure the maximum recovery time on the neighboring router. A neighboring router maintains its state for the shorter of the two times. For example, Router A is performing an LDP graceful restart. It has sent a recovery time of 900 seconds to neighboring Router B. However, Router B has its maximum recovery time configured at 400 seconds. Router B will only wait for 400 seconds before it purges its LDP information from Router A.

To configure recovery time, include the recovery-time statement and the maximum-neighbor-recovery-time statement:

graceful-restart { maximum-neighbor-recovery-time seconds; recovery-time seconds; }

For a list of hierarchy levels at which you can configure these statements, see the statement summary sections for these statements.
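The Router A and Router B example above reduces to taking the shorter of two values. A minimal Python sketch, with a hypothetical function name:

```python
def neighbor_state_hold_time(advertised_recovery_time: int,
                             maximum_neighbor_recovery_time: int) -> int:
    """Seconds a helper keeps the restarting router's LDP state.

    The helper honors the shorter of the recovery time advertised by the
    restarting router and its own configured maximum.
    """
    return min(advertised_recovery_time, maximum_neighbor_recovery_time)


# Router A advertises 900 seconds; Router B caps the wait at 400 seconds.
print(neighbor_state_hold_time(900, 400))  # 400
```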

Filtering Inbound LDP Label Bindings

You can filter received LDP label bindings, applying policies to accept or deny bindings advertised by neighboring routers. To configure received-label filtering, include the import statement:

import [ policy-names ];

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.

The named policy (configured at the [edit policy-options] hierarchy level) is applied to all label bindings received from all LDP neighbors. All filtering is done with from statements. Table 1 lists the only from operators that apply to LDP received-label filtering.

Table 1: from Operators for LDP Received-Label Filtering

| from Operator | Description |
|---|---|
| interface | Matches on bindings received from a neighbor that is adjacent over the specified interface |
| neighbor | Matches on bindings received from the specified LDP router ID |
| next-hop | Matches on bindings received from a neighbor advertising the specified interface address |
| route-filter | Matches on bindings with the specified prefix |

If a binding is filtered, it still appears in the LDP database, but is not considered for installation as part of a label-switched path (LSP).

In general, LDP policies can be used only to block the establishment of LSPs, not to control their routing. This is because the path that an LSP follows is determined by unicast routing, not by LDP. However, when there are multiple equal-cost paths to the destination through different neighbors, you can use LDP filtering to exclude some of the possible next hops from consideration. (Otherwise, LDP chooses one of the possible next hops at random.)

LDP sessions are not bound to interfaces or interface addresses. LDP advertises only per-router (not per-interface) labels, so if multiple parallel links exist between two routers, only one LDP session is established, and it is not bound to a single interface. When a router has multiple adjacencies to the same neighbor, take care to ensure that the filter does what is expected. (Generally, using next-hop and interface is not appropriate in this case.)

If a label has been filtered (meaning that it has been rejected by the policy and is not used to construct an LSP), it is marked as filtered in the database:

user@host> show ldp database

Input label database, 10.10.255.1:0-10.10.255.6:0

Label Prefix

3 10.10.255.6/32 (Filtered)

Output label database, 10.10.255.1:0-10.10.255.6:0

Label Prefix

3 10.10.255.1/32 (Filtered)

For more information about how to configure policies for LDP, see the Routing Policies, Firewall Filters, and Traffic Policers User Guide.

Examples: Filtering Inbound LDP Label Bindings

Accept only /32 prefixes from all neighbors:

[edit]

protocols {

ldp {

import only-32;

...

}

}

policy-options {

policy-statement only-32 {

term first {

from {

route-filter 0.0.0.0/0 upto /31;

}

then reject;

}

then accept;

}

}

Accept 131.108/16 or longer from router ID 10.10.255.2 and accept all prefixes from all other neighbors:

[edit]

protocols {

ldp {

import nosy-neighbor;

...

}

}

policy-options {

policy-statement nosy-neighbor {

term first {

from {

neighbor 10.10.255.2;

route-filter 131.108.0.0/16 orlonger accept;

route-filter 0.0.0.0/0 orlonger reject;

}

}

then accept;

}

}
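To make the term logic of the two import examples explicit, here is a Python model of each policy. It is a sketch under stated assumptions: the function names are hypothetical, only IPv4 prefixes are handled, and `neighbor` stands for the LDP router ID that sent the binding. A `False` result means the binding is filtered: it would still appear in the LDP database, marked (Filtered), but would not be used to build an LSP.

```python
import ipaddress


def only_32(neighbor: str, prefix: str) -> bool:
    """Model of only-32: 0.0.0.0/0 upto /31 is rejected, so only /32 is accepted."""
    # neighbor is unused; kept so both policies share one signature.
    return ipaddress.ip_network(prefix).prefixlen == 32


def nosy_neighbor(neighbor: str, prefix: str) -> bool:
    """Model of nosy-neighbor."""
    if neighbor == "10.10.255.2":
        # The most specific matching route-filter decides: 131.108.0.0/16
        # orlonger accepts; anything else falls to 0.0.0.0/0 orlonger reject.
        net = ipaddress.ip_network(prefix)
        return net.subnet_of(ipaddress.ip_network("131.108.0.0/16"))
    # Term "first" does not match other neighbors; the final "then accept" applies.
    return True


print(only_32("10.10.255.6", "10.255.111.0/24"))        # False (filtered)
print(nosy_neighbor("10.10.255.2", "131.108.4.0/24"))   # True
print(nosy_neighbor("10.10.255.2", "10.10.255.6/32"))   # False (filtered)
```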

Filtering Outbound LDP Label Bindings

You can configure export policies to filter LDP outbound labels. You can filter outbound label bindings by applying routing policies to block bindings from being advertised to neighboring routers. To configure outbound label filtering, include the export statement:

export [policy-name];

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.

The named export policy (configured at the [edit policy-options] hierarchy level) is applied to all label bindings transmitted to all LDP neighbors. The only from operator that applies to LDP outbound label filtering is route-filter, which matches bindings with the specified prefix. The only to operators that apply to outbound label filtering are the operators in Table 2.

Table 2: to Operators for LDP Outbound-Label Filtering

| to Operator | Description |
|---|---|
| interface | Matches on bindings sent to a neighbor that is adjacent over the specified interface |
| neighbor | Matches on bindings sent to the specified LDP router ID |
| next-hop | Matches on bindings sent to a neighbor advertising the specified interface address |

If a binding is filtered, the binding is not advertised to the neighboring router, but it can be installed as part of an LSP on the local router. You can apply policies in LDP to block the establishment of LSPs, but not to control their routing. The path an LSP follows is determined by unicast routing, not by LDP.

LDP sessions are not bound to interfaces or interface addresses. LDP advertises only per-router (not per-interface) labels. If multiple parallel links exist between two routers, only one LDP session is established, and it is not bound to a single interface.

Do not use the next-hop and interface operators when a router has multiple adjacencies to the same neighbor.

Filtered labels are marked in the database:

user@host> show ldp database

Input label database, 10.10.255.1:0-10.10.255.3:0

Label Prefix

100007 10.10.255.2/32

3 10.10.255.3/32

Output label database, 10.10.255.1:0-10.10.255.3:0

Label Prefix

3 10.10.255.1/32

100001 10.10.255.6/32 (Filtered)
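If you want to pick the filtered bindings out of show ldp database output in a script, a Python sketch along these lines could work. The sample is the output shown above with one entry per line; the exact column spacing and the function name `filtered_bindings` are assumptions.

```python
from __future__ import annotations

import re

DATABASE = """\
Input label database, 10.10.255.1:0-10.10.255.3:0
 Label     Prefix
100007     10.10.255.2/32
     3     10.10.255.3/32
Output label database, 10.10.255.1:0-10.10.255.3:0
 Label     Prefix
     3     10.10.255.1/32
100001     10.10.255.6/32 (Filtered)
"""

# "<label> <prefix>" with an optional "(Filtered)" marker; header rows do not match.
ENTRY = re.compile(r"^\s*(\d+)\s+(\S+)(\s+\(Filtered\))?\s*$")


def filtered_bindings(output: str) -> dict[str, list[tuple[int, str]]]:
    """Return filtered (label, prefix) pairs, grouped by database direction."""
    result: dict[str, list[tuple[int, str]]] = {"input": [], "output": []}
    direction = None
    for line in output.splitlines():
        if line.startswith("Input label database"):
            direction = "input"
        elif line.startswith("Output label database"):
            direction = "output"
        else:
            match = ENTRY.match(line)
            if match and direction and match.group(3):
                result[direction].append((int(match.group(1)), match.group(2)))
    return result


print(filtered_bindings(DATABASE))
# {'input': [], 'output': [(100001, '10.10.255.6/32')]}
```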

For more information about how to configure policies for LDP, see the Routing Policies, Firewall Filters, and Traffic Policers User Guide.

Examples: Filtering Outbound LDP Label Bindings

Block transmission of the route for 10.10.255.6/32 to any neighbors:

[edit protocols]

ldp {

export block-one;

}

[edit]

policy-options {

policy-statement block-one {

term first {

from {

route-filter 10.10.255.6/32 exact;

}

then reject;

}

then accept;

}

}

Send only 131.108/16 or longer to router ID 10.10.255.2, and send all prefixes to all other routers:

[edit protocols]

ldp {

export limit-lsps;

}

[edit]

policy-options {

policy-statement limit-lsps {

term allow-one {

from {

route-filter 131.108.0.0/16 orlonger;

}

to {

neighbor 10.10.255.2;

}

then accept;

}

term block-the-rest {

to {

neighbor 10.10.255.2;

}

then reject;

}

then accept;

}

}
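Similarly, the two export examples can be modeled as functions that decide whether a binding is advertised to a given neighbor. This is a hypothetical Python sketch that handles IPv4 only; a `False` result means the binding is not advertised to that neighbor, although the local router can still install it as part of an LSP.

```python
import ipaddress


def block_one(neighbor: str, prefix: str) -> bool:
    """Model of block-one: advertise everything except 10.10.255.6/32 (exact)."""
    # neighbor is unused; block-one applies to all neighbors.
    return ipaddress.ip_network(prefix) != ipaddress.ip_network("10.10.255.6/32")


def limit_lsps(neighbor: str, prefix: str) -> bool:
    """Model of limit-lsps."""
    if neighbor == "10.10.255.2":
        # Term allow-one accepts 131.108.0.0/16 orlonger for this neighbor;
        # term block-the-rest rejects every other prefix sent to it.
        net = ipaddress.ip_network(prefix)
        return net.subnet_of(ipaddress.ip_network("131.108.0.0/16"))
    # Final "then accept" for all other neighbors.
    return True


print(block_one("10.10.255.3", "10.10.255.6/32"))    # False (not advertised)
print(limit_lsps("10.10.255.2", "131.108.1.0/24"))   # True
print(limit_lsps("10.10.255.2", "10.10.255.1/32"))   # False
```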

Specifying the Transport Address Used by LDP

Routers must first establish a TCP session between each other before they can establish an LDP session. The TCP session enables the routers to exchange the label advertisements needed for the LDP session. To establish the TCP session, each router must learn the other router's transport address. The transport address is an IP address used to identify the TCP session over which the LDP session will run.

To configure the LDP transport address, include the transport-address statement:

transport-address (router-id | interface);

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.

If you specify the router-id option, the address of the router identifier is used as the transport address (unless otherwise configured, the router identifier is typically the same as the loopback address). If you specify the interface option, the interface address is used as the transport address for any LDP sessions to neighbors that can be reached over that interface. Note that the router identifier is used as the transport address by default.

For proper operation, the LDP transport address must be reachable. The router ID is an identifier, not a routable IP address. For this reason, it is recommended that the router ID be set to match the loopback address and that the loopback address be advertised by the IGP.

You cannot specify the interface option when there are multiple parallel links to the same LDP neighbor, because the LDP specification requires that the same transport address be advertised on all interfaces to the same neighbor. If LDP detects multiple parallel links to the same neighbor, it disables interfaces to that neighbor one by one until the condition is cleared, either by disconnecting the neighbor on an interface or by specifying the router-id option.

Control Transport Address Used for Targeted-LDP Session

To establish a TCP session between two devices, each device must learn the other device’s transport address. The transport address is an IP address used to identify the TCP session over which the LDP session operates. Earlier, this transport address could only be the router-ID or an interface address. With the LDP transport-address feature, you can explicitly configure any IP address as the transport address for targeted LDP neighbors for Layer 2 circuit, MPLS, and VPLS adjacencies. This enables you to control the targeted-LDP sessions using transport-address configuration.

- Benefits of Controlling Transport Address Used for Targeted-LDP Session

- Targeted-LDP Transport Address Overview

- Transport Address Preference

- Troubleshooting Transport Address Configuration

Benefits of Controlling Transport Address Used for Targeted-LDP Session

Configuring the transport address for establishing targeted-LDP sessions has the following benefits:

Flexible interface configurations: Provides the flexibility of configuring multiple IP addresses for one loopback interface without interrupting the creation of LDP sessions between the targeted-LDP neighbors.

Ease of operation: Configuring the transport address at the interface level allows you to use more than one protocol in the IGP backbone for LDP. This enables smooth and easy operations.

Targeted-LDP Transport Address Overview

Prior to Junos OS Release 19.1R1, LDP provided support only for the router ID or the interface address as the transport address on any LDP interface. The adjacencies formed on that interface used one of the IP addresses assigned to the interface or the router ID. In the case of a targeted adjacency, the interface is the loopback interface. When multiple loopback addresses were configured on the device, the transport address could not be derived for the interface, and as a result, the LDP session could not be established.

Starting in Junos OS Release 19.1R1, in addition to the default IP addresses used as the transport address of targeted-LDP sessions, you can configure any other IP address as the transport address under the session, session-group, and interface configuration statements. The transport address configuration applies only to configured neighbors, including Layer 2 circuit, MPLS, and VPLS adjacencies. This configuration does not apply to discovered adjacencies (targeted or not).

Transport Address Preference

You can configure transport address for targeted-LDP sessions at the session, session-group, and interface level.

After the transport address is configured, the targeted-LDP session is established based on the transport address preference of LDP.

The order of preference of the transport address for a targeted neighbor (configured through Layer 2 circuit, MPLS, VPLS, and LDP configuration) is as follows:

1. Under the [edit protocols ldp session] hierarchy level.

2. Under the [edit protocols ldp session-group] hierarchy level.

3. Under the [edit protocols ldp interface lo0] hierarchy level.

4. Under the [edit protocols ldp] hierarchy level.

5. Default address.

The order of preference of the transport address for discovered neighbors is as follows:

1. Under the [edit protocols ldp interface] hierarchy level.

2. Under the [edit protocols ldp] hierarchy level.

3. Default address.

The order of preference of the transport address for auto-targeted neighbors, where LDP is configured to accept hello packets, is as follows:

1. Under the [edit protocols ldp interface lo0] hierarchy level.

2. Under the [edit protocols ldp] hierarchy level.

3. Default address.
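The three preference orders can be read as "the first configured level wins; otherwise use the default address." The following Python sketch encodes that reading. The level keys are hypothetical labels that mirror the hierarchy levels listed above, and the 192.0.2.x addresses are documentation placeholders, not values from this example.

```python
from __future__ import annotations

# Preference orders copied from the lists above (first configured level wins).
TARGETED = ["session", "session-group", "interface lo0", "ldp"]
DISCOVERED = ["interface", "ldp"]
AUTO_TARGETED = ["interface lo0", "ldp"]


def pick_transport_address(configured: dict[str, str], order: list[str], default: str) -> str:
    """Return the transport address from the most preferred configured level."""
    for level in order:
        if level in configured:
            return configured[level]
    return default


# Hypothetical configuration: addresses set at session-group and global ldp levels.
config = {"session-group": "192.0.2.10", "ldp": "192.0.2.20"}
print(pick_transport_address(config, TARGETED, default="192.0.2.1"))    # 192.0.2.10
print(pick_transport_address(config, DISCOVERED, default="192.0.2.1"))  # 192.0.2.20
```

In this model, a session-group address never affects discovered neighbors, because that level does not appear in their preference list.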

Troubleshooting Transport Address Configuration

You can use the following show command outputs to troubleshoot targeted-LDP sessions:

- show ldp session

- show ldp neighbor

  The detail level of output of the show ldp neighbor command displays the transport address sent in the hello messages to the targeted neighbor. If this address is not reachable from the neighbor, the LDP session does not come up.

- show configuration protocols ldp

You can also enable LDP traceoptions for further troubleshooting.

If the configuration is changed from an invalid (unreachable) transport address to a valid one, the following traces can be observed:

May 29 10:47:11.569722 Incoming connect from 10.55.1.4

May 29 10:47:11.570064 Connection 10.55.1.4 state Closed -> Open

May 29 10:47:11.570727 Session 10.55.1.4 state Nonexistent -> Initialized

May 29 10:47:11.570768 Session 10.55.1.4 state Initialized -> OpenRec

May 29 10:47:11.570799 LDP: Session param Max PDU length 4096 from 10.55.1.4, negotiated 4096

May 29 10:47:11.570823 Session 10.55.1.4 GR state Nonexistent -> Operational

May 29 10:47:11.669295 Session 10.55.1.4 state OpenRec -> Operational

May 29 10:47:11.669387 RPD_LDP_SESSIONUP: LDP session 10.55.1.4 is up

If the configuration is changed from a valid transport address to an invalid (unreachable) one, the following traces can be observed:

May 29 10:42:36.317942 Session 10.55.1.4 GR state Operational -> Nonexistent

May 29 10:42:36.318171 Session 10.55.1.4 state Operational -> Closing

May 29 10:42:36.318208 LDP session 10.55.1.4 is down, reason: received notification from peer

May 29 10:42:36.318236 RPD_LDP_SESSIONDOWN: LDP session 10.55.1.4 is down, reason: received notification from peer

May 29 10:42:36.320081 Connection 10.55.1.4 state Open -> Closed

May 29 10:42:36.322411 Session 10.55.1.4 state Closing -> Nonexistent
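When you collect traceoptions output like the lines above, extracting the session state changes makes the sequence easier to follow. The following is a Python sketch that assumes one trace entry per line in the format shown. It deliberately ignores the separate GR state lines and the Connection state lines, and the regular expression and function name are hypothetical.

```python
from __future__ import annotations

import re

# Excerpts of the traces above, one entry per line.
UP_TRACE = """\
May 29 10:47:11.570064 Connection 10.55.1.4 state Closed -> Open
May 29 10:47:11.570727 Session 10.55.1.4 state Nonexistent -> Initialized
May 29 10:47:11.570768 Session 10.55.1.4 state Initialized -> OpenRec
May 29 10:47:11.570823 Session 10.55.1.4 GR state Nonexistent -> Operational
May 29 10:47:11.669295 Session 10.55.1.4 state OpenRec -> Operational
"""

DOWN_TRACE = """\
May 29 10:42:36.317942 Session 10.55.1.4 GR state Operational -> Nonexistent
May 29 10:42:36.318171 Session 10.55.1.4 state Operational -> Closing
May 29 10:42:36.320081 Connection 10.55.1.4 state Open -> Closed
May 29 10:42:36.322411 Session 10.55.1.4 state Closing -> Nonexistent
"""

# "Session <peer> state <old> -> <new>"; "GR state" lines do not match.
SESSION_TRANSITION = re.compile(r"Session (\S+) state (\w+) -> (\w+)")


def session_transitions(trace: str) -> list[tuple[str, ...]]:
    """Return (peer, old_state, new_state) tuples in trace order."""
    return [m.groups() for m in SESSION_TRANSITION.finditer(trace)]


for peer, old, new in session_transitions(UP_TRACE):
    print(f"{peer}: {old} -> {new}")
```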

If the configuration is faulty, perform the following troubleshooting tasks:

- Check the address family. The transport address that is configured under the session statement must belong to the same address family as the neighbor or session.

- Check that the address is local to the router. The address that is configured as the transport address under a neighbor or session statement must be local to the router for the targeted hello messages to start. If the address is not configured under any interface, the configuration is rejected.

Configuring the Prefixes Advertised into LDP from the Routing Table

You can control the set of prefixes that are advertised into LDP and cause the router to be the egress router for those prefixes. By default, only the loopback address is advertised into LDP. To configure the set of prefixes from the routing table to be advertised into LDP, include the egress-policy statement:

egress-policy policy-name;

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.

If you configure an egress policy for LDP that does not include the loopback address, it is no longer advertised in LDP. To continue to advertise the loopback address, you need to explicitly configure it as a part of the LDP egress policy.

The named policy (configured at the [edit policy-options] or [edit logical-systems logical-system-name policy-options] hierarchy level) is applied to all routes in the routing table. Those routes that match the policy are advertised into LDP. You can control the set of neighbors to which those prefixes are advertised by using the export statement. Only from operators are considered; you can use any valid from operator. For more information, see the Junos OS Routing Protocols Library for Routing Devices.

ACX Series routers do not support the [edit logical-systems] hierarchy level.

Example: Configuring the Prefixes Advertised into LDP

Advertise all connected routes into LDP:

[edit protocols]

ldp {

egress-policy connected-only;

}

[edit]

policy-options {

policy-statement connected-only {

from {

protocol direct;

}

then accept;

}

}
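The egress-policy behavior, including the loopback caveat described earlier in this section, can be illustrated with a small Python model. The `Route` type, protocol labels, policy functions, and addresses are hypothetical. Treating the loopback address as a direct route is an assumption of the sketch.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Route:
    prefix: str
    protocol: str  # hypothetical labels such as "direct" or "ospf"


def connected_only(route: Route) -> bool:
    """Model of connected-only: from protocol direct; then accept."""
    return route.protocol == "direct"


def ospf_only(route: Route) -> bool:
    """Hypothetical egress policy that does not match the loopback address."""
    return route.protocol == "ospf"


def egress_prefixes(table: list[Route], policy: Callable[[Route], bool]) -> list[str]:
    """Prefixes the router would advertise into LDP (and be egress for)."""
    return [route.prefix for route in table if policy(route)]


routing_table = [
    Route("192.0.2.1/32", "direct"),     # hypothetical loopback address
    Route("198.51.100.0/24", "direct"),  # hypothetical connected subnet
    Route("203.0.113.0/24", "ospf"),     # hypothetical IGP route
]
print(egress_prefixes(routing_table, connected_only))  # ['192.0.2.1/32', '198.51.100.0/24']
print(egress_prefixes(routing_table, ospf_only))       # loopback no longer advertised
```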

Configuring FEC Deaggregation

When an LDP egress router advertises multiple prefixes, the prefixes are bound to a single label and aggregated into a single forwarding equivalence class (FEC). By default, LDP maintains this aggregation as the advertisement traverses the network.

Normally, because an LSP is not split across multiple next hops and the prefixes are bound into a single LSP, load-balancing across equal-cost paths does not occur. You can, however, load-balance across equal-cost paths if you configure a load-balancing policy and deaggregate the FECs.

Deaggregating the FECs causes each prefix to be bound to a separate label and become a separate LSP.

To configure deaggregated FECs, include the deaggregate statement:

deaggregate;

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.

For all LDP sessions, you can configure deaggregated FECs only globally.

Deaggregating a FEC allows the resulting LSPs to be distributed across multiple equal-cost paths: the LSPs are distributed across the multiple next hops on the egress segments, but only one next hop is installed per LSP.
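The difference between aggregated and deaggregated FECs can be sketched as follows. The text does not specify how each LSP's next hop is chosen, so this Python sketch uses round-robin purely for illustration. It does not model the required load-balancing policy, and its prefixes and interface names are hypothetical.

```python
from __future__ import annotations


def place_lsps(prefixes: list[str], next_hops: list[str], deaggregate: bool) -> dict[str, list[str]]:
    """Map each equal-cost next hop to the prefixes whose LSP uses it.

    Aggregated: all prefixes share one FEC and label, so a single LSP uses
    a single next hop. Deaggregated: each prefix is its own LSP, and LSPs
    are spread across next hops (round-robin here), one next hop per LSP.
    """
    placement: dict[str, list[str]] = {nh: [] for nh in next_hops}
    if not deaggregate:
        placement[next_hops[0]].extend(prefixes)
        return placement
    for i, prefix in enumerate(prefixes):
        placement[next_hops[i % len(next_hops)]].append(prefix)
    return placement


prefixes = ["192.0.2.0/26", "192.0.2.64/26", "192.0.2.128/26", "192.0.2.192/26"]
hops = ["ge-0/0/1.0", "ge-0/0/2.0"]
print(place_lsps(prefixes, hops, deaggregate=False))
print(place_lsps(prefixes, hops, deaggregate=True))
```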

To aggregate FECs, include the no-deaggregate statement:

no-deaggregate;

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.

For all LDP sessions, you can configure aggregated FECs only globally.

Configuring Policers for LDP FECs

You can configure the Junos OS to track and police traffic for LDP FECs. LDP FEC policers can be used to do any of the following:

Track or police the ingress traffic for an LDP FEC.

Track or police the transit traffic for an LDP FEC.

Track or police LDP FEC traffic originating from a specific forwarding class.

Track or police LDP FEC traffic originating from a specific virtual routing and forwarding (VRF) site.

Discard false traffic bound for a specific LDP FEC.

To police traffic for an LDP FEC, you must first configure a filter. Specifically, you need to configure either the interface statement or the interface-set statement at the [edit firewall family protocol-family filter filter-name term term-name from] hierarchy level. The interface statement allows you to match the filter to a single interface. The interface-set statement allows you to match the filter to multiple interfaces.

For more information on how to configure the interface statement, the interface-set statement, and policers for LDP FECs, see the Routing Policies, Firewall Filters, and Traffic Policers User Guide.

Once you have configured the filters, you need to include them in the policing statement configuration for LDP. To configure policers for LDP FECs, include the policing statement:

policing { fec fec-address { ingress-traffic filter-name; transit-traffic filter-name; } }

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.

The policing statement includes the following options:

- fec: Specify the FEC address for the LDP FEC you want to police.

- ingress-traffic: Specify the name of the ingress traffic filter.

- transit-traffic: Specify the name of the transit traffic filter.

Configuring LDP IPv4 FEC Filtering

By default, when a targeted LDP session is established, the Junos OS always exchanges both the IPv4 forwarding equivalence classes (FECs) and the Layer 2 circuit FECs over the targeted LDP session. For an LDP session to an indirectly connected neighbor, you might only want to export Layer 2 circuit FECs to the neighbor if the session was specifically configured to support Layer 2 circuits or VPLS.

In a mixed vendor network where all non-BGP prefixes are advertised into LDP, the LDP database can become large. For this type of environment, it can be useful to prevent the advertisement of IPv4 FECs over LDP sessions formed because of Layer 2 circuit or LDP VPLS configuration. Similarly, it can be useful to filter any IPv4 FECs received in this sort of environment.

If all the LDP neighbors associated with an LDP session are Layer 2 only, you can configure Junos OS to advertise only Layer 2 circuit FECs by configuring the l2-smart-policy statement. This feature also automatically filters out the IPv4 FECs received on this session. Configuring an explicit export or import policy that is activated for l2-smart-policy disables this feature in the corresponding direction.

If one of the LDP session’s neighbors is formed because of a discovered adjacency or if the adjacency is formed because of an LDP tunneling configuration on one or more RSVP LSPs, the IPv4 FECs are advertised and received using the default behavior.

To prevent LDP from exporting IPv4 FECs over LDP sessions with Layer 2 neighbors only, and to filter out IPv4 FECs received over such sessions, include the l2-smart-policy statement:

l2-smart-policy;

For a list of hierarchy levels at which you can configure this statement, see the statement summary for this statement.
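As a minimal sketch, assuming l2-smart-policy is configured at the [edit protocols ldp] hierarchy level, the statement is a single flag with no options:

```
protocols {
    ldp {
        /* Advertise only Layer 2 circuit FECs on Layer 2-only sessions
           and filter out IPv4 FECs received on those sessions */
        l2-smart-policy;
    }
}
```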

Configuring BFD for LDP LSPs

You can configure Bidirectional Forwarding Detection (BFD) for LDP LSPs. The BFD protocol is a simple hello mechanism that detects failures in a network. Hello packets are sent at a specified, regular interval. A neighbor failure is detected when the router stops receiving a reply after a specified interval. BFD works with a wide variety of network environments and topologies. The failure detection timers for BFD have shorter time limits than the failure detection mechanisms of static routes, providing faster detection.

An error is logged whenever a BFD session for a path fails. The following shows how BFD for LDP LSP log messages might appear:

RPD_LDP_BFD_UP: LDP BFD session for FEC 10.255.16.14/32 is up
RPD_LDP_BFD_DOWN: LDP BFD session for FEC 10.255.16.14/32 is down

You can also configure BFD for RSVP LSPs, as described in Configuring BFD for RSVP-Signaled LSPs.

The BFD failure detection timers are adaptive and can be adjusted to be more or less aggressive. For example, the timers can adapt to a higher value if the adjacency fails, or a neighbor can negotiate a higher value for a timer than the configured value. The timers adapt to a higher value when a BFD session flap occurs more than three times in a span of 15 seconds. A back-off algorithm increases the receive (Rx) interval by two if the local BFD instance is the reason for the session flap. The transmission (Tx) interval is increased by two if the remote BFD instance is the reason for the session flap. You can use the clear bfd adaptation command to return BFD interval timers to their configured values. The clear bfd adaptation command is hitless, meaning that the command does not affect traffic flow on the routing device.

To enable BFD for LDP LSPs, include the oam and bfd-liveness-detection statements:

oam {
    bfd-liveness-detection {
        detection-time threshold milliseconds;
        ecmp;
        failure-action {
            remove-nexthop;
            remove-route;
        }
        holddown-interval seconds;
        ingress-policy ingress-policy-name;
        minimum-interval milliseconds;
        minimum-receive-interval milliseconds;
        minimum-transmit-interval milliseconds;
        multiplier detection-time-multiplier;
        no-adaptation;
        transmit-interval {
            minimum-interval milliseconds;
            threshold milliseconds;
        }
        version (0 | 1 | automatic);
    }
    fec fec-address {
        bfd-liveness-detection {
            detection-time threshold milliseconds;
            ecmp;
            failure-action {
                remove-nexthop;
                remove-route;
            }
            holddown-interval milliseconds;
            ingress-policy ingress-policy-name;
            minimum-interval milliseconds;
            minimum-receive-interval milliseconds;
            minimum-transmit-interval milliseconds;
            multiplier detection-time-multiplier;
            no-adaptation;
            transmit-interval {
                minimum-interval milliseconds;
                threshold milliseconds;
            }
            version (0 | 1 | automatic);
        }
        no-bfd-liveness-detection;
        periodic-traceroute {
            disable;
            exp exp-value;
            fanout fanout-value;
            frequency minutes;
            paths number-of-paths;
            retries retry-attempts;
            source address;
            ttl ttl-value;
            wait seconds;
        }
    }
    lsp-ping-interval seconds;
    periodic-traceroute {
        disable;
        exp exp-value;
        fanout fanout-value;
        frequency minutes;
        paths number-of-paths;
        retries retry-attempts;
        source address;
        ttl ttl-value;
        wait seconds;
    }
}

You can enable BFD for the LDP LSPs associated with a specific forwarding equivalence class (FEC) by configuring the FEC address with the fec option at the [edit protocols ldp oam] hierarchy level. Alternatively, you can configure an Operations, Administration, and Maintenance (OAM) ingress policy to enable BFD on a range of FEC addresses. For more information, see Configuring OAM Ingress Policies for LDP.

You cannot enable BFD for LDP LSPs unless their FEC addresses are explicitly configured or OAM is enabled on the FECs through an OAM ingress policy. If BFD is not enabled for any FEC address, the BFD session does not come up.

You can configure the oam statement at the following hierarchy levels:

[edit protocols ldp]

[edit logical-systems logical-system-name protocols ldp]

ACX Series routers do not support the [edit logical-systems] hierarchy level.

The oam statement includes the following options:

fec: Specify the FEC address. You must either specify a FEC address or configure an OAM ingress policy to ensure that the BFD session comes up.

lsp-ping-interval: Specify the duration of the LSP ping interval in seconds. To issue a ping on an LDP-signaled LSP, use the ping mpls ldp command. For more information, see the CLI Explorer.

The bfd-liveness-detection statement includes the following options:

ecmp: Cause LDP to establish BFD sessions for all ECMP paths configured for the specified FEC. If you configure the ecmp option, you must also configure the periodic-traceroute statement, either for the specified FEC or globally; otherwise, the commit operation fails. You can configure the periodic-traceroute statement at the global hierarchy level ([edit protocols ldp oam]) while configuring the ecmp option only for a specific FEC ([edit protocols ldp oam fec address bfd-liveness-detection]).

holddown-interval: Specify how long the BFD session must remain up before the route or next hop is added. A value of 0 causes the route or next hop to be added as soon as the BFD session comes back up.

minimum-interval: Specify the minimum transmit and receive interval. If you configure the minimum-interval option, you do not need to configure the minimum-receive-interval or minimum-transmit-interval option.

minimum-receive-interval: Specify the minimum receive interval. The range is 1 through 255,000 milliseconds.

minimum-transmit-interval: Specify the minimum transmit interval. The range is 1 through 255,000 milliseconds.

multiplier: Specify the detection time multiplier. The range is 1 through 255.

version: Specify the BFD version, either version 0 or version 1. By default, Junos OS attempts to determine the BFD version automatically.
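The fragment below sketches how you might enable BFD for the LDP LSP of a single FEC at the [edit protocols ldp oam] hierarchy level. The FEC address and timer values are illustrative assumptions, not recommendations. In the general BFD model (RFC 5880), detection time is roughly the negotiated interval multiplied by the multiplier. These values would therefore give about 900 milliseconds, assuming the neighbor negotiates the same interval and adaptation has not raised it.

```
protocols {
    ldp {
        oam {
            /* Illustrative FEC address and timer values */
            fec 10.255.16.14/32 {
                bfd-liveness-detection {
                    minimum-interval 300;
                    multiplier 3;
                }
            }
        }
    }
}
```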

Configuring ECMP-Aware BFD for LDP LSPs

When you configure BFD for a FEC, a BFD session is established for only one active local next hop for the router. However, you can configure multiple BFD sessions, one for each equal-cost multipath (ECMP) path associated with the FEC. For this to function properly, you also need to configure LDP LSP periodic traceroute. (See Configuring LDP LSP Traceroute.) LDP LSP traceroute is used to discover ECMP paths. A BFD session is initiated for each ECMP path discovered. Whenever a BFD session for one of the ECMP paths fails, an error is logged.

LDP LSP traceroute is run periodically to check the integrity of the ECMP paths. The following might occur when a problem is discovered:

If the latest LDP LSP traceroute for a FEC differs from the previous traceroute, the BFD sessions associated with that FEC (the BFD sessions for address ranges that have changed since the previous run) are brought down, and new BFD sessions are initiated for the destination addresses in the altered ranges.

If the LDP LSP traceroute returns an error (for example, a timeout), all the BFD sessions associated with that FEC are torn down.

To configure LDP to establish BFD sessions for all ECMP paths configured for the specified FEC, include the ecmp statement:

ecmp;

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.

Along with the ecmp statement, you must also include the periodic-traceroute statement, either in the global LDP OAM configuration (at the [edit protocols ldp oam] or [edit logical-systems logical-system-name protocols ldp oam] hierarchy level) or in the configuration for the specified FEC (at the [edit protocols ldp oam fec address] or [edit logical-systems logical-system-name protocols ldp oam fec address] hierarchy level). Otherwise, the commit operation fails.

ACX Series routers do not support the [edit logical-systems] hierarchy level.
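The following is a sketch of ECMP-aware BFD. It assumes periodic traceroute is enabled globally at [edit protocols ldp oam] and ecmp is set for only one FEC, which satisfies the commit requirement described above. The FEC address and the 10-minute traceroute frequency are illustrative values only.

```
protocols {
    ldp {
        oam {
            /* Global periodic traceroute, required when ecmp is configured;
               the frequency value (in minutes) is illustrative */
            periodic-traceroute {
                frequency 10;
            }
            /* Illustrative FEC address */
            fec 10.255.16.14/32 {
                bfd-liveness-detection {
                    ecmp;
                }
            }
        }
    }
}
```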

Configuring a Failure Action for the BFD Session on an LDP LSP

You can configure how LDP handles the route and next hop when a BFD session on an LDP LSP fails. The failure event could be an existing BFD session that has gone down or a BFD session that never came up. LDP adds back the route or next hop when the relevant BFD session comes back up.

You can configure one of the following failure action options for the failure-action statement in the event of a BFD session failure on the LDP LSP:

remove-nexthop: Removes the route corresponding to the next hop of the LSP's route at the ingress node when a BFD session failure event is detected.

remove-route: Removes the route corresponding to the LSP from the appropriate routing tables when a BFD session failure event is detected. If the LSP is configured with ECMP and a BFD session corresponding to any path goes down, the route is removed.

To configure a failure action in the event of a BFD session failure on an LDP LSP, include either the remove-nexthop option or the remove-route option for the failure-action statement:

failure-action {
    remove-nexthop;
    remove-route;
}

For a list of hierarchy levels at which you can include this statement, see the statement summary section for this statement.
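As a sketch, assuming BFD is configured for an illustrative FEC address, the following fragment removes the LSP's route when the BFD session fails. Only one failure action is shown because you include either remove-nexthop or remove-route. The interval value is also an assumption for illustration.

```
protocols {
    ldp {
        oam {
            /* Illustrative FEC address and interval */
            fec 10.255.16.14/32 {
                bfd-liveness-detection {
                    minimum-interval 300;
                    failure-action {
                        /* Include one option: remove-route or remove-nexthop */
                        remove-route;
                    }
                }
            }
        }
    }
}
```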

Configuring the Holddown Interval for the BFD Session

You can specify how long the BFD session must be up before a route or next hop is added by configuring the holddown-interval statement at either the [edit protocols ldp oam bfd-liveness-detection] hierarchy level or at the [edit protocols ldp oam fec address