Configuring Chassis Clustering on SRX Series Devices

SRX Series Services Gateways can be configured to operate in cluster mode, in which a pair of devices is connected and configured to operate as a single device to provide high availability. In a chassis cluster, the two nodes back each other up: one node acts as the primary device and the other as the secondary device, which ensures stateful failover of processes and services if a system or hardware failure occurs. If the primary device fails, the secondary device takes over processing of traffic.

For SRX300, SRX320, SRX340, SRX345, and SRX380 devices, connect ge-0/0/1 on node 0 to ge-0/0/1 on node 1. The factory-default configuration does not include an HA configuration. Before you enable HA, remove any existing configuration from the physical interfaces that HA uses. Table 1 lists the physical interfaces used by HA on SRX300, SRX320, SRX340, SRX345, and SRX380 devices.

| Device | fxp0 Interface (HA MGT) | fxp1 Interface (HA Control) | Fab Interface |
|---|---|---|---|
| SRX300 | ge-0/0/0 | ge-0/0/1 | User defined |
| SRX320 | ge-0/0/0 | ge-0/0/1 | User defined |
| SRX340 | Dedicated | ge-0/0/1 | User defined |
| SRX345 | Dedicated | ge-0/0/1 | User defined |
| SRX380 | Dedicated | ge-0/0/1 | User defined |


Example: Configure Chassis Clustering on SRX Series Firewalls

This example shows how to set up chassis clustering on an SRX Series Firewall (using the SRX1500 or SRX1600 as an example).

Requirements

Before you begin:

Physically connect the two devices and ensure that they are the same model. For example, on the SRX1500 or SRX1600 Firewall, connect the dedicated control ports on node 0 and node 1.

Set the two devices to cluster mode and reboot them. Enter the following operational mode command on each device, for example:

On node 0:

user@host> set chassis cluster cluster-id 1 node 0 reboot

On node 1:

user@host> set chassis cluster cluster-id 1 node 1 reboot

The cluster-id must be the same on both devices, but the node IDs must differ: one device is node 0 and the other is node 1. The cluster-id range is 0 through 255; setting it to 0 is equivalent to disabling cluster mode.
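As a rough illustration of these rules, the following Python sketch checks the cluster-id and node ID you plan to enter on two devices. The `check_cluster_ids` function is hypothetical and is not part of Junos OS; it only encodes the constraints stated above: the same cluster-id on both devices, node IDs 0 and 1, a range of 0 through 255, and 0 meaning cluster mode is disabled.

```python
# Range stated above: 0 through 255 (0 disables cluster mode).
VALID_CLUSTER_IDS = range(0, 256)


def check_cluster_ids(device_a: tuple[int, int], device_b: tuple[int, int]) -> list[str]:
    """Check two (cluster_id, node_id) settings; an empty list means no problems."""
    (cid_a, nid_a), (cid_b, nid_b) = device_a, device_b
    problems = []
    for cid in (cid_a, cid_b):
        if cid not in VALID_CLUSTER_IDS:
            problems.append(f"cluster-id {cid} is outside 0-255")
    if cid_a != cid_b:
        problems.append("both devices must use the same cluster-id")
    elif cid_a == 0:
        problems.append("cluster-id 0 disables cluster mode")
    if {nid_a, nid_b} != {0, 1}:
        problems.append("one device must be node 0 and the other node 1")
    return problems


# The commands above: cluster-id 1 on both devices, node 0 and node 1.
print(check_cluster_ids((1, 0), (1, 1)))  # []
```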

After clustering is enabled, in the SRX1500 or SRX1600 Firewall example, the ge-0/0/0 interface on node 1 changes to ge-7/0/0.

After clustering occurs:

- For SRX300 devices, the ge-0/0/1 interface on node 1 changes to ge-1/0/1.
- For SRX320 devices, the ge-0/0/1 interface on node 1 changes to ge-3/0/1.
- For SRX340, SRX345, and SRX380 devices, the ge-0/0/1 interface on node 1 changes to ge-5/0/1.

After the reboot, the following interfaces are assigned and repurposed to form a cluster:

- For SRX300 and SRX320 devices, ge-0/0/0 becomes fxp0 and is used for individual management of each node in the chassis cluster.
- SRX340, SRX345, and SRX380 devices have a dedicated fxp0 port.
- For all SRX300, SRX320, SRX340, SRX345, and SRX380 devices, ge-0/0/1 becomes fxp1 and is used as the control link within the chassis cluster.

The other interfaces on the secondary device are also renamed.

See Understanding SRX Series Chassis Cluster Slot Numbering and Physical Port and Logical Interface Naming for complete mapping of the SRX Series Firewalls.

From this point forward, configuration of the cluster is synchronized between the node members and the two separate devices function as one device.

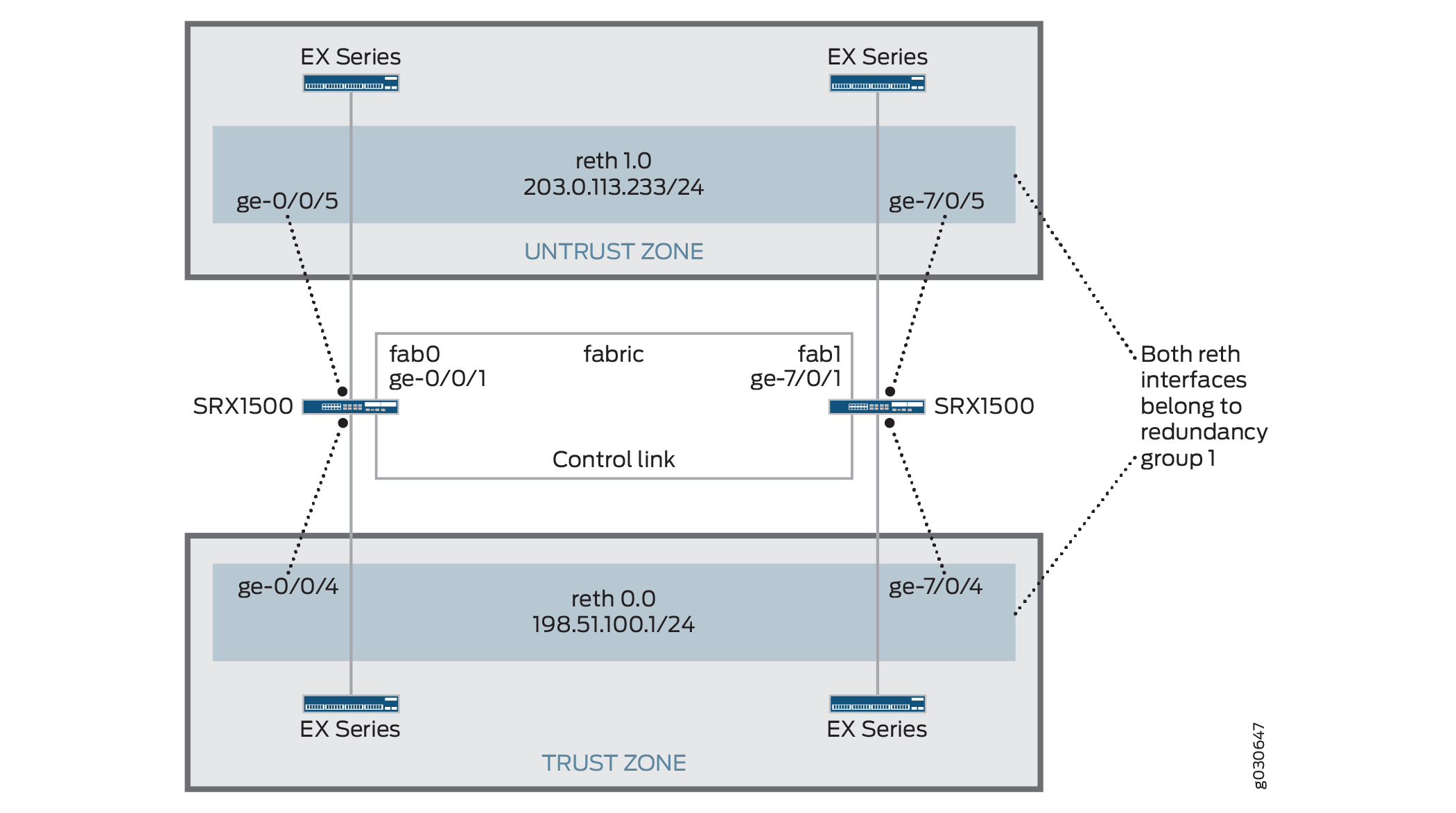

Overview

This example shows how to set up chassis clustering on an SRX Series Firewall, using the SRX1500 or SRX1600 device as an example.

Node 1 renumbers its interfaces by adding the total number of system FPCs (the renumbering constant) to the original FPC number of each interface. See Table 2 for interface renumbering on SRX Series Firewalls.

| SRX Series Services Gateway | Renumbering Constant | Node 0 Interface Name | Node 1 Interface Name |
|---|---|---|---|
| SRX300 | 1 | ge-0/0/0 | ge-1/0/0 |
| SRX320 | 3 | ge-0/0/0 | ge-3/0/0 |
| SRX340, SRX345, SRX380 | 5 | ge-0/0/0 | ge-5/0/0 |
| SRX1500 | 7 | ge-0/0/0 | ge-7/0/0 |
| SRX1600 | 7 | ge-0/0/0 | ge-7/0/0 |
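The renumbering rule can be expressed as a short Python function. This is a sketch only: the `node1_name` helper is hypothetical, the constants are copied from the table above, and it assumes interface names of the form type-fpc/pic/port, as used throughout this example.

```python
# Node 1 renumbering constants, copied from the table above.
RENUMBERING_CONSTANT = {
    "SRX300": 1,
    "SRX320": 3,
    "SRX340": 5,
    "SRX345": 5,
    "SRX380": 5,
    "SRX1500": 7,
    "SRX1600": 7,
}


def node1_name(model: str, node0_name: str) -> str:
    """Return the node 1 name of an interface, given its node 0 name.

    Assumes the type-fpc/pic/port form, for example ge-0/0/1.
    """
    prefix, slots = node0_name.split("-", 1)  # "ge", "0/0/1"
    fpc, pic, port = slots.split("/")
    new_fpc = int(fpc) + RENUMBERING_CONSTANT[model]  # add the constant to the FPC only
    return f"{prefix}-{new_fpc}/{pic}/{port}"


print(node1_name("SRX1500", "ge-0/0/1"))  # ge-7/0/1
print(node1_name("SRX320", "ge-0/0/1"))   # ge-3/0/1
```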

After clustering is enabled, the system creates the fxp0, fxp1, and em0 interfaces. Depending on the device, the fxp0, fxp1, and em0 interfaces are mapped to physical interfaces and are not user defined. The fab interface, however, is user defined.

Figure 1 shows the topology used in this example.

Configuration

Procedure

CLI Quick Configuration

To quickly configure a chassis cluster on an SRX1500 Firewall, copy the following commands and paste them into the CLI:

On {primary:node0}

[edit]
set groups node0 system host-name srx1500-1
set groups node0 interfaces fxp0 unit 0 family inet address 192.16.35.46/24
set groups node1 system host-name srx1500-2
set groups node1 interfaces fxp0 unit 0 family inet address 192.16.35.47/24
set groups node0 system backup-router <backup next-hop from fxp0> destination <management network/mask>
set groups node1 system backup-router <backup next-hop from fxp0> destination <management network/mask>
set apply-groups "${node}"
set interfaces fab0 fabric-options member-interfaces ge-0/0/1
set interfaces fab1 fabric-options member-interfaces ge-7/0/1
set chassis cluster redundancy-group 0 node 0 priority 100
set chassis cluster redundancy-group 0 node 1 priority 1
set chassis cluster redundancy-group 1 node 0 priority 100
set chassis cluster redundancy-group 1 node 1 priority 1
set chassis cluster redundancy-group 1 interface-monitor ge-0/0/5 weight 255
set chassis cluster redundancy-group 1 interface-monitor ge-0/0/4 weight 255
set chassis cluster redundancy-group 1 interface-monitor ge-7/0/5 weight 255
set chassis cluster redundancy-group 1 interface-monitor ge-7/0/4 weight 255
set chassis cluster reth-count 2
set interfaces ge-0/0/5 gigether-options redundant-parent reth1
set interfaces ge-7/0/5 gigether-options redundant-parent reth1
set interfaces reth1 redundant-ether-options redundancy-group 1
set interfaces reth1 unit 0 family inet address 203.0.113.233/24
set interfaces ge-0/0/4 gigether-options redundant-parent reth0
set interfaces ge-7/0/4 gigether-options redundant-parent reth0
set interfaces reth0 redundant-ether-options redundancy-group 1
set interfaces reth0 unit 0 family inet address 198.51.100.1/24
set security zones security-zone Untrust interfaces reth1.0
set security zones security-zone Trust interfaces reth0.0

If you are configuring an SRX300, SRX320, SRX340, SRX345, or SRX380 device, see Table 3 for the commands and interface settings for your device, and substitute them into the commands above.

| Command | SRX300 | SRX320 | SRX340, SRX345, SRX380 |
|---|---|---|---|

Step-by-Step Procedure

The following example requires you to navigate various levels in the configuration hierarchy. For instructions on how to do that, see Using the CLI Editor in Configuration Mode in the CLI User Guide.

To configure a chassis cluster on an SRX Series Firewall:

Perform Steps 1 through 5 on the primary device (node 0). They are automatically copied over to the secondary device (node 1) when you execute a commit command. The configurations are synchronized because the control link and fab link interfaces are activated. To verify the configurations, use the show interfaces terse command and review the output.

Set up hostnames and management IP addresses for each device using configuration groups. These configurations are specific to each device and are unique to its specific node.

user@host# set groups node0 system host-name srx1500-1
user@host# set groups node0 interfaces fxp0 unit 0 family inet address 192.16.35.46/24
user@host# set groups node1 system host-name srx1500-2
user@host# set groups node1 interfaces fxp0 unit 0 family inet address 192.16.35.47/24
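Conceptually, each node picks up only its own group because `${node}` expands to `node0` or `node1` on that node, through the `apply-groups "${node}"` statement configured in a later step. The Python sketch below models that selection with plain dictionaries. It is a simplified, hypothetical model (`GROUPS` and `effective_settings` are illustrative names), not how Junos OS actually processes configuration, and it assumes that a statement configured directly in the main configuration takes precedence over a value inherited from a group.

```python
from typing import Optional

# Hypothetical model of the per-node groups configured above.
GROUPS = {
    "node0": {"host-name": "srx1500-1", "fxp0-address": "192.16.35.46/24"},
    "node1": {"host-name": "srx1500-2", "fxp0-address": "192.16.35.47/24"},
}


def effective_settings(node_id: int, main_config: Optional[dict] = None) -> dict:
    """Return the settings a node ends up with after "${node}" selects its group."""
    group = GROUPS[f"node{node_id}"]  # "${node}" expands to node0 or node1
    merged = dict(group)              # copy: start from the inherited group values
    merged.update(main_config or {})  # assumption: directly configured values win
    return merged


print(effective_settings(0)["host-name"])     # srx1500-1
print(effective_settings(1)["fxp0-address"])  # 192.16.35.47/24
```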

Set the default route and backup router for each node.

user@host# set groups node0 system backup-router <backup next-hop from fxp0> destination <management network/mask>
user@host# set groups node1 system backup-router <backup next-hop from fxp0> destination <management network/mask>

Set the apply-groups command so that the individual configurations for each node set by the previous commands are applied only to that node.

user@host# set apply-groups "${node}"

Define the interfaces used for the fab connection (the data plane links used to synchronize real-time objects, or RTOs) by using physical port ge-0/0/1 from each node. These interfaces must be connected back-to-back or through a Layer 2 infrastructure.

user@host# set interfaces fab0 fabric-options member-interfaces ge-0/0/1
user@host# set interfaces fab1 fabric-options member-interfaces ge-7/0/1

Set up redundancy group 0 for the Routing Engine failover properties, and set up redundancy group 1 (all interfaces are in one redundancy group in this example) to define the failover properties for the redundant Ethernet interfaces.

user@host# set chassis cluster redundancy-group 0 node 0 priority 100
user@host# set chassis cluster redundancy-group 0 node 1 priority 1
user@host# set chassis cluster redundancy-group 1 node 0 priority 100
user@host# set chassis cluster redundancy-group 1 node 1 priority 1
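To show what the priority values express, the following simplified Python sketch picks the preferred primary node for a redundancy group: the node with the higher priority. The `preferred_primary` function is hypothetical. It assumes both nodes are up and ignores preemption, manual failover, and tie-breaking (a tie returns `None`), all of which affect the real election.

```python
from typing import Optional


def preferred_primary(priorities: dict[int, int]) -> Optional[int]:
    """Return the node ID with the highest priority, or None if the priorities tie."""
    best = max(priorities.values())
    winners = [node for node, priority in priorities.items() if priority == best]
    return winners[0] if len(winners) == 1 else None


# Priorities configured above for redundancy groups 0 and 1.
rg0 = {0: 100, 1: 1}
rg1 = {0: 100, 1: 1}
print(preferred_primary(rg0), preferred_primary(rg1))  # 0 0
```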

Set up interface monitoring to monitor the health of the interfaces and trigger redundancy group failover.

We do not recommend interface monitoring for redundancy group 0, because an interface flap would cause the control plane to switch from one node to the other.

user@host# set chassis cluster redundancy-group 1 interface-monitor ge-0/0/5 weight 255
user@host# set chassis cluster redundancy-group 1 interface-monitor ge-0/0/4 weight 255
user@host# set chassis cluster redundancy-group 1 interface-monitor ge-7/0/5 weight 255
user@host# set chassis cluster redundancy-group 1 interface-monitor ge-7/0/4 weight 255

A redundancy group fails over only when its threshold reaches 0; the weight of each failed monitored interface is subtracted from that threshold.
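The following Python sketch models that arithmetic for one node. It assumes the usual Junos OS behavior in which each redundancy group starts with a threshold of 255 and the weight of every failed monitored interface on that node is subtracted from it. With a weight of 255, as configured above, a single failed interface is enough to trigger failover. The function names and the weights of 100 in the second example are hypothetical, chosen only for contrast.

```python
# Assumed starting threshold for each redundancy group on a node.
INITIAL_THRESHOLD = 255


def remaining_threshold(weights: dict[str, int], down: set[str]) -> int:
    """Subtract the weight of each failed monitored interface, never going below 0."""
    lost = sum(weight for name, weight in weights.items() if name in down)
    return max(INITIAL_THRESHOLD - lost, 0)


def triggers_failover(weights: dict[str, int], down: set[str]) -> bool:
    """The redundancy group fails over only when the threshold reaches 0."""
    return remaining_threshold(weights, down) == 0


# Node 0 interfaces monitored in this example, each with weight 255.
node0 = {"ge-0/0/5": 255, "ge-0/0/4": 255}
print(triggers_failover(node0, {"ge-0/0/5"}))  # True

# Hypothetical lower weights: one failure leaves 155, so no failover.
node0_low = {"ge-0/0/5": 100, "ge-0/0/4": 100}
print(remaining_threshold(node0_low, {"ge-0/0/5"}))  # 155
```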

Set up the redundant Ethernet (reth) interfaces and assign the redundant interface to a zone.

user@host# set chassis cluster reth-count 2
user@host# set interfaces ge-0/0/5 gigether-options redundant-parent reth1
user@host# set interfaces ge-7/0/5 gigether-options redundant-parent reth1
user@host# set interfaces reth1 redundant-ether-options redundancy-group 1
user@host# set interfaces reth1 unit 0 family inet address 203.0.113.233/24
user@host# set interfaces ge-0/0/4 gigether-options redundant-parent reth0
user@host# set interfaces ge-7/0/4 gigether-options redundant-parent reth0
user@host# set interfaces reth0 redundant-ether-options redundancy-group 1
user@host# set interfaces reth0 unit 0 family inet address 198.51.100.1/24
user@host# set security zones security-zone Untrust interfaces reth1.0
user@host# set security zones security-zone Trust interfaces reth0.0
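Before committing, you might sanity-check the reth layout. The Python sketch below is a hypothetical helper, not a Junos OS tool: given a mapping of child interfaces to their redundant-parent, it checks that every reth index is below reth-count (reth-count 2 allows reth0 and reth1) and that each reth has at least one child interface on each node. It assumes the SRX1500/SRX1600 renumbering constant of 7 to tell node 1 interfaces (FPC 7 and above) from node 0 interfaces.

```python
def check_reths(parents: dict[str, str], reth_count: int, constant: int = 7) -> list[str]:
    """Return a list of problems found in a child-to-reth mapping (empty means OK)."""
    nodes_per_reth: dict[str, set[int]] = {}
    for ifname, reth in parents.items():
        # "ge-7/0/5" -> FPC 7; FPCs at or above the constant belong to node 1.
        fpc = int(ifname.split("-", 1)[1].split("/")[0])
        node = 1 if fpc >= constant else 0
        nodes_per_reth.setdefault(reth, set()).add(node)

    problems = []
    for reth, nodes in sorted(nodes_per_reth.items()):
        if int(reth[len("reth"):]) >= reth_count:
            problems.append(f"{reth} is outside reth-count {reth_count}")
        if nodes != {0, 1}:
            problems.append(f"{reth} needs child interfaces on both nodes")
    return problems


# Child-to-parent assignments from the commands above.
example = {
    "ge-0/0/5": "reth1",
    "ge-7/0/5": "reth1",
    "ge-0/0/4": "reth0",
    "ge-7/0/4": "reth0",
}
print(check_reths(example, reth_count=2))  # []
```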

Results

From operational mode, confirm your configuration by entering the show configuration command. If the output does not display the intended configuration, repeat the configuration instructions in this example to correct it.

For brevity, this show command output includes only the configuration that is relevant to this example. Any other configuration on the system has been replaced with ellipses (...).

user@host> show configuration

version x.xx.x;
groups {
    node0 {
        system {
            host-name SRX1500-1;
            backup-router 10.100.22.1 destination 66.129.243.0/24;
        }
        interfaces {
            fxp0 {
                unit 0 {
                    family inet {
                        address 192.16.35.46/24;
                    }
                }
            }
        }
    }
    node1 {
        system {
            host-name SRX1500-2;
            backup-router 10.100.21.1 destination 66.129.243.0/24;
        }
        interfaces {
            fxp0 {
                unit 0 {
                    family inet {
                        address 192.16.35.47/24;
                    }
                }
            }
        }
    }
}
apply-groups "${node}";
chassis {
    cluster {
        reth-count 2;
        redundancy-group 0 {
            node 0 priority 100;
            node 1 priority 1;
        }
        redundancy-group 1 {
            node 0 priority 100;
            node 1 priority 1;
            interface-monitor {
                ge-0/0/5 weight 255;
                ge-0/0/4 weight 255;
                ge-7/0/5 weight 255;
                ge-7/0/4 weight 255;
            }
        }
    }
}
interfaces {
    ge-0/0/5 {
        gigether-options {
            redundant-parent reth1;
        }
    }
    ge-0/0/4 {
        gigether-options {
            redundant-parent reth0;
        }
    }
    ge-7/0/5 {
        gigether-options {
            redundant-parent reth1;
        }
    }
    ge-7/0/4 {
        gigether-options {
            redundant-parent reth0;
        }
    }
    fab0 {
        fabric-options {
            member-interfaces {
                ge-0/0/1;
            }
        }
    }
    fab1 {
        fabric-options {
            member-interfaces {
                ge-7/0/1;
            }
        }
    }
    reth0 {
        redundant-ether-options {
            redundancy-group 1;
        }
        unit 0 {
            family inet {
                address 198.51.100.1/24;
            }
        }
    }
    reth1 {
        redundant-ether-options {
            redundancy-group 1;
        }
        unit 0 {
            family inet {
                address 203.0.113.233/24;
            }
        }
    }
}
...
security {
    zones {
        security-zone Untrust {
            interfaces {
                reth1.0;
            }
        }
        security-zone Trust {
            interfaces {
                reth0.0;
            }
        }
    }
    policies {
        from-zone Trust to-zone Untrust {
            policy 1 {
                match {
                    source-address any;
                    destination-address any;
                    application any;
                }
                then {
                    permit;
                }
            }
        }
    }
}

If you are done configuring the device, enter commit from configuration mode.

Verification

Confirm that the configuration is working properly.

- Verifying Chassis Cluster Status

- Verifying Chassis Cluster Interfaces

- Verifying Chassis Cluster Statistics

- Verifying Chassis Cluster Control Plane Statistics

- Verifying Chassis Cluster Data Plane Statistics

- Verifying Chassis Cluster Redundancy Group Status

- Troubleshooting with Logs

Verifying Chassis Cluster Status

Purpose

Verify the chassis cluster status, failover status, and redundancy group information.

Action

From operational mode, enter the show chassis cluster status command.

{primary:node0}
user@host> show chassis cluster status
Cluster ID: 1
Node Priority Status Preempt Manual failover

Redundancy group: 0 , Failover count: 1
node0 100 primary no no
node1 1 secondary no no

Redundancy group: 1 , Failover count: 1
node0 0 primary no no
node1 0 secondary no no
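If you collect this output in scripts, a small parser can report which node is primary for each redundancy group. The Python sketch below is hypothetical and assumes the row layout shown above (node name, priority, status, preempt, manual failover); the columns may differ between Junos OS releases, so check the parser against your own output.

```python
import re


def primaries(output: str) -> dict[int, str]:
    """Map each redundancy group number to the node listed as primary."""
    result: dict[int, str] = {}
    group = None
    for line in output.splitlines():
        header = re.match(r"\s*Redundancy group:\s*(\d+)", line)
        if header:
            group = int(header.group(1))
            continue
        fields = line.split()
        # Assumed row layout: <node> <priority> <status> <preempt> <manual failover>
        if group is not None and len(fields) >= 3 and fields[0].startswith("node"):
            if fields[2] == "primary":
                result[group] = fields[0]
    return result


sample = """Cluster ID: 1
Node Priority Status Preempt Manual failover
Redundancy group: 0 , Failover count: 1
node0 100 primary no no
node1 1 secondary no no
Redundancy group: 1 , Failover count: 1
node0 0 primary no no
node1 0 secondary no no"""
print(primaries(sample))  # {0: 'node0', 1: 'node0'}
```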

Verifying Chassis Cluster Interfaces

Purpose

Verify information about chassis cluster interfaces.

Action

From operational mode, enter the show chassis cluster interfaces command.

{primary:node0}
user@host> show chassis cluster interfaces
Control link name: em0

Redundant-ethernet Information:
Name Status Redundancy-group
reth0 Up 1
reth1 Up 1

Interface Monitoring:
Interface Weight Status Redundancy-group
ge-7/0/5 255 Up 1
ge-7/0/4 255 Up 1
ge-0/0/5 255 Up 1
ge-0/0/4 255 Up 1

Verifying Chassis Cluster Statistics

Purpose

Verify information about the statistics of the different objects being synchronized, the fabric and control interface hellos, and the status of the monitored interfaces in the cluster.

Action

From operational mode, enter the show chassis cluster statistics command.

{primary:node0}
user@host> show chassis cluster statistics
Control link statistics:
Control link 0:
Heartbeat packets sent: 2276
Heartbeat packets received: 2280
Heartbeat packets errors: 0
Fabric link statistics:
Child link 0
Probes sent: 2272
Probes received: 597
Services Synchronized:
Service name RTOs sent RTOs received
Translation context 0 0
Incoming NAT 0 0
Resource manager 6 0
Session create 161 0
Session close 148 0
Session change 0 0
Gate create 0 0
Session ageout refresh requests 0 0
Session ageout refresh replies 0 0
IPSec VPN 0 0
Firewall user authentication 0 0
MGCP ALG 0 0
H323 ALG 0 0
SIP ALG 0 0
SCCP ALG 0 0
PPTP ALG 0 0
RPC ALG 0 0
RTSP ALG 0 0
RAS ALG 0 0
MAC address learning 0 0
GPRS GTP 0 0

Verifying Chassis Cluster Control Plane Statistics

Purpose

Verify information about chassis cluster control plane statistics (heartbeats sent and received) and the fabric link statistics (probes sent and received).

Action

From operational mode, enter the show chassis cluster control-plane statistics command.

{primary:node0}
user@host> show chassis cluster control-plane statistics
Control link statistics:
Control link 0:
Heartbeat packets sent: 2294
Heartbeat packets received: 2298
Heartbeat packets errors: 0
Fabric link statistics:
Child link 0
Probes sent: 2290
Probes received: 615
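A script can also flag obvious link problems in this output. The Python sketch below is hypothetical: it collects the counters under each control link and fabric child link, then reports any nonzero error counter and any received counter that is still 0. Treat a flagged counter as a hint worth investigating on both nodes, not as a diagnosis.

```python
import re


def link_counters(output: str) -> dict[str, int]:
    """Collect counters such as 'Control link 0 / Heartbeat packets sent' -> 2294."""
    counters: dict[str, int] = {}
    section = ""
    for raw in output.splitlines():
        line = raw.strip()
        if re.fullmatch(r"(Control link|Child link) \d+:?", line):
            section = line.rstrip(":")  # e.g. "Control link 0" or "Child link 0"
            continue
        counter = re.fullmatch(r"(.+?):\s*(\d+)", line)
        if counter and section:
            counters[f"{section} / {counter.group(1)}"] = int(counter.group(2))
    return counters


def link_warnings(counters: dict[str, int]) -> list[str]:
    """Flag nonzero error counters and zero 'received' counters."""
    warnings = []
    for name, value in counters.items():
        if name.endswith("errors") and value > 0:
            warnings.append(f"{name} = {value}")
        elif name.endswith("received") and value == 0:
            warnings.append(f"{name} = 0")
    return warnings


sample = """Control link statistics:
Control link 0:
Heartbeat packets sent: 2294
Heartbeat packets received: 2298
Heartbeat packets errors: 0
Fabric link statistics:
Child link 0
Probes sent: 2290
Probes received: 615"""
counters = link_counters(sample)
print(counters["Child link 0 / Probes received"])  # 615
print(link_warnings(counters))  # []
```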

Verifying Chassis Cluster Data Plane Statistics

Purpose

Verify information about the number of RTOs sent and received for services.

Action

From operational mode, enter the show chassis cluster data-plane statistics command.

{primary:node0}
user@host> show chassis cluster data-plane statistics
Services Synchronized:
Service name RTOs sent RTOs received
Translation context 0 0
Incoming NAT 0 0
Resource manager 6 0
Session create 161 0
Session close 148 0
Session change 0 0
Gate create 0 0
Session ageout refresh requests 0 0
Session ageout refresh replies 0 0
IPSec VPN 0 0
Firewall user authentication 0 0
MGCP ALG 0 0
H323 ALG 0 0
SIP ALG 0 0
SCCP ALG 0 0
PPTP ALG 0 0
RPC ALG 0 0
RTSP ALG 0 0
RAS ALG 0 0
MAC address learning 0 0
GPRS GTP 0 0

Verifying Chassis Cluster Redundancy Group Status

Purpose

Verify the state and priority of both nodes in a cluster and information about whether the primary node has been preempted or whether there has been a manual failover.

Action

From operational mode, enter the show chassis cluster status redundancy-group command.

{primary:node0}
user@host> show chassis cluster status redundancy-group 1
Cluster ID: 1
Node Priority Status Preempt Manual failover

Redundancy group: 1, Failover count: 1
node0 100 primary no no
node1 50 secondary no no

Troubleshooting with Logs

Purpose

Use these logs to identify chassis cluster issues. Check the logs on both nodes.

Action

From operational mode, enter these show log commands.

user@host> show log jsrpd
user@host> show log chassisd
user@host> show log messages
user@host> show log dcd
user@host> show traceoptions

Viewing a Chassis Cluster Configuration

Purpose

Display chassis cluster verification options.

Action

From the CLI, enter the show chassis cluster ? command:

{primary:node1}

user@host> show chassis cluster ?

Possible completions:
interfaces Display chassis-cluster interfaces
statistics Display chassis-cluster traffic statistics
status Display chassis-cluster status

Viewing Chassis Cluster Statistics

Purpose

Display information about chassis cluster services and interfaces.

Action

From the CLI, enter the show chassis cluster statistics command:

{primary:node1}

user@host> show chassis cluster statistics

Control link statistics:
Control link 0:
Heartbeat packets sent: 798
Heartbeat packets received: 784
Fabric link statistics:
Child link 0
Probes sent: 793
Probes received: 0
Services Synchronized:
Service name RTOs sent RTOs received
Translation context 0 0
Incoming NAT 0 0
Resource manager 0 0
Session create 0 0
Session close 0 0
Session change 0 0
Gate create 0 0
Session ageout refresh requests 0 0
Session ageout refresh replies 0 0
IPSec VPN 0 0
Firewall user authentication 0 0
MGCP ALG 0 0
H323 ALG 0 0
SIP ALG 0 0
SCCP ALG 0 0
PPTP ALG 0 0
RTSP ALG 0 0

{primary:node1}

user@host> show chassis cluster statistics

Control link statistics:
Control link 0:
Heartbeat packets sent: 258689
Heartbeat packets received: 258684
Control link 1:
Heartbeat packets sent: 258689
Heartbeat packets received: 258684
Fabric link statistics:
Child link 0
Probes sent: 258681
Probes received: 258681
Child link 1
Probes sent: 258501
Probes received: 258501
Services Synchronized:
Service name RTOs sent RTOs received
Translation context 0 0
Incoming NAT 0 0
Resource manager 0 0
Session create 1 0
Session close 1 0
Session change 0 0
Gate create 0 0
Session ageout refresh requests 0 0
Session ageout refresh replies 0 0
IPSec VPN 0 0
Firewall user authentication 0 0
MGCP ALG 0 0
H323 ALG 0 0
SIP ALG 0 0
SCCP ALG 0 0
PPTP ALG 0 0
RPC ALG 0 0
RTSP ALG 0 0
RAS ALG 0 0
MAC address learning 0 0
GPRS GTP 0 0

{primary:node1}

user@host> show chassis cluster statistics

Control link statistics:
Control link 0:
Heartbeat packets sent: 82371
Heartbeat packets received: 82321
Control link 1:
Heartbeat packets sent: 0
Heartbeat packets received: 0

Clearing Chassis Cluster Statistics

To clear displayed information about chassis cluster services and interfaces, enter the clear chassis cluster statistics command from the CLI:

{primary:node1}

user@host> clear chassis cluster statistics

Cleared control-plane statistics
Cleared data-plane statistics

Understanding Automatic Chassis Cluster Synchronization Between Primary and Secondary Nodes

When you set up an SRX Series chassis cluster, the SRX Series Firewalls must be identical, including their configuration. The chassis cluster synchronization feature automatically synchronizes the configuration from the primary node to the secondary node when the secondary joins the primary as a cluster. By eliminating the manual work needed to ensure the same configurations on each node in the cluster, this feature reduces expenses.

If you want to disable automatic chassis cluster synchronization between the primary and secondary nodes, enter the set chassis cluster configuration-synchronize no-secondary-bootup-auto command in configuration mode.

To reenable automatic chassis cluster synchronization at any time, use the delete chassis cluster configuration-synchronize no-secondary-bootup-auto command in configuration mode.

To see whether automatic chassis cluster synchronization is enabled, and to see the status of the synchronization, enter the show chassis cluster information configuration-synchronization operational command.

Either the entire configuration from the primary node is applied successfully to the secondary node, or the secondary node retains its original configuration. There is no partial synchronization.

If you create a cluster with a cluster ID greater than 16 and then roll back to a previous release image that does not support extended cluster IDs, the system comes up as standalone devices.

For example, if you have a cluster set up and running with an earlier release of Junos OS, you can upgrade to Junos OS Release 12.1X45-D10 and re-create the cluster with a cluster ID greater than 16. If you later revert to the earlier release, which does not support extended cluster IDs, the system comes up with standalone devices after you reboot. If the cluster ID is less than 16 and you roll back to a previous release, the system comes back up with the previous cluster setup.


Verifying Chassis Cluster Configuration Synchronization Status

Purpose

Display the configuration synchronization status of a chassis cluster.

Action

From the CLI, enter the show chassis cluster information configuration-synchronization command:

{primary:node0}

user@host> show chassis cluster information configuration-synchronization

node0:
--------------------------------------------------------------------------
Configuration Synchronization:
Status:
Activation status: Enabled
Last sync operation: Auto-Sync
Last sync result: Not needed
Last sync mgd messages:

Events:
Mar 5 01:48:53.662 : Auto-Sync: Not needed.

node1:
--------------------------------------------------------------------------
Configuration Synchronization:
Status:
Activation status: Enabled
Last sync operation: Auto-Sync
Last sync result: Succeeded
Last sync mgd messages:
mgd: rcp: /config/juniper.conf: No such file or directory
mgd: commit complete

Events:
Mar 5 01:48:55.339 : Auto-Sync: In progress. Attempt: 1
Mar 5 01:49:40.664 : Auto-Sync: Succeeded. Attempt: 1
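To check this status from a script, the hypothetical Python sketch below extracts the Last sync result value for each node from output in the format shown above. It only reads the text and assumes the node0: and node1: section headers appear on their own lines, as in this sample.

```python
import re


def last_sync_results(output: str) -> dict[str, str]:
    """Return {'node0': '<result>', 'node1': '<result>'} from the sync status output."""
    results: dict[str, str] = {}
    node = None
    for raw in output.splitlines():
        line = raw.strip()
        header = re.fullmatch(r"(node\d+):", line)
        if header:
            node = header.group(1)  # start of a per-node section
        elif node and line.startswith("Last sync result:"):
            results[node] = line.split(":", 1)[1].strip()
    return results


sample = """node0:
Configuration Synchronization:
Status:
Activation status: Enabled
Last sync operation: Auto-Sync
Last sync result: Not needed
node1:
Configuration Synchronization:
Status:
Activation status: Enabled
Last sync operation: Auto-Sync
Last sync result: Succeeded"""
print(last_sync_results(sample))  # {'node0': 'Not needed', 'node1': 'Succeeded'}
```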