Determine a Campus Fabric Topology

Juniper Networks campus fabrics provide a single, standards-based Ethernet VPN-Virtual Extensible LAN (EVPN-VXLAN) solution that you can deploy on any campus. You can deploy campus fabrics on a two-tier network with a collapsed core or a campus-wide system that involves multiple buildings with separate distribution and core layers.

This topic lists the switch models that support each campus fabric deployment. Although all QFX5130 variants support campus fabric, Mist supports only the following variants: QFX-5130-32CD, QFX-5130-48C, and QFX-5130-48CM.

You can build and manage a campus fabric using the Mist portal. This topic describes the following campus fabric topologies that Juniper Mist supports:

- EVPN Multihoming

- Campus Fabric Core-Distribution

- Campus Fabric IP Clos

Depending on your requirements, you can build a campus fabric at the organization level or at the site level. Use an organization-level configuration when you want a single, unified fabric that spans multiple sites. Note that an organization-level topology serves only use cases in which the sites connect through a common pair of core devices. Use a site-level configuration when each site operates independently.

The EVPN Multihoming topology is available only for site-level campus fabrics. You cannot build it at the organization level.
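The scope rules above can be summarized as a small check. The following Python sketch is hypothetical: `Topology` and `allowed_scopes` are illustrative names, not part of Mist or any Juniper API, and the sketch assumes that Campus Fabric Core-Distribution and Campus Fabric IP Clos can be built at either scope.

```python
from enum import Enum


class Topology(Enum):
    # Topology names as used in this topic (illustrative, not a Mist data model).
    EVPN_MULTIHOMING = "EVPN Multihoming"
    CORE_DISTRIBUTION = "Campus Fabric Core-Distribution"
    IP_CLOS = "Campus Fabric IP Clos"


def allowed_scopes(topology: Topology, sites_share_core_pair: bool) -> set[str]:
    """Return the build scopes ("site", "organization") that fit the rules above."""
    scopes = {"site"}  # assumption: every topology can be built at the site level
    # Organization level only fits sites connected through a common pair of cores,
    # and EVPN Multihoming can never be built at the organization level.
    if sites_share_core_pair and topology is not Topology.EVPN_MULTIHOMING:
        scopes.add("organization")
    return scopes
```

For example, `allowed_scopes(Topology.EVPN_MULTIHOMING, True)` still returns only `{"site"}`, because the topology restriction applies even when the sites share a pair of cores.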

To help you determine which campus fabric to use, the following sections describe the use cases that each of the above topologies addresses:

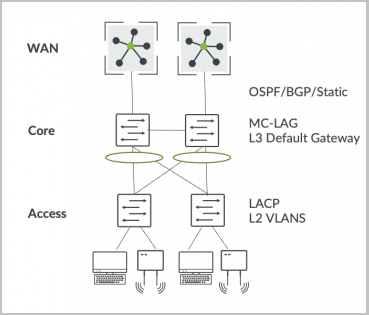

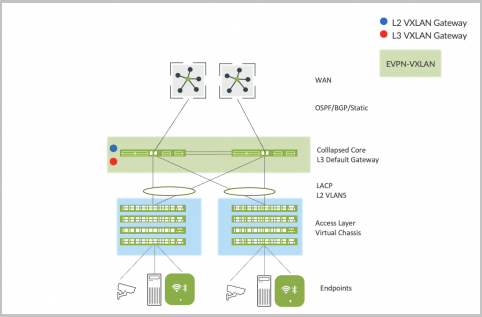

EVPN Multihoming for Collapsed Core

The Juniper Networks campus fabric EVPN multihoming solution supports a collapsed core architecture, a networking architecture for small to midsize enterprises. In a collapsed core model, you deploy up to two Ethernet switching platforms that are interconnected using technologies such as Virtual Router Redundancy Protocol (VRRP), Hot Standby Router Protocol (HSRP), and multichassis link aggregation group (MC-LAG). Endpoint devices such as laptops, access points (APs), printers, and Internet of Things (IoT) devices plug into the access layer at various Ethernet speeds, such as 100M, 1G, 2.5G, and 10G. Each access layer switch is multihomed to each of the collapsed core switches.

The following image represents the traditional collapsed core deployment model:

However, the traditional collapsed core deployment model presents the following challenges:

- Its proprietary MC-LAG technology requires a homogeneous vendor approach.

- It lacks horizontal scale. It supports only up to two core devices in a single topology.

- It lacks native traffic isolation capabilities in the core.

- Not all implementations support active-active load balancing to the access layer.

EVPN Multihoming addresses these challenges and provides the following advantages:

- Provides a standards-based EVPN-VXLAN framework.

- Supports horizontal scale up to four core devices.

- Provides traffic isolation capabilities native to EVPN-VXLAN.

- Provides native active-active load-balancing support to the access layer using Ethernet Segment Identifier link aggregation groups (ESI-LAGs).

- Provides standard Link Aggregation Control Protocol (LACP) at the access layer.

- Mitigates the need for Spanning Tree Protocol (STP) between the core and access layers.

Choose EVPN Multihoming if you want to:

- Retain your investment in the access layer.

- Refresh your legacy collapsed core hardware.

- Scale your deployment beyond two devices in the core.

- Leverage the existing access layer without introducing any new hardware or software models.

- Provide native active-active load-balancing support for the access layer through ESI-LAG.

- Mitigate the need for STP between the core and the access layer.

- Use the standards-based EVPN-VXLAN framework in the core.

The following Juniper platforms support EVPN Multihoming:

- Core layer devices: EX4100, EX4300-48MP, EX4400, EX4650, EX9200, QFX5120, QFX5110, QFX5700, and all QFX5130 models except the QFX5130E-32CD

- Access layer devices: Third-party devices using LACP, Juniper Virtual Chassis, or standalone EX switches
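To illustrate how the core-layer list and its single exception interact, the following hypothetical Python sketch checks a core switch model against the EVPN Multihoming list. It assumes that model names are compared by family prefix (so `EX4400-48F` counts as EX4400), which simplifies real part numbering. It does not encode the narrower set of QFX5130 variants that Mist supports, and it does not check access devices, because the access layer accepts third-party LACP devices as well as Juniper switches.

```python
# Core-layer families listed above for EVPN Multihoming. Prefix matching is a
# simplifying assumption; real part numbers may need more careful parsing.
EVPN_MH_CORE_FAMILIES = (
    "EX4100", "EX4300-48MP", "EX4400", "EX4650", "EX9200",
    "QFX5120", "QFX5110", "QFX5700", "QFX5130",
)
# The one exception called out in the list.
EVPN_MH_CORE_EXCLUDED = {"QFX5130E-32CD"}


def is_evpn_mh_core(model: str) -> bool:
    """Return True if `model` is in a listed core family and is not excluded."""
    model = model.strip().upper()
    # Check the exclusion first: "QFX5130E-32CD" also starts with "QFX5130".
    if model in EVPN_MH_CORE_EXCLUDED:
        return False
    return model.startswith(EVPN_MH_CORE_FAMILIES)
```

The exclusion check must run before the prefix match; otherwise the QFX5130E-32CD would be accepted as a member of the QFX5130 family.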

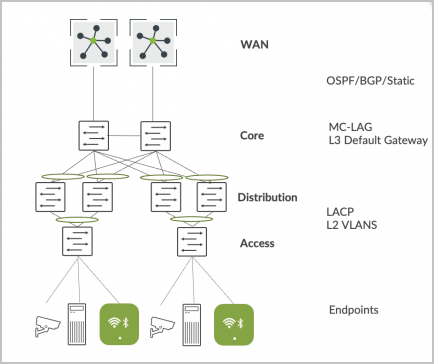

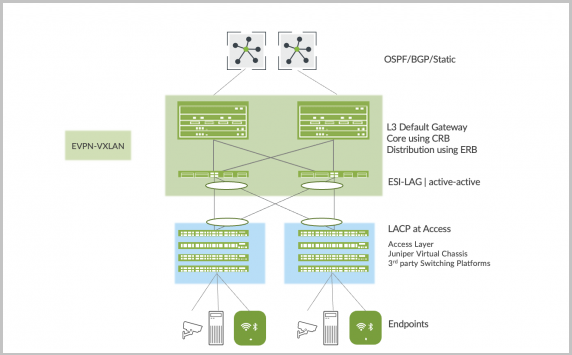

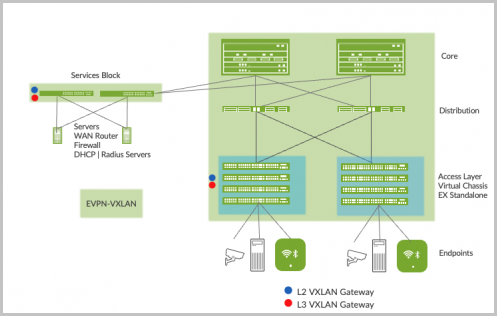

Campus Fabric Core-Distribution for Traditional 3-Stage Architecture

Enterprise networks that scale beyond the collapsed core model typically deploy a traditional three-stage architecture consisting of core, distribution, and access layers. In this model, the core layer provides Layer 2 (L2) or Layer 3 (L3) connectivity to all users, printers, APs, and other endpoints, and the core devices connect to dual WAN routers using standards-based OSPF or BGP.

This traditional deployment model faces the following challenges:

- Its proprietary core MC-LAG technology requires a homogeneous vendor approach.

- It supports only up to two core devices in a single topology.

- It lacks native traffic isolation capabilities anywhere in the network.

- It requires STP between the distribution and access layers, and potentially between the core and distribution layers, which results in suboptimal use of links.

- Moving the L3 boundary between the core and distribution layers requires careful planning.

- Extending VLANs requires deploying them across all links between access switches.

The Campus Fabric Core-Distribution architecture addresses these challenges while keeping the physical layout of a three-stage model, and provides the following advantages:

- Helps you retain your investment in the access layer. In an enterprise network, most of the Ethernet switching hardware investment goes into the access layer, where endpoints terminate. Endpoint devices, including laptops, APs, printers, and IoT devices, plug into the access layer at various Ethernet speeds, such as 100M, 1G, 2.5G, and 10G.

- Provides a standards-based EVPN-VXLAN framework.

- Supports horizontal scale at the core and distribution layers through an IP Clos architecture.

- Provides traffic isolation capabilities native to EVPN-VXLAN.

- Provides native active-active load balancing to the access layer using ESI-LAG.

- Provides standard LACP at the access layer.

- Mitigates the need for STP between all layers.

- Supports the following topology subtypes:

  - Centrally routed bridging (CRB): Targets north-south traffic patterns, with the L3 boundary or default gateway shared among all core devices.

  - Edge-routed bridging (ERB): Targets east-west traffic patterns and IP multicast, with the L3 boundary or default gateway shared among all distribution devices.
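The following Python sketch restates the two subtypes as a rough decision rule: ERB when east-west traffic or IP multicast dominates, CRB when traffic is mostly north-south. The names `Subtype`, `GATEWAY_LAYER`, and `suggest_subtype` are hypothetical, and real designs weigh more factors than these two inputs.

```python
from enum import Enum


class Subtype(Enum):
    CRB = "centrally routed bridging"
    ERB = "edge-routed bridging"


# Layer whose devices share the L3 boundary (default gateway) for each subtype.
GATEWAY_LAYER = {Subtype.CRB: "core", Subtype.ERB: "distribution"}


def suggest_subtype(mostly_east_west: bool, needs_ip_multicast: bool) -> Subtype:
    """Rough heuristic: ERB for east-west or multicast traffic, otherwise CRB."""
    if mostly_east_west or needs_ip_multicast:
        return Subtype.ERB
    return Subtype.CRB  # traffic is mostly north-south
```

For example, `GATEWAY_LAYER[suggest_subtype(False, True)]` evaluates to `"distribution"`, because a multicast-heavy design points to ERB.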

To learn about more benefits of Campus Fabric Core-Distribution deployments, see Benefits of Campus Fabric Core-Distribution.

Choose Campus Fabric Core-Distribution if you want to:

- Retain your investment in the access layer while leveraging existing LACP technology.

- Retain your investment in the core and distribution layers.

- Build an IP Clos architecture between the core and distribution layers on standards-based EVPN-VXLAN.

- Use active-active load balancing at all layers:

  - Equal-cost multipath (ECMP) between the core and distribution layers

  - ESI-LAG toward the access layer

- Mitigate the need for STP between all layers.
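To make the load-balancing split concrete, this hypothetical Python mapping pairs each link type with the mechanism listed above. It assumes that access switches attach to the distribution layer, as in the three-stage layout, and uses plain layer-name strings rather than any Mist data model.

```python
# Hypothetical mapping of Core-Distribution link types to load-balancing mechanisms.
# frozenset keys make the lookup independent of which end of the link is named first.
LOAD_BALANCING = {
    frozenset({"core", "distribution"}): "ECMP",
    frozenset({"distribution", "access"}): "ESI-LAG",
}


def mechanism_for_link(layer_a: str, layer_b: str) -> str:
    """Return the active-active mechanism for a link between two layers."""
    key = frozenset({layer_a.lower(), layer_b.lower()})
    try:
        return LOAD_BALANCING[key]
    except KeyError:
        # Assumption: core and access are not directly linked in this topology.
        raise ValueError(f"no fabric link between {layer_a} and {layer_b}") from None
```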

The following Juniper platforms support Campus Fabric Core-Distribution (CRB/ERB):

- Core layer devices: EX4650, EX9200, EX4400-48F, EX4400-24X, QFX5120, QFX5110, QFX5700, and QFX5130

- Distribution layer devices: EX4650, EX9200, EX4400-48F, EX4400-24X, QFX5120, QFX5110, QFX5700, and QFX5130

- Access layer devices: Third-party devices using LACP, Juniper Virtual Chassis, or standalone EX switches

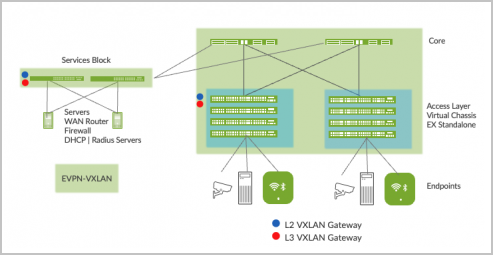

Campus Fabric IP Clos for Micro-Segmentation at Access Layer

Enterprise networks need to accommodate the growing demand for cloud-ready, scalable, and efficient networks. This demand includes a large and increasing number of IoT and mobile devices, which in turn creates a need for segmentation and security. IP Clos architectures help enterprises meet these challenges. An IP Clos solution provides increased scalability and segmentation using a standards-based EVPN-VXLAN architecture with Group Based Policy (GBP) capability.

A Campus Fabric IP Clos architecture provides the following advantages:

- Micro-segmentation at the access layer using standards-based GBP

- Integration with third-party network access control (NAC) or RADIUS deployments

- A standards-based EVPN-VXLAN framework across all layers

- Flexible scale, supporting 3-stage and 5-stage IP Clos deployments

  Note: The IP Clos architecture also supports a two-stage topology consisting of an access layer and a core layer, with the core layer acting as the services block.

- Traffic isolation capabilities native to EVPN-VXLAN

- Native active-active load balancing within the campus fabric using ECMP

- A network optimized for IP multicast

- Fast convergence between all layers using fine-tuned Bidirectional Forwarding Detection (BFD)

- An optional services block for customers who want to deploy a lean core layer

- Mitigated need for STP between all layers

To learn about more benefits of Campus Fabric IP Clos deployments, see Benefits of Campus Fabric IP Clos.

The following images represent the 3-stage and 5-stage IP Clos deployments.

The following Juniper Networks platforms support Campus Fabric IP Clos:

- Core layer devices: EX9200, EX4400-48F, EX4400-24X, EX4650, QFX5120, QFX5110, QFX5700, and QFX5130

- Distribution layer devices: EX9200, EX4400-48F, EX4400-24X, EX4650, QFX5120, QFX5110, QFX5700, and QFX5130

- Access layer devices: EX4100, EX4300-MP, and EX4400

- Services block devices: QFX5120, EX4650, EX4400-24X, EX4400, QFX5130, QFX5700, EX9200, and QFX10k
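Unlike EVPN Multihoming and Campus Fabric Core-Distribution, Campus Fabric IP Clos restricts the access layer to specific EX models, so it can help to check a design role by role. The following Python sketch is hypothetical: it copies the lists above verbatim and matches names exactly as written (for example, `EX4300-MP` and `QFX10k`), so a real tool would need to map these family names to actual part numbers.

```python
# Supported platforms per role for Campus Fabric IP Clos, copied from the list above.
IP_CLOS_PLATFORMS = {
    "core": {"EX9200", "EX4400-48F", "EX4400-24X", "EX4650",
             "QFX5120", "QFX5110", "QFX5700", "QFX5130"},
    "distribution": {"EX9200", "EX4400-48F", "EX4400-24X", "EX4650",
                     "QFX5120", "QFX5110", "QFX5700", "QFX5130"},
    "access": {"EX4100", "EX4300-MP", "EX4400"},
    "services_block": {"QFX5120", "EX4650", "EX4400-24X", "EX4400",
                       "QFX5130", "QFX5700", "EX9200", "QFX10k"},
}


def unsupported_devices(design: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Return (role, model) pairs whose model is not listed for that role."""
    problems = []
    for role, models in design.items():
        # An unknown role has no supported platforms, so every model is flagged.
        allowed = IP_CLOS_PLATFORMS.get(role, set())
        for model in models:
            if model not in allowed:
                problems.append((role, model))
    return problems
```

For example, placing an EX4650 at the access layer is flagged, while the same model is accepted in the core, distribution, or services block role.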