EVPN Multihoming Designated Forwarder Election

The designated forwarder (DF) manages broadcast, unknown unicast, and multicast (BUM) traffic to prevent loops and ensure efficient traffic distribution.

DF Election Overview

Depending on the multihoming mode of operation, traffic to a multihomed customer edge (CE) device uses one or all of the multihomed provider edge (PE) devices to reach the customer site. The DF election procedure ensures that only one PE device, the DF, forwards BUM traffic for a given Ethernet segment. This prevents forwarding loops and optimizes network performance.

The DF election process dynamically responds to various network events such as configuration changes, BGP session transitions, or link state changes. This adaptability enables the network to maintain efficient traffic forwarding without manual intervention. When a triggering event occurs, the DF election mechanism re-evaluates and potentially reassigns the DF role, maintaining optimal traffic handling across the network.

The DF election hold timer prevents the election process from starting prematurely, which gives the network time to stabilize before the election procedure begins. The timer defaults to 3 seconds. You can change it with the designated-forwarder-election-hold-time statement at the [edit <routing-instances name> protocols evpn] hierarchy level to suit your network's stability and performance needs. This timer value must be the same on all PE routers connected to the same Ethernet segment.

The default DF election procedure (as specified in RFC 7432) uses IP addresses and service carving to elect a DF for each EVPN instance (EVI). This procedure is revertive: when the elected DF fails and later recovers, it preempts the existing DF.

The NTP-based DF election mechanism makes all PEs on an Ethernet segment perform the DF election at the same moment, a predetermined Service Carving Time (SCT). This synchronization reduces the risk of loops, duplicate traffic, and traffic discarding that traditional timer-based elections can cause. It also ensures that all PEs apply DF roles consistently, which improves robustness and reliability when new PEs are added or when PEs recover from failures. Key features include skew handling to prevent duplicate traffic, coordinated recovery processes for multiple PE failures, and compatibility with existing DF election algorithms. Enhancements also allow per-EVPN-instance (per-evi) DF election and support both IPv4 and IPv6 underlay networks.

The preference-based DF election procedure uses manually configured preference values, the Don’t Preempt (DP) bit, and the router ID or loopback address to elect the DF. Starting in Junos OS Release 24.2, the preference statement includes a non-revertive option. With this option, a former DF that recovers from a failure does not preempt the existing DF, which avoids a service impact when the old DF comes back. The default behavior is revertive.

Benefits of Designated Forwarder (DF) Election

-

Prevents forwarding loops—The DF election selects a single PE device to forward BUM traffic for each Ethernet segment (or for each EVI on that segment). Because only one PE forwards that traffic toward the multihomed CE, the risk of forwarding loops drops significantly and network stability improves.

-

Adapts dynamically to network changes—The DF election process responds dynamically to network changes, such as new interface configurations or recovery from link failures, maintaining network stability and operational efficiency.

-

Ensures efficient failover—The presence of a backup DF (BDF), which remains in a blocking state until it is needed to take over, ensures smooth failover and continuous network operation with minimal traffic disruption, thereby improving overall network resilience.

-

Reduces route processing overhead—Utilizing the ES-Import extended community for route filtering ensures that only relevant routes are imported by PEs connected to the same Ethernet segment, which reduces unnecessary route processing and maintains efficient route management.

-

Maintains network consistency—The mass withdrawal mechanism, triggered by the withdrawal of Ethernet autodiscovery routes after a link failure, invalidates stale MAC addresses on remote PEs, ensuring that the network state remains consistent and preventing issues caused by outdated MAC address information.

-

Distributes traffic efficiently—The DF election process balances the load across multiple PEs so that no single PE is overwhelmed with traffic. This optimizes network performance and resource utilization.

DF Election Roles

The DF election process assigns each PE router one of the following forwarding roles:

-

Designated forwarder (DF)—The PE router that announces the MAC advertisement route for the customer site's MAC address. The DF is the primary PE router that forwards BUM traffic to the multihomed CE device.

-

Backup designated forwarder (BDF)—A PE router that advertises the Ethernet autodiscovery route for the same ESI as the DF and serves as the backup path if the DF fails. A BDF is also called a non-DF router.

When the DF election process elects the local PE router as the BDF, the router puts the multihomed interface that connects to the customer site into a blocking state for active-standby mode. The interface stays blocked until the BDF is elected as the DF for the Ethernet segment.

-

Non-designated forwarder (non-DF)—Any PE router that is not selected as the DF. The BDF is also considered a non-DF.

DF Election Trigger

In general, the following conditions trigger the DF election process:

-

When you configure an interface with a nonzero ESI, or when the PE router moves from an isolated-from-the-core state (no BGP session) to a connected-to-the-core state (established BGP session). These conditions also start the hold timer. By default, the PE keeps the interface in a blocking state until the router is elected as the DF.

-

When a PE router that has already completed a DF election receives a new Ethernet segment route or detects the withdrawal of an existing one. Neither of these events starts the hold timer.

-

When an interface of a non-DF PE router recovers from a link failure. In this case, the PE router does not know the hold time imposed by other PE routers, so the recovered PE router does not start a hold timer.
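The following Python sketch summarizes which events in the list above start the hold timer. It is a simplified model, not Junos code. The `Trigger` enum and the `election_delay` function are hypothetical names, and treating the no-hold-timer cases as a zero delay is an assumption made for illustration.

```python
from enum import Enum, auto

class Trigger(Enum):
    """Events that start a DF election (hypothetical names)."""
    NONZERO_ESI_CONFIGURED = auto()  # interface configured with a nonzero ESI
    CORE_CONNECTED = auto()          # PE moves from core-isolated to BGP-connected
    ES_ROUTE_ADDED = auto()          # new Ethernet segment route after an election
    ES_ROUTE_WITHDRAWN = auto()      # existing Ethernet segment route withdrawn
    NON_DF_LINK_RECOVERED = auto()   # non-DF PE interface recovers from a link failure

# Only these triggers start the DF election hold timer.
HOLD_TIMER_TRIGGERS = {Trigger.NONZERO_ESI_CONFIGURED, Trigger.CORE_CONNECTED}

def election_delay(trigger: Trigger, hold_time_s: float = 3.0) -> float:
    """Seconds the PE waits before electing (0.0 models "no hold timer")."""
    return hold_time_s if trigger in HOLD_TIMER_TRIGGERS else 0.0

print(election_delay(Trigger.CORE_CONNECTED))         # 3.0
print(election_delay(Trigger.NON_DF_LINK_RECOVERED))  # 0.0
```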

DF Election Procedure (RFC 7432)

Service carving refers to the default procedure for DF election at the granularity of the ESI and EVI. With service carving, it is possible to elect multiple DFs per Ethernet segment (one per EVI) to perform load-balancing of multi-destination traffic for a given Ethernet segment. The load-balancing procedures carve up the EVI space among the PE nodes evenly, in such a way that every PE is the DF for a disjoint set of EVIs.

The service carving procedure is as follows:

When a PE router discovers the ESI of the attached Ethernet segment, it advertises an autodiscovery route per Ethernet segment with the associated ES-import extended community attribute.

The PE router starts a hold timer (default value of 3 seconds) in order to receive the autodiscovery routes from other PE nodes connected to the same Ethernet segment. This timer value must be the same across all the PE routers connected to the same Ethernet segment.

You can override the default hold timer with the designated-forwarder-election-hold-time configuration statement at the [edit <routing-instances name> protocols evpn] hierarchy level.

When the hold timer expires, each PE router builds a list of the IP addresses of all PE nodes connected to the Ethernet segment, including itself, sorted in increasing numeric order. Each PE router is assigned an ordinal that gives its position in this list, starting with 0 for the PE with the numerically lowest IP address. The DF for a given EVI is the PE whose ordinal equals the VLAN ID modulo the number of PEs, which makes DF selection deterministic and predictable. For example, if the VLAN ID is 10 and there are three PEs, 10 mod 3 = 1, so the DF is the PE with ordinal 1, that is, the PE with the second-lowest IP address.

The PE router elected as the DF for a given EVI unblocks multi-destination traffic for the Ethernet tags associated with that EVI, in the egress direction toward the Ethernet segment. All non-DF PE routers continue to drop multi-destination traffic for those EVIs in the egress direction toward the Ethernet segment.
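The modulo rule described above fits in a few lines of Python. This is an illustrative model of RFC 7432 service carving that assumes all PE addresses on the segment belong to the same address family. `carve` is a hypothetical helper name, not a Junos API.

```python
import ipaddress

def carve(pe_addresses: list[str], vlan_id: int) -> str:
    """Return the IP address of the DF for vlan_id (RFC 7432 modulo carving)."""
    # Order PEs by numeric IP address; each PE's index is its ordinal (0-based).
    ordered = sorted(pe_addresses, key=ipaddress.ip_address)
    # The DF is the PE whose ordinal equals VLAN ID mod number of PEs.
    return ordered[vlan_id % len(ordered)]

# Example: three PEs on one Ethernet segment (documentation addresses).
pes = ["192.0.2.3", "192.0.2.1", "192.0.2.2"]
print(carve(pes, 10))  # 10 mod 3 = 1 -> 192.0.2.2
print({vlan: carve(pes, vlan) for vlan in range(10, 16)})
```

The code sorts with `ipaddress.ip_address` instead of sorting the address strings. A string sort would put 10.0.0.10 before 10.0.0.9, which produces different ordinals from the numeric order the procedure requires.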

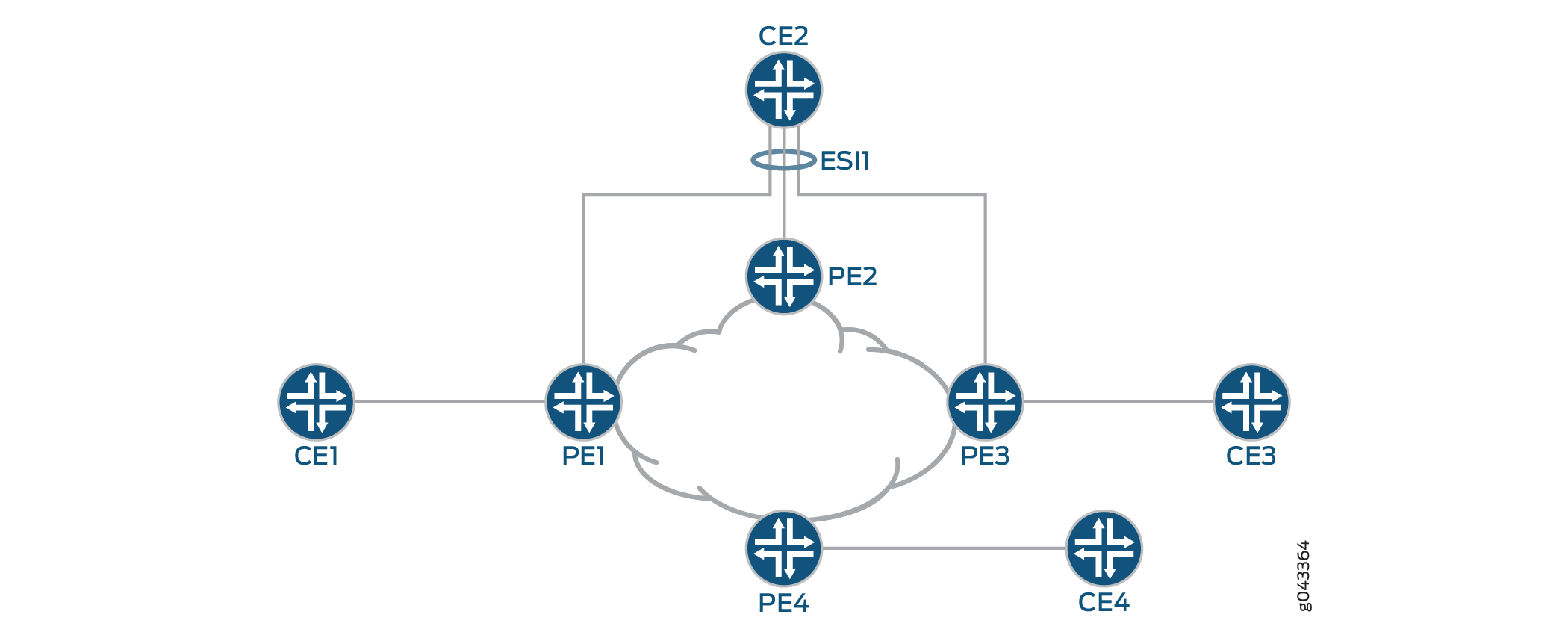

In Figure 1, Routers PE1, PE2, and PE3 perform a DF election for active-active multihoming. From the range of VLANs configured on ESI1, each router can become the DF for some VLANs and a non-DF for the others. Each DF forwards BUM traffic on ESI1 for the VLANs it serves, and the non-DF PE routers block BUM traffic for those VLANs on the Ethernet segment.

NTP-Based DF Election


Overview

The NTP-based DF election mechanism uses NTP or PTP to synchronize the DF election on all peering PE devices within an Ethernet segment. When a new PE device joins the segment or a previously failed PE recovers, it announces a "Service Carving Time" (SCT) to its peers. The SCT is an absolute timestamp at which all PEs run the DF election process simultaneously, so the DF role is assigned consistently across the network. This precise synchronization reduces the risk of traffic loops, duplicates, and discarding that asynchronous DF elections can cause.

The NTP-based DF election introduces additional enhancements to improve network stability. Key features include skew handling to prevent duplicate traffic, coordinated recovery processes for multiple PE failures, and compatibility with existing DF election algorithms.

To configure the NTP-based DF election, enable the feature with the df-election-ntp statement under the global protocols evpn configuration. Then use the designated-forwarder-election-hold-time configuration statement at the [edit <routing-instances name> protocols evpn] hierarchy level to set the election delay in seconds. The range is 1 through 1800 seconds, and the default is 3 seconds. For monitoring, the show route table extensive command displays the SCT extended community on Type 4 routes, including columns and fields that indicate the NTP-based DF election capability and SCT timestamps. These statements and commands support precise configuration and monitoring, so your network maintains synchronized and reliable DF elections.

Benefits of NTP-Based DF Election

-

Minimizes the risk of traffic loops and discarding by ensuring that all PE devices apply the DF election results simultaneously.

-

Enhances network stability through precise timing coordination, reducing the chances of duplicate traffic during the DF election process.

-

Supports seamless integration with existing DF election algorithms and both IPv4 and IPv6 underlay networks, ensuring compatibility and flexibility with current network setups.

-

Improves recovery efficiency by coordinating the DF election process during simultaneous PE recoveries, preventing redundant election executions and maintaining a single synchronized event.

-

Allows for per EVPN instance DF election, enhancing granularity and control over DF role assignments, which is particularly beneficial for VLAN-based services.

Integration with Existing Algorithms

The NTP-based DF election mechanism is designed to integrate seamlessly with existing DF election algorithms. It supports both MOD-based and preference-based algorithms, providing flexibility in how DF roles are assigned within the network. This compatibility ensures that the NTP-based mechanism can be adopted without requiring significant changes to your current DF election configurations. Additionally, the mechanism supports both IPv4 and IPv6 underlay networks, enabling you to deploy the feature across diverse network environments.

For VLAN-based services, the NTP-based DF election supports per EVPN instance (per-evi) DF election. This feature ensures that DF role switchovers occur only if all access interfaces under the EVPN instance are down, providing finer granularity and control over DF assignments. This granularity is particularly beneficial in scenarios where VLAN-based services require precise control over traffic forwarding decisions. Overall, the NTP-based DF election mechanism enhances the stability and reliability of your EVPN segments, ensuring consistent and synchronized DF elections across your network.

Skew Handling and Concurrent Recoveries

The NTP-based DF election includes a skew handling mechanism to prevent duplicate traffic. This mechanism applies a slight offset (default -10 milliseconds) so that previously active PEs perform service carving just before the newly inserted PE. The previously active PEs give up any DF roles they lose before the new PE takes them over, so two PEs never forward the same BUM traffic at the same time. In addition, when multiple PEs recover simultaneously, the DF election process is triggered only once, based on the largest SCT value received. This single coordinated election prevents redundant DF elections and keeps the network consistent.

If any PE does not support the NTP-based DF election, the system reverts to the default timer-based DF election as specified in RFC 7432. This fallback mechanism ensures compatibility and continuity across the network.
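The following Python sketch models the timing rules in this section. It assumes that SCTs are absolute times in seconds (floats) and that the peer list contains every PE on the Ethernet segment, including the local PE. `Peer` and `carving_time` are hypothetical names, and the sketch does not model how Junos encodes the SCT extended community. A return value of `None` stands for falling back to the RFC 7432 timer-based election.

```python
from dataclasses import dataclass
from typing import Optional

DEFAULT_SKEW_S = -0.010  # default skew of -10 milliseconds

@dataclass
class Peer:
    name: str
    ntp_capable: bool                      # supports NTP-based DF election
    announced_sct: Optional[float] = None  # SCT this PE announced, if any

def carving_time(peers: list[Peer], previously_active: bool,
                 skew_s: float = DEFAULT_SKEW_S) -> Optional[float]:
    """Return when the local PE runs service carving, or None to fall back."""
    if not all(p.ntp_capable for p in peers):
        return None  # a PE lacks support: use the RFC 7432 timer-based election
    scts = [p.announced_sct for p in peers if p.announced_sct is not None]
    if not scts:
        raise ValueError("no Service Carving Time has been announced")
    sct = max(scts)  # concurrent recoveries collapse into one election
    # Previously active PEs carve slightly ahead of the newly inserted PE.
    return sct + skew_s if previously_active else sct

segment = [Peer("PE1", True), Peer("PE2", True, 1000.0), Peer("PE3", True, 1000.5)]
print(carving_time(segment, previously_active=False))  # 1000.5
```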

Preference-Based DF Election


Overview

The DF election based on RFC 7432 fails to meet the operational requirements of some service providers. To address this issue, we introduced the preference-based DF election feature that enables control of the DF election based on administrative preference values set on interfaces.

The preference-based DF election feature offers network operators the flexibility to manage DF roles with preference values configured on interfaces. In scenarios where the primary link must handle most of the traffic, this strategy optimizes both throughput and resource allocation.

Starting in Junos OS Release 24.2, we provide more configuration options to customize the preference-based DF election process:

-

The preference non-revertive option improves network stability by ensuring that a previously designated DF does not preempt the current DF when it comes back online after a failure.

-

The preference least option and the evpn designated-forwarder-preference-highest and evpn designated-forwarder-preference-least statements enable you to select whether the election process uses the highest or lowest preference values at the ESI and EVI levels.

See Feature Explorer for a complete list of the products that support these features.

Benefits of Preference-Based Designated Forwarder (DF) Election

-

Optimized traffic flow—Configuring the DF based on interface attributes like bandwidth ensures optimal link selection. This results in more efficient traffic distribution and better use of network resources.

-

Enhanced operational control—Manually configuring preference values gives you greater control over the DF election process and ensures that the most suitable link is used.

-

Enhanced network stability—The preference non-revertive option prevents a returning DF from preempting the current DF. This eliminates unnecessary disruptions and ensures continuous service stability.

-

Granular load balancing control—You can configure DF election preferences and select the DF based on the highest or lowest preference values at the ESI and EVI levels. This lets you distribute traffic effectively across multiple links and improves network performance.

-

Maintenance flexibility—You can adjust preference values to switch the DF role during maintenance activities, facilitating operational flexibility and reducing the impact of maintenance on service continuity.

Preference-Based DF Election Procedure

The preference-based DF election uses manually configured interface preference values when electing a DF. Manual configuration of preference values gives you enhanced control over the DF election process. You can set specific preferences on interfaces to influence which node acts as the DF.

The preference-based DF election proceeds as follows:

Configure the DF election type preference value under an ESI.

The multihoming PE devices advertise the configured preference value and DP bit using the DF election extended community in the EVPN Type 4 routes.

After receiving the EVPN Type 4 route, the PE devices build the list of candidate DF devices, in the order of the preference value, DP bit, and IP address.

When the DF election hold timer expires, the PE devices elect the DF.

By default, the DF election is based on the highest preference value. You can configure the preference-based DF election process to choose the DF based on the lowest preference value instead, using the preference least or evpn designated-forwarder-preference-least statements.

If multiple DF candidates have the same preference value, the PE device selects the DF based on the DP bit. If those candidates also have the same DP bit value, the process elects the candidate with the lowest IP address.

Note: The evpn designated-forwarder-preference-highest or designated-forwarder-preference-least configuration should be the same on the competing multihoming EVIs. Otherwise, the election process might elect two DFs, which can cause traffic loss or traffic loops.

Also, when you configure either of these options at the [edit protocols evpn] hierarchy level, the option applies to EVPN multihoming only in the default switch instance, not globally to all EVIs defined on the device. These settings are available at the [edit protocols evpn] hierarchy level only on platforms that support a default switch instance. To enable these options for EVPN routing instances other than a default switch instance, you must configure them in each routing instance at the [edit routing-instances name protocols evpn] hierarchy level.

DF Election Algorithm Mismatch

When there is a mismatch between a locally configured DF election algorithm and a remote PE device’s DF election algorithm, then all the PE devices should fall back to the default DF election as specified in RFC 7432.
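The fallback rule comes down to a single comparison. In this Python sketch, the algorithm names `"mod"` and `"preference"` are hypothetical labels, not Junos configuration keywords, and `effective_algorithm` is an illustrative helper.

```python
def effective_algorithm(local: str, remote: list[str]) -> str:
    """Return the DF election algorithm the PEs on the segment should use."""
    # Any disagreement among PEs means falling back to RFC 7432 modulo carving.
    return local if all(alg == local for alg in remote) else "mod"

print(effective_algorithm("preference", ["preference", "mod"]))  # mod
```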

DF Election Algorithm Migration

Migration from the traditional modulo-based DF election to the new preference-based method requires careful planning. Typically, this involves a maintenance window where interfaces with the same ESI on non-DF PEs are brought down. You then configure the new DF election algorithm on the DF PE before applying it to the other multihoming PEs. This structured approach ensures a smooth transition with minimal service impact.

Perform the migration using the following steps:

Bring down all the interfaces with the same ESI on the non-DF PE devices.

Configure the current DF PE with the preference-based DF election options.

Configure the preference-based DF election options on the non-DF PE devices.

Bring up all the interfaces on the non-DF PE devices.

After reconfiguring and bringing the interfaces back online, verify that the DF election process is functioning as intended. Monitor the network to ensure that the designated forwarders are correctly elected based on the configured preferences. This step is crucial to confirm that the new settings are correctly applied and that the network operates smoothly with the enhanced DF election mechanism.

Changing Preference for Maintenance

The ability to change preference values during maintenance activities enhances operational flexibility. You can switch DF roles as needed by simply changing the configured preference value on a selected device.

To change the DF for a given ESI when the election uses the highest preference (the default), perform one of the following steps:

Set the preference value on a current non-DF device higher than that of the current DF.

Set the preference value on the current DF device lower than that of a non-DF device.

Changing the preference value for an ESI can cause brief traffic loss while BGP propagates the updated route that carries the new preference value.

Non-Revertive Mode

Beginning with Junos OS Release 24.2R1, you can enable the non-revertive option at the [edit interfaces name esi df-election-type preference] hierarchy level. This option helps keep your network stable across link failures and recoveries. Because you configure it per ESI, it gives you granular control and ensures that each segment follows the desired operational behavior.

The non-revertive option ensures that once a DF is elected, the previously designated DF does not preempt it when that device comes back online after a failure. This non-revertive mode is key to maintaining a stable network environment and avoiding unnecessary service disruptions.

See Feature Explorer for a complete list of the products that support this feature.

The non-revertive preference-based DF election option doesn’t work if:

-

You configure the no-core-isolation option under the [edit protocols evpn] hierarchy, and you reboot the device, run the restart routing command, or run the clear bgp neighbor command.

-

You have the graceful restart (GR) feature enabled (set routing-options graceful-restart), and the device goes through a graceful restart.

Load Balancing with Preference-Based DF Election

The preference-based DF election enables load balancing by selecting DFs based on the highest or lowest preference values. By default, the DF is the candidate with the highest preference value. You can configure DF election type preference least on the interface (ESI level) to choose the DF based on the lowest preference value.

    [edit interfaces interface-name]
    esi {
        XX:XX:XX:XX:XX:XX:XX:XX:XX:XX;
        df-election-type {
            preference {
                value value;
                least;
            }
        }
    }

You can also configure the evpn designated-forwarder-preference-highest or designated-forwarder-preference-least statement at the EVI level.

    [edit routing-instances]
    instance-name {
        instance-type evpn;
        protocols {
            evpn {
                (designated-forwarder-preference-highest | designated-forwarder-preference-least);
            }
        }
    }

When you use both, the EVI-level configurations override the ESI-level configurations, as shown in Table 1 below.

You can use these configurations to manage load balancing in different scenarios as in the following examples.

Single ESI Under Multiple EVIs

Configure load balancing for a single ESI under multiple EVIs using various combinations of the following statements:

-

DF election type preference least at the ESI level.

-

evpn designated-forwarder-preference-least or designated-forwarder-preference-highest at the EVI level.

| Case No. | preference least (ESI level) | designated-forwarder-preference-least (EVI-1) | designated-forwarder-preference-highest (EVI-2) | Result on EVI-1 | Result on EVI-2 |
|---|---|---|---|---|---|
| 1 | No | No | No | Highest | Highest |
| 2 | No | Yes | Yes (optional command) | Lowest | Highest |
| 3 | Yes | No | No | Lowest | Lowest |
| 4 | Yes | No | Yes | Lowest | Highest |
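The override rule in Table 1 can be written as a small resolution function. This Python sketch models the documented outcomes and is not Junos logic. `df_selection` and the `"least"`/`"highest"` strings are hypothetical, and `None` means that neither EVI-level statement is configured.

```python
from typing import Optional

def df_selection(esi_least: bool, evi_setting: Optional[str]) -> str:
    """Return "Highest" or "Lowest" for one ESI within one EVI.

    esi_least: preference least is configured on the ESI.
    evi_setting: "least", "highest", or None if no EVI-level statement is set.
    """
    if evi_setting not in (None, "least", "highest"):
        raise ValueError(f"unexpected EVI setting: {evi_setting!r}")
    if evi_setting is not None:
        # An EVI-level statement overrides the ESI-level setting.
        return "Lowest" if evi_setting == "least" else "Highest"
    # Otherwise the ESI setting applies; highest preference is the default.
    return "Lowest" if esi_least else "Highest"

# Case 4 from Table 1: least on the ESI, highest configured only on EVI-2.
print(df_selection(True, None), df_selection(True, "highest"))  # Lowest Highest
```

When no EVI-level statement is set, each ESI resolves from its own `esi_least` value alone, which matches the multiple-ESI scenario that follows.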

Multiple ESIs Under a Single EVI

When you configure load balancing for multiple ESIs under a single EVI, use the ESI default setting to select the highest preference, or configure preference least on the ESI to select the lowest preference.

Do not configure the evpn designated-forwarder-preference-least or designated-forwarder-preference-highest statements at the EVI level, because they override the ESI-level configurations.

| preference least on ESI-1 | preference least on ESI-2 | Result for ESI-1 on EVI-1 | Result for ESI-2 on EVI-1 |
|---|---|---|---|
| No | No | Highest | Highest |
| Yes | No | Lowest | Highest |

DF Verification

The following show commands offer detailed insights into DF election preferences and statuses, aiding in effective troubleshooting and monitoring:

These commands display detailed information about the EVPN instance, including DF election preferences and the current DF status. The ESI info command provides insights into the current DF and backup forwarders along with their preference values and non-revertive status.

By mastering these commands, you can effectively implement and manage the DF Election feature, ensuring a robust and efficient network environment.

DF Election for Virtual Switch

The virtual switch permits multiple bridge domains in one EVPN instance (EVI). It also accommodates both trunk and access ports. You can configure flexible Ethernet services on the port, enabling different VLANs on a single port to become part of different EVIs.

See the following for more information:

The DF election for virtual switch depends on the following:

-

Port mode—Sub-interface, trunk interface, and access port

-

EVI mode—Virtual switch with EVPN and EVPN-EVI

In a virtual switch, multiple Ethernet tags can be associated with a single EVI. In that case, the DF election uses the numerically lowest Ethernet tag value in the EVI.
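The following Python sketch applies this rule together with the modulo carving described earlier for a virtual-switch EVI. RFC 7432 likewise uses the numerically lowest VLAN of a VLAN-aware bundle in the modulo function. `virtual_switch_df` is a hypothetical helper, and the sketch assumes all PE addresses use the same address family.

```python
import ipaddress

def virtual_switch_df(pe_addresses: list[str], ethernet_tags: list[int]) -> str:
    """Return the DF address for an EVI that carries several Ethernet tags."""
    ordered = sorted(pe_addresses, key=ipaddress.ip_address)  # ordinals 0..N-1
    lowest_tag = min(ethernet_tags)  # only the lowest tag drives the election
    return ordered[lowest_tag % len(ordered)]

# 101 mod 3 = 2 -> the PE with ordinal 2 (192.0.2.3)
print(virtual_switch_df(["192.0.2.1", "192.0.2.2", "192.0.2.3"], [200, 101, 150]))
```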

Handling Failover

A failover can occur when:

-

The DF PE router loses its DF role.

-

There is a link or port failure on the DF PE router.

When a PE router loses the DF role, it puts its customer-facing interface into the blocking state.

A link or port failure triggers a DF election process, and the BDF PE router is elected as the DF. At that point, unicast and BUM traffic are affected as follows:

Unicast Traffic

-

CE to Core—The CE device continues to flood traffic on all the links. The previous BDF PE router changes the EVPN multihomed status of the interface from the blocking state to the forwarding state, and traffic is learned and forwarded through this PE router.

-

Core to CE—The failed DF PE router withdraws the Ethernet autodiscovery route per Ethernet segment and the locally learned MAC routes, which causes the remote PE routers to redirect traffic to the BDF.

The BDF PE router can take some time to transition to the DF role. Until it does, its multihomed interface stays in the blocking state, and traffic is lost.

BUM Traffic

-

CE to Core—All the traffic is routed toward the BDF.

-

Core to CE—The remote PE routers flood the BUM traffic in the core.

Constraints and Limitations for QFX5K Series Switches

QFX5K Series switches support only port-based DF election, not the per-EVI, per-ESI DF election procedure. You can use the following configuration options to configure port-based DF election for either MOD-based or preference-based DF election.

Use the df-election-granularity per-esi option when configuring a MOD-based election.

set interfaces interface-name esi df-election-granularity per-esi

When you configure a single-active, port-based MOD DF election, add the lacp-oos-ndf option.

set interfaces interface-name esi df-election-granularity per-esi lacp-oos-ndf

Use the df-election-type preference option to configure a preference-based DF election.

set interfaces interface-name esi df-election-type preference